This week I focused predominantly on setting up a working (locally hosted) webapp with an integrated backend and database, along with a local server that can receive and store drum set configurations. I explain the functionality of both below.

The webapp (image below):

The webapp allows the user to completely customize their drum set. First, they can upload any sound files they want (.mp3, .mp4, or .wav). Each upload is recorded in the MySQL database and the file itself is written to an upload directory on the server. The database holds metadata about the sound files: which user they belong to, the file name, the name the user gave the file, and the static URL used to fetch the actual sound data from the upload directory. The user can then construct a custom drum set either by searching for sound files they’ve uploaded and dragging them onto the drum ring that should play that sound, or by searching for a saved drum set. Once they have a drum set they like, they can save it so that they can quickly switch back to sets they previously built, or click the “Use this drum set” button, which sends the current drum set configuration to the locally running user server. The webapp supports quick searching of sounds and saved drum sets, and it auto-populates the drum set display when the user chooses a specific saved drum set. The app currently runs on localhost:5000 but will eventually be deployed and hosted remotely.
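As a rough illustration of the data involved (not the actual DrumLite schema or request format), the sketch below models the metadata fields listed above and builds the kind of configuration the “Use this drum set” button might send. The names `SoundFile`, `build_drum_set_payload`, and every field and key name are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class SoundFile:
    """Hypothetical mirror of one row of sound-file metadata."""
    user_id: int        # which user the sound belongs to
    file_name: str      # name of the file in the upload directory
    display_name: str   # name the user gave the sound
    static_url: str     # URL used to fetch the actual audio data


def build_drum_set_payload(set_name: str, rings: List[Optional[SoundFile]]) -> dict:
    """Build a JSON-serializable drum set configuration.

    `rings` is ordered by drum ring index; None marks a ring with no sound.
    """
    drums = [
        {"drum_index": i, "display_name": s.display_name, "url": s.static_url}
        for i, s in enumerate(rings)
        if s is not None
    ]
    return {"name": set_name, "drums": drums}
```

Keeping only the static URL in the payload, rather than the audio bytes, would let the receiving server decide when to fetch each file.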

The local server:

Though currently very basic, the local server is configured to run on port 8000 of the user's machine. This is important because it defines where the webapp should send drum set configurations. The endpoint is CORS-enabled so that the browser allows the webapp, which is served from a different origin, to send data to it. Once a drum set configuration is received, the endpoint saves each sound in the configuration under a new file name of the form “drum_x.{file extension}”, where x is the index of the drum that the sound corresponds to and {file extension} is .mp3, .mp4, or .wav. These files are downloaded to a local directory called sounds, which is created if it doesn’t already exist. This allows for very simple playback using a library like pygame.
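The renaming and storage step can be sketched as follows. This is a minimal illustration under stated assumptions, not the server's actual code: the function names, the `ALLOWED_EXTENSIONS` set, and the choice to pass the sound in as raw bytes are all hypothetical, and the HTTP endpoint itself is left out.

```python
from pathlib import Path

# Extensions the webapp accepts, per the description above.
ALLOWED_EXTENSIONS = {".mp3", ".mp4", ".wav"}


def local_sound_path(drum_index: int, source_name: str, sounds_dir: str = "sounds") -> Path:
    """Return the path sounds_dir/drum_<index><ext> for an incoming sound."""
    ext = Path(source_name).suffix.lower()
    if ext not in ALLOWED_EXTENSIONS:
        raise ValueError(f"unsupported file extension: {ext!r}")
    return Path(sounds_dir) / f"drum_{drum_index}{ext}"


def save_drum_sound(drum_index: int, source_name: str, data: bytes,
                    sounds_dir: str = "sounds") -> Path:
    """Write the sound bytes to disk, creating the sounds directory if needed."""
    target = local_sound_path(drum_index, source_name, sounds_dir)
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(data)
    return target
```

Because the file name depends only on the drum index and extension, playback code can locate a drum's sound without knowing what the user originally called it.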

In addition to these two components, I worked on the design presentation slides and helped develop some new use case requirements, on which we based our design requirements. Namely, I came up with the requirement that the machined drumsticks weigh less than 200g, because, speaking as a drummer myself, increasing the weight much above that of a standard drumstick (~113g) would make playing DrumLite feel awkward and heavy. Furthermore, we developed the use case requirement that 95% of the time a drum ring is hit, the correct sound plays. This comes down to being confident that we detected the correct drum from the video footage, which can be difficult. To address this, we came up with the design requirement of using an exponential weighting over all the predicted outcomes for the drumstick's location. By applying a higher weight to the more recent frames of video, we think we can reach a higher level of confidence about which drum was actually hit, and subsequently play the correct sound. Weighting recent observations more heavily is a common approach for problems where multiple frames need to be analyzed accurately in a short amount of time.
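One way the exponential weighting could look is sketched below. It assumes each frame yields a single predicted drum index and that a frame `k` steps older than the newest one gets weight `decay ** k`; the function name, the `decay` parameter, and the normalized confidence score are illustrative choices, not a settled design.

```python
from collections import defaultdict
from typing import Hashable, Optional, Sequence, Tuple


def weighted_drum_vote(predictions: Sequence[Hashable],
                       decay: float = 0.5) -> Tuple[Optional[Hashable], float]:
    """Pick the most likely drum from per-frame predictions (oldest first).

    Returns (best_drum, confidence), where confidence is the winner's share
    of the total weight. Returns (None, 0.0) for an empty input.
    """
    if not 0.0 < decay <= 1.0:
        raise ValueError("decay must be in (0, 1]")
    scores = defaultdict(float)
    newest = len(predictions) - 1
    for i, drum in enumerate(predictions):
        age = newest - i              # 0 for the most recent frame
        scores[drum] += decay ** age  # older frames count for less
    if not scores:
        return None, 0.0
    best = max(scores, key=scores.get)
    return best, scores[best] / sum(scores.values())
```

With `decay=0.5`, the predictions `[3, 3, 3, 1]` resolve to drum 1 because the newest frame outweighs the three older ones combined, while `decay=0.9` resolves to drum 3. Tuning `decay` therefore trades responsiveness against robustness to a single bad frame.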

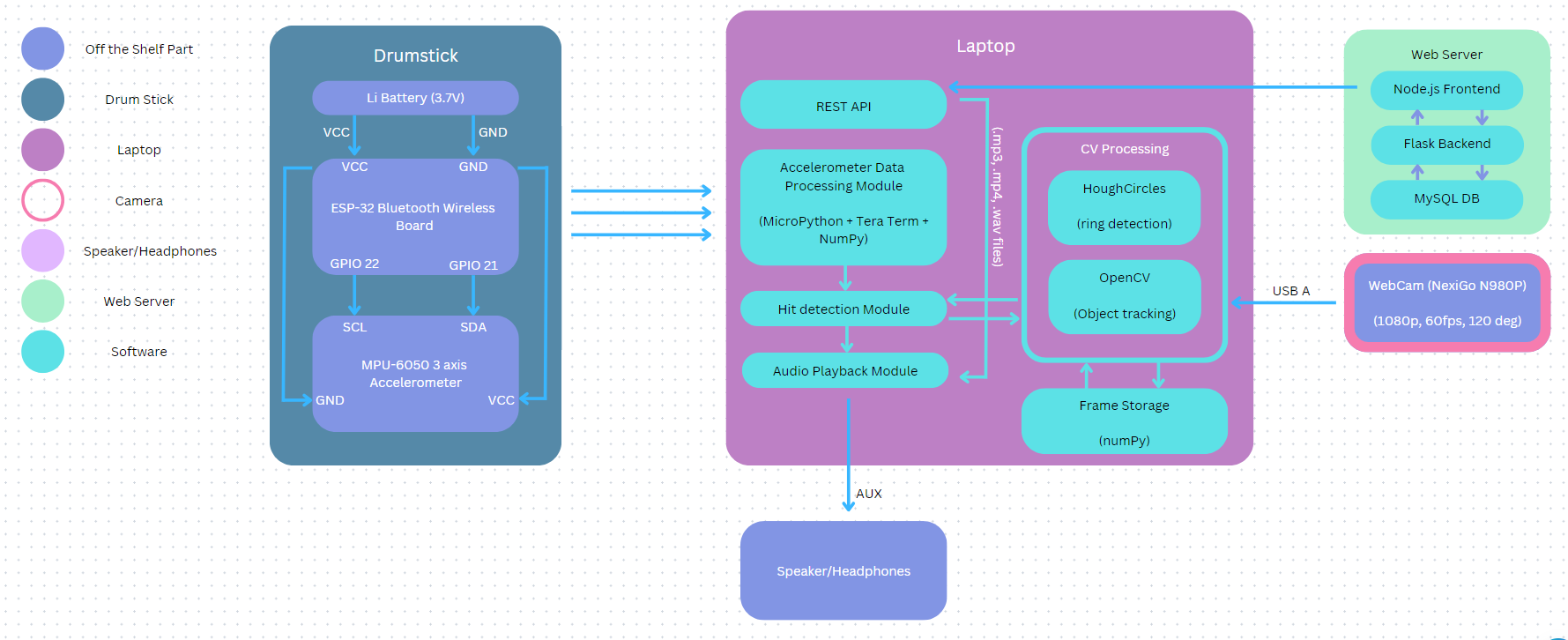

Lastly, I worked on a new diagram (also shown below) of the basic workflow. It serves mainly as a graphic for the design presentation, but it does convey a good amount of information about how data flows while using DrumLite. My contributions to the project are coming along well and are on schedule. The plan was to get the webapp and local server working quickly so that we’d have a code base to integrate with once our parts are delivered and we can actually start building the code needed for image and accelerometer data processing.

In the next week, my main focus will be creating a way to trigger a sequence of locally stored sounds within the local code base. I want to build a preliminary interface where a user inputs a sequence of drum IDs and delays, and the corresponding sounds are played in order. This interface will be useful because, once the accelerometer data processing and computer vision modules are in a working state, we’d extract drum IDs from the video feed and pass them into the sound playing interface in the same way as in the simulation described above. The times at which to play the sounds (represented by delays in the simulation) would come from the accelerometer readings. Additionally, while it may be a reach task, I’d like to find a way to use the object detection script Belle wrote to trigger the sound playing module mentioned above.
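A first pass at that interface might look something like the sketch below. It is only an outline under assumptions: `play_sequence` and its parameters are hypothetical names, delays are taken to be seconds to wait before each hit, and the actual audio call is injected as `play_sound` so the sequencing logic can be tested without any sound hardware.

```python
import time
from typing import Callable, Iterable, Tuple


def play_sequence(steps: Iterable[Tuple[int, float]],
                  play_sound: Callable[[int], None],
                  sleep: Callable[[float], None] = time.sleep) -> None:
    """Play drums in order.

    Each step is (drum_id, delay_seconds): wait for the delay, then trigger
    the sound for that drum via the supplied play_sound callback.
    """
    for drum_id, delay in steps:
        if delay < 0:
            raise ValueError("delay must be non-negative")
        sleep(delay)
        play_sound(drum_id)


if __name__ == "__main__":
    # Stand-in callback; a real one could look up the stored file for the
    # drum and play it with an audio library such as pygame.
    play_sequence([(0, 0.0), (1, 0.5), (0, 0.25)],
                  play_sound=lambda drum_id: print(f"hit drum {drum_id}"))
```

Later, the same function could be fed drum IDs from the vision module and timings from the accelerometer data instead of typed-in values.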