This week, I gave our final presentation and started finalizing our project for the demo. We finally started building our mechanical parts and decided on the placement of each component, so I helped laser-cut our acrylic platform and determine where the hardware components will go on our wood structure. The mounting brackets for the servo motors also arrived, so we figured out how to mount them to our acrylic platform. Based on the measurements of the mechanical structure, I edited parts of the hardware script so that its values match the placement of the camera and the ultrasonic sensor. I plan to start testing as soon as the mechanical build is finished, with a variety of real waste that we will use during the demo. In addition, I tried to fix the port issue that occurs when running the script in a loop, but for lack of time I was unable to make much progress on it. Since our mechanical build is progressing faster than we expected, I expect to have much more time to finalize the hardware scripts and, hopefully, fix the port issue before the demo so that we can run and detect items continuously.

Ashley’s Status Report for 11/30

This week, I spent most of my time preparing for the final presentation and working more on the hardware components. First, we switched to a USB camera, because it has better quality and is much easier to use with our Python script. I tested it and made sure that the new camera works well and does not disrupt our existing system. I then worked on fixing the current script to allow continuous I/O between the Arduino and the Jetson, because for the final demo we ideally want to keep processing items instead of restarting the Python script every time we place an item. I was finally able to identify which part of the code causes the bug in the continuous loop: the point where the Jetson writes data to the serial port. I spent a good amount of time rewriting some of the logic from scratch and testing it at each step, but I wasn’t able to figure out exactly how to fix it. Since I have identified the issue, hopefully I can make it work by next week.

Currently, I am on schedule, as I am finishing my preparation for the presentation and getting ready to build our mechanical parts. Next week, I hope to have the serial communication bug solved before our final demo and to finish building our mechanical parts.

As I designed, implemented, and debugged my project, there were many new things that I needed to learn. Since hardware programming is not my area of expertise, working on this subsystem required reviewing Arduino basics and learning how the pySerial library works. Since the hardware portion also included the camera and sending an image to the CV algorithm, I also had to familiarize myself with the OpenCV library. Lastly, working with the Jetson took a lot of effort because I was completely new to it. To overcome these challenges, I made sure to read the documentation for the libraries and functions that I was using. For the Jetson, I watched a lot of tutorial videos, especially for setting it up and integrating it with the other parts. I also asked my teammates for help whenever they had relevant prior knowledge.

Ashley’s Status Report for 11/16

This week, I continued working on the serial communication between the Jetson and the Arduino. While working on it, I ran into a bug: when the Jetson keeps listening to the Arduino in a loop, the first round trip of data (Arduino to Jetson to Arduino) works, but the second always causes a port error. I have not found a way around this yet, so for now the Python script stops after the first round trip. The camera also stopped working at one point when I tried to integrate the image capturing functionality into the Jetson code. The Jetson could not recognize the camera, even though it was working before. While I was not able to find the cause, it started working again after I restarted the Jetson a couple of times. My system is now able to detect a nearby object, send a signal to the Jetson to capture the image, save the image, and then send a number back to the Arduino to turn the motor left or right based on the output (the CV algorithm will be run on the image to produce the actual output, which will replace the random number function that I have now). Currently the biggest problem in the hardware system is that the Jetson is a bit unstable: occasionally it is unable to recognize the USB port or the camera, as mentioned earlier. I hope to find the cause of this, or to replace some parts with new ones if that would fix it.
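The detect, capture, classify, and reply flow described above can be sketched roughly as follows. This is only an illustration under assumptions, not the actual script: the `DETECTED` message, the `handle_detections` and `classify_placeholder` names, and the reply format (a digit followed by a newline) are all hypothetical. The `port` argument is anything with `readline()` and `write()`, such as a pySerial `Serial` object, and `capture` is whatever function saves a camera frame and returns its path.

```python
import random
from typing import Callable, List, Tuple


def classify_placeholder(image_path: str) -> int:
    """Stand-in for the CV model: returns 0 (left) or 1 (right) at random."""
    return random.randint(0, 1)


def handle_detections(
    port,
    capture: Callable[[], str],
    classify: Callable[[str], int] = classify_placeholder,
    max_items: int = 1,
) -> List[Tuple[str, int]]:
    """Process up to max_items detections and return (image_path, direction) pairs."""
    handled = []
    while len(handled) < max_items:
        line = port.readline().decode("ascii", errors="ignore").strip()
        if not line:
            # An empty read means the port timed out or closed; stop instead of spinning.
            break
        if line != "DETECTED":  # hypothetical message name sent by the Arduino
            continue  # ignore debug prints or partial lines
        image_path = capture()            # e.g. grab and save a frame with OpenCV
        direction = classify(image_path)  # random for now, CV output later
        port.write(f"{direction}\n".encode("ascii"))
        handled.append((image_path, direction))
    return handled
```

Passing the port and the capture function in as arguments keeps the loop testable without the Jetson, the camera, or the Arduino attached.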

I am currently on schedule, as my system now is able to be integrated with the other subsystems. I will also meet with my teammates tomorrow to work more on integrating the hardware with the CV and the web app before the interim demo.

To verify that my subsystem works, I need the mechanical parts as soon as possible so that I can start testing with actual recyclable and non-recyclable items to see if the system meets the user requirements for operation time and the durability of the servo motor. I do not have the mechanical parts yet; they will be attached to the hardware in the upcoming weeks. However, I was able to build a tiny version of our actual product to test that the servo motor can turn a flat platform to drop an object:

Ashley’s Status Report for 11/9

This week, I spent most of the time familiarizing myself with the Jetson and working on integrating it with the Arduino, which took a lot longer than expected. I ran into some issues while setting up the working environment for the Jetson, because I was completely new to how it works. I also ran into issues while trying to run the hardware code that I had previously written on the Jetson. The serial communication, which worked when I connected the Arduino to the USB port on my laptop, did not work when I ran it on the Jetson. This could be an issue with the setup or an error in the code, so I need to do more research and debugging. Other than that, I looked into the previous work done on the Jetson, which contains the scripts for the computer vision and the camera capture functionality, in order to familiarize myself with these before integrating them with the other hardware components. While I wanted to get much more done beyond the Jetson setup and debugging, I had an extremely busy week with some unexpected events outside of school, which left me barely any time to work on the project. As such, I am slightly behind schedule, because my goal was to have the serial communication working on the Jetson by this week. Since I expect to have more time next week, I hope to figure out the error in the serial communication and have it working before the interim demo.

Ashley’s Status Report for 11/2

This week, I mostly worked on writing code for the serial communication between the Arduino and the Jetson. I didn’t get to work with the actual Jetson, but I wrote Python code that can be run on the Jetson later and combined with the CV algorithm. For now, I have a USB cable connected to my computer that allows serial communication with the Arduino. I made sure that the code for the ultrasonic sensor and the servo motor can work simultaneously, and wrote some mock functions to test that data can be sent back and forth in the order of operations that we aim to have. I also made sure that numeric and string data are sent and received correctly on both ends, since the Arduino will receive the classification as either recyclable, trash, or reject, and will send the weight of the item to the Jetson for the web app. I experimented with the delay between operations; these values will be adjusted when we actually start building our mechanical part.
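As a rough illustration of the two kinds of data exchanged here, the Jetson side could encode the classification and parse the weight along these lines. The wire format is an assumption made for the example: the one-letter codes, the `WEIGHT:` prefix, and the function names are hypothetical, not the format actually used in the project.

```python
from typing import Optional

# Hypothetical one-byte codes for the three classifications named above.
CLASS_CODES = {"recyclable": b"R", "trash": b"T", "reject": b"X"}


def encode_classification(label: str) -> bytes:
    """Turn a classification label into a newline-terminated message for the Arduino."""
    return CLASS_CODES[label] + b"\n"


def parse_weight(line: bytes) -> Optional[float]:
    """Parse a line such as b'WEIGHT:12.5\\r\\n'; return None for anything else."""
    text = line.decode("ascii", errors="ignore").strip()
    if not text.startswith("WEIGHT:"):
        return None
    try:
        return float(text[len("WEIGHT:"):])
    except ValueError:
        # The prefix matched but the number was garbled in transit.
        return None
```

Ending every message with a newline lets each side read whole lines, and returning `None` for unrecognized lines means stray debug output on the port is ignored rather than misread as a weight.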

I am slightly behind schedule, as the weight sensor has not arrived yet. This was my fault, because I did not realize until the middle of this week that the order had not been placed. However, I have the code ready, so I will be able to start testing as soon as I receive the sensor. Next week, I hope to finally run and test code on the Jetson, and to integrate more hardware parts in addition to the ultrasonic sensor and the servo motor.

Ashley’s Status Report for 10/26

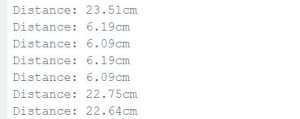

This week, I started testing the ultrasonic sensor and the servo motors with the Arduino code that I wrote. For the ultrasonic sensor, the code works as expected and the sensor is pretty accurate in determining the distance of a nearby object. For now, I have it so that it measures the distance every second. I held it from some distance above a flat surface, and tested with placing some objects above it. However, when I tested with some small, thin objects, there were times when the distance increased instead of decreasing. This might be due to the ultrasonic sensor not being fixed in place or because I’m measuring too often, so I will adjust this more when we start building our mechanical part. Also, the weight sensor will be better at sensing these thin objects once it’s incorporated. Here is the current output of the ultrasonic sensor in the serial monitor:

For the servo motor, I wrote a simple sketch that turns it 90 degrees to the left or right every few seconds. The motor works as expected, so I can build on this to turn it in a given direction based on the output that the Jetson produces after the CV classification.
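For the occasional ultrasonic readings that jumped upward with thin objects, one common way to dampen isolated outliers is a sliding median over the last few measurements. The sketch below is only a suggestion and was not part of the testing described in this report; the `MedianFilter` name and the window size are assumptions, and it is written in Python to show the logic, which could run on the Jetson or be ported to the Arduino.

```python
from collections import deque
from statistics import median


class MedianFilter:
    """Keep the last `window` distance readings and report their median."""

    def __init__(self, window: int = 5):
        # deque with maxlen discards the oldest reading automatically
        self.readings = deque(maxlen=window)

    def update(self, distance_cm: float) -> float:
        """Add a new reading and return the smoothed distance."""
        self.readings.append(distance_cm)
        return median(self.readings)
```

With a window of three, a single spike such as 20, 20, 55, 20 cm is reported as 20 cm throughout, at the cost of reacting a reading or two later when an object really is placed.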

I am currently on schedule, but I have not received the weight sensor yet. Since the weight sensor was a recent change in our design, I might need a bit more time to finish the hardware implementation. But since the weight sensor is just an additional check in our object detection system, it should not take too much time. Next week, I hope to focus more on working with the Jetson on the serial communication with the Arduino and the camera capture functionality with our Arducam. I also hope to conduct more thorough testing of the ultrasonic sensor and the servo motors, with the different materials that could be placed on our platform.

Team Status Report for 10/20

In addition to the display screen attached to the front of the recycling bin, we decided to also incorporate a phone app that users can use to track how much they are recycling, receive recycling tips, keep a recycling streak, and earn badges for certain achievements. We added this because, while a display screen is useful for showing users how much they are recycling, it can be difficult to show more complicated statistics on an LCD screen. Adding a web app will make the user experience better and allow us to add more features that encourage users to recycle more. While most of the app can be implemented using free resources such as React, incorporating things like user authentication to connect the app to a specific recycling bin would require AWS services, which we may need to pay for if we exceed the free tier’s limits. Luckily, these AWS features are fairly cheap and shouldn’t cost more than a few dollars, and since we have a lot of room left in our budget, they will not significantly affect our costs.

Adding a major component to our project adds some complexity to the design, as well as some risk. We will have to work out how to send data from the recycling system to the web app, but we have several options: the Jetson and Arduino both have Wi-Fi capabilities, or we could add a Raspberry Pi (which has better internet connectivity features) specifically to support the web app. In the worst-case scenario, we could drop the web app and only show statistics on the OLED displays.

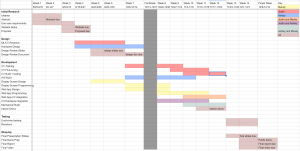

This is our updated Gantt chart for our project with the added web app.

The following are answers to questions about how our design will meet the specific needs for global, cultural, and environmental factors.

Part A: Done by Mandy Hu

EcoSort is designed specifically for use within the city of Pittsburgh, as its classification of what can and cannot be recycled is based on Pittsburgh’s recycling laws. However, Pittsburgh’s recycling laws are very similar to laws in other parts of the country and the world that also use single-stream recycling. Therefore, although some specifics may differ slightly depending on where in the world the user is, using EcoSort will still help improve recycling habits and decrease the amount of wishful recycling. That being said, we recognize that in certain parts of the world, recycling is already much more effective than in America, especially in countries such as South Korea and Germany, which use multi-stream recycling. In these countries, EcoSort will not be particularly useful, as they already have well-established recycling habits.

EcoSort is also made to be user-friendly no matter where it is being used. It is used like any regular trash can or recycling bin, with the only stipulation being that items must be placed one at a time. The actual bins are the same as normal trash and recycling bins, and the mechanics to remove and empty them are simple and require no knowledge of technology. Although it comes with an app, the bin does not require a connection to the app in order to be used, so users who are not tech-savvy may choose to forgo the app to simplify the process.

Part B: Done by Justin Wang

EcoSort’s target audience is Pittsburgh homes, and as a result it was designed using Pittsburgh recycling laws, namely single-stream recycling. However, EcoSort may be used by people with different language and cultural backgrounds, and we want EcoSort to be easy to use for anyone in our target demographic, no matter their background. To that end, the recycling and trash bins will be labeled with symbols that can be understood by anyone. For the bin that holds the recycling, we will choose a bin that has the recycling symbol (three arrows in a triangle), and is a different color. We will also label the bin slots (where the individual bins will go) with symbols. EcoSort will be easy to use, so as long as the bins are in the correct slots, a user will only have to place items in the center of the platform under the camera (this will also be labeled), and the sorting process will happen automatically.

If we go forward with the web app for recycling statistics tracking, then we can also look into supporting multiple languages. I am not the team’s expert on web apps, but after some research I found that web app frameworks like Django or Flask support l10n, or localization, which can allow a web app to serve translated content without having to change any code. We will look into this further as part of planning the web app.

Part C: Done by Ashley Ryu

EcoSort aims to contribute to environmental sustainability by reducing recycling contamination, which has significant impacts on natural ecosystems and resource conservation. By automatically sorting items into either recycling or trash bins, users will properly recycle items even when they are unsure if an item is recyclable or not. For safety, when the system cannot classify an item, it will sort it into the trash, as it’s better to mislabel recyclable items as trash than to risk contaminating recycling with non-recyclables. This reduces the amount of non-recyclable waste that ends up contaminating recycling streams, improving the efficiency of recycling facilities. Proper sorting of trash reduces harmful waste in landfills, which helps reduce environmental damage to wildlife and ecosystems. In summary, EcoSort helps people develop better recycling habits by making sure more items are properly recycled and reused. This reduces waste, protects wildlife, and saves natural resources for the future.

Ashley’s Status Report for 10/20

Last week, I mainly focused on working on the design review with my teammates. For the hardware subsystem of our design, we thought that the ultrasonic sensor alone might not be enough to detect every item placement. A weight sensor in addition to the ultrasonic sensor could help improve the accuracy of item placement detection, especially for thin materials like paper. We also considered adding more ultrasonic sensors for better accuracy, which should not require much change to the code that I already have. The number of ultrasonic sensors will be decided once I’m able to start testing with them.
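The idea of using the weight sensor as a second check can be sketched as a simple "either sensor" rule. This is a minimal illustration, not a tested design: the `item_placed` name and every threshold below (the baseline distance to the empty platform, the minimum distance drop, and the minimum weight) are placeholder assumptions that would need to be calibrated on the real build.

```python
def item_placed(
    distance_cm: float,
    weight_g: float,
    baseline_cm: float = 30.0,   # assumed distance to the empty platform
    min_drop_cm: float = 2.0,    # assumed smallest drop that counts as an object
    min_weight_g: float = 1.0,   # assumed smallest weight that counts as an object
) -> bool:
    """Report an item if either the ultrasonic or the weight reading indicates one."""
    closer = (baseline_cm - distance_cm) >= min_drop_cm
    heavier = weight_g >= min_weight_g
    return closer or heavier
```

With these placeholder values, a sheet of paper that changes the distance by only 0.2 cm but weighs 5 g is still detected, which is the case the weight sensor was added for.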

While I did not have much time over fall break, I took some time to look into how I can use the ultrasonic sensor and the weight sensor at the same time, and which models I could order. I found useful starter code and a testing tutorial for the HX711 model, and I hope to place an order for it by next week. In addition, we decided on the servo model and placed the order, so I also found a tutorial that shows how to control the angle and speed of the servo with an Arduino.

I realized that working with hardware components and testing them requires having the actual components in hand, and that online tools like Tinkercad have many limitations, since the software does not include all the components that we are using. I hope to start testing and finalize the hardware code on campus once I get back and receive the Arducam and the servo motor. I also hope to figure out how to integrate the camera functionality with the Jetson while it is being used for classification, as I am also in charge of the hardware integration.

Ashley’s Status Report for 10/5

Last Sunday, I worked on finalizing our design slides for the presentation. Later in the week, on Thursday, I picked up the ultrasonic sensor that was delivered. I wrote Arduino code that measures the distance to a nearby object with the ultrasonic sensor, which can be incorporated into our product later. For now, it prints the distance to the object on the console, but later this will trigger the camera to capture an image. I simulated this in Tinkercad, which I was using last week, and once I have all the physical components to connect to the Arduino, I will test how accurate our ultrasonic sensor is at object detection. I also looked more into setting up serial communication between the Arduino and the Jetson, in order to implement the image capture trigger. And since our camera has now been delivered (though I have not picked it up yet), I looked into how the Jetson can capture an image when it receives the signal that an object has been detected. I found some Python code online that does a similar job, and it does not look too complicated. By next week, along with working on our design report, I hope to start setting up all the hardware components and perform some basic tests.

Ashley’s Status Report for 9/28

This week I worked with my teammates on the design presentation and we made some changes to the design. The hardware part will mostly remain the same, so I continued with my research on the hardware components I will be working on. We finalized which model we wanted to use, and placed the orders for the ultrasonic distance sensor and the Arducam camera.

I also found a software called Tinkercad that allows you to simulate circuits. I saw that it includes the exact ultrasonic sensor that I wanted to use, and it allows you to write code. I played around with it a little and hopefully this will help me test and verify that it works before I start working with the actual components. Tinkercad lets me do something like this:

I am a little bit behind schedule, due to many overlapping deadlines and upcoming exams. I have done research on how the components work and what pieces of code I will have to write, but I don’t have any physical components yet. Next week, since I expect to have a bit more time, I hope to have a more solid hardware design and to start setting up the components once the orders arrive.