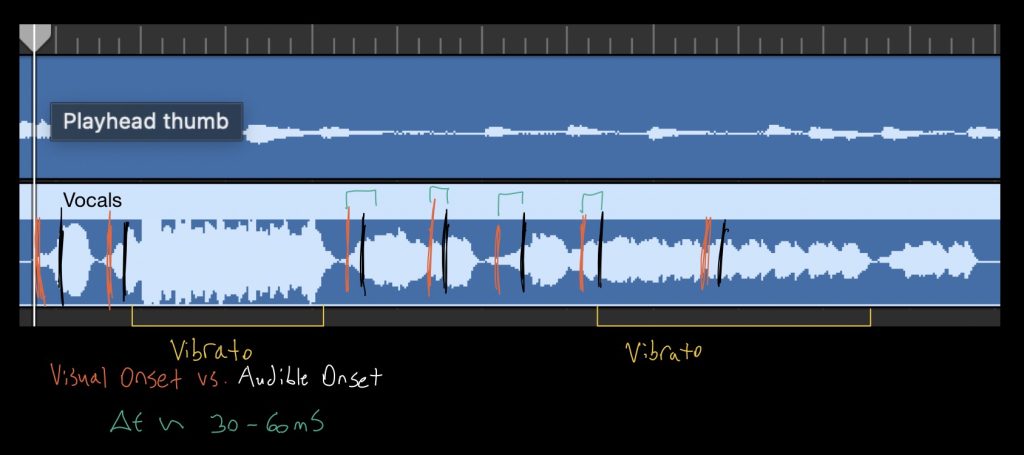

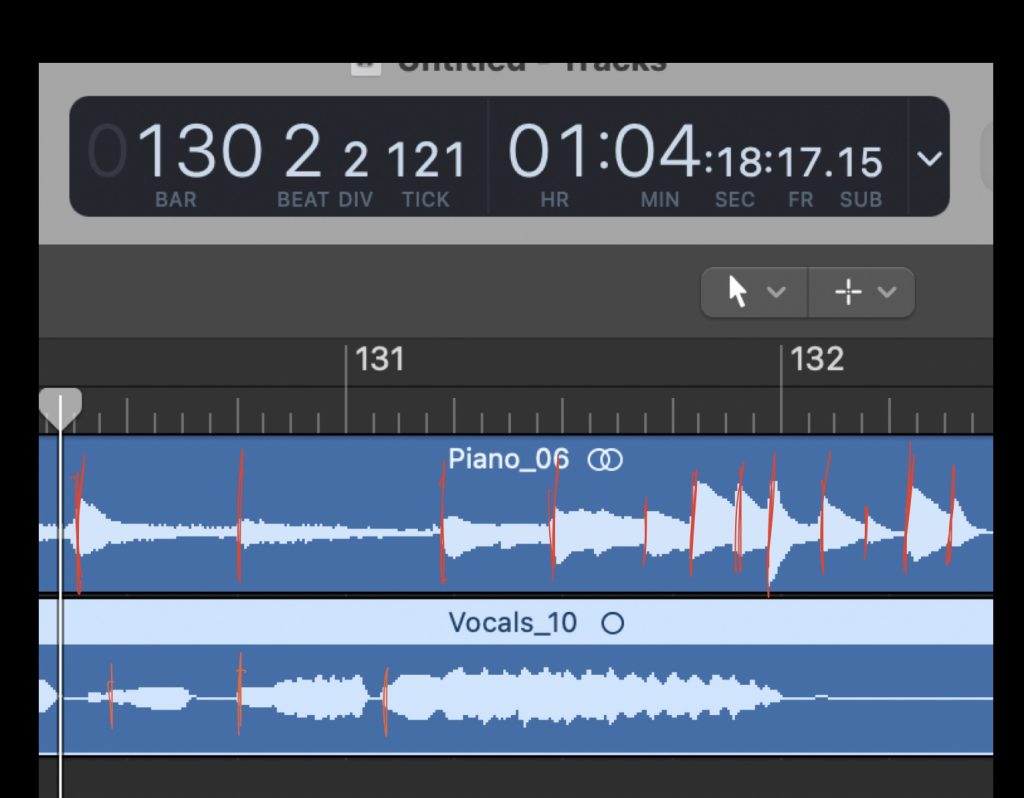

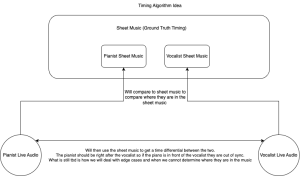

This week consisted of completing the design presentation and doing some initial prototyping on the timing comparison algorithm. I have built a basic timing algorithm prototype in Python using the data format we expect from the sheet music and the audio processing. From the sheet music, each note arrives as a string such as 'd1/4', where d is the note D and 1/4 marks a quarter note. From the audio data, I will receive a tuple of the form (onset time, pitch), for example (0, 'd'), where 0 is the onset time and d is the note D.
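As an illustration of how these two formats could be lined up, here is a minimal sketch (not the prototype in our repo). It assumes one beat is a quarter note, a constant tempo given in beats per minute, audio onset times in seconds with the first note at 0, and exactly one detected onset per written note, in order. The names `parse_token`, `expected_onsets`, and `timing_errors`, and the optional accidental in the token pattern, are hypothetical.

```python
import re
from fractions import Fraction

# Assumed token format: note letter, optional accidental (hypothetical), then a
# fraction of a whole note, e.g. 'd1/4' for a quarter-note D.
TOKEN_RE = re.compile(r"^([a-g][#b]?)(\d+)/(\d+)$")

# Assumption: one beat is a quarter note.
BEAT = Fraction(1, 4)


def parse_token(token):
    """Split a sheet-music token into (pitch, length in beats)."""
    match = TOKEN_RE.match(token)
    if match is None:
        raise ValueError(f"unrecognized note token: {token!r}")
    pitch, num, den = match.groups()
    length = Fraction(int(num), int(den))  # fraction of a whole note
    return pitch, length / BEAT


def expected_onsets(tokens):
    """Return (onset in beats, pitch) tuples, mirroring the audio tuple format."""
    onsets = []
    beat = Fraction(0)
    for token in tokens:
        pitch, beats = parse_token(token)
        onsets.append((beat, pitch))
        beat += beats
    return onsets


def timing_errors(tokens, detected, bpm):
    """Pair expected and detected onsets in order.

    Returns (expected pitch, detected pitch, error in seconds) per note, where a
    positive error means the note was played late. zip() stops at the shorter
    list, so missing or extra detections are simply not compared here.
    """
    seconds_per_beat = 60 / bpm
    results = []
    for (beat, pitch), (onset, heard) in zip(expected_onsets(tokens), detected):
        error = onset - float(beat) * seconds_per_beat
        results.append((pitch, heard, error))
    return results
```

For the example tokens ['d1/4', 'd1/4', 'd1/2', 'd1/2'], `expected_onsets` places the notes at beats 0, 1, 2 and 4. Using `Fraction` keeps beat positions exact, so dotted or triplet durations do not accumulate rounding error before the final conversion to seconds.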

In terms of implementation, I have been thinking about which data transformations are needed to analyze this data properly. I considered transforming the sheet music into an array indexed by beat, but on its own such an array doesn't distinguish between a new note and a sustained note. Because we are focusing on note onsets for the timing data, I am considering using this array with sustained beats marked explicitly, and focusing only on onsets for this first prototype. For example, given ['d1/4', 'd1/4', 'd1/2', 'd1/2'], and with one beat equal to a quarter note, the per-beat structure would be [d, d, d, s, d, s], where s stands for sustained. I still need to consider edge cases such as notes shorter than a beat, but these can be handled by using an increment that is a fraction of a beat and scaling accordingly.
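The per-beat structure described above can be sketched as follows. This is a minimal illustration, assuming a quarter note is one beat and that the pitch is everything before the first digit of a token. The `step` parameter (the increment, as a fraction of a beat) and the function names are hypothetical; a note that does not fill a whole number of steps raises an error instead of being silently rounded.

```python
from fractions import Fraction

SUSTAIN = "s"


def note_length_in_beats(token):
    """'d1/2' -> ('d', Fraction(2)), assuming a quarter note is one beat."""
    # Assumption: the pitch is everything before the first digit.
    split = next((i for i, ch in enumerate(token) if ch.isdigit()), None)
    if split is None or split == 0:
        raise ValueError(f"unrecognized note token: {token!r}")
    return token[:split], Fraction(token[split:]) / Fraction(1, 4)


def per_step_grid(tokens, step=Fraction(1)):
    """Expand tokens into one slot per `step` beats.

    A slot holds the pitch where a note starts and SUSTAIN while the previous
    note is still held. Use e.g. step=Fraction(1, 2) when eighth notes occur.
    """
    grid = []
    for token in tokens:
        pitch, beats = note_length_in_beats(token)
        slots = beats / step
        if slots.denominator != 1:
            raise ValueError(f"{token!r} does not fit a step of {step} beats")
        grid.append(pitch)
        grid.extend([SUSTAIN] * (int(slots) - 1))
    return grid


def onset_steps(grid):
    """Indices of slots where a new note begins (the timing targets)."""
    return [i for i, slot in enumerate(grid) if slot != SUSTAIN]
```

With the default step of one beat, `per_step_grid(['d1/4', 'd1/4', 'd1/2', 'd1/2'])` yields `['d', 'd', 'd', 's', 'd', 's']`, and `onset_steps` on that grid gives `[0, 1, 2, 4]`. Halving the step doubles the number of slots but leaves the onset times in beats unchanged once indices are scaled by `step`.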

I have also started a GitHub repo to hold our project code and pushed some prototype code to it.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is on schedule so far.

What deliverables do you hope to complete in the next week?

Next week I want to focus on getting real data from the music we have recorded, measuring the current timing latency of the Python implementation, and deciding whether a C++ implementation is needed. I also want to finish a basic prototype based on the data we recorded before spring break. I have three exams next week, so I may not accomplish all of this, but I think it is reasonably achievable.