I spent a lot of time this week making sure my system is robust and that we are ready for the demo next week. We are going to demo on a simpler piece of music that doesn’t have complex chords, so I am making sure the system is reliable. In its current state, the system parses the sheet music data from the MusicXML and outputs each note in the form (pitch, onset time, duration).
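
A minimal sketch of this kind of parser, using only Python's standard library, might look like the following. The function name `parse_part`, the default part id `"P1"`, and the choice to report pitch as a MIDI number and times in quarter-note beats (rather than seconds, which would need the tempo) are all assumptions made for illustration, not details of the actual system.

```python
import xml.etree.ElementTree as ET

# Semitone offsets of the natural notes within an octave.
STEP_TO_SEMITONE = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}


def parse_part(xml_text, part_id="P1"):
    """Return [(midi_pitch, onset_beats, duration_beats), ...] for one part.

    Hypothetical helper: assumes an uncompressed score-partwise MusicXML
    document and measures time in quarter-note beats.
    """
    root = ET.fromstring(xml_text)
    notes = []
    divisions = 1.0    # MusicXML duration units per quarter note
    cursor = 0.0       # current position in beats
    last_onset = 0.0   # onset of the most recent non-chord note
    for part in root.findall("part"):
        if part.get("id") != part_id:
            continue
        for measure in part.findall("measure"):
            for elem in measure:
                if elem.tag == "attributes":
                    div = elem.findtext("divisions")
                    if div is not None:
                        divisions = float(div)
                elif elem.tag in ("backup", "forward"):
                    beats = float(elem.findtext("duration")) / divisions
                    cursor += beats if elem.tag == "forward" else -beats
                elif elem.tag == "note":
                    dur_text = elem.findtext("duration")
                    if dur_text is None:
                        continue  # grace notes carry no duration
                    beats = float(dur_text) / divisions
                    # A <chord/> note starts together with the previous note.
                    is_chord = elem.find("chord") is not None
                    onset = last_onset if is_chord else cursor
                    pitch = elem.find("pitch")
                    if pitch is not None:  # rests have no <pitch>
                        midi = (12 * (int(pitch.findtext("octave")) + 1)
                                + STEP_TO_SEMITONE[pitch.findtext("step")]
                                + int(float(pitch.findtext("alter") or 0)))
                        notes.append((midi, onset, beats))
                    if not is_chord:
                        last_onset = cursor
                        cursor += beats
    return notes
```

Rests advance the time cursor without producing a tuple, so the onsets of later notes stay correct.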

It then takes this data and compares it to the audio data to find where the two are similar. This is done using a modified dynamic time warping algorithm that prioritizes onset time, then duration, then pitch when comparing two notes. It outputs a list of (sheet music index, audio index) pairs, which are the indexes where the audio matches the sheet music. This looks like this:

This still needs a little work because when the audio data isn’t edited, there is a lot of noise that can disrupt the algorithm. This can be mitigated by preprocessing the data and by allowing the algorithm to be more selective when matching indexes. Currently every index has to be matched to another, but the algorithm can be modified so this is not the case. Once these changes are implemented, it should be much more accurate. The preprocessing is going to be done with a moving average to get rid of the erratic note anomalies, which should be relatively easy to implement. I will have to do more research on dynamic time warping to see if there is a signal processing technique I can use to help with the erroneous note matches. If there isn’t, I will manually iterate through the list and remove audio notes that match with the sheet music data more than once, which should increase accuracy.
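
The two cleanup ideas above could be sketched roughly as follows. Both function names are hypothetical. The sketch assumes the moving average is applied to a plain list of detected pitch values, and it reads "remove audio notes that match more than once" as keeping, for each audio index, only the pairing whose onsets are closest; the real system may apply either step differently.

```python
def moving_average(values, window=3):
    """Centered moving average; the window shrinks at the edges of the list."""
    half = window // 2
    smoothed = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        smoothed.append(sum(values[lo:hi]) / (hi - lo))
    return smoothed


def keep_best_match_per_audio_note(path, sheet, audio):
    """Keep one (sheet index, audio index) pair per audio index.

    `sheet` and `audio` are lists of (pitch, onset, duration) tuples.
    When an audio note is paired with several sheet notes, only the
    pairing with the smallest onset gap survives (an assumed tie-break rule).
    """
    best = {}  # audio index -> (sheet index, onset gap)
    for s_idx, a_idx in path:
        gap = abs(sheet[s_idx][1] - audio[a_idx][1])
        if a_idx not in best or gap < best[a_idx][1]:
            best[a_idx] = (s_idx, gap)
    return [(s_idx, a_idx) for a_idx, (s_idx, _) in sorted(best.items())]
```

One thing to keep in mind is that a moving average spreads a single outlier across its neighbors rather than removing it outright, so the window size would need some tuning.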

I have worked on increasing the accuracy of the audio matching by changing parameters and fine-tuning the distance function within my custom dynamic time warping implementation. I am still learning the details of how this works mathematically in order to create an optimized solution, but I am getting there. Getting better data from Ben will certainly help with this as well, but I want to make sure the system is robust even with noise.
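
For reference, a textbook dynamic time warping alignment with a weighted note distance can be sketched as below. This is the standard algorithm, not the modified version described above, and the names `note_distance` and `dtw_align` as well as the weight values are placeholders chosen only so that onset counts most, then duration, then pitch.

```python
def note_distance(a, b, w_onset=1.0, w_duration=0.5, w_pitch=0.05):
    """Weighted distance between two (pitch, onset, duration) notes.

    The weights are illustrative placeholders, not tuned values.
    """
    return (w_onset * abs(a[1] - b[1])
            + w_duration * abs(a[2] - b[2])
            + w_pitch * abs(a[0] - b[0]))


def dtw_align(sheet, audio, dist=note_distance):
    """Return the lowest-cost warping path as (sheet index, audio index) pairs."""
    n, m = len(sheet), len(audio)
    inf = float("inf")
    # cost[i][j] = best cumulative cost of aligning sheet[:i] with audio[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = dist(sheet[i - 1], audio[j - 1])
            cost[i][j] = step + min(cost[i - 1][j - 1],
                                    cost[i - 1][j],
                                    cost[i][j - 1])
    # Walk back from the end, always following the cheapest predecessor.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        best = min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
        if best == cost[i - 1][j - 1]:
            i, j = i - 1, j - 1
        elif best == cost[i - 1][j]:
            i -= 1
        else:
            j -= 1
    path.reverse()
    return path
```

In this plain form every index on both sides ends up in at least one pair, which is exactly the behavior that makes noisy audio notes a problem. Changing the weights in `note_distance` is the kind of tuning that shifts which pairings the path prefers.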

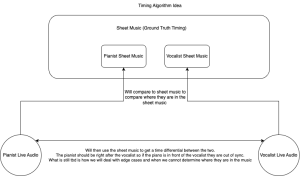

It does this for both the singer portion of the sheet music and the piano portion. It then finds where there are simultaneous notes in both portions so it can check there whether they are in sync or out of sync. It gives an output of (singer index, piano index) pairs indicating where to check in the sheet music data for simultaneous notes. The output looks like this:

It then compares the audio data to the shared note points and checks whether they agree to within a certain threshold, which is currently 0.01 seconds. It then sends the sheet music time to the frontend, which highlights that note.
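
The simultaneous-note search and the threshold check could be sketched roughly like this. The function names, the `tol` parameter, and the dictionaries mapping a sheet music index to its matched audio onset are hypothetical. The sketch also assumes that "simultaneous" means equal onsets in the sheet music and that the check compares the singer's and the pianist's audio onsets with each other.

```python
SYNC_THRESHOLD_S = 0.01  # threshold from the text, in seconds


def shared_onsets(singer, piano, tol=1e-6):
    """Return (singer index, piano index) pairs whose sheet onsets coincide.

    Both inputs are lists of (pitch, onset, duration) sorted by onset.
    """
    pairs = []
    i = j = 0
    while i < len(singer) and j < len(piano):
        s_on, p_on = singer[i][1], piano[j][1]
        if abs(s_on - p_on) <= tol:
            pairs.append((i, j))
            i += 1
            j += 1
        elif s_on < p_on:
            i += 1
        else:
            j += 1
    return pairs


def check_sync(pairs, singer_audio_onsets, piano_audio_onsets,
               threshold=SYNC_THRESHOLD_S):
    """Return (singer index, piano index, in_sync) for each shared point.

    The two dicts map a sheet index to the onset (seconds) of the audio
    note matched to it; unmatched shared points are skipped.
    """
    results = []
    for s_idx, p_idx in pairs:
        if s_idx not in singer_audio_onsets or p_idx not in piano_audio_onsets:
            continue
        offset = singer_audio_onsets[s_idx] - piano_audio_onsets[p_idx]
        results.append((s_idx, p_idx, abs(offset) <= threshold))
    return results
```

When the piano has several notes at one onset, this pairs the singer's note with the first of them and skips the rest.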

Verification:

One of the verification tests I have run so far is manually comparing the processed sheet music data to the sheet music PDF to ensure it looks correct. This isn’t a perfect way to go about it, but it works well enough for now, and I don’t know of an automated way to do this because I am creating the data from the MusicXML. One way to automate this verification might be to create a new MusicXML file from the data I parsed and compare the two files to ensure they match.

Some other tests I plan on doing is

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am on schedule. There is still a lot of refining to do before the final demo, but the core functionality is there and it’s looking very promising.

What deliverables do you hope to complete in the next week?

I want to keep working on improving the system so we can process more complicated pieces. For our demo we have it working with simple pieces, but I want to improve the chord handling and error handling.