Over the past week, I’ve been working on the game state tracker and on some integration tasks, such as getting the camera to take pictures from within our Python program rather than only from the command line. Cody and I spent much more time on this than it should have taken. We kept getting an error that the camera was busy and couldn’t take the picture. We eventually learned that this was because we had initialized a cv2 (OpenCV) camera instance we had been thinking of using and forgot to comment it out, so it was still holding the camera.

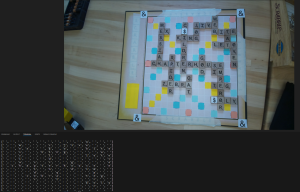
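For reference, here is a minimal sketch of triggering a capture from Python by calling a command-line camera tool. It assumes a Raspberry Pi camera driven by `libcamera-still`. This post doesn’t say which tool we used, so that command and the `capture_image` helper are hypothetical. The key constraint is the one we tripped over: nothing else in the program, such as a leftover `cv2.VideoCapture`, can be holding the camera open while the command runs.

```python
import subprocess
from pathlib import Path

# Assumption: a Raspberry Pi camera driven by the libcamera-still CLI
# (-n disables the preview window, -o names the output file).
# Substitute whichever command already works from the shell.
DEFAULT_CAPTURE_CMD = ["libcamera-still", "-n", "-o"]


def capture_image(out_path, base_cmd=None, timeout=30):
    """Hypothetical helper: take a picture by running the camera CLI from Python.

    The camera must not be held open elsewhere in the program (for example by
    an uncommented cv2.VideoCapture), or the CLI reports the device as busy.
    """
    cmd = list(base_cmd or DEFAULT_CAPTURE_CMD) + [str(out_path)]
    result = subprocess.run(cmd, capture_output=True, text=True, timeout=timeout)
    if result.returncode != 0:
        # Surface the tool's own error text (e.g. a "busy" message) to the caller.
        raise RuntimeError(f"capture failed: {result.stderr.strip()}")
    return Path(out_path)
```

Calling `capture_image("board.jpg")` would save the photo and return its path. If the tool exits with an error, such as the device-busy error we hit, the message comes back as a `RuntimeError` rather than being lost.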

I had already written the functions for most of the intricacies of the game state tracker, so the rest was just interfacing with the MQTT communication that Denis set up. Today, we tested the whole system together, and it went quite well. I found a couple of bugs in the check_board function I had written, in how it calculated the start and end columns of a horizontal word. After fixing those, when we pressed the “Check” button on an individual player’s touch screen, we got the point total and validity back! We are still deciding on a convention for submitting words, namely whether to require a check before a word can be submitted.
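Finding the start and end columns of a horizontal word is easy to get off by one at the board edges. Below is a minimal sketch of the usual approach: walk left and right from a placed tile until you reach an empty square or the edge of the board. It assumes the board is a list of rows with `None` for empty squares. The real `check_board` may store the board differently, and `horizontal_span` and `horizontal_word` are hypothetical names.

```python
from typing import List, Optional, Tuple

# Assumed board representation: rows of letters, None for an empty square.
Board = List[List[Optional[str]]]


def horizontal_span(board: Board, row: int, col: int) -> Tuple[int, int]:
    """Return the inclusive (start_col, end_col) of the horizontal word through (row, col)."""
    if board[row][col] is None:
        raise ValueError("no tile at the given square")
    start = col
    # Extend left while the square to the left is on the board and occupied.
    while start > 0 and board[row][start - 1] is not None:
        start -= 1
    end = col
    last_col = len(board[row]) - 1
    # Extend right while the square to the right is on the board and occupied.
    while end < last_col and board[row][end + 1] is not None:
        end += 1
    return start, end


def horizontal_word(board: Board, row: int, col: int) -> str:
    """Read the letters between the span's endpoints."""
    start, end = horizontal_span(board, row, col)
    # end is inclusive, so the slice needs end + 1.
    return "".join(board[row][start:end + 1])
```

For a row `[None, "C", "A", "T", None]`, `horizontal_span(board, 0, 2)` gives `(1, 3)` and `horizontal_word(board, 0, 2)` gives `"CAT"`. Mixing inclusive and exclusive end columns between the two steps is a common way to drop or add a letter.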

I am happy with where we are now, and hopefully we can finish the home stretch relatively smoothly. I’ve been working on the final report for the past three weeks, building on the design report, so I think we should have it done soon.