Over the past couple of weeks, I’ve focused my time on integrating the individual elements of our project and on some of the smaller tasks the project still needs.

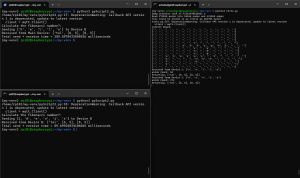

A major focus over the past couple of weeks has been configuring our touch screens and writing the scripts that will run on our Raspberry Pi Zeros and the Raspberry Pi 4. This was difficult and took a lot of debugging and troubleshooting. However, we now have a consistent setup that gets the touch screen working almost every time. We will replicate this setup on the remaining three Raspberry Pi Zeros.

We also designed a casing for the screens to contain the battery packs and converters.

I believe that we are on pace for the final demo and will be in a good place for the rest of the semester.

I gained a lot of skills throughout the semester, both clearly defined technical skills and softer problem-solving skills. I got the chance to work with new Python modules and with a protocol that was new to me, MQTT. Learning a new protocol and weighing the tradeoffs between different options was a good exercise in design. Debugging the LCD touchscreens was another valuable experience: running into a snag with no clear solution was a good chance to practice troubleshooting. Digging through boot logs and old forum threads was definitely a learning experience.