Since the last status report, I have made significant progress on the hardware subsystem, helped integrate our subsystems, and gathered data for our ML models.

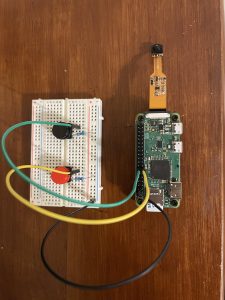

On the hardware side, the component case has been printed and assembled, and it is now complete. Printing the case turned out to be much more difficult than I expected: some prints failed partway through, someone else stopped one of my prints, and others succeeded but had minor design errors that required a reprint. Despite these problems, the glasses attachment is now assembled, as pictured above.

The camera is on the right face, the buttons are on the top face, and the charging port, power switch, and other ports are on the left face. As you can see, there is some minor discoloration, but fixing it is not a priority at the moment. If I have extra time next week, it should be a relatively easy fix.

I have also helped integrate the subsystems. Specifically, I added code to the Raspberry Pi so that when the start button is pressed, it not only sends an image to the Jetson but also receives the extracted description for that image. The Pi then forwards this description to our iOS app, which reads it aloud. For now, though, our code runs locally on our laptops rather than on the Jetson, since we are prioritizing getting the system working first.
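The button-triggered round trip described above (image out, description back, description forwarded to the app) can be sketched in Python. This is a minimal sketch under assumptions, not our actual code: the report does not specify the transport or message format, so the 4-byte length-prefixed TCP framing and the names `send_msg`, `recv_msg`, and `on_start_pressed` are hypothetical. GPIO button handling and camera capture are left out so the example runs without Pi hardware.

```python
import socket
import struct
import threading

# Hypothetical wire format: a 4-byte big-endian length followed by the payload.
# The real protocol between the Pi, the Jetson/laptop, and the iOS app may differ.

def send_msg(sock: socket.socket, payload: bytes) -> None:
    """Send one length-prefixed message."""
    sock.sendall(struct.pack(">I", len(payload)) + payload)

def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, raising if the peer closes early."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed before message was complete")
        buf.extend(chunk)
    return bytes(buf)

def recv_msg(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = struct.unpack(">I", recv_exact(sock, 4))
    return recv_exact(sock, length)

def on_start_pressed(image_bytes: bytes, jetson: socket.socket, app: socket.socket) -> str:
    """Hypothetical start-button handler: image -> Jetson -> description -> iOS app."""
    send_msg(jetson, image_bytes)                    # 1. send the captured image
    description = recv_msg(jetson).decode("utf-8")  # 2. wait for the extracted description
    send_msg(app, description.encode("utf-8"))      # 3. forward it to the app to be read aloud
    return description

if __name__ == "__main__":
    # Local demo: socket pairs stand in for the Jetson and the iOS app connections.
    pi_jetson, jetson_end = socket.socketpair()
    pi_app, app_end = socket.socketpair()

    def fake_jetson() -> None:
        image = recv_msg(jetson_end)
        send_msg(jetson_end, f"received {len(image)} bytes".encode("utf-8"))

    worker = threading.Thread(target=fake_jetson)
    worker.start()
    print(on_start_pressed(b"fake image", pi_jetson, pi_app))
    worker.join()
    print(recv_msg(app_end).decode("utf-8"))
```

Length prefixing is one common way to send variable-size payloads such as images over a stream socket, because TCP does not preserve message boundaries on its own; the same framing works whether the peer is the Jetson or a laptop.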

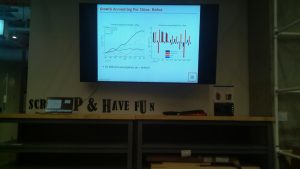

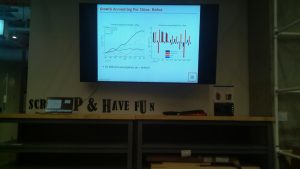

Finally, I spent many hours gathering image data for our ML models and manually annotating it. For the slide matching model, I used a script I had previously written on the Pi to capture images of slides whose slide numbers appear in a box in the bottom-right corner; one such image is shown above. We gathered a couple hundred of these images. For the graph description model, I helped write descriptions for a few hundred graphs, covering their trends and general shape.
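The report does not say how the graph descriptions were stored. As an illustration only, the sketch below assumes one JSON object per line (JSON Lines) with hypothetical `trend` and `shape` fields alongside the free-text description, and rejects trend labels outside a small assumed vocabulary so annotation typos are caught before training.

```python
import json
from dataclasses import asdict, dataclass
from typing import Iterable, List

# Hypothetical label vocabulary; the team's actual categories are not specified.
ALLOWED_TRENDS = {"increasing", "decreasing", "flat", "mixed"}

@dataclass
class GraphAnnotation:
    image_path: str   # path to the graph image (hypothetical layout)
    description: str  # free-text description written by an annotator
    trend: str        # overall trend, one of ALLOWED_TRENDS
    shape: str        # general shape, e.g. "linear" or "peaked"

def to_jsonl(records: Iterable[GraphAnnotation]) -> str:
    """Serialize annotations as JSON Lines, validating the trend label."""
    lines = []
    for r in records:
        if r.trend not in ALLOWED_TRENDS:
            raise ValueError(f"unknown trend {r.trend!r} for {r.image_path}")
        lines.append(json.dumps(asdict(r)))
    return "\n".join(lines)

def from_jsonl(text: str) -> List[GraphAnnotation]:
    """Parse JSON Lines back into annotation records, skipping blank lines."""
    return [GraphAnnotation(**json.loads(line)) for line in text.splitlines() if line.strip()]
```

Keeping each annotation on its own line makes it easy for several people to append descriptions independently and merge the files afterwards.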

My progress is behind schedule because the team as a whole is behind schedule. We should currently be testing our system with users, but we cannot do so until the system is complete. I am also supposed to have deployed our code to the Jetson, but that requires finalized code. As a team, we will need to spend time finalizing the integration of our subsystems to get back on track.

In the next week, I hope to put all necessary code on the Jetson so that the hardware is completely ready. I will also help finalize integration for our project. After that, I will help with user testing and work on the final deliverables for our project.