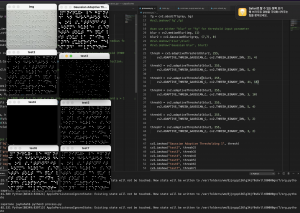

This week, I was able to convert our trained neural network from Apache’s MXNET framework, which AWS uses to train image classification networks, to ONNX (Open Neural Network Exchange), an open-source ecosystem for interoperability between NN frameworks. Doing so allowed me to untether our software stack from MXNET, which was unreliable on the Jetson Nano. As a result, I was able to use the onnx-runtime package to run our model using three different providers: CPU only, CUDA, and TensorRT.

Surprisingly, when testing CPU against CUDA/TensorRT, CPU peformed the best in inference latency. While I am not sure yet why this may be the case, there are some reports online of a similar issue where the first inference after a pause on TensorRT is much slower than following inferences. Furthermore, TensorRT and CUDA have more latency overhead on startup, since the framework needs to set up kernels and send instructions to all the parallel units. This is not something that will affect our final product, however, because it is a one time cost for our persistent system.

In addition to converting our model to MXNET, I also changed the model’s input layer to accept 10 images at a time rather than 1. Doing so allows more work to be done in a single inference, lowering the latency overhead of my phase. Because the number of images per inference will be a fixed value for a given model, I will make sure to tune this parameter to lower the number of “empty” inferences completed as we define the our testing data set (how many characters per scan etc.). It is also possible that as the input layer becomes larger, CPU inference becomes less efficient while GPU inference is able to parallelize, leading to better performance using TensorRT/CUDA.

Finally, I was able to modify output of my classification model to include confidence (inference probabilities) and the next N best predictions. This should help optimize post-processing to our problem space by narrowing the scope of the spell check search.

I did not have the opportunity this week to retrain / continue training the existing model using images passed through Jay’s pre-processing pipeline. However, as the details of the pipeline are still developing, this may have been a blessing in disguise. Next week, I will be focused on measuring the current models performance (accuracy, latency) using different pre-processing techniques and inference providers, as well as measuring cross-validation accuracy of our final training dataset. This information will be visualized and analyzed in our final report and help inform our final design. In addition, I will also be integrating the trigger button for our final prototype.