What are the most significant risks that could jeopardize the success of the project?

- Pre-Processing: Currently, pre-processing has been fully implemented to its original scope.

- Classification: Classification has achieved 99.8% accuracy on the dataset it was trained on (using an 85% training set, 5% validation set). One risk is that the model requires on average 0.0125s per character, but the average page of braille contains ~200 characters. This amounts to 2.5s of latency (200 × 0.0125s), which breaches our latency requirement. However, this requirement was written on the assumption that each image would have around 10 words (~60 characters), in which case latency would be roughly 0.75s.

- Post-Processing: Spellchecking is a broad and complex problem. One of the main tradeoffs we have had to make for this project is between complexity and efficiency, while also setting realistic expectations for the time we are allocated. The main risk here is oversimplifying in a way that overlooks certain errors, which could put our final output at risk.

- Integration: The majority of our pipeline has been integrated on the Jetson Nano. As we have communicated well between members, calls between phases are working as expected. We have yet to measure the latency of the integrated software stack.
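The classification latency figures above follow from a simple linear estimate. A minimal sketch of that arithmetic is below, assuming latency scales linearly with character count; the function name `estimate_latency` is hypothetical and used only for illustration, and the per-character time is the measured average reported above.

```python
# Average classification time per character, as reported above (seconds).
SECONDS_PER_CHAR = 0.0125


def estimate_latency(num_chars: int, seconds_per_char: float = SECONDS_PER_CHAR) -> float:
    """Estimate classification latency, assuming cost is linear in character count."""
    return num_chars * seconds_per_char


if __name__ == "__main__":
    # Character counts taken from the report: a full page vs. ~10 words.
    for label, n in [("average page", 200), ("around 10 words", 60)]:
        print(f"{label}: {n} chars -> {estimate_latency(n):.2f} s")
```

This only covers the classification phase. The integrated stack adds pre-processing and post-processing time on top, which has not been measured yet.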

How are these risks being managed?

- Pre-Processing: Further accuracy adjustments are handled in the post-processing pipeline.

- Classification: We are experimenting with migrating back to the AGX Xavier, given our rescoping (below). This could give us a boost in performance such that even wordier pages can be translated under our latency requirement. Another option would be to experiment with making the input layer wider. Right now, our model accepts 10 characters at a time. It is unclear how sensitive latency is to this parameter.

- Post-Processing: The main way to mitigate these risks is thorough testing and analysis of results. By sending different forms of data through the post-processing pipeline specifically, we can see how the algorithm reacts to specific errors.
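One way to explore how sensitive classification latency is to the input width is to time a full page at several widths. The sketch below is only an outline under stated assumptions: `fake_classify_batch` is a hypothetical stand-in for the real model call, and `batches_needed` and `time_batched` are illustrative helper names, not part of our codebase.

```python
import math
import time
from typing import Callable, Sequence


def batches_needed(num_chars: int, width: int) -> int:
    """Number of forward passes needed if the model accepts `width` characters at a time."""
    return math.ceil(num_chars / width)


def time_batched(classify_batch: Callable[[Sequence], object],
                 chars: Sequence, width: int) -> float:
    """Wall-clock seconds to classify `chars` in chunks of `width`."""
    start = time.perf_counter()
    for i in range(0, len(chars), width):
        classify_batch(chars[i:i + width])
    return time.perf_counter() - start


def fake_classify_batch(batch: Sequence) -> list:
    """Hypothetical placeholder; swap in the real model call when measuring."""
    return [0 for _ in batch]


if __name__ == "__main__":
    page = list(range(200))  # stand-in for ~200 cropped character images
    for width in (10, 20, 50):
        elapsed = time_batched(fake_classify_batch, page, width)
        print(f"width={width}: {batches_needed(len(page), width)} passes, {elapsed:.6f} s")
```

At the current width of 10, a 200-character page needs 20 forward passes. Whether a wider input actually lowers total latency depends on how the per-pass cost grows with width, which is what a sweep like this would measure on the target hardware.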

What contingency plans are ready?

- Classification: If we are unable to meet requirements for latency, we have a plan in place to move to AGX Xavier. The codebase has been tested and verified to be working as expected on the platform.

- Post-Processing: At this point, no contingency plans are needed given how everything is coming together.

- Integration: If the latency of the integrated software stack exceeds our requirements, we can give ourselves more headroom by moving to the AGX Xavier. Moving to the Xavier has been part of the plan since early in the project, because we were not able to use it at the beginning of the semester.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)?

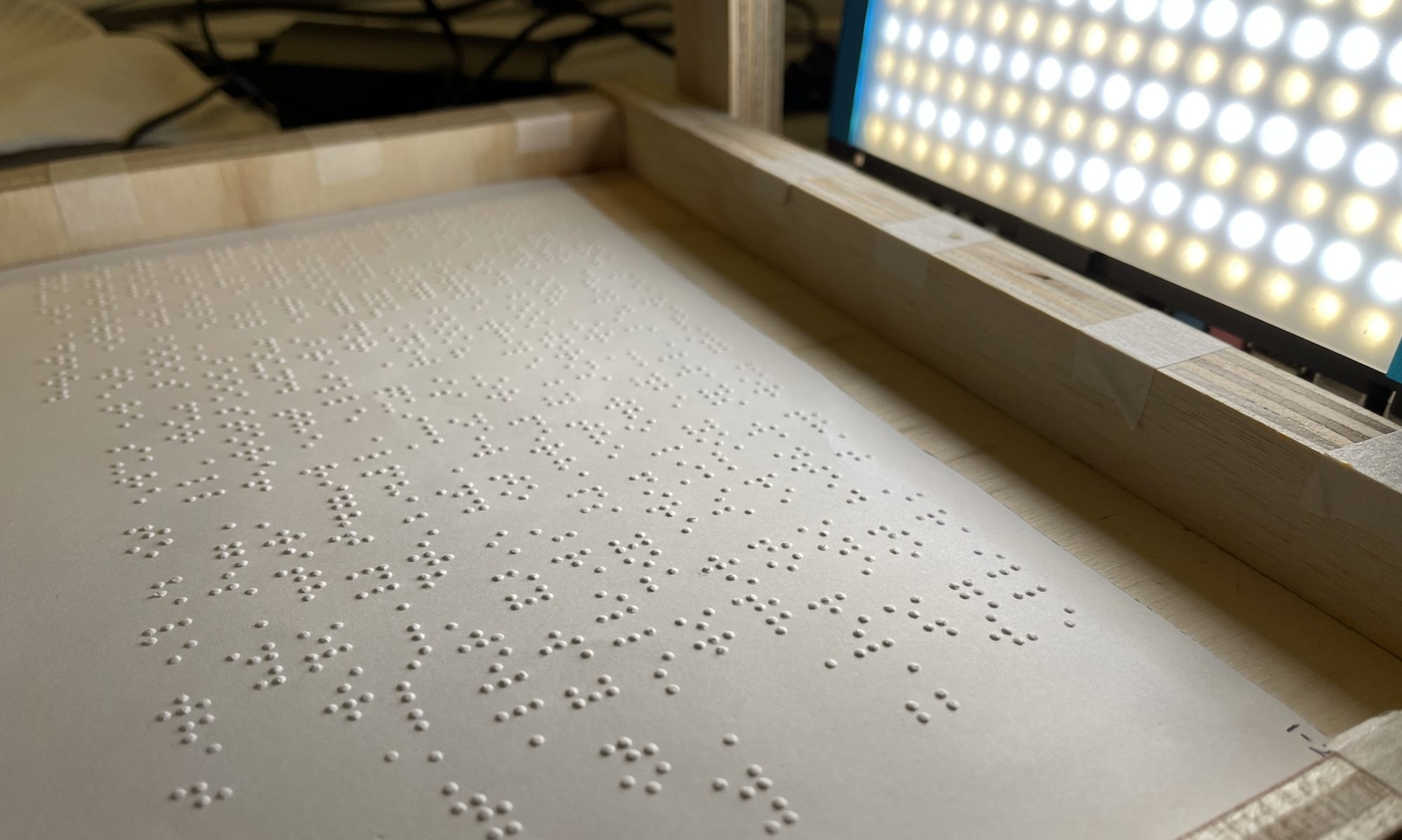

Based on our current progress, we have decided to re-scope the final design of our product to be more in line with our initial testing rig design. This would make our project a stationary appliance that would be fixed to a frame for public/educational use in libraries or classrooms.

Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

This change was necessary because we felt that the full wearable product would make processing too complex and would be too big a push for the time we have left. While less flexible, we believe that this design is also more usable for the end user, since the user does not need to gauge how far to hold the document from the camera.

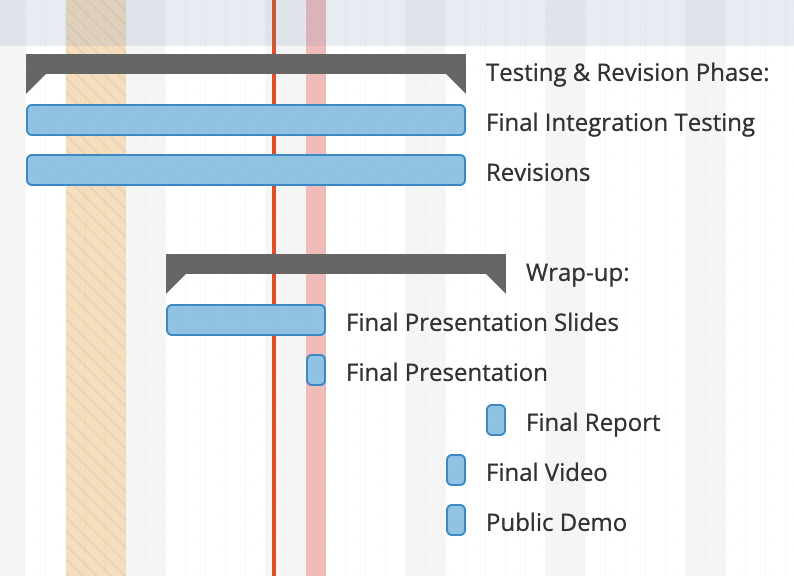

Provide an updated schedule if changes have occurred.

Based on the changes made, we have extended integration testing and revisions until the public demo, giving us time to make sure our pipeline integrates as expected.

How have you rescoped from your original intention?

As mentioned above, we have decided to re-scope the final design of our product to be a stationary appliance, giving us control over distance and lighting. This setup better suits our current progress and allows us to meet the majority of our requirements and deliver a better final demo without rushing major changes.