This week, I spent an unexpectedly large share of my time setting up the Jetson Nano with our camera. Unfortunately, the latest driver for the e-CAM50/CUNX-NANO camera we had chosen corrupted the Nano’s on-board firmware memory. As a result, even re-flashing the MicroSD card did not fix the issue, and the Nano stayed stuck on the NVIDIA splash screen when booting. To fix this, I had to install Ubuntu on a personal computer and use NVIDIA’s SDK Manager to reflash the Nano board entirely. We will pivot to a USB webcam temporarily while we search for an alternative camera solution (in case the USB webcam proves insufficient). According to the documentation, the Jetson natively supports USB webcams and Sony’s IMX219 sensor (which is also available in our inventory, but seems to provide worse clarity). I am also in contact with e-con Systems (the manufacturer of the e-CAM50) and am awaiting a response on troubleshooting the driver software. For future reference, the driver release I used was R07, on a Jetson Nano 2GB Developer Kit with a 64GB MicroSD card running JetPack 4.6 (L4T 32.6.1).

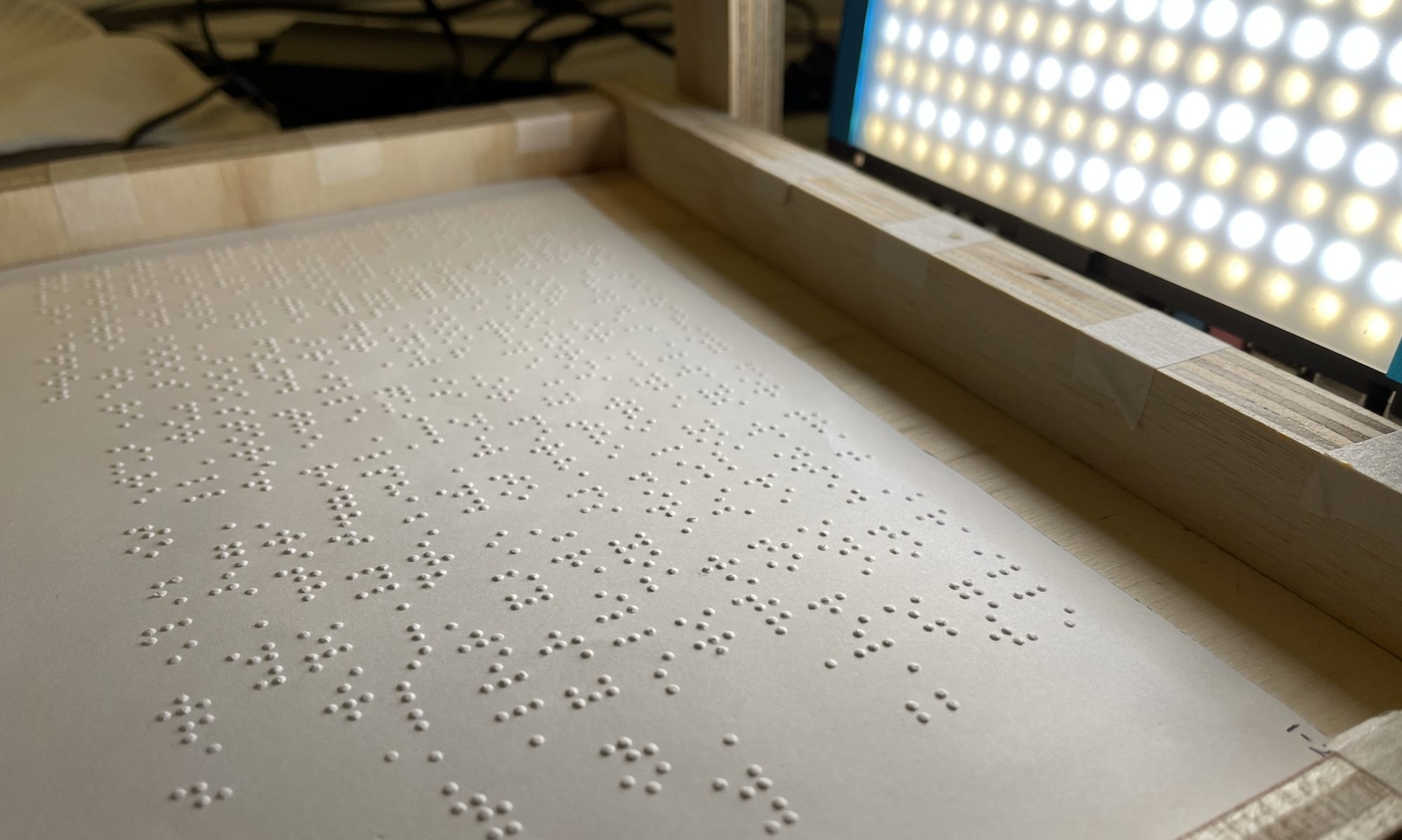

On the image classifier side, I was able to set up a Jupyter notebook on SageMaker for training an MXNet DNN model to classify braille. However, training from scratch for more than 50 epochs with the default suggested settings and the given dataset led to unsatisfactory results (~4% validation accuracy). We will have to tune some parameters before trying again, but we need to be careful not to run too many training jobs given our $100 AWS credit limit. Transfer learning from SageMaker’s pre-trained model (trained on ImageNet), by contrast, allowed the model to converge to a validation accuracy of ~94% or better within 10 epochs. However, this model has not yet been evaluated on a separate test dataset. Once I receive the pre-processing pipeline from Jay, I would also like to run the dataset through our pre-processing and use the result to train and test the models, perhaps even using it for transfer learning on the existing braille model.
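
To make the difference between the two runs concrete, here is a minimal sketch of how the hyperparameters might differ. It assumes the notebook uses SageMaker's built-in Image Classification algorithm, which is an assumption on my part rather than something stated above. The hyperparameter names are the ones that algorithm documents; the helper function and every numeric value (class count, sample count, batch size, learning rate) are hypothetical placeholders, not our actual settings.

```python
def image_classification_hyperparameters(num_classes, num_training_samples,
                                         use_pretrained, epochs):
    """Build a hyperparameter dict for SageMaker's built-in Image Classification.

    Hypothetical helper: the keys are the algorithm's documented hyperparameter
    names, but the fixed values below are illustrative placeholders only.
    """
    if num_classes < 2:
        raise ValueError("num_classes must be at least 2")
    if num_training_samples < 1:
        raise ValueError("num_training_samples must be positive")
    return {
        "num_layers": 18,                    # ResNet depth (placeholder choice)
        "use_pretrained_model": 1 if use_pretrained else 0,  # 1 = start from ImageNet weights
        "image_shape": "3,224,224",          # channels,height,width (placeholder)
        "num_classes": num_classes,
        "num_training_samples": num_training_samples,
        "mini_batch_size": 32,               # placeholder
        "epochs": epochs,
        "learning_rate": 0.01,               # placeholder
    }


# From-scratch run vs. transfer-learning run (class and sample counts are made up).
from_scratch = image_classification_hyperparameters(26, 1500, use_pretrained=False, epochs=50)
transfer = image_classification_hyperparameters(26, 1500, use_pretrained=True, epochs=10)
```

Under this assumption, the only switches between the two experiments are `use_pretrained_model` and `epochs`, which keeps the comparison cheap to repeat within a fixed credit budget.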

One minor annoyance with using an MXNet DNN model is that Amazon seems to be the only company actively supporting the framework. As a result, documentation is lacking on how to deploy the model and run inference without going through SageMaker/AWS. For example, the link to the online documentation for MXNet is currently broken. This matters because we will need to run many inferences to measure the accuracy and reliability of our final and intermediate models, and batch transforms are relatively expensive on AWS.

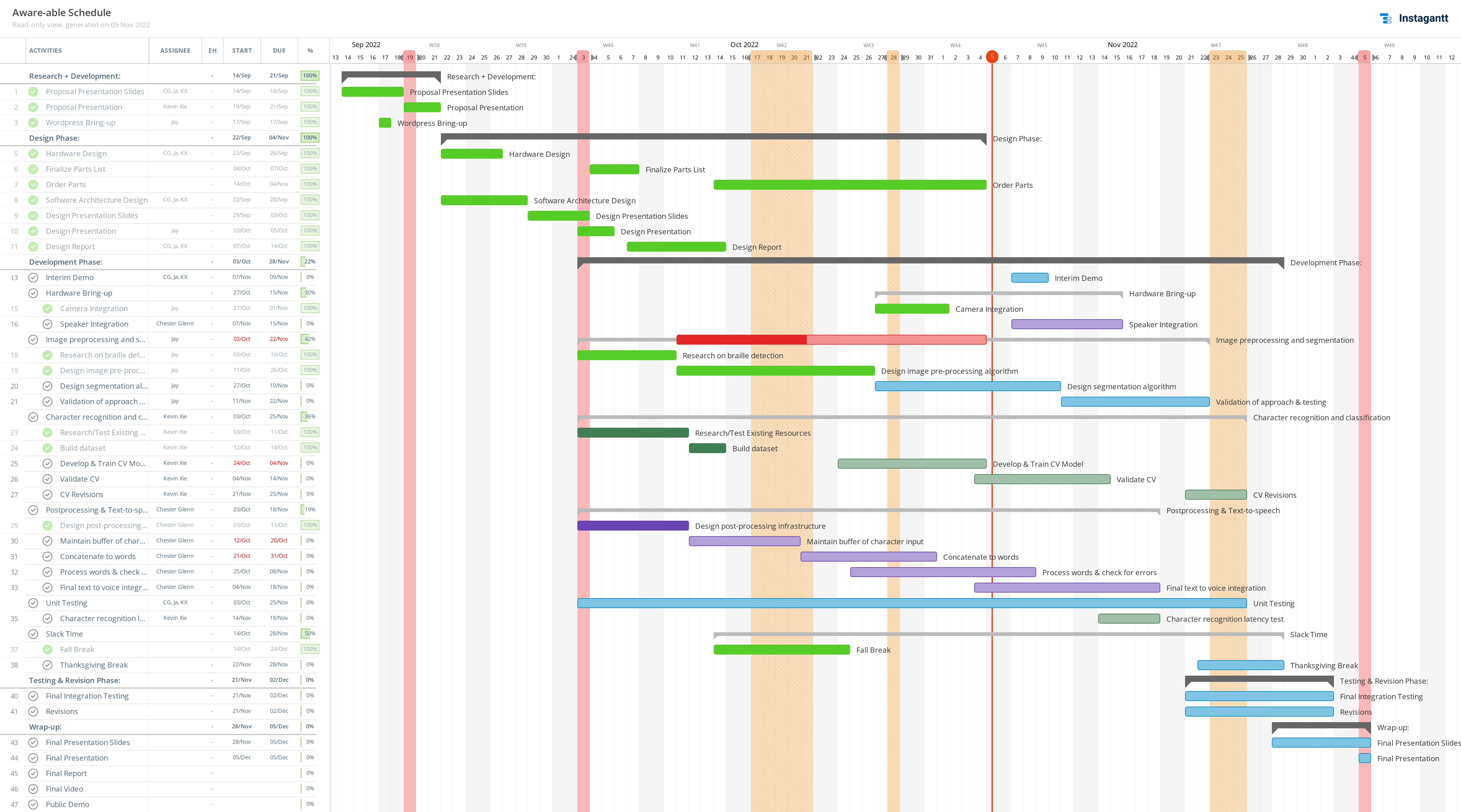

Next week is Interim Demo week, for which we hope to have each stage of our pipeline functioning. This weekend, we expect to complete integration and migration to a single Jetson board, then do some preliminary testing on the entire system. Meanwhile, I will continue tuning the SageMaker workflow to automate (a) model accuracy testing and confusion matrix generation, and (b) intake of new datasets. Once the workflow is low-maintenance enough, I would like to help out with coding other parts of our system. In response to feedback we received from the ethics discussions, I am considering prototyping a feature that tracks the user’s finger as it moves over the braille and uses it as a “cursor” to control reading speed and location. This should help reduce the risk that users over-rely on our device at the expense of learning braille themselves.
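
As a rough idea of what the accuracy and confusion matrix step could look like once predictions are pulled out of SageMaker, here is a minimal pure-Python sketch. The function names and the sample labels are hypothetical and only for illustration; it assumes the true and predicted labels are already available as two equal-length lists.

```python
from collections import Counter


def confusion_matrix(true_labels, predicted_labels, classes):
    """Return a nested dict where matrix[true][predicted] is a count."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    counts = Counter(zip(true_labels, predicted_labels))
    # Counter returns 0 for pairs that never occurred, so every cell is filled.
    return {t: {p: counts[(t, p)] for p in classes} for t in classes}


def accuracy(true_labels, predicted_labels):
    """Fraction of predictions that match the true label."""
    if not true_labels:
        raise ValueError("no labels to evaluate")
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def per_class_recall(matrix):
    """Recall per true class; None when a class has no samples."""
    recall = {}
    for true_class, row in matrix.items():
        total = sum(row.values())
        recall[true_class] = row[true_class] / total if total else None
    return recall


# Made-up labels standing in for braille characters.
truth = ["a", "a", "b", "b", "c", "c"]
preds = ["a", "b", "b", "b", "c", "a"]
matrix = confusion_matrix(truth, preds, classes=["a", "b", "c"])
```

Keeping this step independent of SageMaker means the same evaluation can run on predictions produced locally on the Jetson, which avoids paying for batch transforms just to score a model.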