18-642 Project 9

Updated 6/28/2021. Changelog

Learning objective: create unit tests for a piece of non-trivial software.

This project has you unit-test your turtle's statechart. You will get

experience using CUnit assertions and writing mock functions for unit tests.

You must achieve 100% coverage on the transitions of the statechart, and

achieve 100% branch coverage of the code. You will also compile your code with

warnings enabled; you will have to take care of those warnings in the next project.

We strongly recommend using CUnit if you want to do embedded software

in industry, because it is representative of test frameworks we've seen in the

embedded industry. However, if you really want to use another unit testing

framework such as Google Test that is OK, but no support will be

provided if you run into problems.

Lab Files:

Hints/Helpful Links:

- CUnit documentation

- Please follow the handin naming convention: every filename (before the

file extension) must end in [FamilyName]_[GivenName]_[AndrewID].

- In this and future projects, $ECE642RTLE_DIR refers to

~/catkin_ws/src/ece642rtle (or corresponding project workspace).

- See the Recitation video and slides on Canvas

Procedure:

- Read over the CUnit documentation:

- Writing CUnit Test Cases

- Managing tests and suites (this is good to know at a high level, but do not get

bogged down in the details -- the example code will have all that you need)

- Example code

- Build, run, and study the example system and its unit tests:

- The example code is in $ECE642RTLE_DIR/cUnit_example.

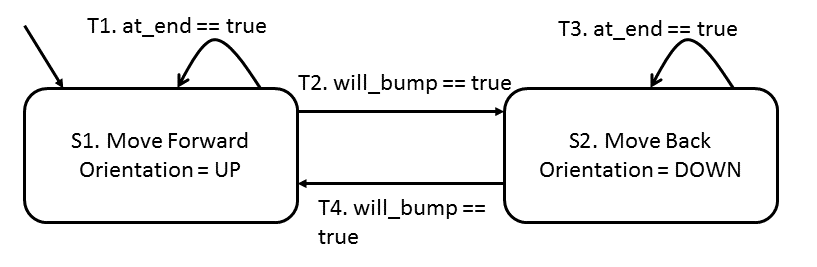

- Study dummy_turtle.cpp. This code implements the following

statechart:

- Note that dummy_turtle.cpp calls two functions

(set_orientation() and will_bump()) that we expect would be

implemented in some file like student_maze. However, in order to unit

test dummy_turtle, we need a way to mock up these functions in our

unit testing framework. We do this by replacing the header file we use while

testing:

#ifdef testing

#include "student_mock.h"

#endif

#ifndef testing

#include "student.h"

#include "ros/ros.h"

#endif

- If testing is #define'd (we do this in the g++

command below), the file uses "student_mock.h". If testing were not

defined, the file would use "student.h" and "ros/ros.h" as

usual.

- The mock functions are implemented in mock_functions.cpp. Study

how mock_orientation and mock_bump are used to mock up the

orientation output and will_bump input variable of the state chart.

- Take a look at how Transitions T1 and T2 are tested in

student_test.cpp (lines 11-17, 19-26). In particular, note that the

at_end input variable is passed as an input to moveTurtle.

Your own code might pass input variables as inputs to a function, or use

methods (such as bumped()) to fetch them, and the example shows both

ways of handling these cases.

- Note that test_t1 and test_t2 test the output (set by

the starting state) and the resultant state. Your tests shall do the same.

- Build the example:

g++ -Dtesting -o student_tests student_test.cpp dummy_turtle.cpp

mock_functions.cpp -lcunit

The -Dtesting flag functions the same as #define testing

would in the file, and -lcunit tells the compiler to link the CUnit

library.

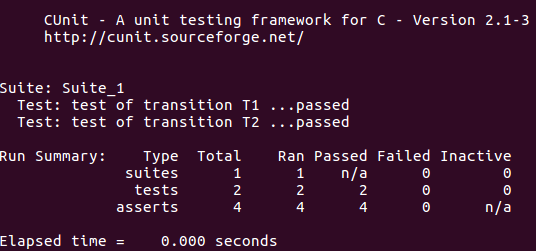

- Run the example (./student_tests) and observe the output. It

should match the following:

- Change something (such as the output orientation in S1) in

dummy_turtle.cpp to make a test fail. Verify that the test fails by

building and running the example again.
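As a rough illustration of the mock pattern described above, the sketch below puts a toy two-state chart, its mocks, and one transition test into a single file. Everything in it is an assumption made for illustration: the State and Orientation enums, move_turtle_sketch(), mock_set_bump(), mock_get_orientation(), and the transition itself are hypothetical and are not the contents of dummy_turtle.cpp or mock_functions.cpp. Plain assert() stands in for CUnit assertions so the sketch compiles without the library; in a CUnit test function, CU_ASSERT_EQUAL(actual, expected) would typically take its place.

```cpp
#include <cassert>

// Hypothetical types; the real example's definitions may differ.
enum State { S1, S2 };
enum Orientation { LEFT, UP };

// --- Mock layer: stands in for the real maze/ROS functions ---
static bool mock_bump_value = false;               // input injected by the test
static Orientation mock_orientation_value = LEFT;  // output inspected by the test

void mock_set_bump(bool b) { mock_bump_value = b; }
Orientation mock_get_orientation() { return mock_orientation_value; }

// Functions the unit under test calls; here they only touch mock state.
bool will_bump() { return mock_bump_value; }
void set_orientation(Orientation o) { mock_orientation_value = o; }

// --- Unit under test: a toy two-state chart (not the course statechart) ---
// Takes the current state and an input, returns the next state.
State move_turtle_sketch(State current, bool at_end) {
  switch (current) {
    case S1:
      set_orientation(LEFT);
      if (!at_end && will_bump()) return S2;
      return S1;
    case S2:
      set_orientation(UP);
      return at_end ? S2 : S1;
    default:
      return S1;
  }
}

// Hypothetical transition T1: S1 -> S2 when not at the end and a bump is ahead.
void test_t1_sketch() {
  mock_set_bump(true);                         // arrange the mocked input
  State next = move_turtle_sketch(S1, false);  // at_end passed as an argument
  assert(mock_get_orientation() == LEFT);      // output set by the starting state
  assert(next == S2);                          // resultant state
}
```

The test checks both the output set by the starting state and the resultant state, which is the shape the transition tests in this project are asked to follow.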

- Use CUnit to test your own student_turtle state

chart implementation.

- Copy the sample student_test.cpp to include your Andrew ID in the file

name: [AndrewID]_student_test.cpp. In our discussion any reference to

"student_test" is to this file with your Andrew ID. This is the file

you shall include in your builds, your hand-ins, etc.

- Also include your name and Andrew ID in that file as a comment in the first

few lines of the file.

- Include the same #ifdef testing blocks as in dummy_turtle.cpp

at the top of student_turtle.cpp. As in the example, implement

mocks of any student_maze functions you call.

- You should be able to use the same student_turtle.cpp in your

regular ece642rtle build and your unit test build without modifying the file

between builds. See the hints for more specifics on this topic.

- Make sure your student_turtle state chart implementation is easy to

instrument for testing -- we recommend moving your main state chart logic to a

routine that takes the current state as one of the inputs and returns the next

state, like in dummy_turtle.cpp.

- You may need to make new getter/setter methods to mock up any data

structures you have (for example, instead of accessing a visit counts array

directly, you may need to create a method called visit_array_at(int,int)).

In some cases the smartest approach will be to modify your code to make

it easier to test rather than deal with an overly difficult mock up. That

tradeoff happens in industry projects too. If there is an aspect of your code

that is particularly difficult to mock up in this way, please talk to us in

office hours.

- Set up the CUnit framework as in the example, and write unit tests for your

code. Provide a script to build and run your tests:

AndrewID_build_run_tests.sh. The architecture of the testing files is

up to you, but remember that all files you create shall have your name and

Andrew ID embedded in the source code as a comment and the primary

student_test file shall have your Andrew ID in the file name as previously

stated.

- You shall use the compiler flag -Dtesting to make

testing defined when building unit tests. (This preprocessor

directive defines "testing" just as if a #define had been placed

in a source code file.) In a Makefile, you can use CPPFLAGS += -Dtesting.

You should not have #define testing anywhere in your code;

enable it only in the build commands, so that we can grade your project

seamlessly.
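One possible way to apply the accessor advice above is sketched here: the state logic reads visit counts only through a function, so a unit test can substitute a mock for it. The name visit_array_at(int,int) comes from the text; its int return type, mock_set_visit_count(), the TurtleState enum, and decide_next_state_sketch() are all hypothetical and only illustrate the idea. Plain assert() is used in place of a CUnit assertion so the sketch stands alone.

```cpp
#include <cassert>

// Hypothetical states, for illustration only.
enum TurtleState { DECIDE, FORWARD, TURN };

// --- Mock of the accessor. In a regular build this function would read the
// --- turtle's own visit-count array; here it returns whatever the test set.
static int mock_visit_count = 0;
void mock_set_visit_count(int n) { mock_visit_count = n; }
int visit_array_at(int /*x*/, int /*y*/) { return mock_visit_count; }

// State logic as a routine: current state and inputs in, next state out.
// It never indexes the array directly, only calls visit_array_at().
TurtleState decide_next_state_sketch(TurtleState current, int x, int y,
                                     bool bumped) {
  switch (current) {
    case DECIDE:
      if (bumped || visit_array_at(x, y) > 0) return TURN;
      return FORWARD;
    case FORWARD:
    case TURN:
      return DECIDE;
    default:
      return DECIDE;
  }
}

// Hypothetical transition: DECIDE -> TURN when the cell was already visited.
void test_decide_to_turn_when_visited() {
  mock_set_visit_count(2);
  assert(decide_next_state_sketch(DECIDE, 1, 1, false) == TURN);
}
```

Because the routine depends only on its arguments and on visit_array_at(), a test can reach any input combination by setting the mock, without first driving the turtle around a maze.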

- Your unit tests shall meet the following requirements:

- 100% transition coverage: Test every transition in the statechart.

Write tests according to your state chart diagram and requirements

documentation (not your code). Writing tests based on documentation and running

them on your code is a way of verifying if your implementation matches your

documentation. Annotate code to statechart traceability by including comments

in your unit test code that map to transitions. It is fine for one test to map

to more than one transition, but every transition must be tested and annotated

in the code.

- Up to 100% data coverage: If your transition tests do not cover

all combinations of input variables, write 8 additional tests (if fewer than 8

tests are needed to achieve 100% data coverage, write up to the number of tests

necessary to achieve that coverage) to test these combinations (for example, in

the dummy example, state==s1, at_end==false and will_bump==false is a possible

combination).

- 100% branch coverage: Write any additional tests to achieve 100%

branch coverage in your state chart handling code (including subroutines). This

means you have to figure out how to exercise any default: cases in your switch

statements. Note that this is simple "branch coverage" and not MCDC

coverage. We recommend, but do not require, 100% MCDC coverage.
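The data coverage and branch coverage requirements above can be approached with a table of input combinations, as in the following minimal sketch. It assumes a toy chart with two states and two boolean inputs; ChartState, next_state_sketch(), the Row table, and the expected values are hypothetical and are not taken from the course example. The ErrorDefault value follows the sentinel idea from the Hints section and exists only to reach the default: branch. Plain assert() stands in for a CUnit assertion.

```cpp
#include <cassert>

// Two real states plus a sentinel that is used only by unit tests.
enum ChartState { StateA, StateB, ErrorDefault };

// Toy next-state logic (an assumption for illustration).
ChartState next_state_sketch(ChartState s, bool at_end, bool will_bump) {
  switch (s) {
    case StateA:
      return (!at_end && will_bump) ? StateB : StateA;
    case StateB:
      return at_end ? StateB : StateA;
    default:          // reached only with the sentinel value
      return StateA;  // hypothetical recovery behaviour
  }
}

// One row per input combination. Expected values should come from the
// statechart and requirements, not from reading the code.
struct Row {
  ChartState s;
  bool at_end;
  bool will_bump;
  ChartState expected;
};

static const Row kRows[] = {
    {StateA, false, false, StateA},
    {StateA, false, true, StateB},
    {StateA, true, false, StateA},
    {StateA, true, true, StateA},
    {StateB, false, false, StateA},
    {StateB, false, true, StateA},
    {StateB, true, false, StateB},
    {StateB, true, true, StateB},
    {ErrorDefault, false, false, StateA},  // exercises the default: branch
};

// Returns the number of rows whose actual next state differs from expected.
int count_mismatches() {
  int mismatches = 0;
  for (const Row &r : kRows) {
    if (next_state_sketch(r.s, r.at_end, r.will_bump) != r.expected) {
      ++mismatches;
    }
  }
  return mismatches;
}

void test_all_rows() { assert(count_mismatches() == 0); }
```

In a real suite each row would more likely be its own annotated test, so that a failure message points at a single combination.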

- Build and run your tests.

- Spend at least one hour fixing any failing unit tests (it is OK to spend

less than one hour if all tests are fixed). Take note of any tests you did not

fix. You will have to pass all your unit tests by the next Project.

- Update your build package.

- Create a build package according to the same requirements as Project 8,

including leaving all compiler warnings enabled and using the -Werror flag.

- Ensure that the build takes into account unit testing. It is OK to rely on

cunit to provide as much of the following sub-requirements as you can:

- Add execution of all unit tests as part of ANDREWID_build.sh via

running AndrewID_build_run_tests.sh

- It shall be obvious to a TA when grading that unit tests have been run

(e.g., via a message displayed on the terminal that unit tests are running)

- It shall be obvious to a TA when grading which unit tests have failed

(e.g., via messages displayed on the terminal noting each unit test that fails)

- Note that for this project not all unit tests are expected to pass, but all

must be run by the script.

- Answer the following questions in a writeup:

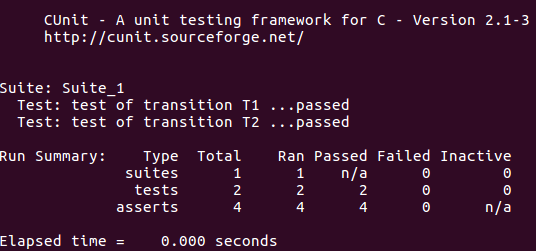

- Include a screen capture or output file capture snippet that shows the

output of your unit testing, showing tests running and any tests that have

failed.

- Make an argument that you achieved 100% transition coverage, 100% (or

approaching) data coverage, and 100% branch coverage. You do not have to

describe every obvious test case, but talk about how you handled any special

cases and your general strategy.

- Did you have any failing tests before you fixed things? Why did they fail?

For the ones you fixed, how was the experience of fixing them?

- List any tests that are failing at the time of turn-in. Briefly give a

plan for fixing them for the next project.

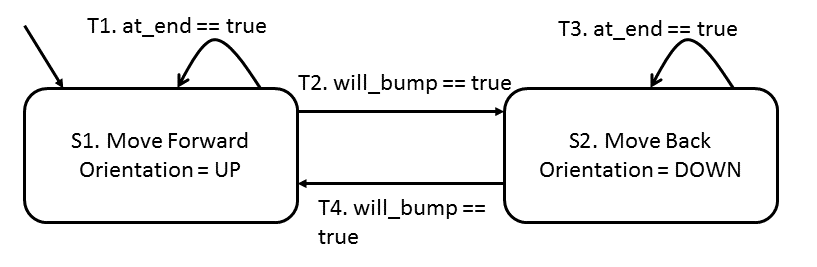

- Include your statechart, including any updates you made for this project.

It should match the transition tests you wrote. Your statechart should reflect

the code you submit. We strongly suggest you update other parts of your design,

but you do not have to turn them in.

- Do you have any feedback about this project? (Include your name in the

writeup)

- Note: The next project will have you fix all the failing unit tests you

encountered in this Project, and will also have you implement an invariant.

Since there is some slack built in to this project that carries over to the

next project, plan your time accordingly. You should get all your unit tests

passing this week, but that is not a strict requirement.

Handin checklist:

Hand in the following:

- Your build file: AndrewID_P09.tgz

- Your writeup, called

p09_writeup_[FamilyName]_[GivenName]_[AndrewID].pdf. The elements of the

writeup should be integrated into a single .pdf file. Please don't make the TAs

sift through a directory full of separate image and text files.

Other requirements:

- Use the usual project naming conventions with a prefix of "p09"

for items other than source code.

- The writeup shall be in a single Acrobat (.pdf) file for ease of navigation.

- Fonts for all diagrams and text shall be rendered at no smaller than 10

point. Smaller fonts will require a resubmission.

Zip the two files and submit them as P09_[Family name]_[First name]_[Andrew ID].zip.

The rubric for the project is found here.

Hints:

- There are no points for mazes this week, but that is coming.

We strongly suggest you see how your code is doing on mazes 1 through 6

so you can plan effort for the next project.

- Hint for getting 100% branch coverage on switch statements: define an extra

enum value just for unit testing. For example, if your switch has four cases

for north/south/east/west, define the enums to be: {North, South, East, West,

ErrorDefault}. Do not have a case for ErrorDefault -- that is there only for

unit testing and is there to exercise the default case. That lets you still

test the switch statement while using the type-correct enum value.

- If you find it really difficult to write unit tests, the problem isn't

the unit test framework; it's your code. Remember MCC/SCC? This is where high

complexity comes back to bite you.

- Minimize use of static variables of all types

- Keep reasonable complexity to make unit testing viable

- Provide interfaces designed to make testing easier

- Using globals instead of file static to make testing easier is A REALLY BAD

IDEA (see next point)

- Nonetheless, you'll find out that unit testing with file static variables

can be painful because you need to set the variables to a certain value

before running a test. This is a perennial problem with unit testing in industry,

so you get a taste in this project. For example, what if you need to test

different turtle orientations in different unit tests; how do you get the

turtle in that orientation before you run the unit test? Here are some general

techniques you can use to set file static variable state for unit testing. Some

are good ideas. Some aren't. The first approaches are the least scary. The last

approaches are the ones I most often see in industry, and are generally

problematic. You can do whichever you like -- but don't come crying to us if an

ugly hack blows up in your face. (See also:

https://stackoverflow.com/questions/593414/how-to-test-a-static-function)

- Use test code to put the unit in the correct state via a sequence of calls.

- Example: issue a bunch of move commands to the turtle, then run the unit

test.

- Pro: minimally intrusive

- Con: makes unit test design more difficult; can require lots of code other

than unit to execute; unit tests can break if system design changes.

- Add public get and set functions for the file static variables

- Pro: better than making them global

- Con: exposes internal state you don't want any other code seeing; breaks

encapsulation; involves writing extra code only used for testing

- Variant: conditionally compile so the set/get functions only needed for

unit test are only compiled when compiling for unit tests. This fixes the

encapsulation issues for production code. This means surrounding these

definitions with #ifdef testing .... #endif.

- #include the .c file in the test file

- Hack. Often causes complications on large projects. If you don't understand

how/what/why on your own you're not allowed to do this (don't ask for help on

how to do this).

- Pro: if done a certain way you no longer need the "#ifdef

testing" trick to deal with file static declarations.

- #ifdef testing

#define FileStatic

#else

#define FileStatic static

#endif

- Ugly Hack. And therefore the one I see most often in industry. But it's

still ugly. (Even worse is conditionally changing "static" to be

blank, which breaks local static variable definitions.)

(If you have hints of your own please e-mail them to the course staff and,

if appropriate, we'll add to this list.)
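The conditionally compiled get/set variant from the list above might look roughly like the following sketch. The Heading enum, current_heading, turn_right_sketch(), test_set_heading(), and test_get_heading() are hypothetical names. The guarded #define at the top is there only so this fragment compiles by itself; in the project, testing must come from the -Dtesting flag and never from a #define in source. Plain assert() stands in for a CUnit assertion.

```cpp
#include <cassert>

// For this standalone sketch only. In the project, `testing` is supplied by
// the -Dtesting compiler flag and is never #define'd in a source file.
#ifndef testing
#define testing
#endif

enum Heading { NORTH = 0, EAST = 1, SOUTH = 2, WEST = 3 };

// File static state: not visible to other translation units.
static Heading current_heading = NORTH;

// Production interface (hypothetical): rotate the heading 90 degrees clockwise.
void turn_right_sketch() {
  current_heading =
      static_cast<Heading>((static_cast<int>(current_heading) + 1) % 4);
}

#ifdef testing
// Test-only accessors. They are compiled only for unit test builds, so the
// production build does not expose the internal state.
void test_set_heading(Heading h) { current_heading = h; }
Heading test_get_heading() { return current_heading; }
#endif

// The test puts the unit into a known state directly instead of issuing a
// sequence of turn commands first.
void test_turn_right_from_west() {
  test_set_heading(WEST);
  turn_right_sketch();
  assert(test_get_heading() == NORTH);
}
```

The trade-off is the one named in the list: some extra code exists only for testing, in exchange for not having to drive the unit into each starting state through its public interface.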

Changelog:

- 6/28/2021: Released.

- 10/20/2023: Updated with font size requirement.