Detects all obstacles that are within 3.5 meters (FOV: 90° horizontal and 70° vertical)

Depending on the distance between the user and each obstacle, the 10 vibration motors independently deliver vibrations of different strengths

Allows optional location/room detection and notification via sound

The whole system is controlled by 3 simple voice commands: “start”, “location”, and “stop”

Response time is less than 250 ms

Entire system lasts up to 2.2 hours

Durable

Lightweight

Easy to wear & take off

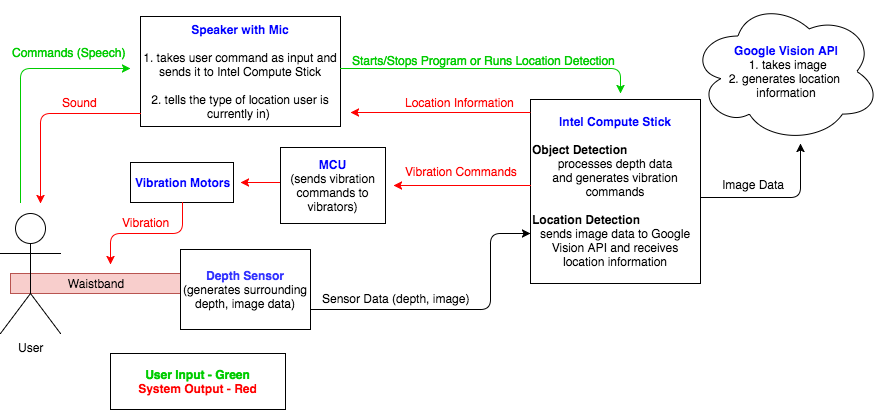

This diagram shows how our components interact with each other.

Once the user starts the program by saying “start” into the mic, the Intel Compute Stick receives depth data from the depth sensors and generates vibration commands. These commands are sent to the MCU, and the corresponding vibration motors on the waistband vibrate.
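The depth-to-vibration step can be illustrated with a minimal sketch. It is not the project's actual code: it assumes the 10 motors each cover one vertical strip of the horizontal field of view, that the depth frame is a list of rows of distances in meters, that a reading of 0 means "no data", and that intensity scales linearly with closeness. The function names are hypothetical.

```python
MAX_RANGE_M = 3.5   # detection range stated in the feature list
NUM_MOTORS = 10     # vibration motors on the waistband


def nearest_per_zone(depth_rows, num_zones=NUM_MOTORS):
    """Return the nearest valid distance (meters) in each vertical strip, or None."""
    width = len(depth_rows[0])
    nearest = [None] * num_zones
    for row in depth_rows:
        for x, distance in enumerate(row):
            # Assumption: 0 marks a missing reading; ignore anything out of range.
            if distance <= 0 or distance > MAX_RANGE_M:
                continue
            zone = min(x * num_zones // width, num_zones - 1)
            if nearest[zone] is None or distance < nearest[zone]:
                nearest[zone] = distance
    return nearest


def intensity(distance_m, max_level=255):
    """Map a distance to a vibration level: closer obstacles vibrate harder."""
    if distance_m is None:
        return 0
    return round(max_level * (1 - distance_m / MAX_RANGE_M))


def vibration_command(depth_rows):
    """Build one intensity value (0-255) per motor from a depth frame."""
    return [intensity(d) for d in nearest_per_zone(depth_rows)]
```

With this mapping, each motor responds only to the closest obstacle in its own strip, which is one way to realize the "independent" feedback described above.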

To detect the current location, the user can say “location”. This triggers the Intel Compute Stick to capture an RGB image from the depth sensor and send it to Google’s Cloud Vision API. Cloud Vision analyzes the objects in the image and generates a location label, such as “kitchen” or “bathroom”. This label is then delivered to the user as sound.
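As a rough illustration of how object labels could be reduced to a single location label, the sketch below sums the confidence scores of labels that hint at each room. This is an assumption for illustration only, not the project's method: the `ROOM_HINTS` table, the `guess_room` function, and the 0.5 score threshold are all hypothetical, and the input is simply a list of `(description, score)` pairs of the kind a label-detection service returns.

```python
# Hypothetical lookup table: objects that suggest a given room.
ROOM_HINTS = {
    "kitchen": {"refrigerator", "oven", "sink", "countertop"},
    "bathroom": {"toilet", "bathtub", "shower", "sink"},
}


def guess_room(labels, min_score=0.5):
    """Pick the room whose hint objects carry the highest total score."""
    totals = {room: 0.0 for room in ROOM_HINTS}
    for description, score in labels:
        if score < min_score:
            continue  # skip low-confidence labels
        name = description.lower()
        for room, hints in ROOM_HINTS.items():
            if name == room or name in hints:
                totals[room] += score
    best = max(totals, key=totals.get)
    return best if totals[best] > 0 else None


labels = [("Refrigerator", 0.9), ("Sink", 0.8), ("Toilet", 0.3)]
print(guess_room(labels))
```

Returning `None` when nothing matches lets the caller tell the user that the location could not be determined instead of announcing a wrong room.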

To shut the device down, the user can say “stop”.
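The three voice commands can be viewed as a small state machine. The following is a sketch under assumptions: the state names, the action strings, and the rule that “location” is only honored while the device is running are hypothetical and not taken from the project's code.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    RUNNING = "running"
    STOPPED = "stopped"


def handle_command(state, word):
    """Return (new_state, action) for a recognized word; action is None if ignored."""
    word = word.strip().lower()
    if word == "start" and state is State.IDLE:
        return State.RUNNING, "begin_obstacle_detection"
    if word == "location" and state is State.RUNNING:
        return State.RUNNING, "announce_location"
    if word == "stop" and state is not State.STOPPED:
        return State.STOPPED, "shut_down"
    # Unknown words and commands that do not apply leave the state unchanged.
    return state, None
```

Keeping the transition logic in one pure function makes it easy to test without a microphone or speech recognizer attached.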

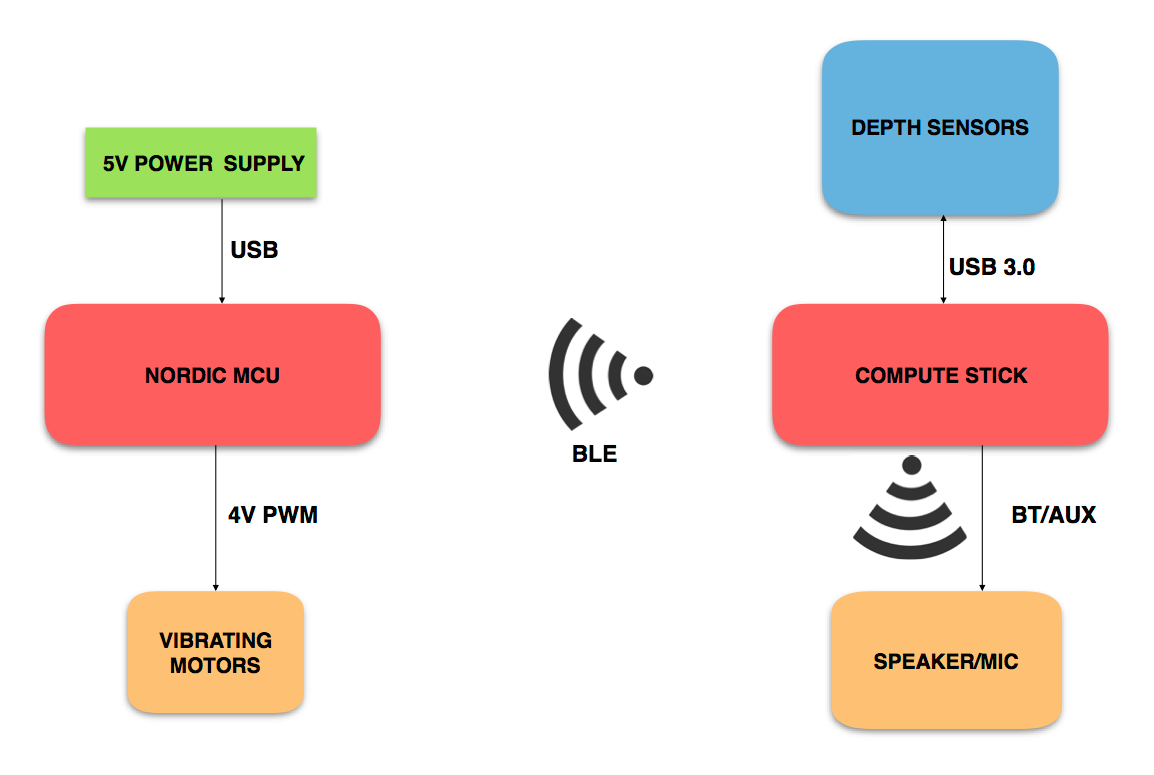

This diagram shows the two primary processing blocks. The left block receives commands over BLE and drives the haptic feedback system. The other block processes information from the depth sensors and sends the relevant output to the speaker via an aux cable.
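To make the BLE link concrete, here is a sketch of how one vibration command could be packed into a single payload. The frame layout (a start byte, 10 intensity bytes, and a one-byte checksum) is purely hypothetical, as are the `0xA5` marker and the function names; the project's real packet format is not described here. At 12 bytes, such a frame would fit within the 20-byte payload available at the default BLE ATT MTU.

```python
import struct

NUM_MOTORS = 10
HEADER = 0xA5  # hypothetical start-of-frame marker
FRAME_FORMAT = f"<B{NUM_MOTORS}BB"  # header, one byte per motor, checksum


def encode_command(levels):
    """Pack 10 motor levels (0-255) into a 12-byte frame."""
    if len(levels) != NUM_MOTORS:
        raise ValueError("expected one level per motor")
    if any(not 0 <= level <= 255 for level in levels):
        raise ValueError("levels must be in 0..255")
    checksum = sum(levels) & 0xFF
    return struct.pack(FRAME_FORMAT, HEADER, *levels, checksum)


def decode_command(payload):
    """Unpack a frame and verify its header and checksum."""
    header, *levels, checksum = struct.unpack(FRAME_FORMAT, payload)
    if header != HEADER or checksum != (sum(levels) & 0xFF):
        raise ValueError("corrupt frame")
    return levels
```

The checksum is a simple guard against truncated or corrupted frames; a real design might instead rely on the link layer's own integrity checks.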

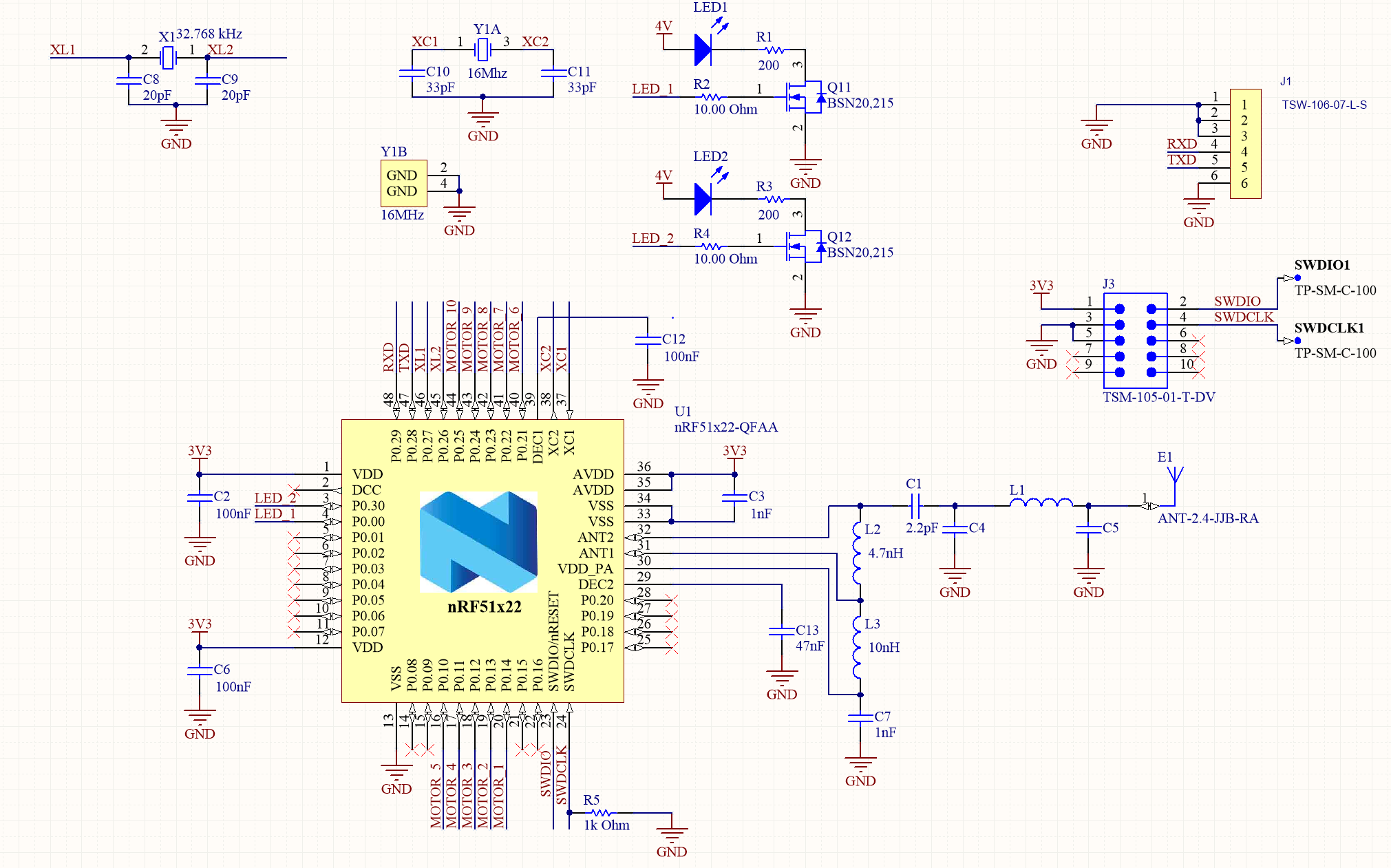

This schematic primarily shows how the MCU interfaces with the components it needs to operate. It also shows the connection between the antenna and the MCU.

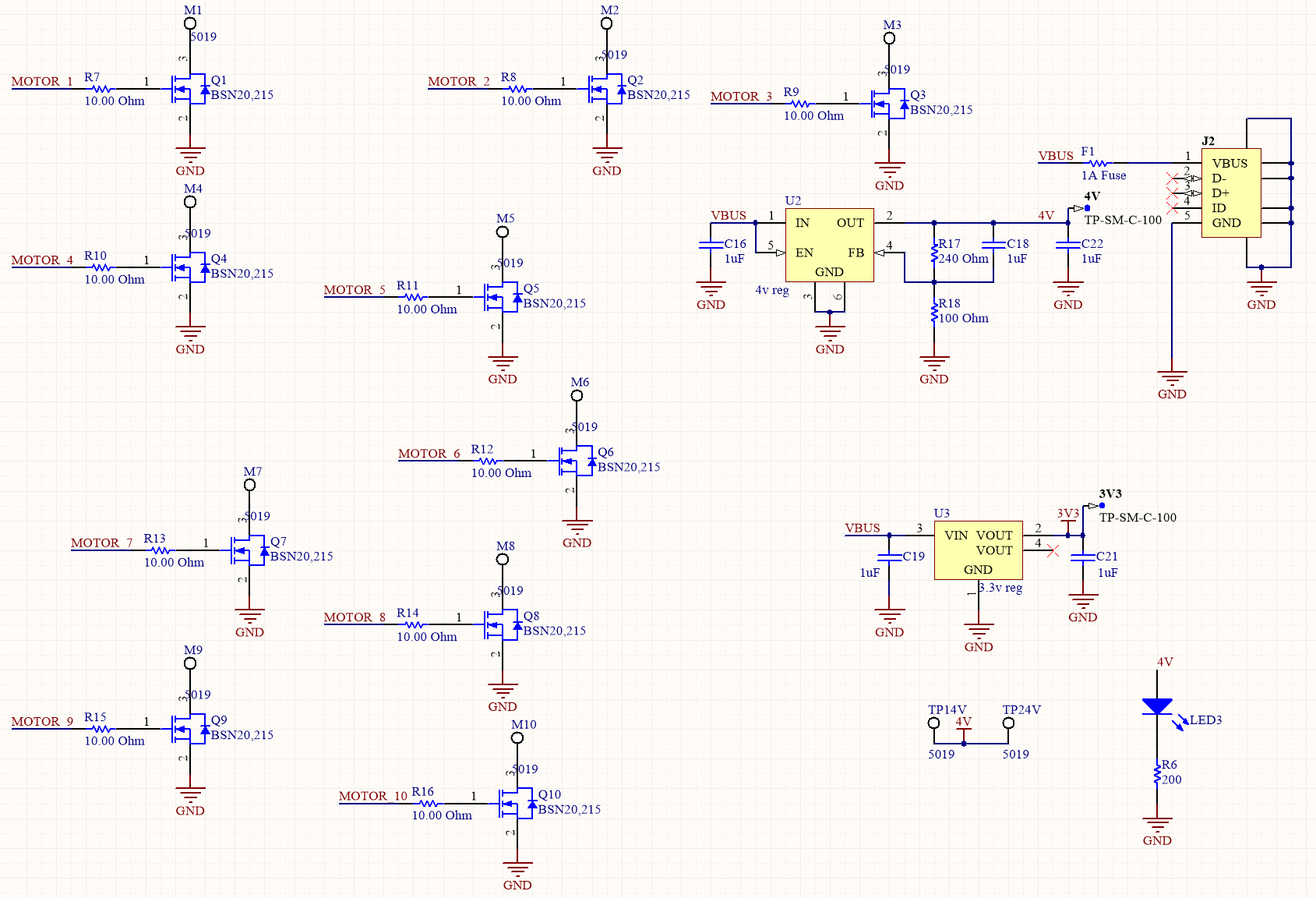

This schematic shows all the components needed for power regulation on the board and for PWM control of the vibration motors.

Vendor: Newegg

Price: $99

Library for capturing data from Intel RealSense cameras (C/C++)