The most significant risk remains unchanged: a delay of the MVP. We are mitigating this risk by ordering spare components from the ECE inventory to substitute for our actual desired components. We have no contingency plan beyond this, because the MVP is a crucial milestone that cannot be circumvented. However, we are testing each part of our design as it is completed, so we are confident the parts will work when we assemble them into the MVP.

We made a few changes to the system design. First, we raised the cloud reliability target from 95% to 97% to reduce downtime risk and ensure timely database lookups for license plate matching, since AWS’s baseline uptime guarantee is closer to 95%. This target is realistic, is consistent with AWS’s published server uptime statistics, and should not change our costs. Second, we refined the edge-to-cloud processing pipeline to improve accuracy and efficiency. Both high- and low-confidence detections are sent to the cloud, but low-confidence results also include an image so that more complex cloud models can verify them. This ensures that uncertain detections receive extra processing while the system stays responsive and scalable.
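The confidence-based routing described above can be sketched as a small decision function on the edge device. This is a minimal illustration, not our actual implementation: the threshold values, the `Detection` type, and the payload field names are all hypothetical. The minimum-confidence floor is also an assumption, loosely following the later note that only matches above a certain confidence level are uploaded.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds; real values depend on how the edge models are calibrated.
HIGH_CONFIDENCE = 0.90  # at or above this, send the plate text only
MIN_CONFIDENCE = 0.50   # assumed floor: below this, nothing is uploaded


@dataclass
class Detection:
    plate_text: str
    confidence: float
    image_jpeg: Optional[bytes] = None  # plate crop captured on the edge device


def build_cloud_payload(det: Detection) -> Optional[dict]:
    """Decide what, if anything, the edge device uploads for one plate read."""
    if det.confidence < MIN_CONFIDENCE:
        return None  # too uncertain to be useful; nothing leaves the device
    payload = {"plate": det.plate_text, "confidence": det.confidence}
    if det.confidence < HIGH_CONFIDENCE:
        # Low-confidence read: attach the image so a larger cloud model can re-check it.
        payload["needs_verification"] = True
        payload["image"] = det.image_jpeg
    else:
        # High-confidence read: the text alone is enough for a database lookup.
        payload["needs_verification"] = False
    return payload


if __name__ == "__main__":
    print(build_cloud_payload(Detection("ABC1234", 0.95)))
    print(build_cloud_payload(Detection("ABC1Z34", 0.70, image_jpeg=b"\xff\xd8")))
    print(build_cloud_payload(Detection("A8C", 0.20)))
```

Sending only text for confident reads keeps uploads small, while attaching the image only for uncertain reads limits bandwidth to the cases where extra cloud processing is actually needed.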

This will not significantly alter the current schedule, as lowering the accuracy requirement will make training easier and potentially quicker.
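For a sense of scale, the 97% cloud reliability target can be converted into a monthly downtime budget. The arithmetic below assumes a 30-day month (720 hours) and an availability $A$ measured over that month; it is illustrative only and does not restate AWS’s actual SLA terms.

$$
\begin{aligned}
T_{\text{down}} &= (1 - A)\,T_{\text{month}} \\
A = 0.97:\quad T_{\text{down}} &= 0.03 \times 720\ \text{h} = 21.6\ \text{h} \\
A = 0.95:\quad T_{\text{down}} &= 0.05 \times 720\ \text{h} = 36\ \text{h}
\end{aligned}
$$

Under these assumptions, raising the target from 95% to 97% cuts the allowed monthly outage by 14.4 hours.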

In addition, we have written the code for running the ML models on the Raspberry Pi; it can be found here.

Part A (Richard):

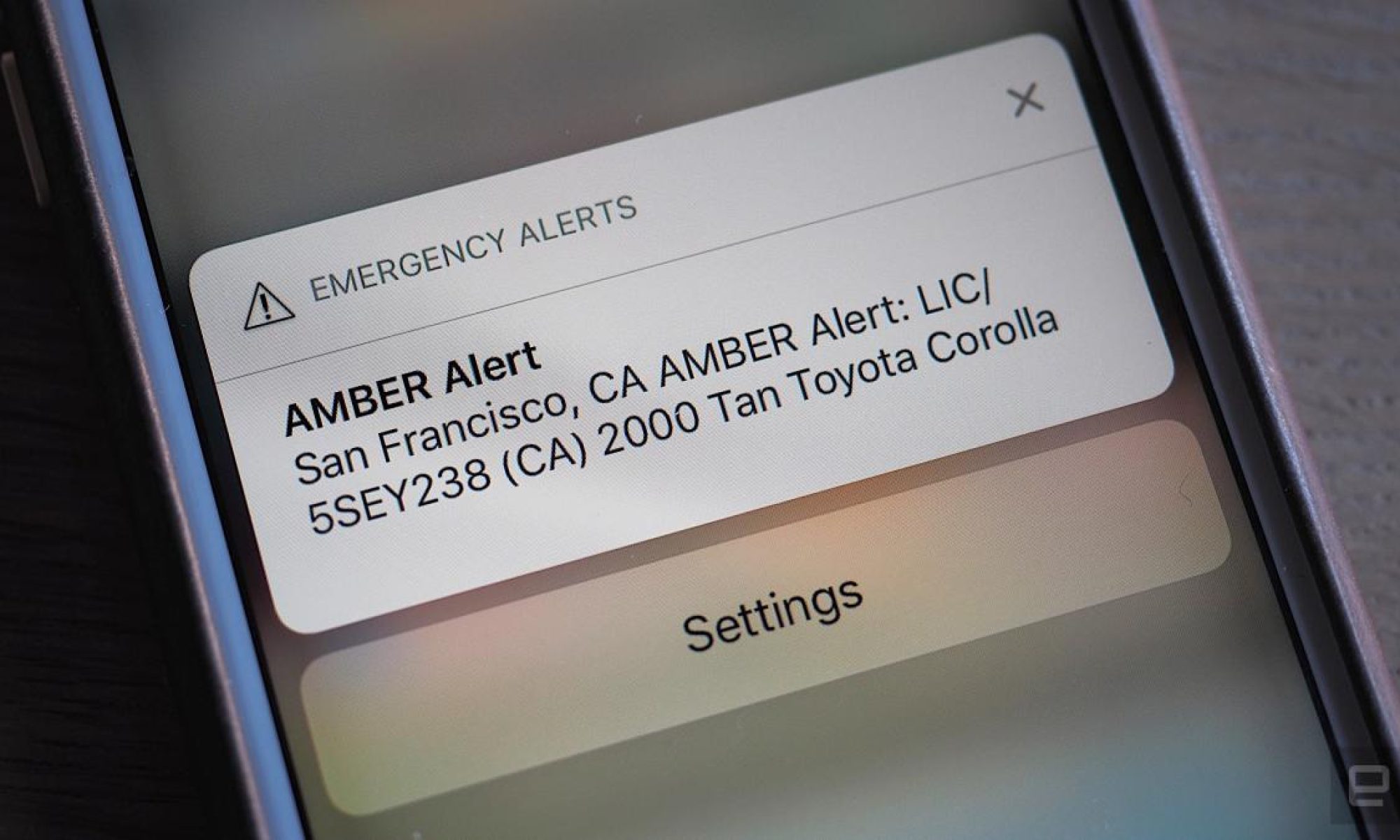

Our design should make the world safer with regard to child kidnapping. If deployed at scale, our device will use other cars on the road to locate the cars of suspected kidnappers quickly and effectively, allowing law enforcement to act as fast as possible. While we currently plan to use the device only with AMBER Alerts, a US system, the design should largely work in other countries. The car and license plate detection models are not trained on the cars and plates of any specific country, and PaddleOCR supports over 80 languages if foreign plates need to be read. This means that countries with a system similar to AMBER Alerts could use our design as well. Our device may also motivate countries that lack such a system to start one, so that they can use our design to better find suspected kidnappers.

Part B (Tzen-Chuen):

CALL sits at a conflicting cross-section of cultural values. Fundamentally, our device seeks to protect children, a universal human priority. It does so through a distributed surveillance network, in the spirit of the saying “it takes a village to raise a child.” By enabling a safer, more vigilant nation, our design reflects values shared across cultures worldwide.

In terms of traditional “American values,” CALL presents a privacy problem. While privacy is not an explicit constitutional right, it is implied by the Fourth Amendment. A widespread surveillance network is bound to raise concerns among the general public. We attempt to mitigate these concerns by sending license plate matches to the cloud only when they meet a certain confidence level, and never sending them to end users. In this way, we balance the shared cultural commitment to child protection with the American tradition of privacy.

Part C (Eric):

Our solution minimizes environmental impact by leveraging edge computing, which reduces reliance on energy-intensive cloud processing; depending on the confidence of the edge model’s output, this lowers both power consumption and data transmission. The system runs on a vehicle’s 12V power supply, eliminating the need for extra batteries and reducing electronic waste. Additionally, its modular design makes it easy to repair and update, extending its lifespan compared with replacing the whole unit. Together, these considerations keep operation efficient while reducing the system’s environmental footprint.