All the details for our final design can be found on the final project documents page.

Richard’s Status Report for 4/26

This week I worked with Eric and Tzen-Chuen on the final presentation. I focused on adding the testing metrics and results and some of the design tradeoffs, and on polishing the presentation throughout. I was the presenter this time, so I also spent time practicing my delivery. In addition, we have finished testing multiple aspects of our system, most notably the webcam and our ML models. Inference results from our ML models were very poor on webcam images but very good on images of ideal quality (taken with an iPhone 13 camera). This showed quantitatively that the webcam was unsuitable, so instead of waiting for the Raspberry Pi Camera Module 3, I ordered a replacement camera: the Arducam IMX519 camera for Raspberry Pi. This camera has a higher-resolution 16MP sensor than the Camera Module 3, has autofocus, and has a sufficient FOV, but it does not have IR capabilities, since I could not find an IR Raspberry Pi camera with a fast enough delivery time. The camera arrived a few days later. I confirmed that it works and updated the code to use it.

My progress is on schedule. By next week I hope to have completed all the deliverables for this capstone project and finished testing this backup camera, which we will use as our main camera going forward.

Team Status Report for 4/19

As with last week, the camera we ordered still has not arrived. While this is concerning, our MVP camera will still serve us well: even though it lacks IR capabilities and a high-resolution sensor, it is more than good enough to demonstrate the functionality of the project as a whole.

There are no changes to the overall design of the project. However, we made a slight change: we are now using AWS Rekognition rather than SageMaker to verify low-confidence matches. We made this change because Rekognition provides built-in text detection, which fits OCR, our main use case for the cloud model, and it is also easier to work with. The cost of this change is minimal, since we had not spent time or resources on the SageMaker portion beyond basic testing. As for the schedule, we are currently testing both the ML models and the camera: ML tests are ongoing, and camera tests will take place tomorrow before the slides are assembled.

Richard’s Status Report for 4/19

This week I worked with Eric on integrating AWS Rekognition into our pipeline. Rekognition will be called by a Supabase function whenever a low-confidence match is uploaded to our database. We chose Rekognition over SageMaker because Rekognition is better suited to our OCR use case, and because we noticed that larger models for cropping cars and license plates had sharply diminishing returns that were not worth the extra computation in the cloud. To accommodate this change, the Raspberry Pi now uploads a cropped image of the license plate in addition to the original image. We have also laid out a testing strategy and have begun testing multiple parts of our device, such as the power supply and cooling solution. Since our camera has been delayed numerous times, we are testing the webcam we are currently using, which unfortunately lacks the resolution and IR capabilities needed for good results at long distances or at night.
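As a rough illustration of the verification step, here is a minimal Python sketch that asks Rekognition to read the cropped plate image and compares the result with the plate from the possible match. It assumes boto3's `detect_text` call on a Rekognition client. In our setup the call is made from a Supabase function, so this is not that function's code; the helper names, the 80% confidence cutoff, and the alphanumeric normalization are hypothetical choices made for the example.

```python
from typing import Any, Optional


def normalize_plate(text: str) -> str:
    """Keep only letters and digits, uppercased (hypothetical normalization)."""
    return "".join(ch for ch in text if ch.isalnum()).upper()


def best_line_text(response: dict[str, Any], min_confidence: float = 80.0) -> Optional[str]:
    """Return the most confident LINE detection from a DetectText response.

    The 80.0 default cutoff is an assumption for this sketch.
    """
    lines = [
        det
        for det in response.get("TextDetections", [])
        if det.get("Type") == "LINE" and det.get("Confidence", 0.0) >= min_confidence
    ]
    if not lines:
        return None
    best = max(lines, key=lambda det: det["Confidence"])
    return normalize_plate(best["DetectedText"])


def verify_plate(rekognition_client: Any, plate_jpeg: bytes, expected_plate: str) -> bool:
    """Ask Rekognition to read the cropped plate and compare it with the edge result.

    `rekognition_client` is assumed to be boto3.client("rekognition") or any
    object with a compatible detect_text method.
    """
    response = rekognition_client.detect_text(Image={"Bytes": plate_jpeg})
    read = best_line_text(response)
    return read is not None and read == normalize_plate(expected_plate)
```

With a real client this might be called as `verify_plate(boto3.client("rekognition"), crop_bytes, "ABC 123")`. Taking the most confident line is a simplification: plate frames with state names or slogans can produce extra confident lines, which a real implementation would need to filter out.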

My progress is on schedule. By next week, I hope to have finished testing and have our final presentation slides ready to present on time.

While implementing the project, I have had to learn a lot about training ML models for computer vision and setting up a database. To learn how to train the models, I watched videos and studied the sample code provided by the makers of the model I decided to use, YOLO11. I chose this model for its widespread support and ease of use, which let me fine-tune it for license plate detection relatively quickly. To set up the database, I read the Supabase documentation and used tools that integrate with Supabase and set up parts of the database for me, specifically Lovable, which we used to build the front-end website.

Team Status Report for 4/12

During demos, we verified that we already have a working, functional MVP. The remaining risk is verifying that the replacement camera we bought works properly with the system, and testing for this will begin as soon as it arrives. However, this is a small risk, as the previous camera already functioned and our backup is sufficient for demonstrating functionality.

There are no changes to the design, as we are in the final stages of the project. Only testing and full SageMaker integration remain.

On the validation side, we plan to test the fully integrated system once we receive and install the replacement camera. We’ll run tests using real-world driving conditions and a portable battery setup to simulate actual usage. We will also test in various lighting conditions.

More specifically, while we have not run comprehensive tests yet, our initial tests of the timing requirement and of database matching meet our requirements of 40 seconds and 100% accuracy, respectively. To test these more comprehensively, we will run 30 simulated matches with different images to make sure all of them meet the timing and matching requirements. Once we receive the camera we will use in our final implementation, we will take images at varying distances and in varying weather and lighting conditions, and measure the precision and recall of our whole pipeline. These images will also be run through platerecognizer.com, a commercial model, to determine whether our models or the camera need improvement. The details of these tests are the same as in our design report. Finally, we will either run the system in an actual car or record a video of a drive with the camera and feed it into the pipeline to simulate normal use, making sure it detects all the matches in the video.
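Precision and recall can be computed per image once each test image is labeled with its ground-truth plate (or with no plate). The Python sketch below is one possible way to score the pipeline. Treating a misread plate as both a false positive and a false negative is an assumption of this sketch, not necessarily the definition in our design report, and the function name is hypothetical.

```python
from typing import Optional, Sequence


def precision_recall(
    predicted: Sequence[Optional[str]], truth: Sequence[Optional[str]]
) -> tuple[float, float]:
    """Score plate reads image by image.

    predicted[i] is the plate the pipeline reported for image i (None if it
    reported nothing); truth[i] is the plate actually in the image (None if
    there is none).
    """
    if len(predicted) != len(truth):
        raise ValueError("predicted and truth must have the same length")
    tp = fp = fn = 0
    for pred, actual in zip(predicted, truth):
        if pred is not None and pred == actual:
            tp += 1  # correct read
            continue
        if pred is not None:
            fp += 1  # reported a plate that is wrong or not there
        if actual is not None:
            fn += 1  # missed or misread a real plate
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall
```

Scoring platerecognizer.com's output on the same images with the same function would make the comparison direct: if the commercial model also scores poorly, the camera images are the more likely bottleneck.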

In the last two weeks, we finalized our MVP and are working on additional features as well as testing.

Richard’s Status Report for 4/12

This week, I worked with Eric on verifying low-confidence matches through the AWS SageMaker platform. This included setting up the AWS service and environment and deploying the models to the cloud. During this process, we also learned about Rekognition, an AWS service for image analysis, so we looked into it as a possibly better option. Last week, I worked on polishing the website so that the interface was clean and bug-free throughout, especially for the interim demo. I also implemented and debugged the GPS code in our pipeline, and that part is working smoothly.

My progress has been on schedule. By next week, I hope to have decided between SageMaker and Rekognition, implemented the cloud verification system, and run tests on our entire pipeline.

Richard’s Status Report for 3/29

This week, I worked with Eric on debugging the camera and verifying the functionality of everything else. We tried many troubleshooting methods on both our Camera Module 3 and the backup Arducam camera. However, no matter what we did, we could not get the Camera Module 3 to be detected or take pictures. The backup camera was detected, but it only took extremely blurry pictures with strange colors, such as blue and pink, despite facing a white wall. Ultimately, we decided to move forward with a USB webcam Tzen-Chuen already had for demos. Apart from the camera, we tested nearly everything else to make sure it ran smoothly. The whole pipeline, from image capture (simulated with a downloaded image file on the Raspberry Pi) to verification as a match, was tested and worked within the timing requirements. In addition, I fixed some bugs in our website, such as images not displaying properly in fullscreen. The link is the same as the previous week.

Apart from the camera issues, my progress has been on schedule. Since we don’t expect integrating the camera into our pipeline to be much of an issue once we have a working one, we don’t expect this to cause much delay. By next week, I hope to add a working camera to our pipeline and then work on the SageMaker implementation with Eric.

Team Status Report for 3/22

A significant risk that could currently jeopardize the success of the project is the camera and the Pi. We were able to take images to test baseline functionality, but when we moved our setup, the camera stopped being detected. Although this can probably be resolved, it will still affect our timeline. If the camera, Pi, or cable is the issue, we need to diagnose it soon and order replacements from inventory or online.

A minor change was made to the system design: we replaced the HTTP POST Supabase edge function with event triggers. This change was necessary because the Raspberry Pi already inserts data directly into the possible_matches table, so also calling the edge function would add complexity compared to having triggers react to those inserts.
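To illustrate the Pi side of this design, here is a minimal Python sketch of inserting a row straight into `possible_matches` with the supabase-py client, leaving any follow-up work to a database trigger on that table. The column names and the helper function are hypothetical, and the client is passed in so the sketch does not depend on real credentials.

```python
from typing import Any


def insert_possible_match(
    supabase: Any, plate: str, confidence: float, latitude: float, longitude: float
) -> dict[str, Any]:
    """Insert one detection into possible_matches (hypothetical column names).

    `supabase` is assumed to be a client from supabase.create_client(url, key).
    The insert itself is what a trigger on the table reacts to, so the Pi makes
    no separate HTTP POST to an edge function.
    """
    row = {
        "plate_number": plate,
        "confidence": confidence,
        "latitude": latitude,
        "longitude": longitude,
    }
    supabase.table("possible_matches").insert(row).execute()
    return row
```

Keeping the Pi's job to a single insert means that anything downstream, such as sending low-confidence rows to the cloud model, can change on the database side without redeploying code to the device.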

Right now, our locally run code is finalized apart from the camera problems, and it can be found here. We also have a working prototype of the website that law enforcement will use to view matches, which can also be found here.

Richard’s Status Report for 3/22

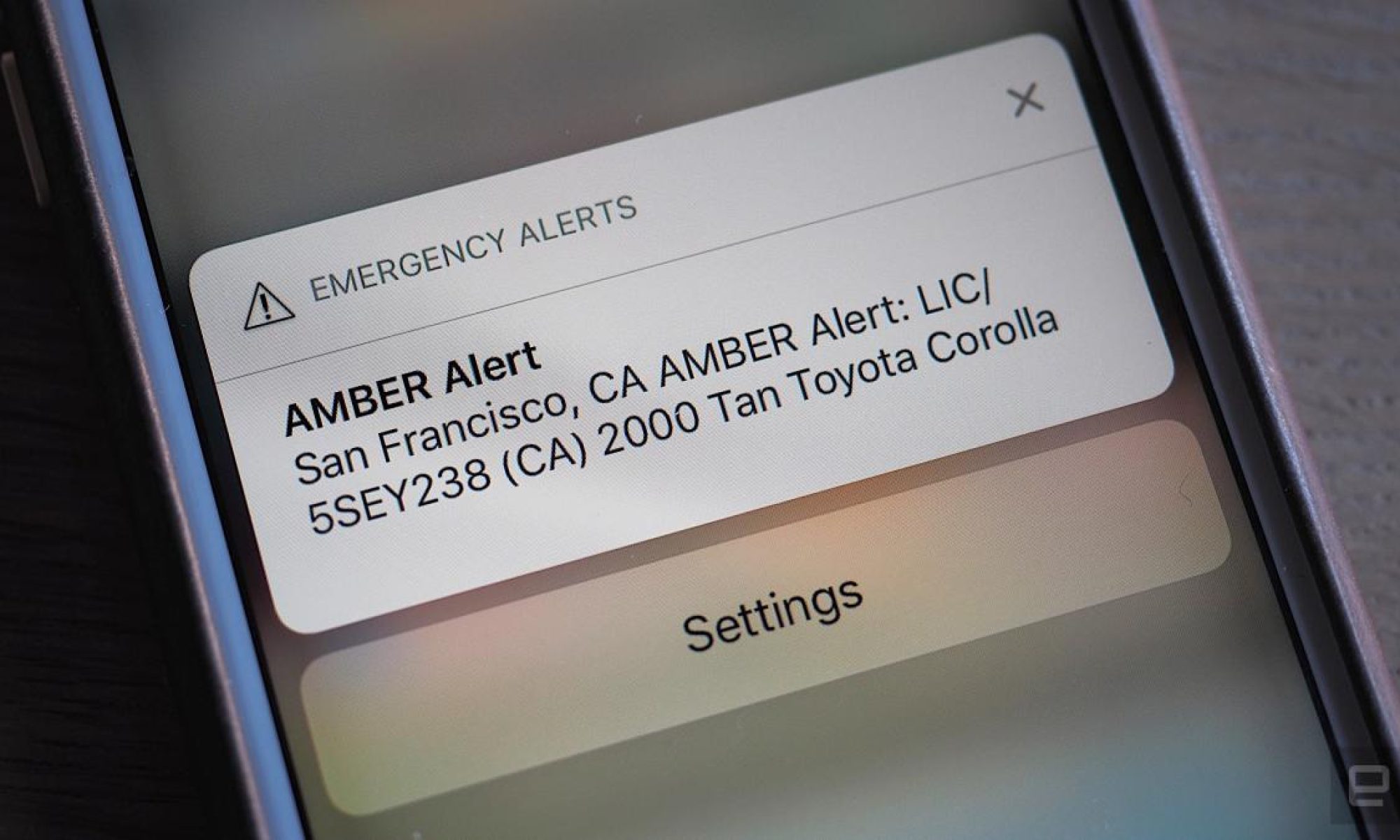

This week I focused on finalizing the locally run code. I added queue functionality: if a match cannot be sent to the database for any reason, such as loss of internet connection, the data is saved locally and retried later. I have also written a main function that takes a picture and sends matches every 40 seconds, checks for database updates every 200 seconds, and retries failed database entries every 200 seconds. Because of some problems we have with initializing the camera, that is the only aspect of the locally run code that is not working right now. The code can be found here. In addition to the locally run code, I have made a prototype of the front-end website using Lovable, which law enforcement can use to look for matches. It shows the currently active AMBER alerts, the possible matches sent to the database, and verified matches, which are possible matches whose results the larger cloud models have confirmed. It also has useful filtering options, such as filtering by confidence level or license plate number. The website can be found here.
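The queue and timing described above can be sketched in Python as follows. This is a minimal illustration, not the linked code: it assumes failed records are JSON-serializable dicts kept in a local file, and `RetryQueue`, `run_schedule`, and the file path are hypothetical names. Only the 40- and 200-second periods come from the description above.

```python
import json
import time
from pathlib import Path
from typing import Any, Callable, Optional, Sequence

Record = dict[str, Any]
Uploader = Callable[[Record], None]  # raises on failure, e.g. no internet


class RetryQueue:
    """Keeps records that failed to upload in a local JSON file."""

    def __init__(self, path: Path) -> None:
        self.path = Path(path)
        # Reload anything left over from a previous run (e.g. after a reboot).
        self.pending: list[Record] = (
            json.loads(self.path.read_text()) if self.path.exists() else []
        )

    def _save(self) -> None:
        self.path.write_text(json.dumps(self.pending))

    def send_or_queue(self, record: Record, upload: Uploader) -> bool:
        """Try to upload now; on any failure, store the record for later."""
        try:
            upload(record)
            return True
        except Exception:
            self.pending.append(record)
            self._save()
            return False

    def retry(self, upload: Uploader) -> int:
        """Retry every stored record; return how many are still pending."""
        still_failed = []
        for record in self.pending:
            try:
                upload(record)
            except Exception:
                still_failed.append(record)
        self.pending = still_failed
        self._save()
        return len(still_failed)


def run_schedule(
    tasks: Sequence[tuple[float, Callable[[], None]]],
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
    max_ticks: Optional[int] = None,
) -> None:
    """Run each (period_seconds, task) whenever its period has elapsed.

    Runs forever unless max_ticks is given (useful for testing).
    """
    last_run = [float("-inf")] * len(tasks)
    ticks = 0
    while max_ticks is None or ticks < max_ticks:
        now = clock()
        for i, (period, task) in enumerate(tasks):
            if now - last_run[i] >= period:
                task()
                last_run[i] = now
        sleep(1.0)
        ticks += 1
```

On the Pi, the task list might look like `[(40, capture_and_send), (200, refresh_alerts), (200, lambda: queue.retry(upload))]`, where `capture_and_send`, `refresh_alerts`, and `upload` are hypothetical stand-ins for the real capture, alert-refresh, and database-upload code. Persisting the queue to disk rather than keeping it only in memory means matches queued during an outage also survive a restart.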

My progress is on schedule. By next week, I hope to implement, with Eric, the process that verifies possible matches and turns them into verified matches, and to have an MVP.

Team Status Report for 3/15

Based on the feedback on our design report, our current risks are working out the cloud implementation in more detail and having something we can integrate with the edge compute part. Additionally, we need to test the camera as soon as possible to see whether it meets our needs. To that end, we have built a barebones but usable cloud implementation for our use case, and Tzen-Chuen will hook up the camera in the next couple of days.

Currently, there are no changes to the existing design. However, we expect changes next week, as the major components should be integrated and we will begin testing our MVP, which will likely show us what could be improved. The current schedule is MVP testing next week, followed by work on new or replacement components and the requisite changes the week after that.

Right now, the locally run code is mostly finished, and it can be found here. We have also made significant progress on the cloud side, with the databases set up.