The most significant risk is that the edge compute solution may not deliver the precision and recall our MVP requires. Our contingency plan is a two-phase approach: if we need more accuracy than the Raspberry Pi can provide at the edge, we send the image to the cloud, where a more sophisticated model can give us better results.
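To make the two-phase idea concrete, here is a minimal sketch of how the fallback could be wired up. It is not our actual implementation: the names `PlateReading`, `read_plate`, and `EDGE_CONFIDENCE_THRESHOLD` are hypothetical, the 0.80 threshold is a placeholder, and the sketch assumes that both models return a plate string with a confidence score and that we only escalate to the cloud when the edge model found a candidate plate it was unsure about.

```python
from dataclasses import dataclass
from typing import Callable, Optional, Tuple

# A model is assumed to take raw image bytes and return (plate_text, confidence),
# or None when it finds no plate. This interface is an assumption for the sketch.
Model = Callable[[bytes], Optional[Tuple[str, float]]]

# Placeholder value; the real threshold would have to be tuned on our data.
EDGE_CONFIDENCE_THRESHOLD = 0.80


@dataclass
class PlateReading:
    text: str
    confidence: float  # expected to lie in [0, 1]
    source: str        # "edge" or "cloud"


def read_plate(image: bytes,
               edge_model: Model,
               cloud_model: Model,
               threshold: float = EDGE_CONFIDENCE_THRESHOLD) -> Optional[PlateReading]:
    """Phase 1: try the on-device model. Phase 2: escalate to the cloud if unsure."""
    edge_result = edge_model(image)

    # No candidate plate on the edge: do nothing, so we do not upload every frame.
    if edge_result is None:
        return None

    edge_text, edge_conf = edge_result
    if edge_conf >= threshold:
        return PlateReading(edge_text, edge_conf, "edge")

    # Low-confidence edge reading: ask the more sophisticated cloud model.
    cloud_result = cloud_model(image)
    if cloud_result is not None:
        cloud_text, cloud_conf = cloud_result
        return PlateReading(cloud_text, cloud_conf, "cloud")

    # Cloud returned nothing (for example, no connectivity): keep the edge reading.
    return PlateReading(edge_text, edge_conf, "edge")
```

In this sketch the two models are passed in as plain callables, so the same function can be exercised with stand-in functions on a laptop before the real detector and OCR are running on the Pi.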

One change we made to the existing design is that we are now using a Raspberry Pi 4 rather than a Raspberry Pi 5. All the Raspberry Pi 5s available in storage were claimed very quickly, and since we wanted to test our software on actual hardware as soon as possible, we took a Raspberry Pi 4 instead. While it is unfortunate that we are unable to use the most powerful hardware available, this should not affect our ability to create an MVP or final device, since the process for loading the models on these devices is nearly identical. Once we run our model, if its performance is in the range expected of a compact processor, we can spend our currently plentiful remaining budget on the more powerful Raspberry Pi 5.

We have trained the model we will most likely use for our MVP: a YOLOv11n model trained for 400 epochs on an open-source license plate detection dataset. It can be found here. We have also looked into existing OCR methods and chosen PaddleOCR, which we are currently experimenting with.

Aside from the model, the software for the Raspberry Pi 4 is currently in development, with a GitHub repo to be populated by next week. The camera module is also expected to arrive next week.

Part A written by Richard Sbaschnig:

A.

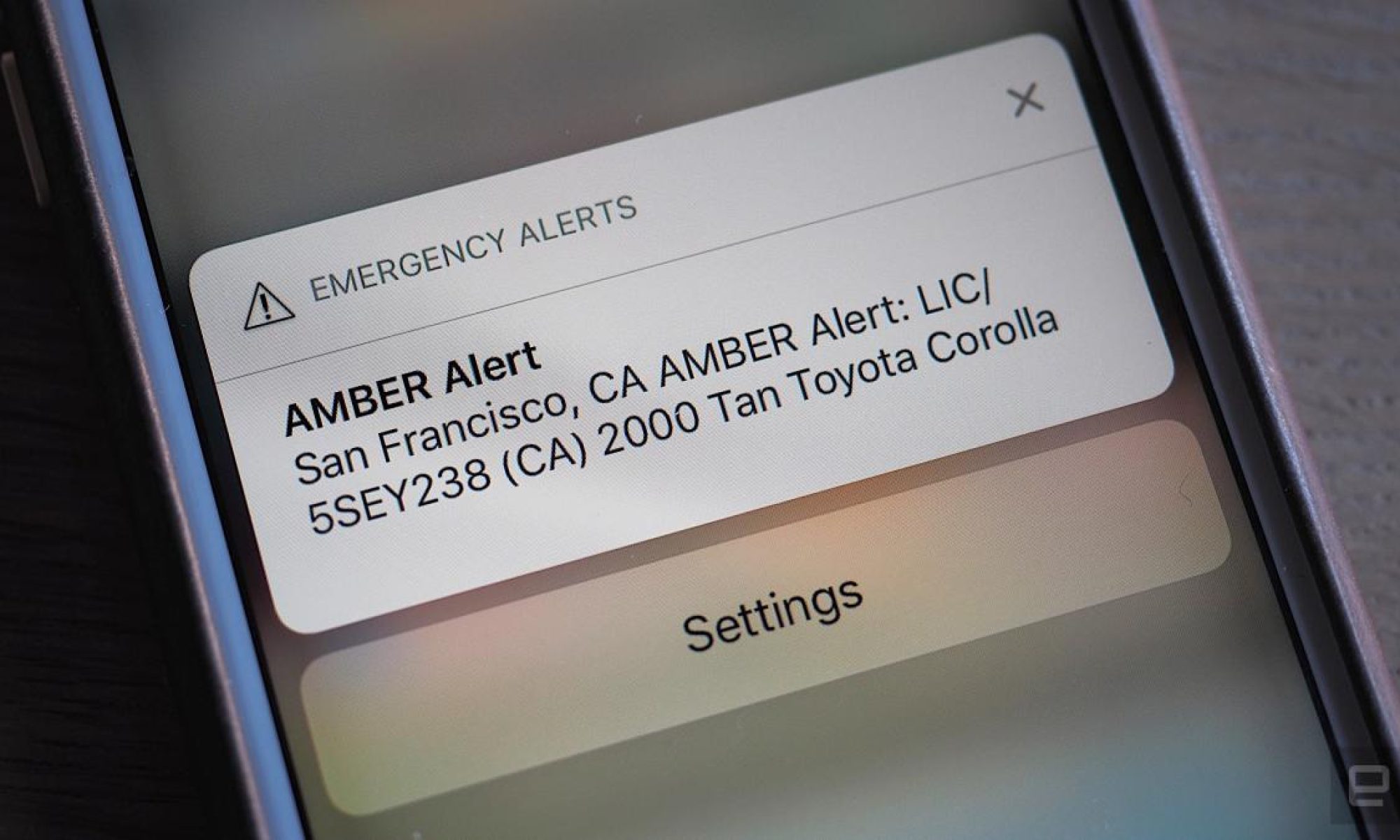

Our device aims to improve public safety by using a dashcam to detect license plates listed in active AMBER Alerts. These alerts are issued to help identify the vehicles of suspected child kidnappers, so by increasing the search coverage of AMBER Alerts, our device will help law enforcement find these vehicles and catch kidnappers sooner. It should also have a deterrent effect, since would-be kidnappers would be less inclined to act knowing that devices all around them can identify their car and notify the police automatically.

Part B written by Tzen-Chuen:

The social considerations for our device are not an obvious point of concern. The groups that will interact through our device are the manufacturer, the consumer, and the potential child abduction victim. The main point of contention may be between the consumer/end user and the manufacturer, since the manufacturer may install our device without the end user being aware of it, but I believe this can be mitigated through an explicit opt-in system.

Part C written by Eric:

Our license plate recognition system is designed with affordability as one of its main focuses, using low-cost Raspberry Pis and camera modules. This provides a more accessible alternative to expensive surveillance systems, so that even communities with limited resources can use it. It can be especially beneficial in rural areas with little existing infrastructure: because our device is mounted as a dash cam, it travels with the vehicle, allowing for wider reach and greater impact.