This week, our team made major strides toward finalizing our project for the upcoming demonstration. A primary focus was on system integration: we successfully combined all components into a single main file, allowing the entire pipeline to run smoothly on one machine instead of across multiple devices as in earlier demos. This simplification greatly improved the system’s reliability and made the final setup more efficient.

In parallel, we put significant effort into the final deliverables: the poster, report, and video. All team members contributed to drafting and refining these materials so that they accurately represent our system’s functionality and performance. Close coordination during this phase kept the technical explanations, results, and visuals consistent across all three deliverables.

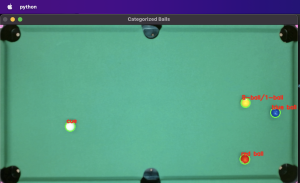

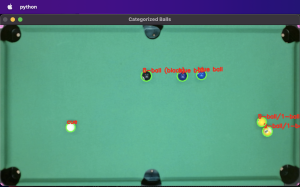

On the technical side, we made final improvements to core subsystems. Notably, Kevin worked extensively on ball categorization, improving accuracy by refining the color thresholding so that similarly colored balls can be told apart under varying lighting conditions. We also explored a no-button, motion-based image capture system to further streamline user interaction, but testing showed that it was too sensitive to background motion for robust deployment. As a result, we deprioritized this feature to keep our focus on the stability of our MVP.
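To give a rough idea of what color thresholding for ball categorization can look like, here is a minimal sketch in Python. It is not our actual implementation: the hue ranges, the saturation and brightness cutoffs, and the function names `classify_pixel` and `classify_ball` are all hypothetical values chosen for illustration. The sketch works in HSV space, where hue is largely separated from brightness, which is one common way to make thresholds less sensitive to lighting changes.

```python
import colorsys
from collections import Counter

# Hypothetical hue ranges in degrees (illustrative, not our tuned values).
# Red wraps around the hue circle, so it needs two ranges.
HUE_RANGES = {
    "red": [(0, 15), (345, 360)],
    "yellow": [(40, 70)],
    "green": [(90, 160)],
    "blue": [(200, 260)],
}

# Hypothetical cutoffs: pixels that are too washed out or too dark carry
# unreliable hue information, so they are ignored.
MIN_SATURATION = 0.35
MIN_VALUE = 0.20


def classify_pixel(r, g, b):
    """Return a color label for one 8-bit RGB pixel, or None if ambiguous."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if s < MIN_SATURATION or v < MIN_VALUE:
        return None
    hue_deg = h * 360
    for label, ranges in HUE_RANGES.items():
        for low, high in ranges:
            if low <= hue_deg < high:
                return label
    return None


def classify_ball(pixels):
    """Majority vote over the pixels sampled from a detected ball region."""
    votes = Counter()
    for r, g, b in pixels:
        label = classify_pixel(r, g, b)
        if label is not None:
            votes[label] += 1
    if not votes:
        return None
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    sample = [(255, 0, 0), (250, 10, 10), (0, 0, 255), (128, 128, 128)]
    print(classify_ball(sample))
```

Voting over many pixels rather than trusting a single sample is one way to tolerate glare spots and shadows on the ball. Narrowing or shifting the hue ranges is where most of the tuning for similarly colored balls would happen.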

Looking ahead, the team’s focus will be on final polishing: completing the poster, report, and video, stress-testing the system in the demo environment, and making any final adjustments needed for optimal performance. With all major systems integrated and operational, we are well-positioned for a strong final demonstration.