Risks and Contingency Plans

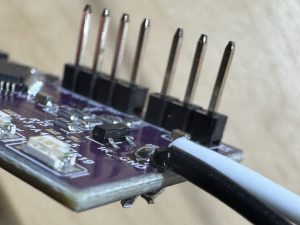

- Risk: the custom receiver still needs to be optimized, because the protocol we currently use is far too complicated for our needs. Contingency: the optimization should be finished before the demo; if it is not, we will demo the current working version.

Design Changes & Impact

There are no design changes at this point.

Schedule Updates

No major changes were made, and the schedule remains as is.

Unit Tests and Analysis

IR transmission stability:

We sent cloned IR signals to LG and Samsung TVs at different distances (1 m, 2 m, 3 m, 4 m, 5 m) and angles (0°, 15°, 30°, 45°, 60°, 75°) relative to the TV. Transmission succeeded 100% of the time within 3 m and 45°, and 0% of the time outside this range. The transmission quality is not perfect, and improving it further would require increasing the supply voltage on the PCB. However, this limitation will not affect the demo, so we are not changing the design for now. We would need to address it if we want to sell the device as a commercial product in the future.
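The test matrix above can be sketched in Python as follows. This is a minimal illustration, assuming each (distance, angle) pair is one test position; the function names (`in_reported_envelope`, `success_rate`, `grid_summary`) are hypothetical and not taken from the project code. It only encodes the working range reported above and does not model IR propagation. Under that range, 12 of the 30 tested positions (3 distances by 4 angles) fall inside it.

```python
# Test grid from the IR stability test: distances in metres, angles in degrees
# relative to the TV. The limits below restate the reported working range
# (100% success within 3 m and 45 degrees, 0% outside).
DISTANCES_M = [1, 2, 3, 4, 5]
ANGLES_DEG = [0, 15, 30, 45, 60, 75]

MAX_DISTANCE_M = 3  # reported distance limit
MAX_ANGLE_DEG = 45  # reported angle limit


def in_reported_envelope(distance_m: float, angle_deg: float) -> bool:
    """True if a (distance, angle) position lies inside the reported working range."""
    return distance_m <= MAX_DISTANCE_M and angle_deg <= MAX_ANGLE_DEG


def success_rate(results: list[bool]) -> float:
    """Fraction of successful transmissions among per-trial outcomes (0.0 if none)."""
    return sum(results) / len(results) if results else 0.0


def grid_summary() -> tuple[int, int]:
    """Count tested positions inside the working range versus all tested positions."""
    cells = [(d, a) for d in DISTANCES_M for a in ANGLES_DEG]
    inside = sum(in_reported_envelope(d, a) for d, a in cells)
    return inside, len(cells)


if __name__ == "__main__":
    inside, total = grid_summary()
    print(f"{inside} of {total} tested positions fall inside the working range")
```

Recording each trial as a boolean per position makes it easy to recompute the per-position success rate with `success_rate` after a hardware change such as a higher supply voltage.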

CNN Model:

We confirmed that the model achieves a success rate of 90% for five gestures and 80% for one gesture, based on evaluations with four different users, each of whom first learned how to perform every gesture and then practiced for 5 minutes. We verified that the loss function consistently produces finite values across different mini-batches, and we monitored validation accuracy to evaluate how well the model generalizes.
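The checks described above can be sketched in framework-agnostic Python. This is a minimal illustration, assuming each gesture was evaluated with the same number of trials (so an unweighted mean is appropriate) and that per-mini-batch losses and validation predictions are available as plain lists. The gesture labels and function names are hypothetical placeholders; the report does not describe the actual training framework or loss function. Under the equal-trials assumption, the reported rates average to about 88.3% across the six gestures.

```python
import math

# Reported per-gesture success rates: five gestures at 90%, one at 80%.
# Gesture names are placeholders; the report does not name the gestures.
GESTURE_SUCCESS = {f"gesture_{i}": 0.90 for i in range(1, 6)}
GESTURE_SUCCESS["gesture_6"] = 0.80


def mean_success(rates: dict[str, float]) -> float:
    """Unweighted mean success rate across gestures (assumes equal trial counts)."""
    return sum(rates.values()) / len(rates)


def losses_are_finite(batch_losses: list[float]) -> bool:
    """True if every mini-batch loss is finite (no NaN or infinity)."""
    return all(math.isfinite(loss) for loss in batch_losses)


def accuracy(predictions: list[int], labels: list[int]) -> float:
    """Fraction of predicted class indices that match the labels, e.g. on a validation set."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and of equal length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


if __name__ == "__main__":
    print(f"mean gesture success: {mean_success(GESTURE_SUCCESS):.1%}")
```

Checking `losses_are_finite` after each epoch catches a diverging run early, while tracking `accuracy` on held-out data rather than training data is what indicates generalization.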

No further changes were made to the model architecture, since the current version meets our performance criteria. The CNN model was successfully developed, tested, and validated, and the system is functioning as intended.

Progress / Photos

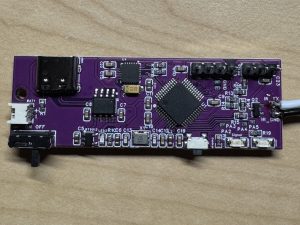

Wand controlling the TV in 1300 coves with a cloned signal

Please see the project GitHub.