Over the past week I worked alongside the rest of the team on wrapping up our final presentation, in addition to working on the final integration of the subsystems. For the final presentation, I timed the latency and conducted multiple trials to measure the model’s accuracy, plus the time it takes the combined script to detect and run inference on a single object. In terms of implementation, I managed to interface successfully with the ramp through the main script that handles object detection, but my progress was impeded by inconsistent turning of the ramp. As mentioned in the Team Status Report, the issue was caused by a mechanical failure, though I initially suspected a servo issue. Between now and the demo, I hope to iron out any last quirks with interfacing with the servo, which should have the system ready for its final testing prior to demo day. Additionally, I will fine-tune our YOLO model on the TACO dataset (unused at the moment in favor of the research paper dataset) to see if that improves inference results in any way.

John’s Status Report for Saturday, April 26th

Over the past week, I smoothed out some of the mechanical aspects of the project. I lengthened the tensioner to apply more tension to the belt to accommodate any potentially higher speeds or loads the team may want to try. The team was also experiencing issues with our belt drifting over to one side. I solved this by applying tape to slightly increase the diameter of the roller on the side opposite the one the belt was drifting towards. This gives the belt more traction on that side and counteracts the tendency to drift in one direction. This will make things much easier as the group tests towards integration and validation of our overall system. Moving forward, I will freeze any major mechanical changes and simply serve as support to my group mates in their primary tasks.

Team Status Report for 04/26/2025

Potential Risks and Risk Management

Upon testing the servo control logic, we noticed erratic behavior in the angle the servo would turn to. However, we narrowed this down to a mechanical issue, which has since been fixed through acquiring a new servo mount.

Overall Design Changes

No significant design changes were made during this phase of the project. The design of the mount for the servo turning the ramp was changed in light of the mechanical failure we encountered, but the functionality remains the same.

Schedule

Schedule – Gantt Chart

We are mostly on track. We plan to finish the remainder of integration Monday and work on documentation and testing for the remainder of the week before the demo.

Progress Update

Over the past weekend, we tested specific subsystems of our project. We were satisfied with many of our results; however, we also noticed that some hardware limitations constrained us. For example, when creating these specifications, we assumed we would be able to achieve a control center latency of <400ms. However, upon implementation, we found that sending a command from the Jetson to the Arduino has a minimum latency of 2s. This does not really impact our project because we only require this communication at points where we have much more time to work with. For other parameters like the detection of different items, we are confident that with further tuning, we will be able to increase accuracy. We will repeat the tests we were unable to conduct and include the results in our final report once the system is fully integrated.

| Requirement | Target | Results |

| Control center Latency | 400ms | (max) 2s |

| Maximum Load Weight | 20lb | ~20lb |

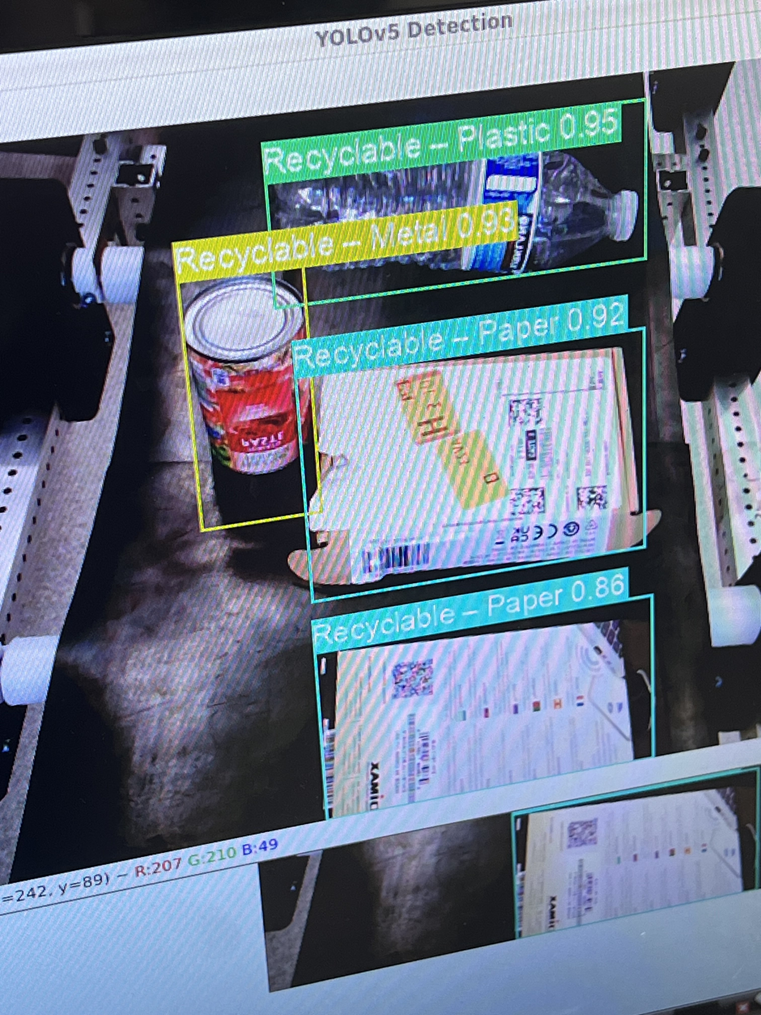

| Detection of different items | Metal, Plastic, & Paper with 90% | ~77% |

| System sorting accuracy | 90% | – |

| Item inference speed | < 2 seconds | 1.15s |

| Overall system speed | 12 items per minute | – |

| Final Cost | < $500 | $514 |

Erin’s Status Report for Saturday, April 26th

Over the past weekend and week, I worked with Mohammed to start integrating the control logic of the servo with the model classification results. We also did some unit testing of the different subsystems and worked on the final presentation. We ran into some issues while testing the servo, which we narrowed down to mechanical problems that have now been fixed. Over the coming week, in addition to all the documentation that we need, I will be working on finalizing the integration of the control logic of the servo and classification model. We also plan to conduct final unit testing for the sorting subsystem, as well as whole-system accuracy testing, which we were previously unable to do.

Mohammed’s Status Report for April 19th, 2025

This week I worked on multiple parts of my domain in the project, including the camera dynamics, running CUDA, and improving our YOLO model. Furthermore, I worked alongside the rest of the team in the overall integration of all the project’s subsystems.

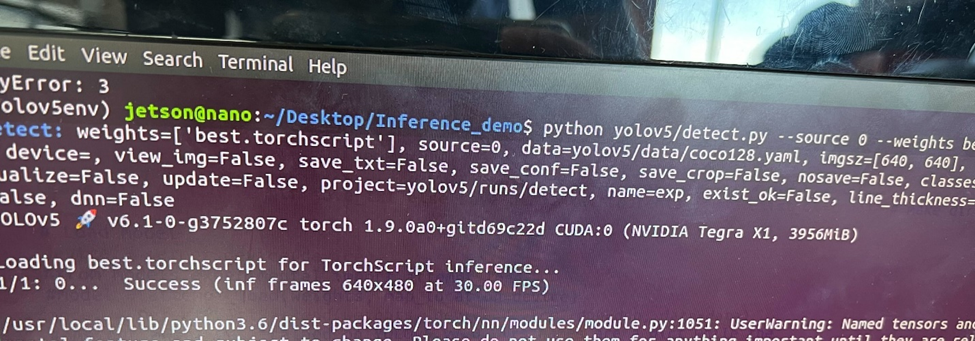

My week started off with running our interim demo’s inference script on CUDA since I got GPU acceleration working last week. While I wished it were as simple as running the script, that of course was not the case. As a reminder, I had trouble compiling the Jetson libraries necessary for CUDA support on widely supported Python versions like 3.8, so I opted to reflash the Jetson with an image that contains the necessary libraries pre-installed, albeit at the price of sticking to an older Python version. Unsurprisingly, this led to library dependency issues as some of the libraries used in our interim demo did not support our downgraded Python. As a result, I had to also downgrade to an older version of YOLOv5, which then caused issues with importing the model as that was fine-tuned on the latest version. Long story short, I had a few painful hours of re-exporting the model and modifying the script to accommodate any differences in the new model’s behavior, but all is well that ends well as I got GPU acceleration working with YOLOv5. Compared to running inference on the CPU, CUDA inference is unsurprisingly smoother, which should aid us in meeting our inference time use case requirement.

With GPU acceleration working, I then moved on to configuring the Oak-D SR camera. I learned the hard way that a high-bandwidth USB 3.0 to USB Type-C cable is necessary to properly interface with the camera. I followed the company’s documentation for setting up the camera and running their demo Python scripts, which had variable success from the get-go as only the grayscale ones would work. After some tinkering I found out that initializing the SR’s RGB cameras was different from the remaining models (or at least I assume), so I adapted the new initialization steps and got the demos working in color that way. I mainly tinkered with the camera control demo, which places you in a sandbox setting where you can fine-tune the many settings of the camera to your liking. Additionally, I tried implementing a quick script for depth detection, as that would be helpful for detecting nearby objects on the belt, but with variable success. Note that all of the aforementioned was done on my laptop.

Unfortunately, getting the camera to work on the Jetson was not a cakewalk, primarily because its power and memory usage caused the Jetson to outright shut down under stress. Initially I had the camera function as a generic webcam using a UVC (USB Video Class) Python script before connecting to it using our interim demo script. To place less strain on the Jetson, I switched to handling everything in one script, in addition to further optimizations like installing a less memory-intensive desktop interface and converting my model’s weights to short floats (from floats). The camera feed has been stable since, although slightly choppy. The bummer is that, despite all I have done to get the Oak-D working, our model’s performance with it was inferior to the generic webcam, likely due to color differences. I tried fine-tuning the camera output as much as possible to bring our accuracy back to what it was before, but with variable success. So we decided to stick with the generic webcam used for the interim demo. Using the Video4Linux2 interface, I optimized the camera’s feed to reduce the glare on objects, an issue that made certain objects undetectable. We hope to revisit using the Oak-D SR in addition to its depth mapping if time permits.
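As a rough illustration of the Video4Linux2 tuning described above, camera controls can be set from Python by shelling out to the `v4l2-ctl` tool. This is only a sketch: the device path, the control names, and the values are placeholders rather than the settings used on our system, and the controls a given webcam actually exposes can be listed with `v4l2-ctl --list-ctrls`.

```python
import subprocess


def build_v4l2_command(device, controls):
    """Build a v4l2-ctl invocation that sets the given camera controls."""
    # v4l2-ctl accepts comma-separated name=value pairs after --set-ctrl.
    pairs = ",".join(f"{name}={value}" for name, value in controls.items())
    return ["v4l2-ctl", "-d", device, f"--set-ctrl={pairs}"]


def apply_controls(device, controls):
    """Run the command; raises CalledProcessError if v4l2-ctl reports an error."""
    subprocess.run(build_v4l2_command(device, controls), check=True)


# Hypothetical values: control names and valid ranges differ between cameras.
GLARE_SETTINGS = {"brightness": 100, "contrast": 140}

# On the Jetson this would be applied with something like:
#   apply_controls("/dev/video0", GLARE_SETTINGS)
```

Keeping the command construction separate from the call that runs it makes it easy to print and check the exact command before touching the camera.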

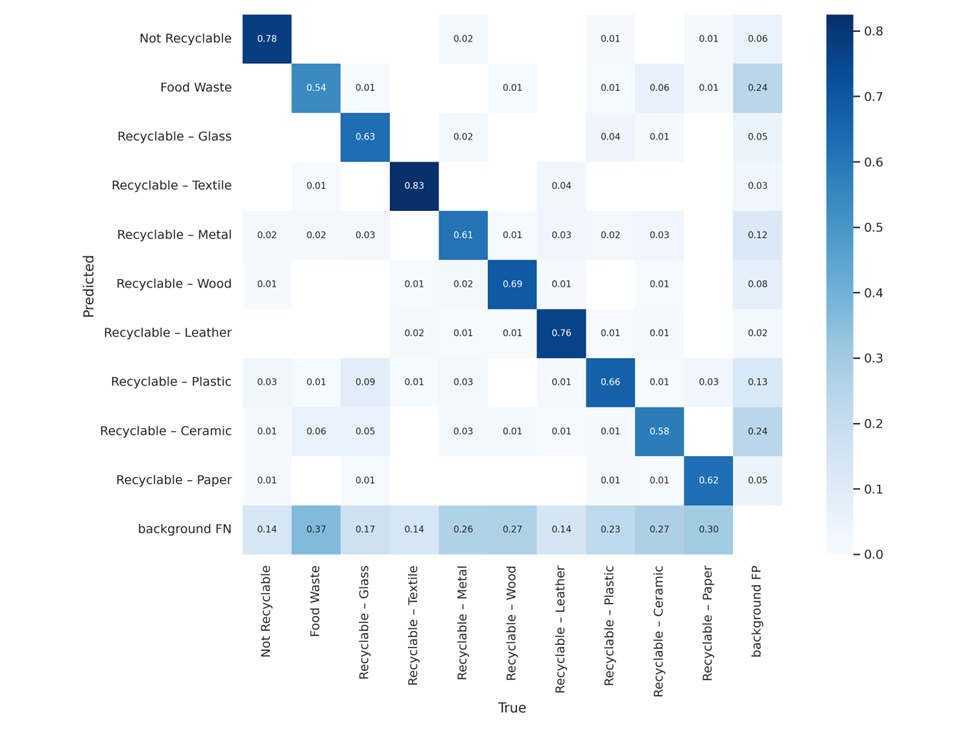

My final main contribution was switching to a different YOLOv5 model, as recommended by John. John found a paper tackling a similar problem, which provided a much larger dataset (almost 10,000 samples compared to the 1500 of the TACO dataset) as a part of its methodology. While there were also model files included, the documentation was incomplete in addition to the fact the model’s parameters were not labeled in English, which discouraged using them directly. I tinkered with some settings and trained a YOLOv5s model from scratch on the new dataset, which seems to be an improvement thus far. Note that I had to adjust our main inference script to accommodate the different waste categories of the new model. The new categories (food waste, textile, wood, leather, and ceramic) are all treated as trash for our project’s scope. Nevertheless, I do not anticipate the model will detect said classes as it is unlikely we will supply them during our testing.
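The category handling described above can be sketched as a small lookup table. The label strings, the `bin_for` helper, and the confidence threshold are assumptions made for illustration; the actual label names depend on how the dataset is annotated.

```python
# Classes we sort into their own bins (label strings are assumed).
RECYCLABLE_CLASSES = ("metal", "plastic", "paper")
# Extra classes from the new dataset that our project treats as trash.
TRASH_CLASSES = ("food waste", "textile", "wood", "leather", "ceramic")

CLASS_TO_BIN = {name: name for name in RECYCLABLE_CLASSES}
CLASS_TO_BIN.update({name: "trash" for name in TRASH_CLASSES})


def bin_for(label, confidence, min_confidence=0.5):
    """Pick a bin for one detection, falling back to trash when unsure."""
    if confidence < min_confidence:
        return "trash"
    # Unknown labels also go to trash so recycled batches stay clean.
    return CLASS_TO_BIN.get(label.strip().lower(), "trash")
```

For example, `bin_for("Plastic", 0.9)` gives `"plastic"`, while `bin_for("leather", 0.95)` and `bin_for("metal", 0.2)` both give `"trash"`.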

Finally, I helped Erin with fixing our motor speed issues in addition to interfacing between the YOLO script and UI plus actuators.

Additional Question

I did have to learn many things on the fly, such as fine-tuning and training a YOLO model, setting up a Jetson with CUDA from scratch, and using a non-plug-and-play high-end camera. To be brutally honest, a lot of that learning has been facilitated by generative AI. For example, while I have used YOLO before for another project, ChatGPT helped guide me in more depth through the different scripts of YOLOv5, in addition to resolving some bugs here and there. Furthermore, as a Windows user, having a co-pilot for navigating certain Linux features (e.g. v4l2) has been a treat. Other than that, I did watch videos as well as read papers and forum posts to learn other things, such as interfacing with the Oak-D cameras and getting RGB to work on the SR. Reading research papers was especially helpful in setting up and improving our object detection model.

John’s Status Report for April 19th, 2025

Throughout this week, I finished manufacturing and assembling the ramp sorter for the team to begin full-system sorting. The ramp was made from laser-cut scrap MDF found in TechSpark. It was primarily fastened with glue, with additional support pieces for more surface area.

Below is an image of the ramp’s assembly from the laser-cut frame.

Below shows an isometric view of the ramp nearly assembled.

Finally, below shows the angled ramps I placed to allow for more consistent object manipulation, ensuring the object travels down the center of the ramp and in the direction the ramp is facing.

Moving forward, I will continue to serve as mechanical support to the team during testing and into our final demo day.

Additional Question:

I learned about more modern forms of 3D printing. This primarily happened as I went to Roboclub to 3D print many of our components. There were more modern methods of printing supports than I was used to. I also learned more about the process of ML engineering. Although I didn’t directly work on the ML/CV aspects, I did occasionally help Mohammed in the planning and literature review/researching for our next steps. With him, I learned how to look for these more advanced ML models and datasets to make our applications more effective.

Team Status Report for 04/19/2025

Potential Risks and Risk Management

We ran into some issues while using higher conveyor belt speeds; specifically, the power supply we were using had a current limit of 0.25A, which was too low for the motor. However, we were able to acquire one with a greater current limit when supplying higher voltages, and this allowed us to raise our maximum speed threshold. Over the next couple of days, the only risks we anticipate are with respect to timing and synchronization. We believe that with our unit testing, which we will conduct this weekend, we will have a better understanding of the areas we need to further improve in time for the demo.

Overall Design Changes

We did switch to a model trained from scratch to better meet our design and use-case requirements, but the model architecture is still the same (YOLOv5). Additionally, depending on the unit tests and integration process, we may switch from stopping the belt temporarily while sorting objects to running at a slower motor speed without halting. Beyond that, we have decided to stick to the generic webcam used in the interim demo rather than use the Oak-D Short Range camera mentioned in our design report due to performance issues (we may revisit using the camera given enough free time). Regardless, all changes are relatively minor functionally speaking.

Schedule

Schedule – Gantt Chart

Progress Update

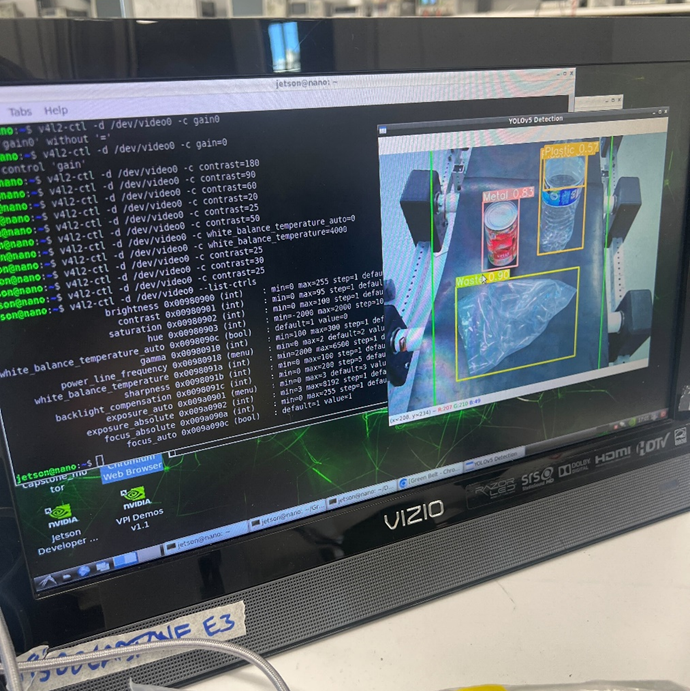

This week, we made significant progress toward integrating the major hardware and software components of the project. On the mechanical side of things, the ramp was installed into the main system, and we were able to further tension the belt to avoid any rattling as the conveyor belt approached greater speeds. We were also able to transmit input (conveyor belt speed) from the user interface to the Arduino, and have a working live video stream of the inference and classification happening in real time. We are currently working on testing the integration of the ML model with the servo actuation. With respect to the ML model, we were able to test inference on CUDA on the new Jetson firmware from last week, and despite multiple compatibility issues, they were eventually resolved. We were also able to get the Oak-D SR camera working with color on the Jetson; however, since the model’s performance was subpar, we decided to stick to the regular camera while adjusting color settings for more optimal imaging. In addition, we looked at the dataset from the MRS-Yolo waste detection paper and trained a new model from scratch. At the moment, we are observing better object recognition.

Over the weekend, we also plan to conduct unit testing on the different subsystems of the project and fine-tune a lot of parameters for synchronization to make sure the ramp moves in response to the classification in a timely manner.

Erin’s Status Report for April 19th, 2025

This week, I worked on setting up the user interface for the project and running the server on the Jetson. I was successfully able to transmit user input for speed (via the sliding bar on the monitor connected to the Jetson) to the Arduino, which in turn sends the appropriate PWM signal to the motor driver to set the speed. I experimented with the different minimum and maximum speeds that the motor could handle, and accordingly constrained the user input to within reasonable bounds that do not cause current issues. The power supply we previously used for the demo had a current limit of 0.25A when supplying voltages greater than 6V, so we switched to using a different power supply with a greater current limit, which also allowed the motor to spin faster. Upon initial testing, we are able to get an object from end to end of the belt within the 5 seconds that was specified in our use-case requirements. With further testing this weekend, we will gauge whether we can also classify an object in time, and will accordingly modify the speed constraints. In addition to this, I also worked with Mohammed on getting a live video stream of the inference and classification on the website, which eliminates the need to have another window open with the live inference. Over the weekend, once the ramp is built, I will be working with Mohammed to integrate the control logic of the servo with model classification results, unit-test the different subsystems of the project, and fine-tune the synchronization to make sure the ramp moves at the right time.
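Constraining the slider input before it reaches the motor driver could look roughly like the sketch below. The `slider_to_pwm` helper, the 0 to 100 slider range, and the PWM bounds of 60 and 200 (on an assumed 8-bit duty scale) are illustrative placeholders, not the limits we settled on.

```python
def slider_to_pwm(percent, min_pwm=60, max_pwm=200):
    """Map a 0-100 slider value onto a constrained PWM duty value."""
    if min_pwm > max_pwm:
        raise ValueError("min_pwm must not exceed max_pwm")
    # Clamp out-of-range input instead of trusting the browser.
    percent = max(0.0, min(100.0, float(percent)))
    # Linear interpolation between the lowest and highest safe duty values.
    return round(min_pwm + (max_pwm - min_pwm) * percent / 100.0)
```

With these placeholder bounds, a slider at 50 maps to a duty value of 130, and anything above 100 is treated as 100.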

Additional Questions

Throughout the course of this project, I watched tutorial videos online to learn how to accomplish tasks that I was previously unfamiliar with. For example, when figuring out how to drive a motor with custom specifications, the video on the GoBilda website walked me through how to use the motor specifications to send out PWM signals. In addition, I’ve also had to look into open forums online when running into specific problems, which has also been helpful. For the User Interface, I took a Web Applications course last semester, which gave me a lot of the background I needed to be able to design and interface with the website.

Team Status Report for 04/12/2025

Potential Risks and Risk Management

During the interim demo, we noticed that our timing belt was not measured correctly and did not have enough tension to turn the pulleys. This led the belt to slip from the pulleys under loads that were well below our maximum weight requirement. To fix this, John designed a tensioner (shown on the right) made from spare PLA and attached it earlier this week. The tension in the timing belt is sufficient, and the belt runs smoothly now.

We also noticed during our testing that the Jetson was running inference on the CPU by default rather than CUDA (GPU acceleration), which is likely significantly slower in comparison. To remain on track with our inference and classification speed metric, we looked into ways of enabling CUDA and managed to get it working by reflashing our Jetson with a pre-built custom firmware.

During our interim demo, we noticed that at the moment, the model is not fully capable of recognizing the objects moving on the belt. This is partially due to a noticeable glare in the camera feed. Beyond further fine-tuning the model and adding filters to reduce the glare, we will have our servo default to sorting into the “trash” bin to prevent contaminating the properly recycled batches.

Overall Design Changes

We had a minor design change when it came to the camera mount for the interim demo. Our initial design mounted the camera on the side of the conveyor belt, while the change has the camera mounted right above the belt. The change itself is subtle, but we believe it will help with integration as we were able to easily restrict the locations of detected objects to the approximate pixels of the belt. Additionally, the new positioning allows us to capture a larger section of the belt in each frame.
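Restricting detections to the belt can be sketched as a simple region-of-interest check on each bounding box. The detection format (a dict with a `box` of pixel corners) and the `BELT_ROI` coordinates are assumptions for illustration and would need to match the real camera frame.

```python
def inside_belt(box, belt_roi):
    """Return True if the centre of box (x1, y1, x2, y2) lies inside belt_roi."""
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    left, top, right, bottom = belt_roi
    return left <= cx <= right and top <= cy <= bottom


def filter_detections(detections, belt_roi):
    """Keep only detections whose centre falls on the belt."""
    return [d for d in detections if inside_belt(d["box"], belt_roi)]


# Hypothetical belt region in a 640x480 frame: (left, top, right, bottom).
BELT_ROI = (100, 0, 540, 480)
```

Using the box centre rather than requiring the whole box to fit keeps objects that overhang the belt edge from being dropped.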

Beyond that, we are removing the detection and sorting of glass from the scope of the project, as far as the MVP is concerned, at least. This was done due to multiple factors and issues we ran into, including safety precautions and the fact that glass data samples were extremely limited in the datasets used.

Schedule

We updated our schedule in time for the interim demo last week. We are now (mostly) in sync with the following schedule.

Progress Update

Since Carnival, we’ve made significant progress on our project. We have the bulk of the mechanical structure built (as depicted in the image below).

In addition to this, we integrated the motor and servo into the mechanical build, and for our interim demo, we were able to showcase all our subsystems (object detection, servo control logic, and user interface) pre-integration.

Over the last week, we added finishing touches to the mechanical belt by installing the tensioner to fix the motor belt slipping. Additionally, we reflashed the Jetson and are now able to run CUDA successfully, which should make inference significantly faster. We were also able to establish communication between the Arduino and Jetson using pySerial. This will be useful when we configure the servo control logic using classification signals from the ML model. In addition, we were able to install all the dependencies for the web interface (see image below) on the Jetson and can successfully use simple buttons to toggle an LED onboard the Jetson. Over the next week, the goal is to use user input to control the speed of the motor, build the ramp (we have obtained the necessary materials), successfully use the servo to get it moving, and lastly, integrate the ML classification into the whole mechanism.
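A minimal sketch of the Jetson-to-Arduino link is shown below. The newline-terminated text protocol, the `SPEED 130` command, the `OK` reply, the device path, and the baud rate are all assumptions for illustration and not our actual message format. The helper accepts any object with `write` and `readline`, which a pySerial `serial.Serial` port provides.

```python
def send_command(port, command):
    """Send one newline-terminated command and return the reply line as text."""
    port.write((command + "\n").encode("ascii"))
    # With a timeout set on the port, readline() returns b"" if nothing arrives.
    reply = port.readline()
    return reply.decode("ascii", errors="replace").strip()


# Usage on the Jetson with pySerial (device path and baud rate are assumed):
#   import serial
#   with serial.Serial("/dev/ttyACM0", 9600, timeout=1) as arduino:
#       print(send_command(arduino, "SPEED 130"))
```

Setting a timeout on the port matters here: without one, `readline` blocks indefinitely if the Arduino never answers.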

Testing Plan

The use case requirements we defined in our design report are highlighted below. To verify that we meet these requirements, we have a series of tests that we will conduct once the system is fully integrated and functional. The tests are designed such that their results directly verify whether the product’s performance meets the use case and design requirements imposed or not.

Requirements

System needs to be able to detect, classify, and sort objects in < 5 seconds

- Perform 10 trials, consistently feeding objects on the belt for a minute at a time. We will be able to verify that we met this benchmark if we are able to place at least 12 objects on the belt per minute.

The accuracy of the sorting mechanism should be > 90%

- Perform 30 trials with sample materials and record how many of these classifications are accurate.

System runs inference to classify item < 2 seconds

- Perform 5 trials for each class (metal, plastic, paper, and waste) and record how long it takes for the system to successfully detect and categorize the items moving on the belt

Control center latency < 400ms

- We will perform 5 trials for each actuator (servo and DC motor), where a timer will start once an instruction is sent using the Jetson. The measurement will end once the actuator completes the instruction, that being a change in speed for the motor and rotation for the servo.
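The trials above could be recorded and summarized with a few helpers like the ones sketched here. The function names are hypothetical, and judging a timing requirement against the slowest trial (rather than the mean) is an assumption, since the plan does not fix which statistic to use. Note that the 12 objects per minute figure corresponds to 60 s divided by the 5 s budget per object.

```python
import time
from statistics import mean


def time_trial(action):
    """Return how long action() takes to complete, in seconds."""
    start = time.perf_counter()
    action()
    return time.perf_counter() - start


def summarize(durations, limit_s):
    """Summarize trial durations against a requirement of limit_s seconds."""
    return {
        "trials": len(durations),
        "mean_s": mean(durations),
        "max_s": max(durations),
        # Assumption: the requirement must hold for the slowest trial.
        "meets_requirement": max(durations) < limit_s,
    }


def accuracy(outcomes):
    """Fraction of trials that were sorted correctly (True = correct)."""
    return sum(outcomes) / len(outcomes)
```

For the latency test, `action` would be a function that sends the instruction and returns once the actuator has finished; for the 30 sorting trials, `accuracy` takes a list of 30 booleans.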

Erin’s Status Report for April 12th, 2025

This week, I worked more on setting up the electronics for the conveyor belt in time for the demo. Currently, we are able to use one power supply to move the conveyor belt at a fixed speed and simulate the servo moving every 5 seconds. This is approximately how it will be when we integrate the classification signals into the system. In addition, I set up the web interface on the Jetson, and early next week, I plan on writing a framework allowing us to change the conveyor belt speed. I will also be working with Mohammed to integrate the classification signals into the project. I anticipate running into a bunch of timing issues, specifically getting the ramp to turn exactly when the object is at the end of the belt; however, with some experimentation and synthesized delays, I’m sure we will be able to navigate this.
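One way to reason about the ramp timing is to compute the delay from the belt speed and the distance left to travel. The sketch below assumes a constant belt speed; the `servo_delay_s` helper and the numbers in the example are placeholders, not measurements from our system.

```python
def servo_delay_s(distance_m, belt_speed_m_s, elapsed_s=0.0):
    """Seconds to wait before turning the ramp.

    distance_m:     distance from the detection point to the end of the belt
    belt_speed_m_s: belt surface speed, assumed constant
    elapsed_s:      time already spent (for example on inference) since detection
    """
    if belt_speed_m_s <= 0:
        raise ValueError("belt speed must be positive")
    travel_s = distance_m / belt_speed_m_s
    # Never return a negative delay: if we are already late, turn immediately.
    return max(0.0, travel_s - elapsed_s)
```

For example, an object detected 0.5 m from the end of a belt moving at 0.25 m/s needs 2 s to arrive, so after 1.15 s of processing the ramp should turn about 0.85 s later.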

To verify that the subsystems I am working on meet the metrics, we have a set of comprehensive metrics and tests:

Selection of bin in < 2 seconds

Run 20 trials of placing random objects on the belt, and record how long it takes for the ramp to turn to the bin of the material it is classified to be. The average will be a good indicator of whether we met our target.

Reaction to controls < 400ms

Run 5 trials for each actuator (servo and DC motor), and start a timer when an instruction is sent using the Jetson. The measurement will end once the actuator completes the instruction (a change in speed for the motor and rotation for the servo).

Depending on the data that we collect from our comprehensive testing, we may have to further tune and improve parameters in our system to help meet benchmarks.