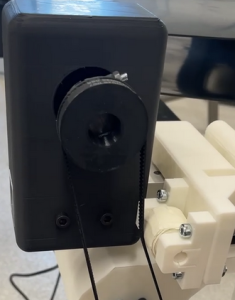

This week I worked on interfacing with the Oak-D SR and on setting up CUDA on the Jetson Nano. The Oak-D camera gave us trouble during the interim demo, which led us to temporarily swap it for a generic webcam. I got it working by switching to a high-bandwidth USB 3.0 to USB Type-C cable; I had previously been using a generic USB Type-C cable. So far I have only run the DepthAI Viewer program provided by Luxonis, which demos the camera with a stock YOLOv8 model.

Regarding the Jetson, inference on CUDA was not working initially, as mentioned in the Team Status Report. As it turns out, the version of OpenCV installed on the Jetson by default does not have CUDA support. I tried to install the necessary packages to enable it before the demo, but did not see much success. Because building the package from source was obnoxious and often riddled with compatibility issues, I opted instead to reflash the Jetson with a firmware image I found on GitHub that had all the prerequisites pre-installed. After the reflash, I confirmed that CUDA was enabled and then reinstalled Wi-Fi compatibility and the Arduino IDE for interfacing with the actuators.
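For reference, one way to check whether an OpenCV build has CUDA support is sketched below. This is only an illustration, not necessarily the exact check I ran: the helper name `cuda_in_build_info` is made up, and it assumes the build summary contains a line of the form `NVIDIA CUDA: YES (...)`, which is how OpenCV builds typically report it.

```python
import re


def cuda_in_build_info(build_info: str) -> bool:
    """Return True if an OpenCV build summary reports CUDA as enabled.

    Assumes the summary has a line like "NVIDIA CUDA: YES (ver ...)".
    """
    match = re.search(r"NVIDIA CUDA:\s*(\w+)", build_info)
    return bool(match) and match.group(1).upper() == "YES"


if __name__ == "__main__":
    try:
        import cv2
    except ImportError:
        print("OpenCV is not installed")
    else:
        # Text summary of the options OpenCV was compiled with.
        print("CUDA in build:", cuda_in_build_info(cv2.getBuildInformation()))
        # Returns 0 when OpenCV was built without CUDA or no device is visible.
        print("CUDA devices:", cv2.cuda.getCudaEnabledDeviceCount())
```

If the build summary says CUDA is off, or the device count is 0, the OpenCV install is CPU-only regardless of what the rest of the system supports.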

Next week, I hope to integrate the Oak-D SR camera into the full system in place of the webcam we have been using thus far. The Oak-D has promising depth perception, which could be used to determine the front-most object on the belt should our current approach based on the YOLO bounding boxes fail. Now that CUDA is working, I am not particularly interested in running our YOLOv5 model natively on the camera, as that may be riddled with compatibility issues (the demo uses YOLOv8 rather than v5).
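A rough sketch of how the depth fallback could work is below. Everything here is an assumption for illustration: the function name `frontmost_box`, the `(x1, y1, x2, y2)` pixel box format, a depth map given as rows of millimeter values where 0 means no reading, and a camera angle where the front-most object is also the one nearest the camera.

```python
from statistics import median


def frontmost_box(boxes, depth_mm):
    """Return the index of the box with the smallest median depth, or None.

    boxes:    list of (x1, y1, x2, y2) pixel coordinates (hypothetical format)
    depth_mm: 2D list of depth readings in millimeters; 0 is treated as invalid
    """
    best_index, best_depth = None, float("inf")
    for i, (x1, y1, x2, y2) in enumerate(boxes):
        # Collect the valid depth readings that fall inside this box.
        values = [d for row in depth_mm[y1:y2] for d in row[x1:x2] if d > 0]
        if not values:
            continue  # no usable depth for this detection
        # The median is less sensitive to stray background pixels than the mean.
        box_depth = median(values)
        if box_depth < best_depth:
            best_index, best_depth = i, box_depth
    return best_index
```

The median is used so that a few background pixels inside a loose bounding box do not dominate the estimate.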

Regarding verification plans for the object detection and classification subsystem, I want to re-run the program used for the interim demo to begin benchmarking inference speed with GPU acceleration now that CUDA works. This corresponds to the “System runs inference to classify item < 2 seconds” requirement mentioned in the Team Status Report. Additionally, I want to benchmark the model’s current classification accuracy to determine whether additional fine-tuning is necessary. As outlined in the design presentation, this will be done through 30 trials on assorted objects, ideally representing the classes of plastic, paper, metal, and waste equally. The objects will be moving on the belt to better simulate the conditions of the final product.
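A minimal sketch of what the benchmarking harness might look like is below. The names `benchmark` and `classify` are placeholders rather than our actual code: `classify` stands in for whatever function wraps the model and returns a class label, and each trial is an `(item, expected_label)` pair.

```python
import time
from collections import defaultdict


def benchmark(classify, trials, limit_s=2.0):
    """Time each classification and tally accuracy overall and per class.

    classify: callable taking one item and returning a label (placeholder)
    trials:   list of (item, expected_label) pairs
    limit_s:  latency requirement in seconds (2.0 per the requirement above)
    """
    if not trials:
        raise ValueError("at least one trial is required")
    latencies = []
    correct = defaultdict(int)
    total = defaultdict(int)
    for item, expected in trials:
        start = time.perf_counter()
        predicted = classify(item)
        latencies.append(time.perf_counter() - start)
        total[expected] += 1
        correct[expected] += int(predicted == expected)
    return {
        "mean_latency_s": sum(latencies) / len(latencies),
        "max_latency_s": max(latencies),
        # Every trial must come in under the limit, not just the average.
        "meets_limit": max(latencies) < limit_s,
        "accuracy": sum(correct.values()) / len(trials),
        "per_class_accuracy": {c: correct[c] / total[c] for c in total},
    }
```

Since the first CUDA call usually includes one-time initialization, running one untimed warm-up inference before the trials would likely give a fairer latency number.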