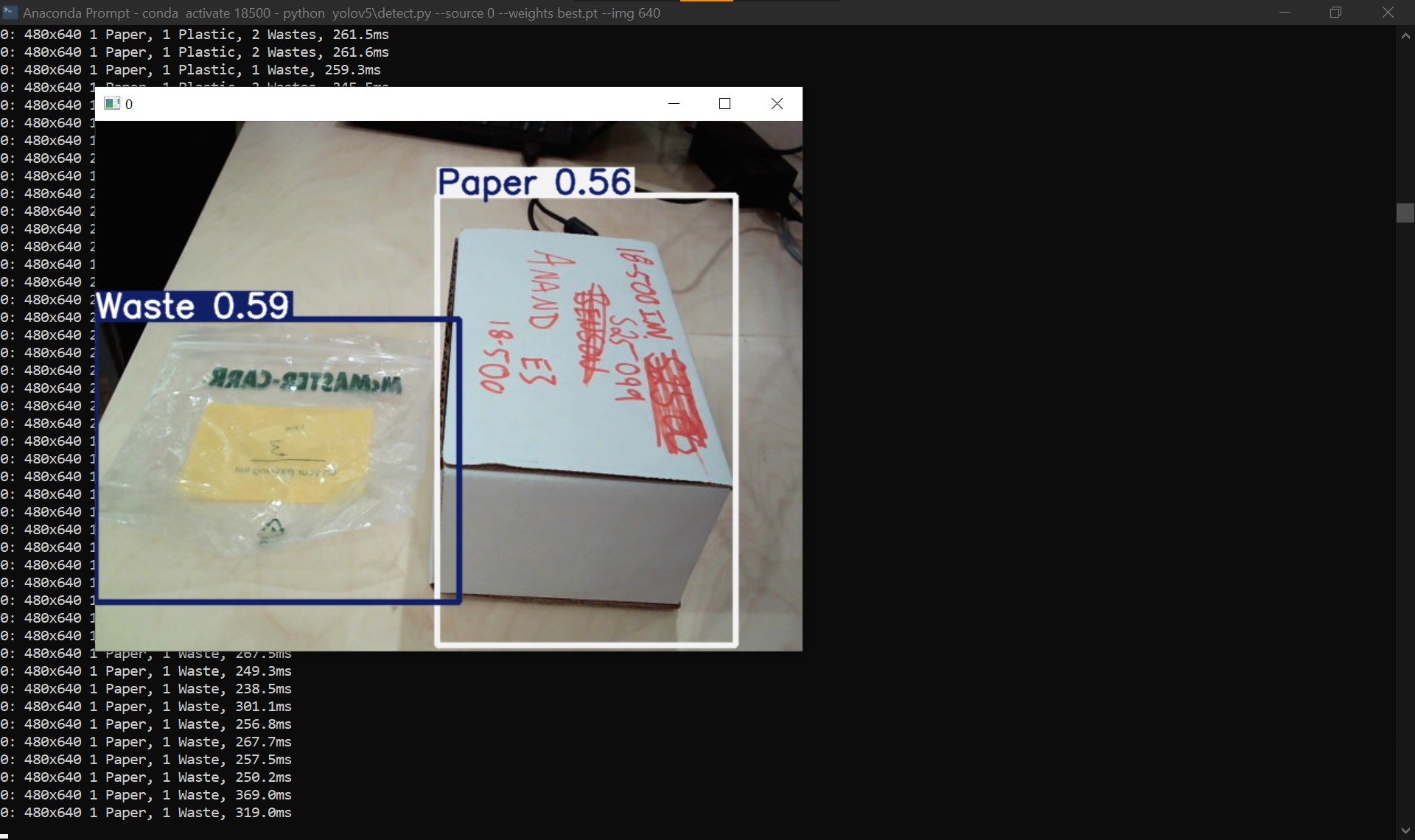

This week I primarily worked on improving the YOLO model’s performance through additional fine-tuning and on testing it in real time through a webcam attached to my laptop. Compared to a few weeks ago, the model is better at classifying paper, plastic, metal, and waste (general trash). However, it still struggles with glass in particular, most likely because the TACO dataset contains few images with glass objects. Additionally, while testing the model’s inference on a live camera feed, I noticed that it performed best from an elevated angle looking down on the objects. I believe this is because the images in the TACO dataset were captured from a similar perspective. With that in mind, I experimented with multiple angles and elevations relative to the objects to determine the best one, which we hope to use on the conveyor belt itself.
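One way to check the suspicion that glass is underrepresented is to count labeled boxes per class. The sketch below is only an illustration: it assumes the annotations have been converted to YOLO-format label files (`class_id x_center y_center width height` per line), and the class order in `CLASS_NAMES`, the helper `count_instances`, and the example label contents are all hypothetical, not our actual data.

```python
from collections import Counter

# Hypothetical class order; the real mapping depends on how the dataset was converted.
CLASS_NAMES = ["paper", "plastic", "metal", "glass", "waste"]


def count_instances(label_files, class_names=CLASS_NAMES):
    """Count bounding boxes per class across YOLO-format label files.

    `label_files` is an iterable of strings, each holding the contents of one
    label file: one box per line as `class_id x_center y_center width height`.
    """
    counts = Counter({name: 0 for name in class_names})
    for text in label_files:
        for line in text.splitlines():
            parts = line.split()
            if not parts:
                continue  # skip blank lines
            counts[class_names[int(parts[0])]] += 1
    return counts


# Made-up label contents purely for illustration (not real TACO statistics).
example_labels = [
    "1 0.50 0.50 0.20 0.30\n0 0.25 0.40 0.10 0.10\n",
    "1 0.70 0.60 0.15 0.25\n3 0.30 0.30 0.10 0.20\n",
    "2 0.45 0.55 0.20 0.20\n1 0.60 0.35 0.10 0.15\n",
]

counts = count_instances(example_labels)
for name, n in counts.most_common():
    print(f"{name:8s} {n}")
```

In practice the strings would come from reading each label file in the training split. A class whose count is far below the others is a natural candidate for extra data or augmentation.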

To train the model more efficiently, I stopped using Google Colab and set up a training environment on John’s home desktop, which has hardware comparable to an NVIDIA T4. It has been working great so far. Special thanks to John for offering!

Additionally, I helped the team put everything together for the interim demo.

Beyond the interim demo, I hope to further improve the model’s precision and recall across all object classes. This may require data augmentation for certain classes, particularly glass. I also hope to test the model’s performance on other datasets not used for training.
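For reference, per-class precision and recall can be computed from counts of true positives, false positives, and false negatives. This is a minimal sketch of the standard definitions, not our evaluation pipeline: the function `precision_recall` and the counts in `example_counts` are made up for illustration and are not measured results.

```python
def precision_recall(tp, fp, fn):
    """Return (precision, recall) from detection counts.

    precision = TP / (TP + FP): the share of predicted boxes that were correct.
    recall    = TP / (TP + FN): the share of ground-truth objects that were found.
    A zero denominator yields 0.0 rather than raising an error.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical (TP, FP, FN) counts per class, for illustration only.
example_counts = {
    "paper": (40, 10, 10),
    "glass": (5, 5, 15),
}

results = {name: precision_recall(*c) for name, c in example_counts.items()}
for name, (p, r) in results.items():
    print(f"{name:6s} precision={p:.2f} recall={r:.2f}")
```

Low recall on a class, as in the made-up glass row, means many objects of that class go undetected, which is the kind of gap that more examples or augmentation for that class would aim to close.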