Aside from working on next week’s presentation, I primarily experimented with different pre-trained models that can be utilized for the project. The two prominent ones were a YOLOv5 model and a vision transformer, though both were trained on similar datasets. Both models seemed to struggle a bit with trash detection as well as false positives for recyclable material. Fine-tuning the models will likely help with that. The fine-tuning data will likely consist of the dataset that the models were trained. We will also use the Trash Annotations in Context (TACO) dataset which consists of a large image collection of labeled trash and recyclable objects, where we will have to condense the latter to the categories we are using for the project (trash, paper, metal, glass, and plastic).

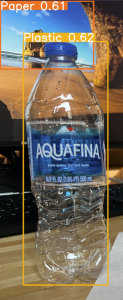

Attached is an image of the YOLOv5 model’s inference result on an image I captured. The aforementioned problems can be seen in the image. Regardless, we will be sticking with a YOLO model as it is easier to fine-tune and can better visualize its predictions.