What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk to our project is that the suction-based end effector won’t be able to pick up different types of trash items. We’re managing this risk by dedicating a considerable amount of time to developing the end effector mechanism. If suction from the vacuum pump proves ineffective, we plan to pivot to a claw-like end effector for picking up the pieces of trash.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No changes have been made to the existing design of the system.

Provide an updated schedule if changes have occurred.

There are no schedule changes as of now.

Part A was written by Teddy, Part B was written by Ethan, and Part C was written by Alejandro.

Part A:

with consideration of global factors. Global factors are world-wide contexts and factors, rather than only local ones. They do not necessarily represent geographic concerns. Global factors do not need to concern every single person in the entire world. Rather, these factors affect people outside of Pittsburgh, or those who are not in an academic environment, or those who are not technologically savvy, etc.

Currently, proper waste processing is an issue that many countries, including the US, are struggling with. Many countries cannot afford recycling facilities, as recycling requires a lot of manpower and the resulting materials are not very profitable. Additionally, a lot of our waste is shipped to other countries to prevent it from piling up here; however, this means that other countries are burdened with our trash. SortBot could help reduce the amount of trash by separating out the useful materials at a much lower cost compared to human workers. This could potentially help countries that lack the funds for recycling management to recycle as well.

Part B:

In the United States, the common practice is to dispose of all types of municipal solid waste in a single bin, prioritizing convenience over environmental concern. SortBot sets out to challenge this norm, which favors ease and efficiency, by introducing an autonomous system that streamlines waste separation without requiring a behavioral change. By shifting the responsibility of sorting waste away from the person throwing away the trash, SortBot can work within existing American cultural norms. This ensures that materials can be properly sorted without requiring individuals to change their habits. While this is not the best solution for this issue, it is one of the most appropriate for current American culture.

Part C:

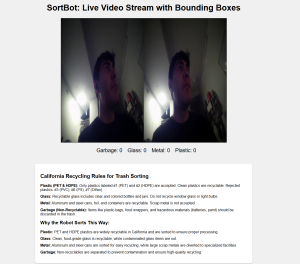

Our product solution will meet the need with consideration of environmental factors by providing a streamlined method of making the environment cleaner. Using computer vision and machine learning algorithms, our robot can identify and differentiate between different types of trash, categorize each item correctly, and sort it into its respective bin. By sorting trash in this way, the system makes these items easier to recycle. Normally, recyclable items mixed with trash would end up in landfills, and the value they could provide when recycled would be lost. With our product solution, we can recover these items and help reduce the environmental strain of waste disposal.
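
As a rough illustration of the categorize-then-sort step described above, the sketch below maps a classifier's predicted label to a destination bin. The label names, bin names, `Detection` type, `choose_bin` function, and confidence threshold are all hypothetical, chosen only for illustration; they are not taken from our actual vision pipeline.

```python
from dataclasses import dataclass

# Hypothetical label-to-bin mapping; the real categories are an assumption here.
BIN_FOR_LABEL = {
    "plastic": "recycling",
    "metal": "recycling",
    "paper": "recycling",
    "glass": "recycling",
    "food_waste": "trash",
}

FALLBACK_BIN = "trash"   # assumed default for unknown or uncertain items
MIN_CONFIDENCE = 0.6     # assumed threshold, not a measured value


@dataclass
class Detection:
    """One classified item: a predicted label and the model's confidence in it."""
    label: str
    confidence: float


def choose_bin(detection: Detection) -> str:
    """Return the bin for a detection, falling back when the model is unsure."""
    if detection.confidence < MIN_CONFIDENCE:
        return FALLBACK_BIN
    # Labels missing from the mapping also go to the fallback bin.
    return BIN_FOR_LABEL.get(detection.label, FALLBACK_BIN)
```

Sending low-confidence or unrecognized items to the fallback bin is one possible design choice: it avoids contaminating the recycling stream at the cost of losing some recyclable material.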