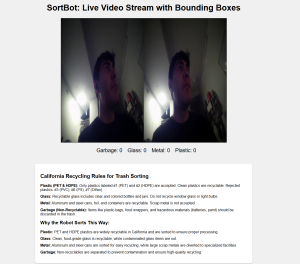

This week, I worked with Ethan on the integration that has the Jetson relay the on/off command to the Arduino. Pressing the on/off button in the web app now stops the gantry. I also rewrote the Arduino code to handle the Jetson's commands sequentially, and the Arduino now notifies the Jetson when it has finished processing each command. Additionally, I fixed an earlier bug in parsing the Jetson's commands, so the Arduino can now receive an object's x-y coordinates and then move to the coordinates of the corresponding trash bin.
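The sequential command handling can be illustrated with a minimal sketch. It is written in Python for readability and is not the actual Arduino firmware. The line format (`MOVE x y bin`, `ON`, `OFF`), the `DONE`/`ERR` replies, the `BIN_POSITIONS` table, and all function names are hypothetical placeholders rather than the real protocol. Each command runs to completion before a reply is produced, and only then is the next command read.

```python
from collections import deque
from dataclasses import dataclass

# Hypothetical bin positions (x, y) in stepper steps; real values depend on the gantry layout.
BIN_POSITIONS = {0: (0, 800), 1: (500, 800), 2: (1000, 800)}


@dataclass
class Gantry:
    enabled: bool = True
    x: int = 0
    y: int = 0


def parse_command(line):
    """Parse one line from the Jetson, e.g. 'MOVE 120 340 2', 'ON', or 'OFF' (hypothetical format).

    Returns a tuple, or None if the line is malformed.
    """
    parts = line.split()
    if parts == ["ON"] or parts == ["OFF"]:
        return (parts[0],)
    if len(parts) == 4 and parts[0] == "MOVE":
        try:
            x, y, bin_id = (int(p) for p in parts[1:])
        except ValueError:
            return None
        return ("MOVE", x, y, bin_id)
    return None


def handle_command(gantry, cmd):
    """Run one command to completion and return the reply sent back to the Jetson."""
    if cmd is None:
        return "ERR"
    if cmd[0] == "ON":
        gantry.enabled = True
        return "DONE"
    if cmd[0] == "OFF":
        gantry.enabled = False
        return "DONE"
    _, x, y, bin_id = cmd
    # Design choice (assumed): reject moves while stopped or for unknown bins.
    if not gantry.enabled or bin_id not in BIN_POSITIONS:
        return "ERR"
    # On the Arduino, each of these would be a blocking stepper move
    # (e.g. AccelStepper's runToNewPosition()); here we only track position.
    gantry.x, gantry.y = x, y                   # go to the object
    gantry.x, gantry.y = BIN_POSITIONS[bin_id]  # carry it to its bin
    return "DONE"


def process(lines):
    """Handle queued commands strictly one at a time, collecting the replies in order."""
    gantry = Gantry()
    queue = deque(lines)
    replies = []
    while queue:
        replies.append(handle_command(gantry, parse_command(queue.popleft())))
    return replies, gantry


if __name__ == "__main__":
    replies, gantry = process(["MOVE 120 340 2", "OFF", "MOVE 10 20 0", "ON", "MOVE 10 20 7"])
    print(replies)
```

Replying only after a command has fully finished lets the sender wait for `DONE` before issuing the next command, so moves never overlap and an `OFF` is honored before any later `MOVE`.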

For next week, I intend to finalize the measurements of the components on my end. I also plan to integrate the web app's speed control through the Jetson-to-Arduino interface. Finally, I plan to write the code that updates the statistics on the web app showing how many trash objects of each type have been sorted.

I needed to learn how to write Arduino code to control stepper motors. I relied on YouTube videos and online tutorials as I searched for how to accomplish different tasks, and I used the AccelStepper library documentation to help me control the stepper motors. I also needed to learn how to write certain function calls in Node.js, which I picked up by searching Google and reading online forums.

I am currently on schedule.