What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The most significant risk to our project is the accumulation of error between the machine learning model and the gantry system. To limit this, we want both the machine learning model and the gantry to be as accurate as possible, which means running many unit tests on each system.

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No changes have been made to the existing design of the system.

Provide an updated schedule if changes have occurred.

There are no schedule changes as of now.

Unit Tests

Gantry System:

Weight Requirement: We tested several different types of objects, each weighing about 1 lb, to make sure the gantry met the 1 lb requirement.

Pickup Consistency: We tested just the pick-up and drop-off on 30 different recycling objects with varied materials and textures, focusing on the end-effector.

Speed: We timed the gantry’s movement over the maximum amount of motion to get the worst-case time for the pick-up/drop-off sequence.

Web Application:

Usable Video Resolution: Counted the number of pixels in a static frame from the video stream using an image snipping tool.

Real-time Monitoring: Measured the round-trip time of 416×416 images by comparing timestamps.
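As a rough illustration of the round-trip timestamp check, here is a minimal sketch. The message format and the function names `stamp_frame` and `round_trip_seconds` are hypothetical, not our actual web app code. It assumes the send and receive timestamps are both taken on the same machine, since a monotonic clock cannot be compared across devices.

```python
import time


def stamp_frame(frame_id):
    """Attach the send time to an outgoing frame message (hypothetical format)."""
    return {"frame_id": frame_id, "sent_at": time.monotonic()}


def round_trip_seconds(echoed_message, received_at=None):
    """Return the time between sending a frame and receiving its echo."""
    if received_at is None:
        # Default to "now" on the same clock that stamped the message.
        received_at = time.monotonic()
    return received_at - echoed_message["sent_at"]
```

The sender stamps each frame, the receiver echoes the message back unchanged, and the sender computes the difference when the echo arrives.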

Fast Page Load Time: Measured the time it takes for the initial webpage to load in a browser.

Machine Learning Model:

Ability to identify Different Trash (One Environment): Gathered a variety of trash items from the 5 classes and placed them on the conveyor belt to see if the model was able to correctly identify and localize them.

Ability to identify Different Trash (Multiple Environments): Gathered a variety of trash items from the 5 classes and placed them on the conveyor belt to see if the model was able to correctly identify and localize them. Before each image was sent to the model, I adjusted its brightness, color, and blurriness to see how the model would perform under those conditions (since we don’t really know what the environment of the final demo room will be).
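The brightness, color, and blur adjustments can be sketched roughly as below. This is a pure-Python illustration, not the code we ran. It assumes an image stored as rows of `(r, g, b)` tuples, and the factors in `make_variants` are placeholder values. In practice an image library would do this work.

```python
def clamp(value):
    """Keep a channel value inside the valid 0-255 range."""
    return max(0, min(255, int(round(value))))


def adjust_brightness(image, factor):
    """Scale every channel by the same factor (factor > 1 brightens)."""
    return [[tuple(clamp(c * factor) for c in px) for px in row] for row in image]


def adjust_color(image, gains):
    """Scale the R, G, and B channels by separate gains to mimic a color cast."""
    return [[tuple(clamp(c * g) for c, g in zip(px, gains)) for px in row]
            for row in image]


def box_blur(image, radius=1):
    """Average each pixel with its neighbors in a (2 * radius + 1) square window."""
    height, width = len(image), len(image[0])
    blurred = []
    for y in range(height):
        row = []
        for x in range(width):
            # Clip the window at the image borders.
            ys = range(max(0, y - radius), min(height, y + radius + 1))
            xs = range(max(0, x - radius), min(width, x + radius + 1))
            neighbors = [image[j][i] for j in ys for i in xs]
            row.append(tuple(
                clamp(sum(p[ch] for p in neighbors) / len(neighbors))
                for ch in range(3)
            ))
        blurred.append(row)
    return blurred


def make_variants(image):
    """Build perturbed copies of one image (placeholder factors, not tuned values)."""
    return {
        "darker": adjust_brightness(image, 0.5),
        "brighter": adjust_brightness(image, 1.5),
        "warm_cast": adjust_color(image, (1.2, 1.0, 0.8)),
        "blurred": box_blur(image, radius=1),
    }
```

Each variant would then be passed to the model in place of the original image, and the predictions compared against the same ground-truth labels.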

Speed of Inference: Fed a random subset of images from our real-time photos to the model and calculated the average inference time to see if it met the value in our design requirements.
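A minimal sketch of this kind of timing measurement is below. The `model` argument stands in for any callable that runs inference on one image. It is an assumption for illustration, not our actual model interface, and the `seed` parameter is only there to make the random subset repeatable.

```python
import random
import time


def average_inference_time(model, images, sample_size, seed=0):
    """Time the model on a random subset of images and return the mean in seconds."""
    if not images or sample_size <= 0:
        raise ValueError("need at least one image to time")
    rng = random.Random(seed)
    subset = rng.sample(images, min(sample_size, len(images)))
    durations = []
    for image in subset:
        start = time.perf_counter()
        model(image)  # only the inference call sits inside the timed region
        durations.append(time.perf_counter() - start)
    return sum(durations) / len(durations)
```

The returned average can then be compared directly against the inference-time value in the design requirements.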

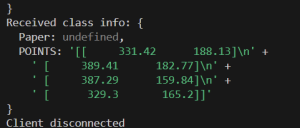

Overall System:

We ran the whole detection, communication, and pick-up/drop-off sequence on 30 different recycling objects to measure timing and consistency.
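One way to summarize trials like these is sketched below. The `Trial` record and the `summarize` function are hypothetical names used for illustration. The sketch assumes each trial logs whether the full sequence succeeded and how long it took.

```python
from dataclasses import dataclass


@dataclass
class Trial:
    succeeded: bool  # whether the object was detected, picked up, and dropped off
    seconds: float   # time for the full sequence


def summarize(trials):
    """Return the success rate plus mean and worst-case time over all trials."""
    if not trials:
        raise ValueError("no trials recorded")
    times = [t.seconds for t in trials]
    return {
        "success_rate": sum(t.succeeded for t in trials) / len(trials),
        "mean_seconds": sum(times) / len(times),
        "max_seconds": max(times),
    }
```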

Findings:

– The machine learning model is robust enough to work across the lighting scenarios we tested.

– The web app’s latency depends heavily on the CMU-SECURE WiFi connection.