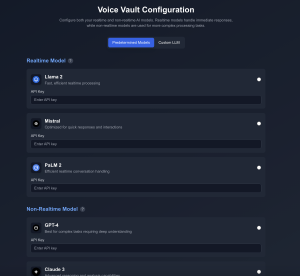

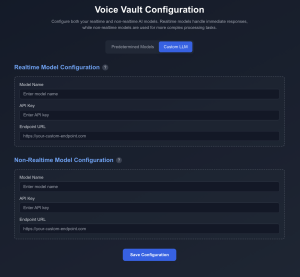

The most significant risk is that the end-to-end latency of the system is too high. We want under 5 seconds from the moment the person finishes their statement to the moment our device responds. If we cannot meet this requirement, we will have to redesign the data flow or move where the model runs to make sure the latency is under control. No changes were made to the existing design of the system. The pictures included with this report show the frontend UI that we set up this week, which will allow users to customize LLM models (not yet functional). We also finalized our order of parts this week.
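To check the latency requirement early, one option is to time each stage of the pipeline separately and compare the total against the 5-second target. The sketch below is only an illustration under assumptions: the `LatencyTracker` class and the stage names (`speech_to_text`, `llm_response`, `text_to_speech`) are hypothetical and do not come from our actual code, and the `time.sleep` calls stand in for real work.

```python
import time
from contextlib import contextmanager

LATENCY_BUDGET_S = 5.0  # target from this report: under 5 seconds end to end


class LatencyTracker:
    """Records wall-clock time per pipeline stage (hypothetical helper)."""

    def __init__(self, budget_s=LATENCY_BUDGET_S):
        self.budget_s = budget_s
        self.stages = {}  # stage name -> elapsed seconds

    @contextmanager
    def stage(self, name):
        # Time the body of the `with` block, even if it raises.
        start = time.perf_counter()
        try:
            yield
        finally:
            self.stages[name] = time.perf_counter() - start

    def total(self):
        return sum(self.stages.values())

    def within_budget(self):
        return self.total() < self.budget_s

    def slowest(self):
        # Name of the stage that took the longest (requires at least one stage).
        return max(self.stages, key=self.stages.get)


if __name__ == "__main__":
    tracker = LatencyTracker()
    # Placeholder stages: replace the sleeps with the real calls.
    with tracker.stage("speech_to_text"):
        time.sleep(0.05)
    with tracker.stage("llm_response"):
        time.sleep(0.10)
    with tracker.stage("text_to_speech"):
        time.sleep(0.05)
    print(f"total: {tracker.total():.2f}s, within budget: {tracker.within_budget()}")
    print(f"slowest stage: {tracker.slowest()}")
```

A per-stage breakdown like this would indicate whether the data flow or the model location is the bottleneck before we commit to a redesign.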