This week I worked on the model/server code. Continuing from last week, I first tried to get the model I had downloaded from Hugging Face running locally. However, I ran into many dependency and OS issues. After several failed attempts, I did more research into hosting models and came across Ollama, a tool that makes it easy to run a model locally. I then wrote a Python server that accepts requests, runs them through the model, and returns the model's output to the caller.
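
For reference, a server of this kind can be sketched with only the Python standard library. This is a minimal illustration rather than my exact code: it assumes Ollama is listening on its default local address (`http://localhost:11434`) and calls its `/api/generate` endpoint directly. The model name `llama3`, port 8000, and the helper names `ask_ollama`, `make_handler`, and `run_server` are placeholders chosen for this example.

```python
import json
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint
MODEL_NAME = "llama3"  # assumption: replace with whichever model has been pulled


def ask_ollama(prompt, model=MODEL_NAME, url=OLLAMA_URL):
    """Send one non-streaming generate request to Ollama and return its text."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(url, data=payload, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req, timeout=120) as resp:
        return json.loads(resp.read())["response"]


def make_handler(generate=ask_ollama):
    """Build a request handler; `generate` is injectable so it can be stubbed out."""

    class PromptHandler(BaseHTTPRequestHandler):
        def do_POST(self):
            length = int(self.headers.get("Content-Length", 0))
            try:
                prompt = json.loads(self.rfile.read(length))["prompt"]
            except (ValueError, KeyError, TypeError):
                self._reply(400, {"error": "expected a JSON body with a 'prompt' field"})
                return
            try:
                answer = generate(prompt)
            except OSError as exc:  # includes URLError, e.g. Ollama is not reachable
                self._reply(502, {"error": f"model backend unavailable: {exc}"})
                return
            self._reply(200, {"response": answer})

        def _reply(self, status, body):
            data = json.dumps(body).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(data)))
            self.end_headers()
            self.wfile.write(data)

    return PromptHandler


def run_server(port=8000):
    """Serve forever on all interfaces (port 8000 is an arbitrary choice)."""
    HTTPServer(("0.0.0.0", port), make_handler()).serve_forever()
```

After calling `run_server()`, a request such as `curl -X POST http://localhost:8000 -d '{"prompt": "hello"}'` should come back as a JSON object with a `response` field, provided Ollama is running and the model is available.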

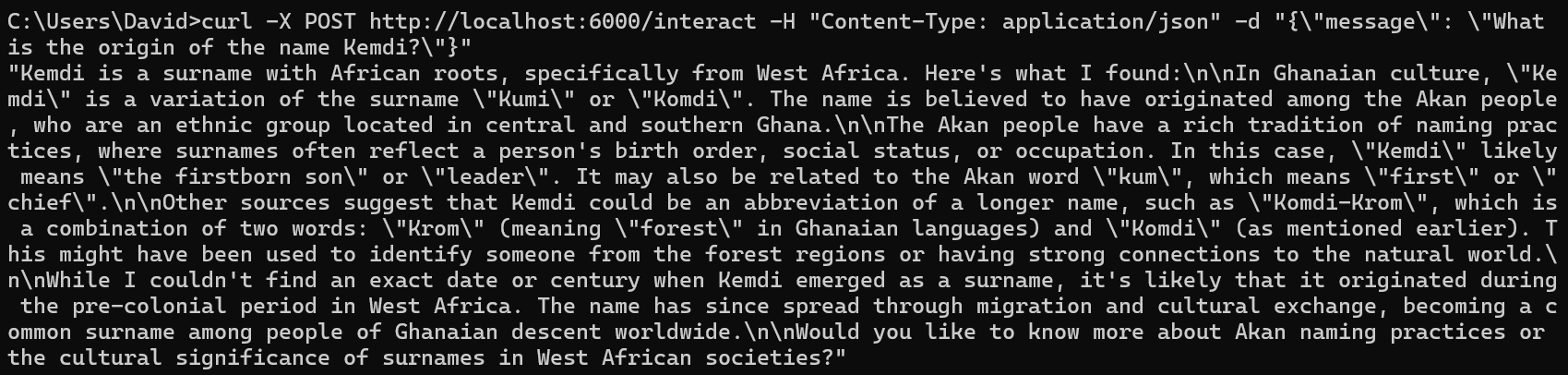

As seen here, we can simply curl a request to the server, which passes it to the model and returns the response. I then looked into Dockerizing this code. I was able to build a container and curl its exposed port, but the Ollama code inside does not seem to be running. I think this is because running Ollama from Python requires three things: the Ollama Python package, the Ollama app and its related files, and an Ollama model. Currently the app and model live somewhere on my PC, so my first container image included only the Ollama package, which is useless by itself. I then tried copying those folders into the build directory before building the Docker image, but it still did not run. I have also experimented with mounting the files into the container and with other solutions suggested online, but none of them have worked. I am still researching a fix, but few resources cover my exact situation and my OS (Windows). We are currently on schedule.
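
One check that might help narrow this down is whether the code in the container can reach an Ollama server at all. The Ollama Python package is a client for the Ollama app's local HTTP API (port 11434 by default), and inside a container `localhost` refers to the container itself, not my PC. The sketch below is only a diagnostic under those assumptions: `ollama_reachable` is a hypothetical helper, and `host.docker.internal` is the name Docker Desktop provides for the host machine. I have not yet confirmed that this is the cause of my problem.

```python
import urllib.request


def ollama_reachable(base_url="http://host.docker.internal:11434", timeout=3.0):
    """Return True if an Ollama server answers at base_url, else False.

    Uses Ollama's /api/tags endpoint, which lists the locally available models.
    The default base_url assumes Docker Desktop, where host.docker.internal
    resolves to the host machine from inside a container.
    """
    try:
        with urllib.request.urlopen(base_url.rstrip("/") + "/api/tags", timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, ValueError):
        # OSError covers refused connections, DNS failures, and timeouts;
        # ValueError covers a malformed URL.
        return False
```

Running `print(ollama_reachable())` inside the container, and `print(ollama_reachable("http://localhost:11434"))` on the host, would show whether the app is up and whether the container can see it. If the host check passes but the container check fails, the issue is likely networking rather than missing files.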