This week I worked on setting up the Docker container and the software that serves the model. I now have working code that accepts a curl request containing a username and password and, if the credentials are correct, forwards the request to a model. The model is currently a dummy that just returns a number, but the same flow should work once the actual model is put in place. For the actual model, I have requested access to and downloaded a Llama model from Hugging Face. I am currently working through setting it up, as it has certain requirements that must be met first.

I have also researched replacement parts for the speaker/microphone and have settled on one that should fix our issues. Our project is a little behind because the hardware is not working, and we hope to fix that next week with the new part. Personally, I hope to get the model set up so that the established data flow works as intended: a command comes in, authentication is checked, the prompt is run through the model, and the result is returned.

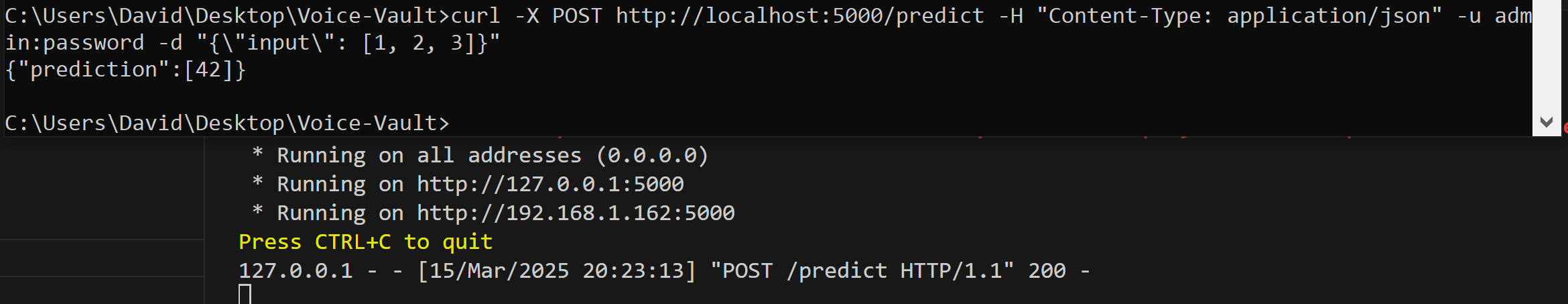

As the picture shows, I send a curl command from the terminal, and the server receives it and returns a prediction from the dummy model.
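
To make the data flow concrete, here is a minimal sketch of the request-handling logic: parse the request, check the credentials, run the prompt through the model, and return the result. This is not the actual server code. The JSON field names (`username`, `password`, `prompt`), the credential values, and the function names `check_credentials`, `dummy_model`, and `handle_request` are all assumptions made up for illustration.

```python
import hmac
import json
import random

# Hypothetical credentials for illustration only; the real ones are not part of this report.
EXPECTED_USER = "demo_user"
EXPECTED_PASSWORD = "demo_password"


def dummy_model(prompt: str) -> float:
    """Stand-in for the real model: ignores the prompt and returns a number."""
    return random.random()


def check_credentials(username: str, password: str) -> bool:
    """Compare both fields in constant time so timing does not leak which one was wrong."""
    user_ok = hmac.compare_digest(username.encode(), EXPECTED_USER.encode())
    pass_ok = hmac.compare_digest(password.encode(), EXPECTED_PASSWORD.encode())
    return user_ok and pass_ok


def handle_request(body: str, model=dummy_model) -> tuple:
    """Return (status_code, response_dict) for a JSON request body."""
    # Step 1: the command comes in as a JSON object (assumed format).
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, {"error": "invalid JSON"}
    if not isinstance(payload, dict):
        return 400, {"error": "expected a JSON object"}

    # Step 2: authentication check.
    username = str(payload.get("username", ""))
    password = str(payload.get("password", ""))
    if not check_credentials(username, password):
        return 401, {"error": "unauthorized"}

    # Step 3: run the prompt through the model (the dummy one by default).
    prediction = model(str(payload.get("prompt", "")))

    # Step 4: return the result.
    return 200, {"prediction": prediction}


if __name__ == "__main__":
    request_body = json.dumps(
        {"username": "demo_user", "password": "demo_password", "prompt": "hello"}
    )
    print(handle_request(request_body))
```

Because the model is passed in as a parameter, swapping the dummy for the Llama model later would only require a different callable with the same shape (prompt in, result out); the authentication step would stay unchanged.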