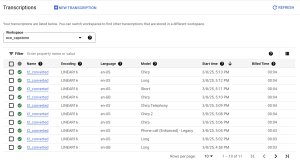

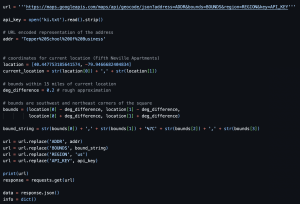

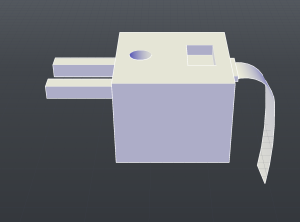

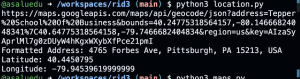

Our progress on the Rid3 device is going well. The main tasks for this week were to complete the Raspberry Pi setup, finish setting up the Blues Starter Kit, and get basic object detection working with the sensors. We accomplished most of these goals since our last progress report. We have the Raspberry Pi set up and have made it compatible with the Blues Starter Kit, using SSH to program on the Pi. In addition, we worked on configuring speech-to-text conversion with the Google Speech API and were able to see sample outputs. We also ordered materials for a new mount for our bicycle, and we are designing a component that is compatible with the mount (see below).

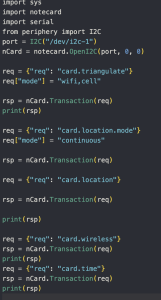

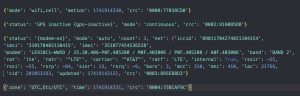

One risk we currently face is that GPS information is not being sent properly to the Raspberry Pi. This could be a problem because we need accurate GPS data to send the correct directions.

NEXT WEEK DELIVERABLES:

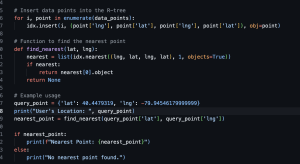

Continue testing speech-to-text translation and begin implementing the R-Tree algorithm. Set up the circuit for the wristband and establish basic object detection for the sensors. Fix the GPS issues and store GPS data for use by the RPi.
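As a starting point for the GPS storage deliverable, here is a minimal Python sketch of one way fixes could be validated and logged on the RPi. It is an illustration under assumptions, not our final implementation: the function names (`is_valid_fix`, `store_fix`, `latest_fix`), the JSON-lines file format, and the record fields are all hypothetical, and the sketch does not cover how the fix is read from the GPS hardware.

```python
import json
import time
from pathlib import Path


def is_valid_fix(lat, lon):
    """Reject missing or out-of-range coordinates (hypothetical helper)."""
    if lat is None or lon is None:
        return False
    return -90.0 <= lat <= 90.0 and -180.0 <= lon <= 180.0


def store_fix(path, lat, lon, timestamp=None):
    """Append one fix as a JSON line; return True only if it was stored."""
    if not is_valid_fix(lat, lon):
        return False
    record = {
        "lat": lat,
        "lon": lon,
        # Assumption: fall back to the Pi's clock when no GPS time is given.
        "time": timestamp if timestamp is not None else time.time(),
    }
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return True


def latest_fix(path):
    """Return the most recently stored fix, or None if there is none."""
    p = Path(path)
    if not p.exists():
        return None
    lines = [ln for ln in p.read_text(encoding="utf-8").splitlines() if ln.strip()]
    return json.loads(lines[-1]) if lines else None
```

Dropping invalid fixes before they are written means the navigation code only ever reads coordinates that passed the range check, which should make GPS transmission problems easier to spot during testing.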


Component compatible with the mount.

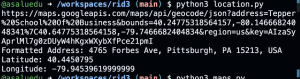

Sample output from the Google Speech API.

ADDITIONAL QUESTIONS:

Part A: … with consideration of global factors. Global factors are world-wide contexts and factors, rather than only local ones. They do not necessarily represent geographic concerns. Global factors do not need to concern every single person in the entire world. Rather, these factors affect people outside of Pittsburgh, or those who are not in an academic environment, or those who are not technologically savvy, etc.

There is a global need for increased safety and accessibility in urban mobility. Our device, Rid3, can help meet this need for bicyclists’ safety by enabling phone-less navigation in increasingly crowded urban settings. Bike lanes and road support for micro-mobility are being expanded in many major cities worldwide; however, riders are often still at risk due to poor visibility, car blind spots, and distractions from checking navigation devices. By combining voice navigation with a haptic feedback wristband, our device lets cyclists receive crucial blind spot alerts and clear directions without taking their eyes off the road, which lowers the risk of accidents and increases traffic safety. This solution is particularly impactful in regions where cycling infrastructure is still developing or where road conditions are less predictable. Additionally, our device is intended to work in conditions beyond those typical of Pittsburgh, such as high-dust environments. The device is also designed to be simple and intuitive, so that people can use it regardless of technological expertise. By enhancing safety in diverse environments, the product contributes to broader global efforts to promote sustainable transportation, reduce urban congestion, and improve public health. A was written by Emmanuel.

Part B: … with consideration of cultural factors. Cultural factors encompass the set of beliefs, moral values, traditions, language, and laws (or rules of behavior) held in common by a nation, a community, or other defined group of people.

Within the context of our project, the main cultural factor is that different countries have different road laws, so it is important that Rid3 devices adhere to these norms. Specifically, our device must function in a way that is intuitive to a cyclist on the road. It is therefore essential that the audio feedback for navigation instructions adheres to road safety rules, and research will have to be done to ensure that the system’s feedback follows the rules of each region. B was written by Akintayo.

Part C: … with consideration of environmental factors. Environmental factors are concerned with the environment as it relates to living organisms and natural resources.

Environmental factors play an important role in our project. We want to make sure that we use resources that do not pollute and are safe for the environment. The device is battery powered and does not release any toxins into the air when it is running, so the environmental concerns are limited. One thing to note is the potential for encountering animals in the environment. If we detect an animal in the rider’s blind spot, we want to notify the user so that they don’t hit an animal crossing their path. C was written by Forever.