This week, we made solid progress on all subsystems of our project.

We integrated all of our scripts and now have them running simultaneously on the RPi4. Using threading, the GPS tracking, blind spot detection, and voice-recognition navigation instruction systems all work together. The navigation script receives continuous latitude and longitude updates from the GPS through a shared global variable.
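The threading setup described above can be sketched roughly as follows. This is a minimal illustration, not our actual script: `read_gps_fix()` is a hypothetical stand-in for the real GPS driver, the coordinates it returns are placeholders, and the lock around the shared variable is an assumed addition so that the navigation thread never reads a latitude from one fix paired with a longitude from another.

```python
import threading
import time

# Shared global state: written by the GPS thread, read by the navigation thread.
latest_fix = {"lat": None, "lon": None}
fix_lock = threading.Lock()  # assumed addition: keeps lat/lon consistent as a pair


def read_gps_fix():
    """Hypothetical stand-in for the real GPS driver; returns placeholder (lat, lon)."""
    return (40.4432, -79.9428)


def gps_loop(stop_event, period_s=1.0):
    """Poll the GPS and publish each new fix to the shared variable."""
    while not stop_event.is_set():
        lat, lon = read_gps_fix()
        with fix_lock:
            latest_fix["lat"] = lat
            latest_fix["lon"] = lon
        stop_event.wait(period_s)  # sleep, but wake early if asked to stop


def get_latest_fix():
    """Return the most recent (lat, lon) pair published by the GPS thread."""
    with fix_lock:
        return latest_fix["lat"], latest_fix["lon"]


def navigation_loop(stop_event, period_s=1.0):
    """Read the latest fix and (in the real system) update navigation instructions."""
    while not stop_event.is_set():
        lat, lon = get_latest_fix()
        if lat is not None:
            print(f"navigation sees fix: {lat:.5f}, {lon:.5f}")
        stop_event.wait(period_s)


if __name__ == "__main__":
    stop = threading.Event()
    threads = [
        threading.Thread(target=gps_loop, args=(stop,), daemon=True),
        threading.Thread(target=navigation_loop, args=(stop,), daemon=True),
    ]
    for t in threads:
        t.start()
    time.sleep(2)  # let both threads run briefly
    stop.set()
    for t in threads:
        t.join()
```

A blind spot detection thread would be started the same way; a shared `threading.Event` gives every thread a clean way to shut down together.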

We made several pivots in the wristband system. First, we replaced the Micro Arduino in the wristband with the Blues Swan, which also supports Arduino. We made this change because the Swan includes a PMIC accessible through a JST PH connector, which lets us power the board from a LiPo battery and also recharge that battery through the USB port when needed. Second, we changed our Bluetooth setup from two HC-05 modules to the RPi4's Bluetooth plus a single HC-05 module, because we were having trouble sending data with the previous setup. Lastly, one of our biggest changes was switching from the ultrasonic sensors to the OPS243 Doppler Radar Sensor: during interim demo week the ultrasonic sensors had connectivity issues, and it became apparent that they would be insufficient to meet our use case.

Our audio and navigation components are integrated with our GPS tracking, and we have been actively testing their accuracy and functionality on test routes (e.g., Porter Hall to Phipps Conservatory). Right now, instructions are entered manually, but we are actively working out the kinks with the audio input.

We 3D printed our bike mount piece and matched it to the GoPro mount sizing, and we are working to finish printing the wristband and main device encasings soon.

RISK:

For the GPS subsystem, our encasing could block satellite signals if it is not positioned properly. A separate risk is that the HC-05 module and the Bluetooth earbuds would need to be connected to the Raspberry Pi 4 simultaneously, and the RPi may not be able to connect to multiple Bluetooth devices at once. As a result, we need to spend sufficient time integrating both Bluetooth devices so that they work at the same time. If that is not possible, the audio portion of the system will have to use a regular microphone and speaker that do not rely on Bluetooth connectivity.

Our new sensor carries risk as well. The OPS243 Doppler Radar Sensor has a much more limited field of view than the ultrasonic sensor. Although it is fairly accurate at detecting incoming objects, its narrow field of view means objects in a user's blind spot could be missed. We may have to change or more precisely specify the guaranteed coverage zone in our final use case.

TESTING:

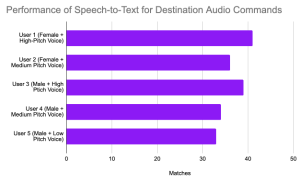

We are still early in testing, but overall it has been going well. We tested entering a journey manually and having directions update based on a small sample set of GPS coordinates. For the audio input, we plan to test 5 different voices (from different people) on 20 different destinations within the Pittsburgh area and check the accuracy of the speech-to-text output. To test navigation accuracy, we will run multiple GPS coordinates along 10 different routes and check the generated navigation instructions against the actual turns on a map. For validation, we will confirm that GPS coordinates are updated in real time and that the navigation suggestion system outputs the correct instruction.
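One way to score the planned speech-to-text test is sketched below. It assumes each trial is logged as a hypothetical `(speaker_id, expected_destination, transcribed_text)` tuple and counts a trial as correct only on an exact match after lowercasing and collapsing whitespace. This strict metric is an assumption on our part; a looser measure such as word error rate may be more informative for near-misses.

```python
from collections import defaultdict


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so minor formatting differences don't count as errors."""
    return " ".join(text.lower().split())


def accuracy_by_speaker(trials):
    """trials: iterable of (speaker_id, expected_destination, transcribed_text).

    Returns {speaker_id: fraction of that speaker's trials transcribed exactly}.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for speaker, expected, heard in trials:
        totals[speaker] += 1
        if normalize(expected) == normalize(heard):
            hits[speaker] += 1
    return {speaker: hits[speaker] / totals[speaker] for speaker in totals}


def overall_accuracy(trials):
    """Fraction of all trials (across every speaker) transcribed exactly."""
    trials = list(trials)
    if not trials:
        return 0.0
    correct = sum(normalize(expected) == normalize(heard) for _, expected, heard in trials)
    return correct / len(trials)
```

With 5 speakers and 20 destinations this gives 100 trials in total; reporting per-speaker accuracy alongside the overall number shows whether errors are concentrated on particular voices.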

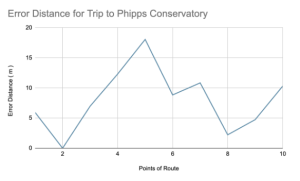

To meet use case requirements such as users receiving audio instructions within 200 feet of a turn, the GPS system needs to measure the user's position accurately. We have done a couple of bike trips to Phipps Conservatory and captured the latitude, longitude, and distance from the turn on each trip to confirm that the user is at the right location. We also placed the GPS sensor in a box and read GPS data outdoors to check that the finished encasing will not block GPS signals. We still need to test more locations and routes, but so far testing has been going well and the results align with our use case.
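Checking the 200-foot requirement comes down to the distance between a GPS fix and the upcoming turn. A minimal sketch using the haversine great-circle formula is below, assuming a spherical Earth with a mean radius of 6,371 km; `haversine_ft` and `should_announce_turn` are hypothetical names for illustration, not functions from our navigation script.

```python
import math

EARTH_RADIUS_FT = 20_902_231  # mean Earth radius (about 6,371 km) expressed in feet
TURN_ALERT_FT = 200           # use case: announce the turn within 200 feet


def haversine_ft(lat1, lon1, lat2, lon2):
    """Great-circle distance in feet between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_FT * math.asin(math.sqrt(a))


def should_announce_turn(user_lat, user_lon, turn_lat, turn_lon, threshold_ft=TURN_ALERT_FT):
    """True once the user's latest fix is within threshold_ft of the turn."""
    return haversine_ft(user_lat, user_lon, turn_lat, turn_lon) <= threshold_ft
```

At bike-route scales the spherical approximation is far more accurate than a consumer GPS fix, so the dominant error in this check comes from the GPS reading itself, which is what the test rides are measuring.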

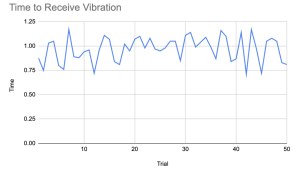

For the blind spot detection and wristband system, we have tested the basic functionality. When the system is stationary and indoors, non-moving objects in front of the sensor do not trigger a wristband vibration, but an object approaching above a certain speed does. We also tested objects approaching at different angles relative to the sensor: the radar has a more limited field of view than the ultrasonic sensors, but it is better at filtering out irrelevant objects. The wristband system meets our use case in a limited setting: when the system is stationary, haptic vibration feedback is generated within one second of an incoming object being detected. We still need to create an explicit plan to test the accuracy rate of the blind spot system.
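The behavior described above (stationary objects ignored, approaching objects trigger a vibration) can be expressed as a simple speed-threshold filter. This is a hypothetical sketch: it assumes radar readings arrive as speeds in m/s with approaching objects reported as positive values (the sign convention should be checked against how the OPS243 is configured), and the 2.0 m/s threshold and three-reading debounce are placeholder values, not tuned parameters from our system.

```python
from collections import deque


class BlindSpotFilter:
    """Hypothetical filter: signal a vibration only when an approaching object
    exceeds a speed threshold in several consecutive radar readings."""

    def __init__(self, min_speed_mps=2.0, required_hits=3):
        self.min_speed_mps = min_speed_mps      # placeholder threshold
        self.required_hits = required_hits      # placeholder debounce length
        self.recent = deque(maxlen=required_hits)

    def update(self, speed_mps):
        """Feed one reading (positive = approaching, assumed; None = no detection).

        Returns True when the wristband should vibrate.
        """
        hit = speed_mps is not None and speed_mps >= self.min_speed_mps
        self.recent.append(hit)
        # Vibrate only once the window is full and every reading in it was a hit.
        return len(self.recent) == self.required_hits and all(self.recent)
```

Stationary objects read near 0 m/s and never trigger, matching the indoor test. The debounce trades latency for fewer false alarms, so the number of required readings has to fit within the one-second feedback target at the sensor's actual reporting rate.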

NEXT WEEK DELIVERABLES:

We will primarily focus on extensively testing our subsystems independently. We will also work on getting all the encasings 3D printed to properly secure our project so that we can test our integrated systems on a bike.

Gantt