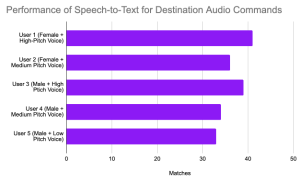

This week we focused mainly on unit testing, holistic testing, and fixing bugs in our project, particularly issues with the GPS system. We also made changes to our encasing. During testing the device fell, which weakened the joint between our sensor and the acrylic case. We applied more glue to strengthen the bond and extended the wire so that it pulls less on the sensor when connected to the Raspberry Pi.

Image: Broken Joint

Image: New Case

RISK:

One risk we noticed was that our GPS module takes a considerable amount of time (more than 5 minutes) to acquire a satellite fix. We changed the orientation of the GPS module to see whether that would shorten the time to fix. This appears to have reduced the problem: the device now gets a fix in under 5 minutes.
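To tell whether an orientation change really shortens the time to first fix, it helps to measure it the same way on every run. The sketch below is a minimal example, assuming the module streams standard NMEA 0183 `GGA` sentences and that each line is timestamped on the Raspberry Pi as it is read (e.g. with `time.monotonic()`). The function names and the sample log are hypothetical, not taken from our code. It reports the seconds from the first sentence until the first `GGA` sentence whose fix-quality field is non-zero.

```python
from typing import Iterable, Optional, Tuple


def gga_fix_quality(sentence: str) -> Optional[int]:
    """Return the fix-quality field (0 = no fix) of an NMEA GGA sentence, else None."""
    body = sentence.strip().split("*", 1)[0]  # drop the "*hh" checksum (not validated here)
    fields = body.split(",")
    # The talker ID varies ($GPGGA for GPS-only, $GNGGA for multi-GNSS); both end in "GGA".
    if not (fields[0].startswith("$") and fields[0].endswith("GGA")):
        return None
    if len(fields) < 7 or not fields[6]:
        return None
    try:
        return int(fields[6])
    except ValueError:
        return None


def time_to_first_fix(readings: Iterable[Tuple[float, str]]) -> Optional[float]:
    """Seconds from the first reading to the first GGA sentence reporting a fix.

    `readings` yields (timestamp_s, sentence) pairs, e.g. timestamped with
    time.monotonic() as each line is read from the module (assumption).
    Returns None if no fix is seen.
    """
    start: Optional[float] = None
    for t, sentence in readings:
        if start is None:
            start = t
        quality = gga_fix_quality(sentence)
        if quality is not None and quality > 0:
            return t - start
    return None


# Hypothetical log (checksums omitted); not real measurements.
log = [
    (0.0, "$GPGGA,120000.00,,,,,0,00,99.99,,,,,,"),
    (150.0, "$GPRMC,120230.00,V,,,,,,,,,,N"),
    (240.0, "$GPGGA,120400.00,4043.0000,N,07400.0000,W,1,05,1.8,10.0,M,-34.0,M,,"),
]
print(time_to_first_fix(log))  # prints 240.0
```

Logging this value over several cold starts in each orientation could turn "under 5 minutes" into a number that is directly comparable between setups.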

TESTING

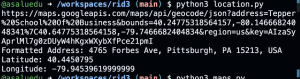

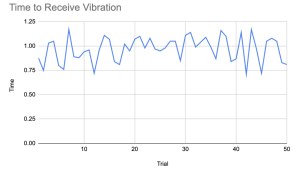

We did comparative testing to check whether the user location reported by our GPS script matched the user's actual location. As a reference point, we used the iPhone's internal GPS as our standard for GPS accuracy. We used it to mark four points on each of our journey routes, and measured the differences in latitude and longitude at those points as a metric for the accuracy of the GPS module.
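Raw latitude/longitude differences are hard to compare across points because a degree of longitude shrinks with latitude (roughly 111 km × cos(latitude)). One option is to convert each pair of fixes into a ground distance with the haversine formula. This is a minimal sketch, assuming both the module and the iPhone report decimal degrees and treating Earth as a sphere of mean radius 6,371 km; the function names and sample coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical approximation


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def route_errors(module_pts, reference_pts):
    """Per-point error (m) of the GPS module against reference fixes, plus the mean."""
    if len(module_pts) != len(reference_pts) or not module_pts:
        raise ValueError("need the same, non-zero number of module and reference points")
    errors = [
        haversine_m(m_lat, m_lon, r_lat, r_lon)
        for (m_lat, m_lon), (r_lat, r_lon) in zip(module_pts, reference_pts)
    ]
    return errors, sum(errors) / len(errors)


# Hypothetical four-point route (illustrative values, not measured data).
module = [(40.00010, -74.00000), (40.00100, -74.00100), (40.00200, -74.00200), (40.00300, -74.00300)]
reference = [(40.00000, -74.00000), (40.00100, -74.00100), (40.00200, -74.00200), (40.00300, -74.00300)]
errors, mean_err = route_errors(module, reference)
print([round(e, 1) for e in errors], round(mean_err, 1))
```

Reporting error in meters keeps the four points on a route on the same scale, whereas a fixed longitude difference corresponds to a smaller distance the farther the route is from the equator.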

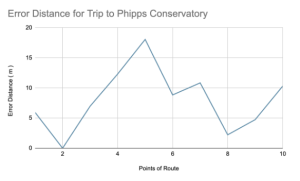

We ran 50+ tests this week with the Doppler radar sensor to measure its field of view (FOV) and accuracy. Through testing, we noticed that the sensor's orientation played a large part in how accurate its distance detection was, so we removed the sensor from our encasing and changed its orientation. The sensor also produced no false positives: across these tests it never detected an object that wasn't there. Lastly, since the sensor's FOV is so limited, it's best to mount our device angled slightly toward the user's left-hand side. This gives it a better view of incoming objects, because people usually take a wider angle when passing bicyclists rather than coming up directly behind them.
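The angled-mount reasoning can be checked with a little geometry: a target is visible only if its bearing from the sensor lies within half the FOV of the sensor's boresight. This is a minimal 2-D sketch under stated assumptions: the sensor faces backward, `yaw_deg` rotates it toward the rider's left, and `FOV_DEG` and `MAX_RANGE_M` are placeholders, not our sensor's datasheet values. The function name and the passing-car position are hypothetical, and the model ignores beam shape and mounting height.

```python
import math

FOV_DEG = 20.0      # placeholder full FOV; replace with the sensor's real value
MAX_RANGE_M = 20.0  # placeholder maximum detection range


def in_field_of_view(behind_m: float, left_m: float, yaw_deg: float,
                     fov_deg: float, max_range_m: float) -> bool:
    """True if a target lies inside the radar's (assumed) detection cone.

    behind_m: distance behind the bike along its direction of travel.
    left_m:   lateral offset, positive toward the rider's left.
    yaw_deg:  how far the sensor's boresight is rotated toward the left.
    """
    if behind_m <= 0 or math.hypot(behind_m, left_m) > max_range_m:
        return False
    bearing = math.degrees(math.atan2(left_m, behind_m))  # 0 deg = straight back
    return abs(bearing - yaw_deg) <= fov_deg / 2


passing_car = (5.0, 1.5)  # hypothetical: 5 m back, 1.5 m to the left
for yaw in (0.0, 8.0):
    print(yaw, in_field_of_view(*passing_car, yaw, FOV_DEG, MAX_RANGE_M))
```

With these placeholder numbers, the hypothetical car sits about 16.7° off the bike's centerline: outside a straight-back 20° cone, but inside one yawed 8° to the left, while a target directly behind is still covered by the yawed cone.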

For our overall system testing, we spent a few hours this week riding a bike with Rid3 to collect data. We also simulated certain scenarios with a car and with another bike. Various kinks arose while testing off campus, which we had to resolve throughout the week. Once again, one of our biggest setbacks was that the bike mount broke while we were testing. The device fell and our encasing came apart, so I took time this week to put it back together and reprint a sturdier mount. This turned into an inadvertent strength test of our encasing: the device held up well, with no cracks in the encasing, and we only had to reattach the sides.

NEXT WEEK DELIVERABLES:

We will primarily be focused on wrapping up our project and preparing for the final demo. We will continue testing to ensure the product performs as expected.