WORK ACCOMPLISHED:

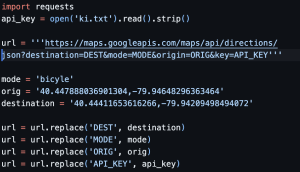

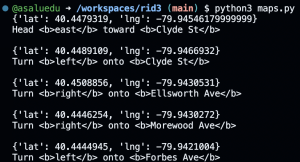

This week, I primarily worked on testing the audio recognition component of the project and fixing some issues with its integration into the rest of the system. I also worked with other team members to carry out test journeys by attaching our device to Pogoh bikes and riding them around campus. These journeys let us test several aspects of the project, such as the route audio feedback and the haptic feedback.

PROGRESS:

I did not do as much testing as I would have liked, so I am slightly behind schedule. I will make time this week to do extra testing to catch up.

NEXT WEEK DELIVERABLES:

I will primarily continue testing the audio and navigation aspects of the device, then plot and document the test results to see how well they match our design and use-case requirements.

TESTING:

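Tested the audio recognition component and its integration with the rest of the system, and carried out test journeys on Pogoh bikes around campus to check the route audio feedback and haptic feedback.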