WORK ACCOMPLISHED:

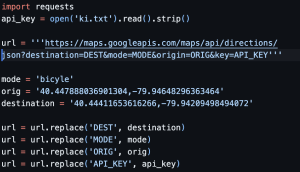

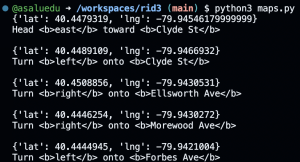

This week, I tried to set up the Raspberry Pi 4, but I realized I needed a micro SD card reader to load the operating system onto the card; since I did not have one, I could not move forward. I also continued working with the Google Maps API.

Additionally, I modified the system design by removing the web server and running the navigation and audio system locally on the Raspberry Pi instead. This should noticeably reduce the system's latency, since requests no longer need a round trip to a separate server.

PROGRESS:

Due to these issues, I am currently behind schedule. I had expected to finish figuring out how to record audio files on the Raspberry Pi and to begin integrating Google Speech-to-Text.

NEXT WEEK’S DELIVERABLES:

I will mostly focus on catching up on last week's deliverables. I will work on recording audio files on the Raspberry Pi and sending them to the Navigation endpoint, and I will begin integrating Google Speech-to-Text.
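A minimal sketch of the planned recording step might look like the following. It assumes Raspberry Pi OS with `alsa-utils` installed (which provides the `arecord` command) and a USB microphone as the default capture device. The 16 kHz mono, 16-bit format is an assumption chosen because it is a common input format for speech recognition. `build_record_command`, `record_clip`, `dispatch_clip` and the `handler` callback are hypothetical names. Because the web server was removed, the clip is handed to a local function rather than posted over HTTP.

```python
import subprocess
from pathlib import Path
from typing import Callable


def build_record_command(wav_path: str, seconds: int) -> list[str]:
    """Build an ALSA `arecord` command that captures `seconds` of audio to a WAV file."""
    # -d: stop after N seconds; -f S16_LE: 16-bit little-endian samples;
    # -r 16000: 16 kHz sample rate; -c 1: mono (assumed suitable for speech-to-text);
    # -t wav: write a WAV container so the file header describes its own format.
    return [
        "arecord", "-d", str(seconds),
        "-f", "S16_LE", "-r", "16000", "-c", "1",
        "-t", "wav", wav_path,
    ]


def record_clip(wav_path: str, seconds: int = 5) -> None:
    """Record from the default capture device (requires alsa-utils and a microphone)."""
    subprocess.run(build_record_command(wav_path, seconds), check=True)


def dispatch_clip(wav_path: str, handler: Callable[[bytes], str]) -> str:
    """Read the recorded WAV file and pass its bytes to the navigation handler.

    `handler` is a hypothetical stand-in for the on-device Navigation endpoint;
    its name and signature are assumptions, not part of the existing design.
    """
    return handler(Path(wav_path).read_bytes())
```

On the Pi, usage would be `record_clip("query.wav")` followed by `dispatch_clip("query.wav", navigation_handler)`. Here `navigation_handler` is whatever local function ends up wrapping the speech-to-text and Google Maps steps. Keeping command construction separate from execution lets the recording parameters be checked without a microphone attached.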