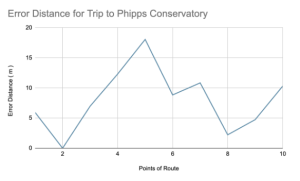

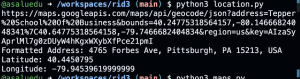

At this point in the project, we’re heavily focused on working through our individual parts. We have been working on the navigation piece and trying to integrate it with the Raspberry Pi. We were able to get the GPS working and are receiving GPS data such as longitude and latitude; however, the results have not been as accurate as we wanted. We therefore decided to move forward with triangulation as our primary method for determining where the user is, and this has proved to be more accurate. That said, we still need to find a way to integrate the triangulation service’s API for requesting location data with our Raspberry Pi.
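The post does not say which GPS module is on the Pi. Most serial GPS receivers emit NMEA 0183 text sentences, though, so here is a minimal sketch of turning a GGA sentence into decimal latitude and longitude. It assumes the module outputs standard GGA sentences. It also checks the NMEA checksum and the fix-quality field, because a corrupted line or a "no fix yet" reading is one way to end up with poor positions. The function names are illustrative, not part of any existing library.

```python
from typing import Optional, Tuple


def nmea_checksum_ok(sentence: str) -> bool:
    """Verify the XOR checksum of the characters between '$' and '*'."""
    if not sentence.startswith("$") or "*" not in sentence:
        return False
    body, _, given = sentence[1:].partition("*")
    calc = 0
    for ch in body:
        calc ^= ord(ch)
    return given[:2].upper() == f"{calc:02X}"


def _to_decimal(value: str, hemisphere: str) -> float:
    """Convert NMEA ddmm.mmmm (or dddmm.mmmm) to signed decimal degrees."""
    dot = value.index(".")
    degrees = float(value[: dot - 2])   # everything before the two minute digits
    minutes = float(value[dot - 2 :])   # mm.mmmm
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def parse_gga(sentence: str) -> Optional[Tuple[float, float, int]]:
    """Return (latitude, longitude, fix_quality) from a GGA sentence, or None."""
    sentence = sentence.strip()
    if not nmea_checksum_ok(sentence):
        return None                      # corrupted or truncated line
    fields = sentence.split("*")[0].split(",")
    if len(fields) < 7 or not fields[0].endswith("GGA"):
        return None                      # not a GGA sentence
    fix_quality = int(fields[6] or 0)
    if fix_quality == 0 or not fields[2] or not fields[4]:
        return None                      # receiver has no position fix yet
    lat = _to_decimal(fields[2], fields[3])
    lon = _to_decimal(fields[4], fields[5])
    return lat, lon, fix_quality
```

On the Pi, each line would typically come from the serial port. For example, pyserial's `Serial.readline()` returns bytes that you decode before passing them to `parse_gga`. The port name and baud rate depend on the module.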

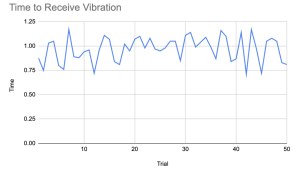

Additionally, we made progress on the haptic feedback for the wristband system. We set up a circuit on the mini breadboards that allows the ERM motor to vibrate under the control of a script on the micro Arduino. We also spent time learning how to use the HC-05 Bluetooth module so it can send data that the Arduino uses to decide when the motor should vibrate. We are currently adding code to the sensor script so it can send a signal to the HC-05 when an object is detected within a certain range.
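Below is a minimal sketch of the sensor-script side of that signal. It assumes the HC-05 link shows up on the Pi as a serial device, for example an rfcomm port opened with pyserial. It also assumes the Arduino sketch understands a hypothetical one-byte protocol: `b"1"` to start vibrating and `b"0"` to stop. The distance thresholds are placeholders as well. The notifier only writes when the warning state changes, and it uses a slightly larger "clear" distance so that readings hovering near the threshold do not make the motor flicker on and off.

```python
from typing import BinaryIO

# Hypothetical values: tune to the real sensor and its mounting position.
WARN_DISTANCE_CM = 150.0
CLEAR_DISTANCE_CM = 180.0   # larger than WARN to avoid rapid on/off toggling

VIBRATE_ON = b"1"           # hypothetical command bytes read by the Arduino sketch
VIBRATE_OFF = b"0"


class BlindSpotNotifier:
    """Sends on/off commands over the HC-05 link only when the warning state changes."""

    def __init__(self, link: BinaryIO):
        self.link = link        # e.g. a pyserial Serial object opened on the HC-05 port
        self.warning = False

    def update(self, distance_cm: float) -> bool:
        """Feed one sensor reading; returns whether the wristband should be vibrating."""
        if not self.warning and distance_cm <= WARN_DISTANCE_CM:
            self.warning = True
            self.link.write(VIBRATE_ON)
        elif self.warning and distance_cm >= CLEAR_DISTANCE_CM:
            self.warning = False
            self.link.write(VIBRATE_OFF)
        return self.warning
```

Sending only state changes keeps Bluetooth traffic low. The trade-off is that if a byte is lost, the wristband stays in the wrong state until the next change, so periodically re-sending the current state may be worth considering.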

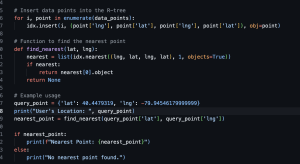

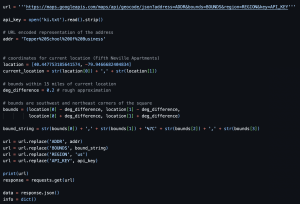

This week, we also worked on the navigation generation aspect of the project: the code that suggests the next direction instruction based on the user’s current location.
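The post does not describe how the next instruction is chosen, so the following is a simplified, illustrative sketch rather than our actual implementation. It assumes the route is a list of (latitude, longitude) waypoints and that the user's heading is known in compass degrees. It skips waypoints the user has already reached (within a hypothetical 15 m radius), computes the bearing to the next one, and turns the difference between that bearing and the heading into "continue straight" or "turn left/right". Real turn-by-turn directions would instead announce turns at intersections.

```python
import math
from typing import List, Tuple

LatLon = Tuple[float, float]
EARTH_RADIUS_M = 6_371_000.0
ARRIVAL_RADIUS_M = 15.0     # hypothetical: a waypoint counts as reached inside this radius
STRAIGHT_TOLERANCE_DEG = 30.0


def haversine_m(a: LatLon, b: LatLon) -> float:
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def initial_bearing_deg(a: LatLon, b: LatLon) -> float:
    """Compass bearing from a to b (0 = north, 90 = east)."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    dlon = lon2 - lon1
    x = math.sin(dlon) * math.cos(lat2)
    y = math.cos(lat1) * math.sin(lat2) - math.sin(lat1) * math.cos(lat2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def next_instruction(position: LatLon, heading_deg: float,
                     waypoints: List[LatLon], index: int) -> Tuple[str, int]:
    """Return (instruction text, updated index of the next waypoint)."""
    # Advance past any waypoints the user is already close to.
    while index < len(waypoints) and haversine_m(position, waypoints[index]) < ARRIVAL_RADIUS_M:
        index += 1
    if index >= len(waypoints):
        return "You have arrived", index
    target = waypoints[index]
    # Signed angle in [-180, 180): positive means the target lies to the right.
    turn = (initial_bearing_deg(position, target) - heading_deg + 540.0) % 360.0 - 180.0
    distance = round(haversine_m(position, target))
    if abs(turn) < STRAIGHT_TOLERANCE_DEG:
        return f"Continue straight for {distance} m", index
    side = "right" if turn > 0 else "left"
    return f"Turn {side} in {distance} m", index
```

The returned index is passed back in on the next location update, so the navigation loop only needs to keep that one integer as state.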

RISK:

Regarding the accuracy of our current location system: if the triangulation alternative does not provide accurate enough data, we may have a hard time determining when a user is heading down the wrong path. In that case, we might have to consider other GPS systems.
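Location accuracy matters here because "wrong path" detection usually compares the user's position to the planned route. Here is a minimal sketch of such a check, assuming the route is a polyline of (latitude, longitude) points. It measures the shortest distance from the user to any route segment using a local equirectangular projection. That is an approximation, chosen here because it is simple and adequate over a few kilometres. The user is flagged as off route only after several consecutive fixes fall outside a hypothetical 30 m tolerance, which trades a slightly slower warning for fewer false alarms from noisy readings. Both thresholds are assumptions that would need tuning against the real location error.

```python
import math
from typing import List, Tuple

LatLon = Tuple[float, float]
EARTH_RADIUS_M = 6_371_000.0
OFF_ROUTE_M = 30.0        # hypothetical tolerance; should exceed typical location error
CONSECUTIVE_FIXES = 3     # hypothetical: bad fixes required in a row before warning


def _to_xy(p: LatLon, ref_lat: float) -> Tuple[float, float]:
    """Equirectangular projection of (lat, lon) degrees to metres around ref_lat."""
    lat, lon = map(math.radians, p)
    return EARTH_RADIUS_M * lon * math.cos(math.radians(ref_lat)), EARTH_RADIUS_M * lat


def distance_to_route_m(position: LatLon, route: List[LatLon]) -> float:
    """Approximate shortest distance in metres from position to the route polyline."""
    px, py = _to_xy(position, position[0])
    # A single-point route is treated as a zero-length segment.
    segments = list(zip(route, route[1:])) or [(route[0], route[0])]
    best = math.inf
    for a, b in segments:
        ax, ay = _to_xy(a, position[0])
        bx, by = _to_xy(b, position[0])
        dx, dy = bx - ax, by - ay
        seg_len_sq = dx * dx + dy * dy
        # Project the position onto the segment, clamped to its endpoints.
        t = 0.0 if seg_len_sq == 0 else max(
            0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / seg_len_sq))
        best = min(best, math.hypot(px - (ax + t * dx), py - (ay + t * dy)))
    return best


class OffRouteDetector:
    """Flags the user as off route after several consecutive out-of-tolerance fixes."""

    def __init__(self, route: List[LatLon]):
        self.route = route
        self.bad_fixes = 0

    def update(self, position: LatLon) -> bool:
        if distance_to_route_m(position, self.route) > OFF_ROUTE_M:
            self.bad_fixes += 1
        else:
            self.bad_fixes = 0   # any on-route fix resets the count
        return self.bad_fixes >= CONSECUTIVE_FIXES
```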

To ensure the safety of our system, it is essential to establish communication between the haptic feedback on the wristband and the object detection from the sensor on the bike. Otherwise, there is a major risk that objects are detected by the sensor but users are not warned through the vibration of the wristband.

Since we will begin integrating two distinct subsystems, one potential risk is how the subsystems communicate with each other. For now, the tentative solution is that the GPS subsystem will periodically write the user’s GPS location to a text file, and the navigation subsystem will read that location from the file. One issue that may arise is the timing between the two processes: depending on when the navigation subsystem reads the file, the location it uses may already be outdated. Additionally, the accuracy of the GPS data will affect the functionality of the navigation system.
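One way to reduce both timing issues is to store a timestamp alongside each fix and to replace the file atomically. Here is a minimal sketch of that file handoff. It uses JSON instead of free-form text, a hypothetical 5-second staleness limit, and writes to a temporary file in the same directory before calling `os.replace`. This way the navigation process never reads a half-written location, and it can tell how old the data is.

```python
import json
import os
import tempfile
import time
from typing import Optional, Tuple

MAX_AGE_S = 5.0   # hypothetical: the navigation side ignores fixes older than this


def write_fix(path: str, lat: float, lon: float) -> None:
    """GPS side: atomically replace the file with the latest fix and its timestamp."""
    record = {"lat": lat, "lon": lon, "timestamp": time.time()}
    directory = os.path.dirname(os.path.abspath(path))
    # The temp file must be on the same filesystem for os.replace to be atomic.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as tmp:
        json.dump(record, tmp)
    os.replace(tmp_path, path)


def read_fix(path: str, max_age_s: float = MAX_AGE_S) -> Optional[Tuple[float, float, float]]:
    """Navigation side: return (lat, lon, age_in_seconds), or None if missing or stale."""
    try:
        with open(path) as f:
            record = json.load(f)
    except (FileNotFoundError, json.JSONDecodeError):
        return None
    age = time.time() - record["timestamp"]
    if age > max_age_s:
        return None   # too old to trust for navigation decisions
    return record["lat"], record["lon"], age
```

When `read_fix` returns `None`, the navigation loop can keep its last instruction and retry, rather than acting on an outdated position.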

NEXT WEEK DELIVERABLES:

For next week, we will work together to begin integrating the navigation and GPS subsystems. Our goal is a fully functional system that tracks the user’s GPS location relative to the route for their journey and suggests appropriate navigation instructions.

For the haptic feedback system, we had hoped to be able to send data to the motor circuit from a Python script by now; we aim to have this done later today. We may also still need to find a better sensor, but we want to get basic functionality of the blind-spot detection subsystem working before spending more time on improving accuracy.