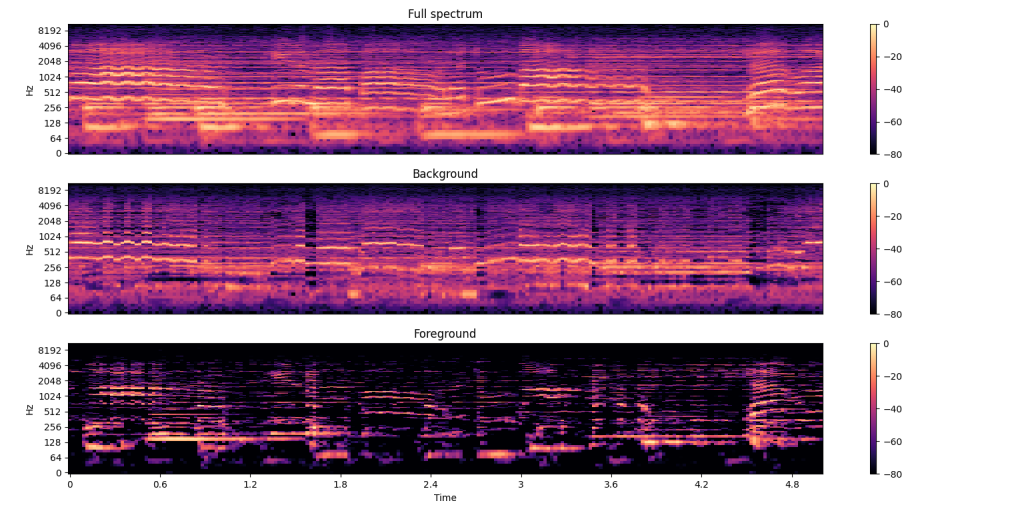

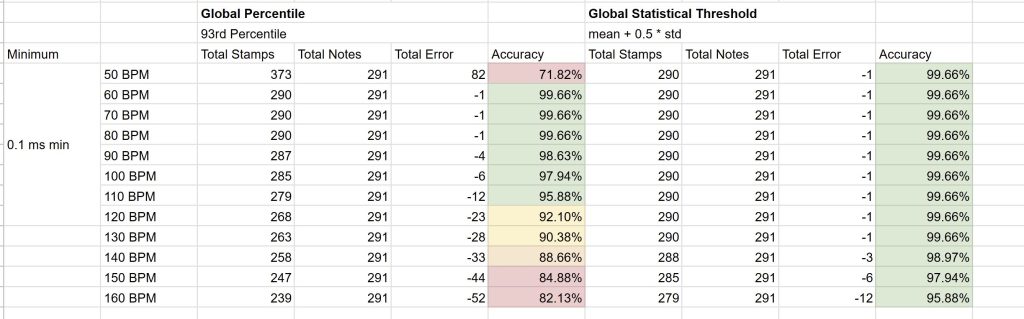

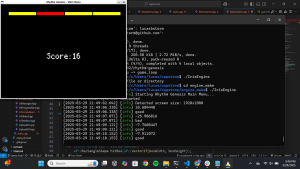

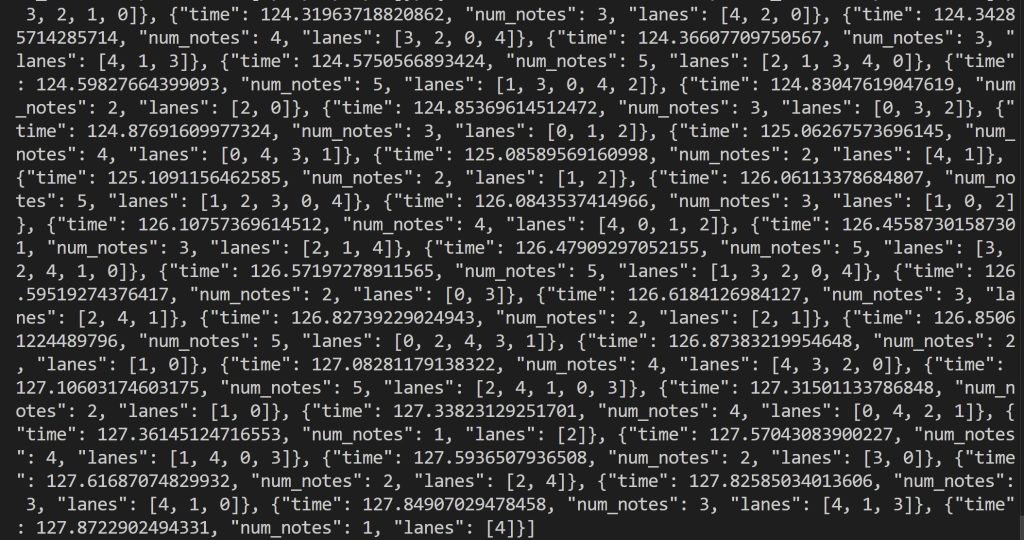

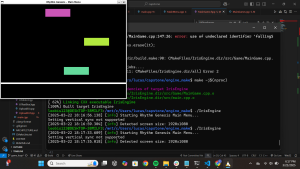

This week, I tested and stabilized the Beat Map Editor and integrated it with our game’s core system. I improved waveform rendering sync, fixed note input edge cases, and ensured exported beat maps load correctly into the gameplay module. I worked with teammates to align beatmap formats and timestamps. I also stress-tested audio playback, waveform scrolling, and note placement on both Ubuntu and Windows. Due to audio issues in the Ubuntu virtual machine, I migrated the project to Windows, where SFML audio runs reliably using WASAPI. I updated the build system, linked SFML and spdlog, and verified playback stability across audio formats. The editor now supports consistent UI latency, note saving/loading, and waveform visualization, meeting our design metrics.

What Did I Learn

To build and debug the Beat Map Editor, I learned C++17 in depth, including class design, memory safety, and STL containers. I switched from Unity to SFML to get better control and performance, and I studied SFML docs and examples to implement UI components, audio playback, waveform rendering with sf::VertexArray, and real-time input handling. I learned to parse sf::SoundBuffer and extract audio samples to draw time-synced waveforms. I also learned to serialize beatmaps using efficient C++ data structures like unordered_set for fast duplicate checking and real-time editing.
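The two ideas above (turning raw samples into a drawable waveform, and using an `unordered_set` to reject duplicate notes) can be sketched roughly as follows. This is a minimal illustration, not the editor's actual code: `computePeaks`, `NoteKey`, `NoteKeyHash`, and `addNote` are hypothetical names, the audio is assumed to be mono 16-bit samples such as those returned by `sf::SoundBuffer::getSamples()` and `getSampleCount()`, and a note is assumed to be identified by a lane and a millisecond timestamp.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_set>
#include <utility>
#include <vector>

// Hypothetical helper: reduce raw 16-bit samples to one (min, max) pair per
// pixel column, normalized to [-1, 1]. Assumes mono audio; interleaved
// multi-channel data would need to be de-interleaved or averaged first.
std::vector<std::pair<float, float>> computePeaks(const std::int16_t* samples,
                                                  std::size_t sampleCount,
                                                  std::size_t columns) {
    std::vector<std::pair<float, float>> peaks;
    if (samples == nullptr || sampleCount == 0 || columns == 0) return peaks;
    peaks.reserve(columns);
    for (std::size_t c = 0; c < columns; ++c) {
        // Half-open sample range [begin, end) covered by this column.
        std::size_t begin = c * sampleCount / columns;
        std::size_t end = (c + 1) * sampleCount / columns;
        if (end <= begin) end = begin + 1;  // more columns than samples
        auto range = std::minmax_element(samples + begin, samples + end);
        peaks.emplace_back(*range.first / 32768.0f, *range.second / 32768.0f);
    }
    return peaks;
}

// Hypothetical note identity: one note per (lane, timestamp) pair.
struct NoteKey {
    int lane;
    std::int64_t timeMs;
    bool operator==(const NoteKey& other) const {
        return lane == other.lane && timeMs == other.timeMs;
    }
};

// Simple hash combining both fields; good enough for a sketch.
struct NoteKeyHash {
    std::size_t operator()(const NoteKey& k) const noexcept {
        return std::hash<std::int64_t>{}(k.timeMs * 31 + k.lane);
    }
};

using NoteSet = std::unordered_set<NoteKey, NoteKeyHash>;

// Returns true if the note was added, false if it was already present.
bool addNote(NoteSet& notes, int lane, std::int64_t timeMs) {
    return notes.insert(NoteKey{lane, timeMs}).second;
}
```

Each (min, max) pair could then be mapped to two vertices of an `sf::VertexArray` to draw one vertical line per pixel column, and `addNote` gives an average constant-time check for whether a note already exists at a given lane and time.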

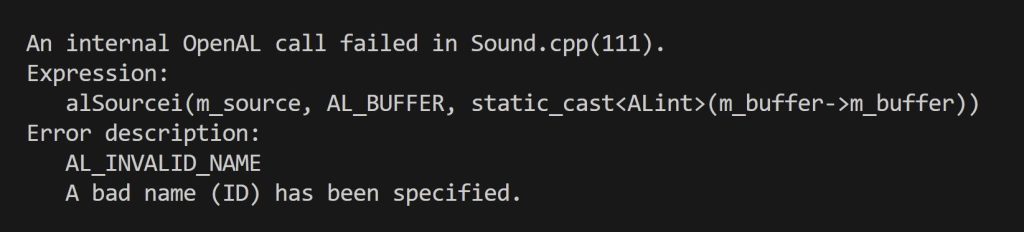

I faced many cross-platform development challenges. Ubuntu’s VM environment caused SFML audio issues due to OpenAL and ALSA incompatibilities. I debugged error logs, searched forums, and simplified my CMake files before deciding to migrate to Windows. There, I set up a native toolchain using Clang++ and statically linked dependencies using vcpkg and CMake’s built-in modules. Audio playback became reliable under Windows using WASAPI.

Version control is key to our project, and I picked up Git branching, rebasing, and conflict resolution to collaborate smoothly with teammates. I integrated my code with others’ systems, learning how to standardize file formats and maintain compatibility. I used YouTube tutorials, GitHub issues, and online forums to solve low-level bugs, and these informal resources were often more helpful than official docs. I also learned many Linux and Windows commands, C++ syntax, SFML function use cases, Git tricks, and methods for resolving dependency issues from GPT. Overall, I learned engine programming, dependency management, debugging tools, and teamwork throughout this semester.