Summary of Individual Contributions

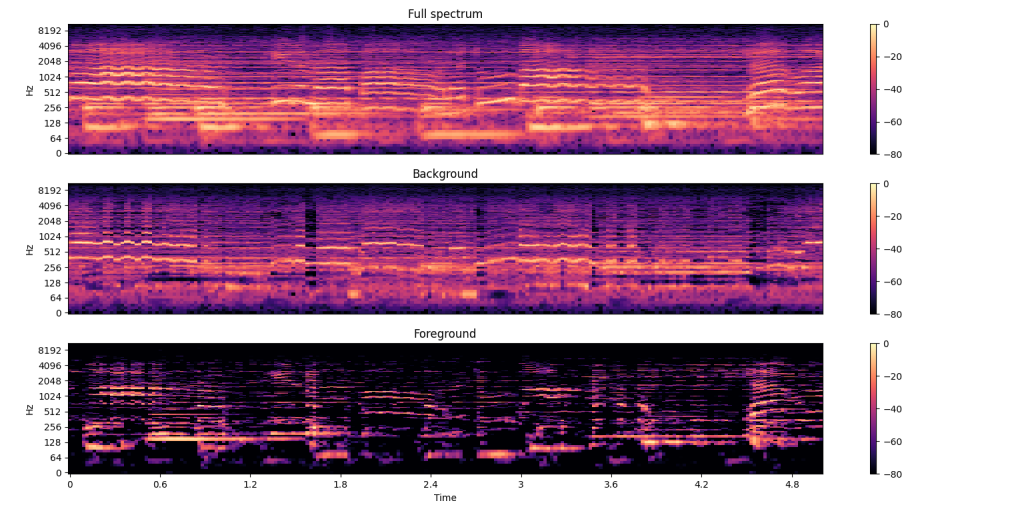

Michelle focused on improving rhythm detection by analyzing vocals separately from instrumentals. She implemented a vocal separation technique based on a similarity matrix and median spectrogram modeling, but testing revealed significant bleed-through of background sound into the separated vocals and high latency (~2.5 minutes of processing for a 5-minute song). After thorough testing across multiple instruments and vocal recordings, Michelle concluded that rhythm detection on vocals is generally less accurate than on instrumental tracks. Based on this finding, the team decided not to include vocal separation in the final system. Michelle is on track for the final demo and will conduct full system validation next week.
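For readers unfamiliar with the approach, the similarity-matrix/median idea can be sketched as follows. This is a simplified illustration in the spirit of the REPET-SIM method, not Michelle's implementation. It assumes a precomputed magnitude spectrogram given as a list of frames (the STFT is omitted), and the names `separate_vocals` and `k` are hypothetical. The full method derives a soft time-frequency mask and applies it to the complex STFT. This sketch simply subtracts magnitudes.

```python
import math
from statistics import median


def cosine_similarity(a, b):
    """Cosine similarity between two magnitude frames (lists of floats)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def separate_vocals(frames, k=3):
    """Split a magnitude spectrogram into (background, vocal) estimates.

    frames: list of time frames, each a list of per-frequency magnitudes
            (assumed precomputed; the STFT itself is not shown).
    k:      hypothetical parameter: how many most-similar frames feed the median.
    """
    n = len(frames)
    # 1. Frame-by-frame similarity matrix (n x n, so cost grows quadratically).
    sim = [[cosine_similarity(frames[i], frames[j]) for j in range(n)] for i in range(n)]

    background, vocal = [], []
    for i, frame in enumerate(frames):
        # 2. The k frames most similar to frame i (usually including itself).
        neighbours = sorted(range(n), key=lambda j: sim[i][j], reverse=True)[:k]
        # 3. The per-bin median over those frames models the repeating background.
        model = [median(frames[j][f] for j in neighbours) for f in range(len(frame))]
        # 4. The background cannot exceed the observed magnitude in any bin.
        bg = [min(m, v) for m, v in zip(model, frame)]
        background.append(bg)
        # 5. The non-repeating remainder is attributed to the vocals.
        vocal.append([v - b for v, b in zip(frame, bg)])
    return background, vocal
```

As a usage example, the frames `[[1.0, 1.0, 0.0], [1.0, 1.0, 0.0], [1.0, 1.0, 5.0], [1.0, 1.0, 0.0]]` contain a repeating accompaniment plus one burst of extra energy in the third bin of frame 2. With `k=3`, that burst lands in the vocal estimate and the other frames are left with no vocal energy. Because the similarity matrix is quadratic in the number of frames, this family of methods is costly on full-length songs. That is one plausible contributor to, though not a confirmed cause of, the latency reported above.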

Lucas concentrated on polishing and stabilizing the gameplay system. He debugged various game components, applied a cohesive color palette to improve the visual design, and fixed JSON file-loading issues so that the game correctly fetches user data. Lucas plans to spend the final week on thorough system-wide debugging and playtesting, and on attempting to integrate a MIDI keyboard to make gameplay more engaging. He is also preparing the final project deliverables: the poster, video, and report.

Yuhe worked on validating and stabilizing the Beat Map Editor. She conducted user testing with four testers (all with programming and music backgrounds) and received positive feedback on the editor’s usability and responsiveness. Yuhe manually created two new beatmaps (for Bionic Games – Short Version and Champion by Cosmonkey) and began a third beatmap, for a classical piece, to demonstrate genre versatility. She stress-tested the editor with dense rhythmic patterns and verified consistent note placement, waveform rendering, and file persistence on Windows using SFML.

Unit Tests Performed

1. Michelle’s Unit Tests:

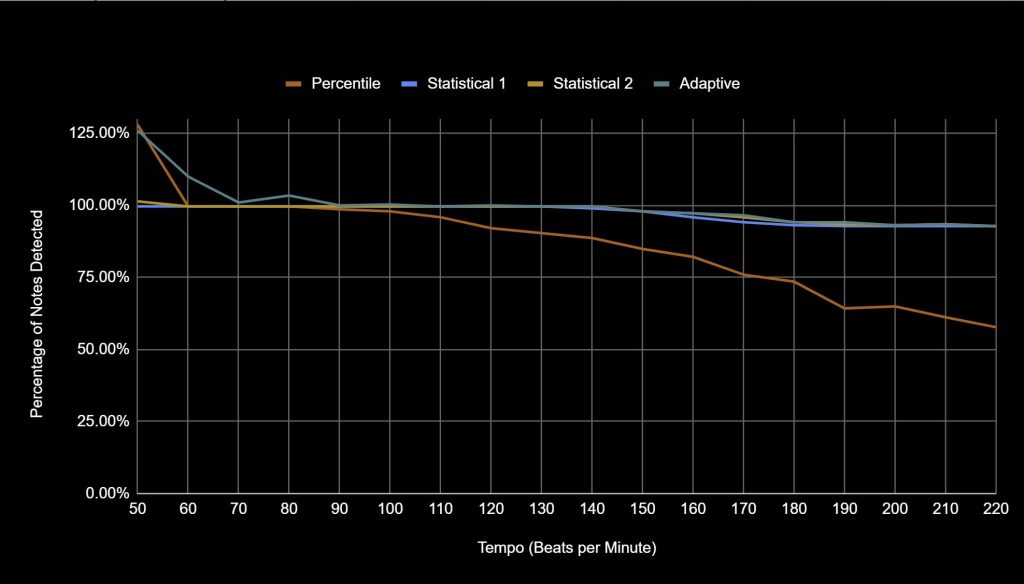

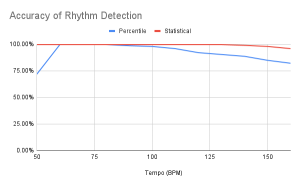

- Rhythm detection accuracy on simple, self-composed pieces (whole to 16th notes, 50–220 BPM) for piano, violin, guitar, and voice.

- Rhythm detection with dynamic variations (pianissimo to fortissimo).

- Rhythm detection on complex, dynamic-tempo self-composed pieces (whole to 64th notes, accelerandos/ritardandos).

- Rhythm detection accuracy (aurally evaluated) on real single-instrument pieces:
  - Bach Sonata No. 1, Bach Partita No. 1, Bach Sonata No. 2, Clair de Lune, Moonlight Sonata, Paganini Caprices 17 and 19.

- Rhythm detection accuracy on real multi-instrument pieces:
  - Brahms Piano Quartet No. 1, Dvořák Piano Quintet, Prokofiev Violin Concerto No. 1, Guitar Duo, Retro Arcade Song, Lost in Dreams, Groovy Ambient Funk.

- Rhythm detection with and without vocal separation on vocal pieces:
  - Piano Man, La Vie en Rose, Non Je ne Regrette Rien, Birds of a Feather, What Was I Made For, 小幸運 (A Little Happiness).

- Latency tests for all rhythm detection cases.
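For the self-composed pieces, the true note onsets are known from the score. Accuracy there could therefore be scored automatically by matching detected onsets to reference onsets within a tolerance window. The helper below is a hypothetical sketch, not the team's documented metric. The name `onset_f_measure`, the greedy one-to-one matching, and the 50 ms tolerance are all assumptions. The real-piece tests above were evaluated aurally instead.

```python
def onset_f_measure(reference, detected, tolerance=0.05):
    """Greedily match detected onsets to reference onsets (both in seconds).

    Each detection can match at most one reference onset. Returns
    (precision, recall, f_measure). `tolerance` is the hypothetical maximum
    timing error in seconds for a detection to count as correct.
    """
    reference = sorted(reference)
    detected = sorted(detected)
    used = [False] * len(detected)
    matches = 0
    for ref in reference:
        # Pick the closest unused detection inside the tolerance window.
        best, best_err = None, tolerance
        for idx, det in enumerate(detected):
            err = abs(det - ref)
            if not used[idx] and err <= best_err:
                best, best_err = idx, err
        if best is not None:
            used[best] = True
            matches += 1
    precision = matches / len(detected) if detected else 0.0
    recall = matches / len(reference) if reference else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)
```

Consider quarter notes at 120 BPM, with reference onsets `[0.0, 0.5, 1.0, 1.5]` and detections `[0.01, 0.52, 1.2, 1.49, 1.8]`. Three detections match, giving precision 0.6, recall 0.75, and an F-measure of about 0.67. A single number like this could be tracked across the dynamics and tempo-change cases above.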

2. Yuhe’s Unit Tests:

- UI responsiveness testing (target: <50 ms input-to-action latency).

- Beatmap save/load time (target: ≤5 s for standard songs up to 7 minutes long).

- Waveform synchronization testing across different sample rates and audio lengths.

- Stress testing dense note placement, waveform scrolling, and audio playback stability.

- Manual verification of beatmap integrity after saving/loading.
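The save/load timing check and the post-save integrity check could be combined into a single automated round-trip test. The sketch below assumes beatmaps are serialized as JSON. The game does load JSON data, but the editor's actual file format and the field names `bpm`, `notes`, `time`, and `lane` are hypothetical here. The densest test case is also an assumption: it combines the 220 BPM / 16th-note ceiling from the rhythm tests with the 7-minute song length from the save/load metric.

```python
import json
import os
import tempfile
import time

SAVE_LOAD_BUDGET_S = 5.0  # design metric from the test list above (≤5 s)


def make_dense_beatmap(bpm, minutes, subdivision=4, lanes=4):
    """Hypothetical worst-case map: one note per 16th note (subdivision=4)."""
    interval = 60.0 / bpm / subdivision  # seconds between consecutive notes
    count = int(bpm * subdivision * minutes)
    notes = [{"time": round(i * interval, 4), "lane": i % lanes} for i in range(count)]
    return {"bpm": bpm, "notes": notes}


def save_beatmap(path, beatmap):
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(beatmap, fh)


def load_beatmap(path):
    with open(path, "r", encoding="utf-8") as fh:
        return json.load(fh)


def round_trip_check(beatmap):
    """Save, reload, and compare; return (is_identical, elapsed_seconds)."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "beatmap.json")
        start = time.perf_counter()
        save_beatmap(path, beatmap)
        reloaded = load_beatmap(path)
        elapsed = time.perf_counter() - start
    return reloaded == beatmap, elapsed


if __name__ == "__main__":
    # Hypothetical densest case: 16th notes at 220 BPM for a 7-minute song.
    beatmap = make_dense_beatmap(bpm=220, minutes=7)
    identical, elapsed = round_trip_check(beatmap)
    print(f"{len(beatmap['notes'])} notes, identical={identical}, "
          f"{elapsed:.3f} s (budget {SAVE_LOAD_BUDGET_S} s)")
```

An exact equality check after reloading catches dropped or reordered notes that a manual inspection could miss. The timer covers only the file round trip, not editor-side rendering.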

3. Lucas’ Unit Tests:

- JSON file loading and path correctness verification.

- Game UI color palette integration and visual consistency.

- Basic gameplay functional debugging (note spawning, input handling, scoring).

Overall System Tests

- Full gameplay flow validation: Music upload ➔ Beatmap auto-generation ➔ Manual beatmap editing ➔ Gameplay execution.

- Cross-platform stability testing (Ubuntu/Windows) for Beat Map Editor and core game engine.

- Audio playback stress testing across multiple hardware setups.

- End-to-end latency and responsiveness validation under normal and stress conditions.

- Collection of early user-experience feedback on usability and intuitiveness.

Findings and Design Changes

Vocal Separation Analysis:

Testing revealed that rhythm detection accuracy on vocals is significantly worse than on instruments. Vocal separation also introduced unacceptable latency (~2.5 minutes of processing with separation vs. ~4 seconds without).

➔ Design Change: We decided not to incorporate vocal separation into the audio processing pipeline.
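The latency figures can be put in proportion with a rough calculation. This assumes both figures refer to the same 5-minute (300 s) song, which the report implies but does not state explicitly:

$$
\frac{t_{\text{sep}}}{t_{\text{no-sep}}} \approx \frac{150\ \text{s}}{4\ \text{s}} = 37.5,
\qquad
\frac{t_{\text{sep}}}{t_{\text{song}}} \approx \frac{150\ \text{s}}{300\ \text{s}} = 0.5
$$

Under that assumption, enabling separation would make preprocessing roughly 37 times slower. The wait would also be about half the song's own duration, and that cost would buy no accuracy gain on vocals.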

Beat Map Editor Validation:

Manual beatmap creation and user testing confirmed that the editor meets design metrics for latency, waveform rendering accuracy, and usability.

➔ Design Affirmation: Current editor architecture is robust; minor UX improvements (e.g., keyboard shortcuts) may be added post-demo.

Game Stability and File Handling:

Debugging of JSON fetching and general gameplay components improved reliability and reduced potential user-side errors.

➔ Design Improvement: Standardized file paths and error handling for smoother gameplay setup.
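The path standardization and error handling described above might look like the following. This is a hypothetical Python sketch for illustration only, since the team's actual loader is not shown in this report. The file name `user_data.json`, the default fields, and the function names are assumptions. Paths are resolved from one fixed base directory rather than the current working directory. A missing or malformed file falls back to defaults with a readable message instead of crashing the game.

```python
import copy
import json
from pathlib import Path

# Hypothetical defaults used when no valid user-data file is found.
DEFAULT_USER_DATA = {"high_scores": {}, "settings": {"volume": 1.0}}


def resolve_data_path(base_dir, relative_name):
    """Build an absolute path from a fixed base directory, not the CWD."""
    return (Path(base_dir) / relative_name).resolve()


def load_user_data(base_dir, relative_name="user_data.json"):
    """Load user data as a dict; return (data, error_message_or_None)."""
    path = resolve_data_path(base_dir, relative_name)
    defaults = copy.deepcopy(DEFAULT_USER_DATA)  # never hand out shared state
    try:
        with path.open("r", encoding="utf-8") as fh:
            data = json.load(fh)
    except FileNotFoundError:
        return defaults, f"no user data at {path}; using defaults"
    except json.JSONDecodeError as exc:
        return defaults, f"malformed JSON in {path}: {exc}"
    except OSError as exc:  # e.g. permission problems
        return defaults, f"could not read {path}: {exc}"
    if not isinstance(data, dict):
        return defaults, f"unexpected top-level JSON type in {path}"
    return data, None
```

Returning an error message alongside the data lets the caller decide whether to show it to the player or just log it. Either way, gameplay setup can continue with defaults.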