This week, I continued fine-tuning the audio processing algorithm. I kept testing with piano and guitar and also started testing voice and bowed instruments. Rhythm is harder to extract from these because their articulation can be much more legato. Using pitch information might make it possible to distinguish note onsets within slurs, for example, but this is most likely out of scope for our project.

I also found a flaw in calculating the minimum note length from the estimated tempo. For a song that most people would consider 60 BPM, librosa sometimes estimates 120 BPM. The two tempos are technically equivalent, but the doubled estimate makes the calculated minimum note length much smaller, which results in a lot of “double notes”: note detections directly after one another that come from a single sustained note. For the game experience, I believe it is better to have more false negatives than false positives, so I think a fixed minimum note length will generalize better. A threshold of 0.1 seconds seems to work well.
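As a rough illustration of the fixed minimum note length, here is a minimal sketch of how detected onsets could be filtered. The function name `enforce_min_note_length`, the sample onset times, and the choice to measure the gap from the last kept onset are all assumptions made for this example, not necessarily what the actual pipeline does.

```python
MIN_NOTE_LENGTH = 0.1  # seconds; the fixed threshold mentioned above


def enforce_min_note_length(onset_times, min_gap=MIN_NOTE_LENGTH):
    """Drop onsets that follow the previously kept onset by less than min_gap.

    Hypothetical helper: onset_times is a list of onset times in seconds.
    """
    kept = []
    for t in sorted(onset_times):
        # Keep the first onset, then only onsets far enough from the last kept one.
        if not kept or t - kept[-1] >= min_gap:
            kept.append(t)
    return kept


# Made-up onsets with two "double notes" (0.56 and 1.04).
onsets = [0.50, 0.56, 1.00, 1.04, 1.50]
filtered = enforce_min_note_length(onsets)
print(filtered)
```

Because the threshold does not depend on the tempo estimate, a 60 BPM versus 120 BPM disagreement no longer changes which detections are kept. The trade-off is that genuinely fast notes closer together than 0.1 seconds are dropped, which matches the preference for false negatives over false positives.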

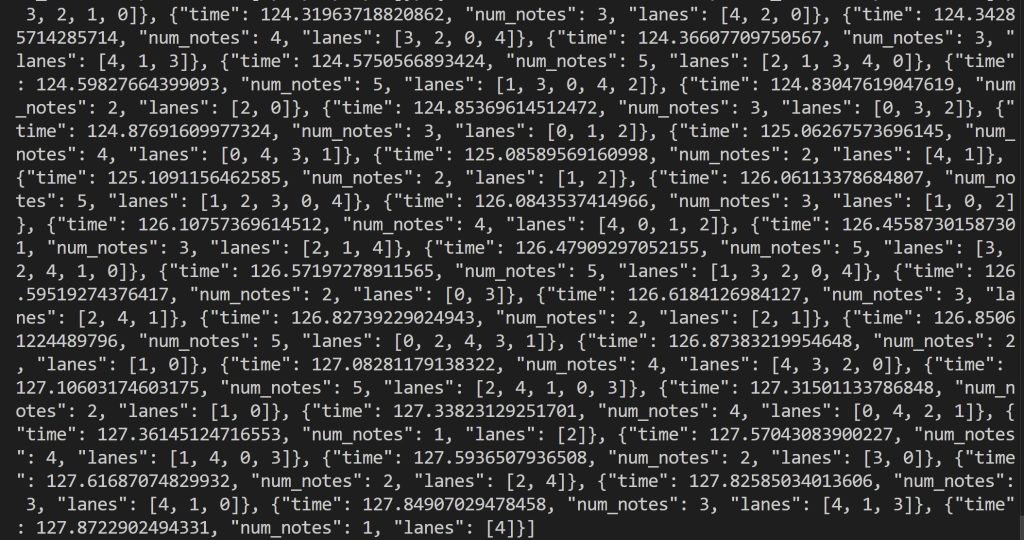

Additionally, in preparation for integrating the music processing with the game, I added some more information to the JSON output that bridges the two parts. Based on the number of notes at a given timestamp, the lanes from which the tiles will fall are chosen randomly.
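The lane assignment could look something like the sketch below. The number of lanes (4), the JSON field names `time` and `lanes`, and the function name `assign_lanes` are assumptions for illustration only; the real output format may differ.

```python
import json
import random

NUM_LANES = 4  # assumption: the actual number of lanes is not stated here


def assign_lanes(notes_per_timestamp, num_lanes=NUM_LANES, seed=None):
    """Pick distinct random lanes for each (time, note_count) pair.

    Hypothetical helper: returns a list of dicts ready to be dumped as JSON.
    """
    rng = random.Random(seed)  # a seed makes the chart reproducible
    events = []
    for time, count in notes_per_timestamp:
        count = min(count, num_lanes)  # cannot use more lanes than exist
        # sample() draws without replacement, so simultaneous notes never share a lane.
        lanes = sorted(rng.sample(range(num_lanes), count))
        events.append({"time": time, "lanes": lanes})
    return events


# Made-up input: one note at 0.5 s, two notes at 1.0 s, one note at 1.5 s.
chart = assign_lanes([(0.5, 1), (1.0, 2), (1.5, 1)], seed=0)
print(json.dumps(chart, indent=2))
```

Sampling without replacement keeps simultaneous tiles in separate lanes, and passing a seed would let the same song produce the same chart on every play.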

My progress is on schedule. Next week, I plan to finalize my work on processing the rhythm of single-instrument tracks and meet with my teammates to integrate our subsystems.