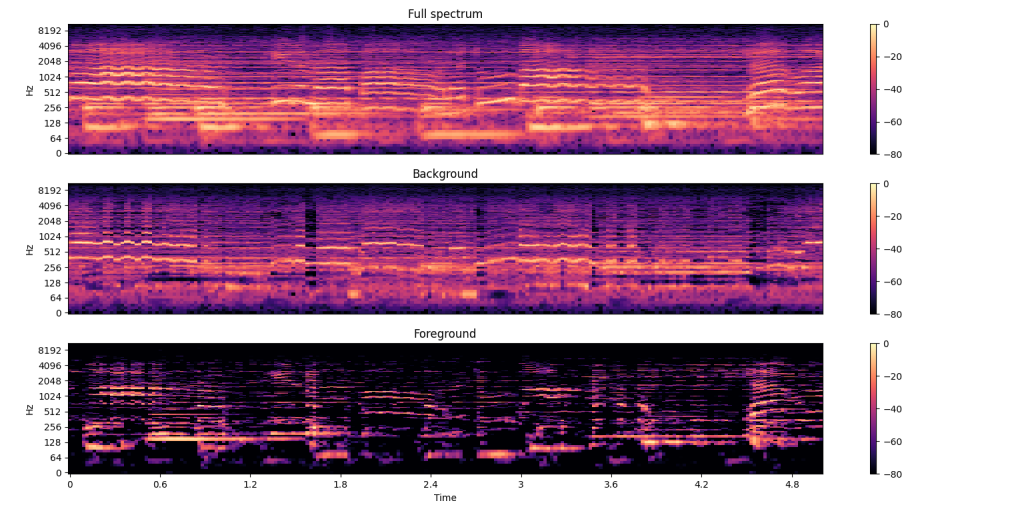

This week, I worked on analyzing the rhythm of just the vocals in pop songs. For vocal separation, I used a technique that builds a similarity matrix to identify repeating elements, computes a repeating spectrogram model by taking the median, and extracts the repeating patterns with time-frequency masking. The accompanying figure shows an example of the separation.
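
The steps described above can be sketched roughly as follows. This is a minimal NumPy illustration, not the code used in the project: the function name `repeating_mask`, the neighbor count `k`, and the mask exponent `power` are assumptions made for the example, and it takes a magnitude spectrogram as input rather than raw audio.

```python
import numpy as np

def repeating_mask(S, k=5, power=2.0, eps=1e-10):
    """Soft mask for the repeating (background) part of a magnitude
    spectrogram S with shape (n_freq, n_frames).

    Hypothetical helper for illustration; k and power are assumed values.
    """
    # 1. Similarity matrix: cosine similarity between every pair of frames.
    norms = np.linalg.norm(S, axis=0, keepdims=True)
    unit = S / np.maximum(norms, eps)
    sim = unit.T @ unit  # shape (n_frames, n_frames)

    # 2. For each frame, find the k most similar frames (itself included).
    neighbors = np.argsort(-sim, axis=1)[:, :k]  # shape (n_frames, k)

    # 3. Repeating model: per-bin median over those similar frames.
    #    S[:, neighbors] has shape (n_freq, n_frames, k).
    W = np.median(S[:, neighbors], axis=2)

    # The repeating part cannot contain more energy than the mixture.
    W = np.minimum(W, S)

    # 4. Time-frequency soft mask: close to 1 where the repeating model
    #    explains the mixture, close to 0 where something else dominates.
    residual = np.maximum(S - W, 0.0)
    bg = W ** power
    fg = residual ** power
    return bg / (bg + fg + eps)
```

Multiplying the mixture spectrogram by `1 - mask` would give an estimate of the non-repeating foreground (ideally the vocals), and multiplying by `mask` an estimate of the repeating background.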

With this method, a significant amount of the background still bleeds into the foreground. Additionally, audio processing with vocal separation takes on average 2.5 minutes for a 5-minute song, compared to 4 seconds without it. Even on a single-voice a cappella track, the rhythm detection does not perform as well as it does on a piano or guitar song, for example, since sung notes generally have less distinct onsets. Thus, I think users who want to play the game with songs that contain vocals are better off using the original rhythm detection and refining the result with the beat map editor, or using the editor to build a beat map from scratch.
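
To illustrate why soft onsets are harder to detect, here is a small sketch comparing a plucked-style amplitude envelope with a sung-style one. It is not the project's rhythm detection; the function `onset_strength` and both synthetic envelopes are assumptions made for the example, using a simple rectified frame-to-frame difference as the onset measure.

```python
import numpy as np

def onset_strength(envelope):
    """Half-wave rectified frame-to-frame increase of an amplitude envelope.

    Hypothetical, simplified onset measure for illustration only.
    """
    diff = np.diff(envelope, prepend=envelope[0])
    return np.maximum(diff, 0.0)

frames = np.arange(50)

# Plucked or struck note (assumed shape): jumps to full level in one
# frame, then decays.
plucked = np.where(frames >= 10, np.exp(-(frames - 10) / 10.0), 0.0)

# Sung note (assumed shape): ramps up gradually over 15 frames.
sung = np.clip((frames - 10) / 15.0, 0.0, 1.0)

plucked_peak = onset_strength(plucked).max()
sung_peak = onset_strength(sung).max()
```

Both notes reach the same level, but the sung note spreads its rise over many frames, so its peak onset strength is much smaller and is easier to lose below a detection threshold.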

I am on schedule and on track with our goals for the final demo. Next week, I plan to conduct some user testing of the whole system to validate our use case requirements and ensure there are no bugs in preparation for the demo.