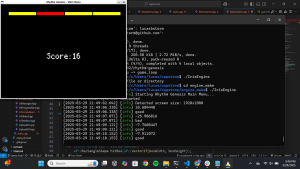

This week, we made progress toward integrating the audio processing system with the game engine. We implemented a custom JSON parser in C++ that loads the beatmap data generated by our signal processing pipeline, so songs can now be played as fully interactive game levels. We also added a results splash screen at the end of gameplay. It currently displays basic performance metrics and will later include more detailed graphs and stats.
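To give a rough idea of what loading a beatmap involves, here is a minimal sketch. It is not our actual parser and not a general JSON parser: it assumes a flat array of note objects with hypothetical `"time"` (seconds) and `"lane"` keys, and the names `Note`, `readNumber`, and `parseNotes` are made up for illustration.

```cpp
#include <cassert>
#include <cstdlib>
#include <string>
#include <vector>

// Hypothetical in-memory note; the real beatmap schema may carry more fields.
struct Note {
    double timeSeconds;
    int lane;
};

// Reads the number that follows "key": inside json[from, limit).
// Returns false if the key or a number after it is not found in that range.
static bool readNumber(const std::string& json, const std::string& key,
                       std::size_t from, std::size_t limit, double& out) {
    const std::string quoted = "\"" + key + "\"";
    const std::size_t k = json.find(quoted, from);
    if (k == std::string::npos || k >= limit) return false;
    const std::size_t colon = json.find(':', k + quoted.size());
    if (colon == std::string::npos || colon >= limit) return false;
    const char* start = json.c_str() + colon + 1;
    char* end = nullptr;
    out = std::strtod(start, &end);  // strtod skips leading whitespace
    return end != start;
}

// Scans flat {...} objects (no nesting inside a note) and collects the notes.
std::vector<Note> parseNotes(const std::string& json) {
    std::vector<Note> notes;
    std::size_t pos = 0;
    while (true) {
        const std::size_t open = json.find('{', pos);
        if (open == std::string::npos) break;
        const std::size_t close = json.find('}', open);
        if (close == std::string::npos) break;
        double t = 0.0;
        double lane = 0.0;
        if (readNumber(json, "time", open, close, t) &&
            readNumber(json, "lane", open, close, lane)) {
            notes.push_back({t, static_cast<int>(lane)});
        }
        pos = close + 1;
    }
    return notes;
}
```

A real parser also has to handle nested objects, strings containing braces, and malformed input, which this sketch deliberately ignores.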

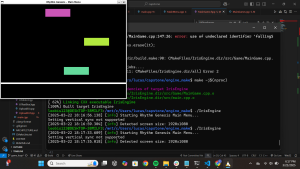

In the editor, we refined key systems for waveform visualization and synchronization. We improved the `timeToPixel()` and `pixelToTime()` mappings so that the playhead and note grid align accurately with audio playback. We also improved the snapping logic, which quantizes note timestamps to a grid derived from the BPM, and introduced an alternative input method for placing notes at specific times and lanes, in addition to drag-and-drop.
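For readers curious about the mappings and snapping, here is a minimal sketch of one way they can work. Only the function names `timeToPixel()` and `pixelToTime()` come from our editor; the `TimelineView` struct, its fields, the signatures, and `snapToGrid()` are assumptions made for this example. The key property is that the two mappings are exact inverses of each other, which keeps the playhead and grid from drifting apart.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical view state for the editor timeline.
struct TimelineView {
    double scrollSeconds;    // time shown at the left edge (x = 0)
    double pixelsPerSecond;  // horizontal zoom level
};

// Maps a time in seconds to a horizontal pixel position.
double timeToPixel(const TimelineView& view, double seconds) {
    assert(view.pixelsPerSecond > 0.0);
    return (seconds - view.scrollSeconds) * view.pixelsPerSecond;
}

// Inverse of timeToPixel: maps a pixel position back to a time in seconds.
double pixelToTime(const TimelineView& view, double x) {
    assert(view.pixelsPerSecond > 0.0);
    return view.scrollSeconds + x / view.pixelsPerSecond;
}

// Snaps a timestamp to the nearest grid line. One beat lasts 60 / bpm seconds,
// and each beat is split into subdivisionsPerBeat equal steps.
// offsetSeconds is the time of the first beat in the audio file.
double snapToGrid(double seconds, double bpm, int subdivisionsPerBeat,
                  double offsetSeconds = 0.0) {
    assert(bpm > 0.0 && subdivisionsPerBeat > 0);
    const double step = 60.0 / bpm / subdivisionsPerBeat;
    return offsetSeconds + std::round((seconds - offsetSeconds) / step) * step;
}
```

At 120 BPM with four subdivisions per beat, the grid spacing is 0.125 s, so a note dropped at 1.03 s would snap to 1.0 s.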

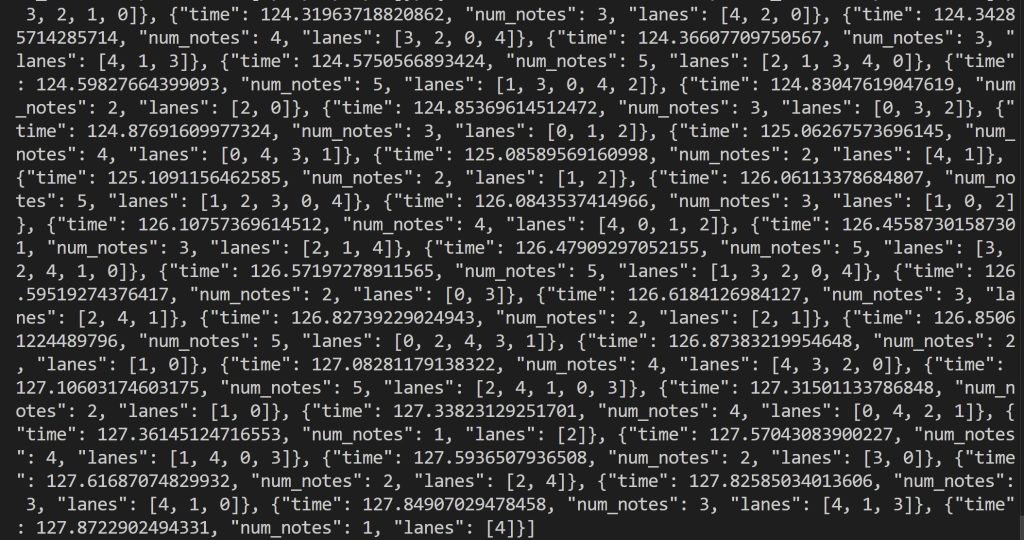

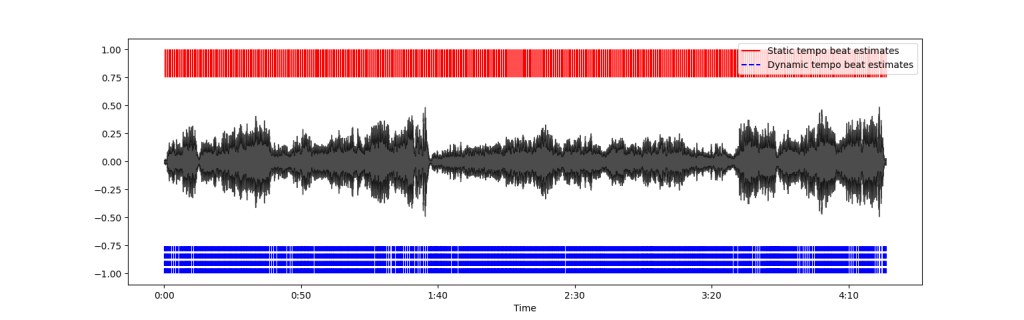

On the signal processing side, we expanded rhythm extraction testing to voice and bowed instruments, and we addressed tempo estimation inconsistencies by enforcing a fixed minimum note length of 0.1 s. We also updated the JSON output to include lane mapping information for smoother integration with the game.
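One way to picture the minimum note length is as a filter over detected onset times. The sketch below is an illustration of that idea, not the code in our pipeline: the function name `enforceMinNoteLength` is hypothetical, and it assumes the onsets are already sorted in ascending order and that an onset arriving too soon after the last kept one is simply dropped.

```cpp
#include <cassert>
#include <vector>

// Keeps only onsets that are at least minLength seconds after the previously
// kept onset. Assumes `onsets` is sorted in ascending order.
std::vector<double> enforceMinNoteLength(const std::vector<double>& onsets,
                                         double minLength = 0.1) {
    assert(minLength >= 0.0);
    std::vector<double> kept;
    for (double t : onsets) {
        if (kept.empty() || t - kept.back() >= minLength) {
            kept.push_back(t);
        }
    }
    return kept;
}
```

With this rule, onsets at 0.0, 0.05, 0.12, 0.5, and 0.55 s reduce to 0.0, 0.12, and 0.5 s, which removes the very short gaps that can otherwise skew a tempo estimate.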

Next week, we plan to connect the main menu to gameplay, polish the UI, and fully integrate all system components.