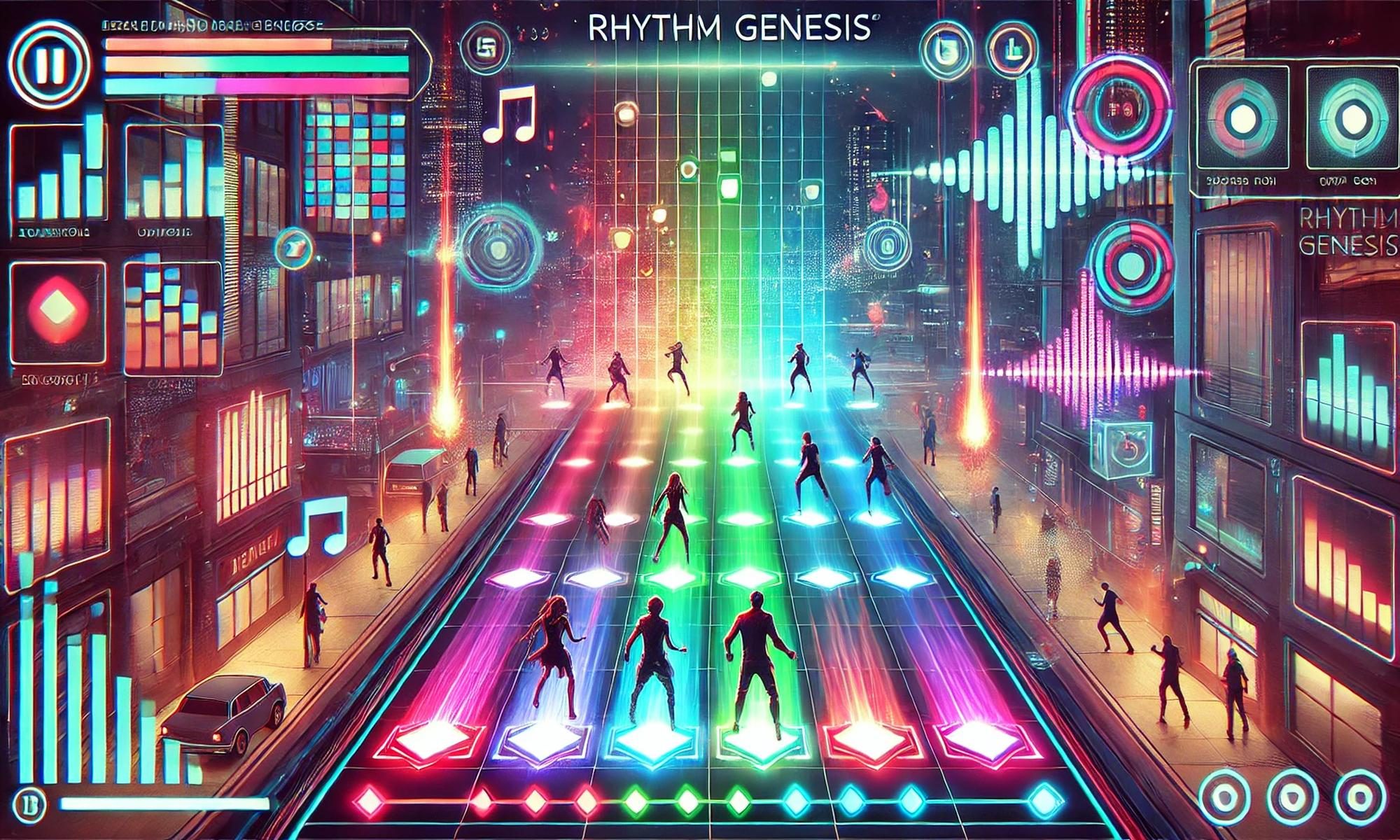

This week, we began implementing the Rhythm Genesis game in Unity, including the user interface and the core game loop. We also continued work on tempo and beat tracking analysis, calculating the current beat alignment error.

- Yuhe worked on the User Interface Layer of the game, implementing the main menu and song selection.

- Lucas focused on making the core game loop, implementing the logic for the falling tiles.

- Michelle worked on verification methods for beat alignment error in audio analysis.

One challenge we are currently facing is figuring out the best method of version control. We initially tried using GitHub, but this did not work out since Unity projects are so large. We are now using Unity’s built-in Plastic SCM, which is not very easy to use. Another challenge is that songs with faster tempos are showing beat alignment error outside of our acceptance criteria. We will need to spend more time fine-tuning how we detect beat timestamps, especially for fast songs. As of now, there are no schedule changes, as the team is on track with our milestones.
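To make the beat alignment error concrete, here is a minimal Python sketch of one way it could be measured: for each detected beat, take the distance to the nearest reference beat, then average. This is an illustration only, not our actual analysis code. The function names, the sample timestamps, and the idea of expressing the error as a fraction of the beat period are all assumptions made for this example.

```python
from bisect import bisect_left


def beat_alignment_errors(detected, reference):
    """Absolute distance (seconds) from each detected beat to its nearest reference beat."""
    if not reference:
        raise ValueError("reference beat list must not be empty")
    ref = sorted(reference)
    errors = []
    for t in detected:
        i = bisect_left(ref, t)
        # The nearest reference beat is either just before or just after t.
        candidates = ref[max(i - 1, 0): i + 1]
        errors.append(min(abs(t - r) for r in candidates))
    return errors


def mean_error_ms(detected, reference):
    """Mean beat alignment error in milliseconds."""
    errs = beat_alignment_errors(detected, reference)
    if not errs:
        raise ValueError("detected beat list must not be empty")
    return 1000.0 * sum(errs) / len(errs)


def error_as_fraction_of_beat(error_seconds, bpm):
    """Express an error as a fraction of one beat period (60 / bpm seconds)."""
    return error_seconds / (60.0 / bpm)


# Hypothetical data: a 120 BPM reference grid and slightly offset detections.
reference_beats = [0.0, 0.5, 1.0, 1.5]
detected_beats = [0.01, 0.52, 0.97, 1.5]
print(round(mean_error_ms(detected_beats, reference_beats), 3))
```

One reason fast songs may be harder: the same absolute error takes up a larger share of each beat as the tempo rises, since the beat period `60 / bpm` shrinks. Under this sketch, a 15 ms error is 3% of a beat at 120 BPM but 5% at 200 BPM.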

A. Written by Yuhe Ma

Although video games may not directly affect public health or safety, Rhythm Genesis may benefit its users' mental well-being and cognitive health. Rhythm games are known to help improve hand-eye coordination, reaction time, and focus. Our game offers an engaging, music-driven experience that enhances people’s dexterity and rhythm skills, which can be useful for both entertainment and rehabilitation. Music itself is known to reduce stress and boost mood, and by letting users upload their own songs, Rhythm Genesis creates a personalized, immersive experience that promotes relaxation and enjoyment. From a welfare standpoint, Rhythm Genesis makes rhythm gaming more accessible by offering a free, customizable alternative to mainstream games that lock users into pre-set tracks or costly DLCs. This lowers the barrier to entry, allowing more people to enjoy rhythm-based gameplay. By supporting user-generated content, our game encourages creativity and community interaction, helping players develop musical skills and express themselves. In this way, Rhythm Genesis is not only a game but also a tool for cognitive engagement, stress relief, and self-expression.

B. Written by Lucas Storm

While at the end of the day Rhythm Genesis is just a video game, there are certainly things to consider pertaining to social factors. Video games provide people across cultural and social backgrounds a place to connect – whether that be via the game itself or just finding common ground thanks to sharing an interest – which in my opinion is a very valuable thing. Rhythm Genesis, though not a game that will likely incorporate online multiplayer, will still allow those who are passionate about music and rhythm games to connect with each other through their common interest and connect with their favorite songs and artists through the gameplay.

C. Written by Michelle Bryson

As a stretch goal, our team plans to publish Rhythm Genesis on Steam, where users will be able to download and play the game for free. Steam is a widely used game distribution service, so the game will be accessible to a wide range of users globally. Additionally, the game will be designed so that it can be played with only a laptop keyboard. We may consider adding support for game controllers, but we will still maintain the full experience with only a keyboard, keeping the game as accessible and affordable as possible.