While we’re still waiting to acquire the EGG sensor, my goal is to get a head start on planning the recording feature and sheet music tracking. Ideally, the user will be able to input sheet music and a recording of themselves singing, and when they review their feedback in the app, the two will be synchronized. While the sheet music element wasn’t initially something we deemed important, it became a priority because it would dramatically increase usability: the user can interact visually with the app’s feedback rather than being forced to relisten to a potentially lengthy audio file to get context for the EGG signal they see. It’s also a significant way of differentiating our project from our main existing “competitor,” VoceVista, which doesn’t have this feature. Additionally, if the EGG plan falls through for some reason, this mechanism will still be useful if we end up measuring a different aspect of vocal health.

General Brainstorm

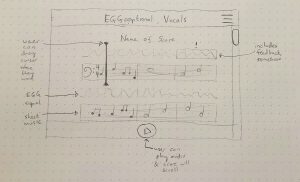

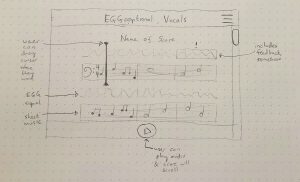

Here’s what I’m envisioning, roughly, for this particular view of the app. Keep in mind that we don’t yet know how the feedback is going to look, or how we want to display the EGG signal itself (is the waveform showing vocal fold contact actually useful? Maybe we just want to show the closed quotient over time?). But that’s not my problem right now. Also note that there ought to be an option for inputting and viewing an audio/EGG signal without a corresponding sheet music input, as that’s not always going to be possible or desirable.

The technical challenges here are far from trivial. Namely:

- How do we parse sheet music (what should be sung)?

- How do we parse the audio signal input (what’s actually being sung)?

- How do we sync these things up?

As I researched how, exactly, this synchronization could be achieved, the prospect of a complicated system using pitch recognition and onset detection seemed daunting (though we do hope to incorporate pitch detection later on as one of our analytic metrics). Fully converting the input signal into a MIDI file, for example, is difficult to do accurately even with established software; it’s basically a project in its own right.

So here’s an idea: what if the whole synchronization is just based on tempo? If we know exactly how long each beat is, and can detect when the vocalist starts singing, we can match each measure to a specific timestamp in the audio recording without caring about the content of what’s actually being sung. This means that all the app needs to know is:

- Tempo

  - This should be adjustable by the user

  - Ideally, the user should be able to choose which beats they hear the click on (e.g., once per measure vs. every beat)

- Time signature

  - Could be input by the user, or extracted from the score

- Where measure divisions occur in the score

  - These should be detectable in a MusicXML file (the common file format used by score editors, and probably what we’ll require for the sheet music input)

  - However, if measure divisions are the only thing we need to extract from the score, reading them off a PDF seems more doable than the full parsing (notes, rhythms, etc.) I originally assumed was necessary

    - But let’s not overcomplicate!

- When the vocalist starts singing

  - Onset detection

  - Or, the recording page could already include the sheet music, provide a countdown, and scroll through the music as it’s sung along to the click track. That could be cool, and it’s a similar mechanism to what we’d use for playback.
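To make the tempo-only idea concrete, here is a minimal sketch in Python (our planned backend language). It assumes a constant tempo, a single time signature, and that the BPM counts the same note value as the time signature’s beat. The function names and the RMS threshold are hypothetical, and `first_onset` is a deliberately naive energy threshold standing in for real onset detection: it only reports when the recording first gets loud, not when notes change.

```python
import math


def first_onset(samples, sample_rate, frame_size=1024, threshold=0.02):
    """Return the time in seconds of the first frame whose RMS level exceeds
    `threshold`, or None if the signal never gets that loud.

    `samples` is a sequence of floats in [-1.0, 1.0]. The threshold is a
    hypothetical value that would need tuning against real recordings.
    """
    for start in range(0, len(samples) - frame_size + 1, frame_size):
        frame = samples[start:start + frame_size]
        rms = math.sqrt(sum(x * x for x in frame) / frame_size)
        if rms > threshold:
            return start / sample_rate
    return None


def measure_start_times(num_measures, bpm, beats_per_measure, start_time=0.0):
    """Timestamp (s) at which each measure begins, given a constant tempo."""
    seconds_per_measure = beats_per_measure * 60.0 / bpm
    return [start_time + i * seconds_per_measure for i in range(num_measures)]


if __name__ == "__main__":
    rate = 8000
    # Silence followed by a steady "sung" signal.
    audio = [0.0] * 4096 + [0.3] * 4096
    onset = first_onset(audio, rate)
    print(onset)
    print(measure_start_times(4, bpm=120, beats_per_measure=4, start_time=onset))
```

With a count-in like the one described above, the start time would already be known and `first_onset` would only be a sanity check. Its resolution is one frame, so about 23 ms with 1024-sample frames at 44.1 kHz.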

Potential difficulties/downsides:

- Irregularities in beats per measure

  - Pickup notes that make the first measure shorter than a full measure

  - Songs that change time signature partway through, repeats, D.C. al Coda, etc.

  - We just won’t support these things, unless, maybe, time signature changes come up a lot

- A strict tempo reduces the vocalist’s expressive toolbox

  - Little to no rubato, no tempo changes

  - Could make vocalists self-conscious, so they don’t sing like they normally would

  - How much of an issue is this? A question for our SoM friends

  - Should we sacrifice some synchronization accuracy to give vocalists a bit of leeway?

  - If an individual really hates it, or it’s not feasible for a particular song, they could just turn off sheet music sync for that specific recording. Not necessarily a deal breaker for the feature as a whole.

- Click track is distracting

  - If it’s in an earpiece, it could make it harder to hear oneself

    - Using something blinking on screen, instead of an audio track, is another option

    - And/or, will singing opera make it hard to hear the click track?

  - If it’s played on a speaker, it could be distracting on playback, plus make the resulting audio signal more difficult to parse for things like pitch

    - This could be mitigated somewhat by using a good microphone?

    - Again, the visual signal is an option?

  - Latency between producing the click track and hearing it, particularly if using an earpiece
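The pickup and time-signature problems get smaller if each measure carries its own length instead of sharing one time signature. A minimal sketch, assuming the tempo stays constant and every measure’s length is expressed in the beat unit the BPM counts; the function names are hypothetical. Repeats and D.C. al Coda remain out of scope, since they change the order in which measures are played, not just their lengths.

```python
from bisect import bisect_right


def variable_measure_starts(beats_per_measure, bpm, start_time=0.0):
    """Start time (s) of each measure when measures can differ in length.

    `beats_per_measure` has one entry per measure, so a one-beat pickup is
    just a 1 at the front and a time-signature change is just a different
    number further along. Returns (starts, end_time), where end_time is
    when the last measure ends.
    """
    seconds_per_beat = 60.0 / bpm
    starts = []
    t = start_time
    for beats in beats_per_measure:
        starts.append(t)
        t += beats * seconds_per_beat
    return starts, t


def measure_at(time_s, starts, end_time):
    """Index of the measure sounding at `time_s`, or None outside the piece."""
    if time_s >= end_time:
        return None
    # bisect_right finds the first start strictly after time_s; the measure
    # we are in is the one just before it.
    i = bisect_right(starts, time_s) - 1
    return i if i >= 0 else None
```

For example, `variable_measure_starts([1, 4, 4, 3], bpm=120)` describes a one-beat pickup, two bars of 4/4, and a final bar of 3/4; `measure_at` then maps a playback timestamp back to a measure index with a binary search.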

Despite these drawbacks, the overall simplicity of this system is a major upside, and even if this particular mechanism ends up being insufficient in some way, I think it’ll provide a good foundation for our sheet music syncing. My main concerns are how it might limit vocalists’ expression and how distracting a click track might be to the singer. I want to bring these up at next week’s meeting with the School of Music folks.

Workflow

In the meantime, this will be my order of operations:

- Finalize framework choice

  - We’ve decided that we will (almost certainly) build a desktop app with a Python backend. The complications of a web app seem unnecessary for a project that only needs to run on a few devices in a lab setting, and Python has accessible, expansive libraries for signal processing.

  - The frontend, however, is still up in the air. This is going to be my main domain, so it needs to be something I’m comfortable learning relatively quickly.

- Set up a basic “Hello World”

- Figure out a basic page format

  - Header

  - Menu

- Create the fundamental music view

  - Both the recording and playback pages will use the same foundational format

  - Parse & display the MusicXML file input

    - This could be very complicated. I have no idea.

  - Separate the music into lines, with room for modularity (e.g., to insert EGG signal data)

  - Figure out where measure separations are

    - Will measures ultimately need to be equal widths for the sake of signal matching? Not sure yet whether this is crucial

  - Create a cursor that moves left to right over the score in time, given a tempo

    - On the record page, the tempo will be given explicitly by the user. On the playback page, the tempo will be part of the recording’s metadata

    - How the cursor aligns with the measures will depend on the MusicXML file format and what is possible. I could just compute the space between barlines and move the cursor smoothly across it, given the amount of time per measure

  - Deal with top-to-bottom scrolling on the page

- Create record page

  - Set tempo

  - Record audio

    - While the music scrolls by, or by itself

  - Countdown

  - Backing click track

- Create playback page

  - Audio sync with measures + playback

  - Play/pause button

  - Drag cursor
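The record and playback pages both boil down to turning a clock time into something on screen. A minimal sketch of two pieces of that, assuming a constant tempo, a count-in of whole measures, and a hypothetical per-measure layout record giving the measure’s line (row) and left/right x-coordinates after rendering. `click_schedule` covers the countdown and the every-beat vs. downbeat-only option; `cursor_position` interpolates linearly across each measure, i.e., moving the cursor smoothly across a measure given the time per measure. All names are hypothetical.

```python
from bisect import bisect_right


def click_schedule(bpm, beats_per_measure, num_measures, count_in_measures=1,
                   downbeats_only=False):
    """Click times (s) measured from when recording starts, plus the time the
    first measure of the score begins (i.e., when the count-in ends)."""
    seconds_per_beat = 60.0 / bpm
    total_beats = (count_in_measures + num_measures) * beats_per_measure
    step = beats_per_measure if downbeats_only else 1
    clicks = [beat * seconds_per_beat for beat in range(0, total_beats, step)]
    music_start = count_in_measures * beats_per_measure * seconds_per_beat
    return clicks, music_start


def cursor_position(time_s, measure_starts, measure_durations, measure_boxes):
    """Where to draw the cursor at `time_s`.

    measure_boxes[i] is a hypothetical (row, x_left, x_right) layout record
    for measure i on the rendered page; `row` tells the view which line of
    music to scroll to. Returns (row, x), or None before the first measure.
    """
    i = bisect_right(measure_starts, time_s) - 1
    if i < 0:
        return None
    fraction = (time_s - measure_starts[i]) / measure_durations[i]
    # Clamp so the cursor parks at the final barline once the piece ends.
    fraction = min(max(fraction, 0.0), 1.0)
    row, x_left, x_right = measure_boxes[i]
    return row, x_left + fraction * (x_right - x_left)
```

Because each measure carries its own x-range, this doesn’t require equal-width measures. `measure_starts` just has to use the same clock as `time_s`; on the record page, that means offsetting by `music_start`.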

Picking Framework & Technologies

I considered several options for a frontend framework for the Python desktop app. I was initially interested in Electron.js, as it would work well with my existing knowledge of HTML/CSS/JavaScript and would allow a high level of customizability. However, Electron.js has high overhead: it essentially runs an entire web browser instance just to run the app. I also considered PyQt, but I haven’t used that framework before, and its idiosyncrasies seemed likely to mean a pretty steep learning curve. So I’ve decided to proceed with Tkinter, Python’s standard GUI toolkit. In my small experiments so far, it seems straightforward, and since I’m starting with some of the more complicated views of my GUI, I think I’ll be able to tell fairly quickly whether it will be sufficient for my purposes.

Finally, I wanted to learn how to parse and display MusicXML files (the format exported by score editors like MuseScore) in a Python program. I’ve started by learning music21, a package developed in collaboration with the MIT music department that offers a wide range of functionality for working with MusicXML files. So far I’ve only had time to get the library set up, so I haven’t done much with it directly yet. Till next week!
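Since the tempo-based sync only needs measure boundaries, it may be worth noting that this much can be read from an uncompressed MusicXML file with Python’s built-in `xml.etree.ElementTree`, as a lighter-weight stand-in for music21 (this is not how music21 does it). A minimal sketch, assuming a partwise score (`<score-partwise>`) with no XML namespace, where the first part’s measures are representative; compressed `.mxl` files would need unzipping first, and `measure_lengths` is a hypothetical name. Lengths come from walking each measure’s note durations (including `<backup>`/`<forward>` between voices) rather than trusting the time signature, so a pickup reports its actual length. MusicXML’s `implicit="yes"` attribute usually flags pickups as well, though it can also mark other unnumbered partial measures.

```python
import xml.etree.ElementTree as ET


def measure_lengths(musicxml_text):
    """Return [(measure_number, quarter_length, is_implicit), ...] for the
    first <part> of a partwise MusicXML score."""
    root = ET.fromstring(musicxml_text)
    part = root.find("part")
    divisions = 1.0  # duration units per quarter note; set by <attributes>
    result = []
    for measure in part.findall("measure"):
        position = 0.0  # current offset within the measure, in divisions
        longest = 0.0   # furthest offset reached by any voice
        for el in measure:
            if el.tag == "attributes":
                div = el.find("divisions")
                if div is not None:
                    divisions = float(div.text)
            elif el.tag == "note":
                duration = el.find("duration")
                # Grace notes have no <duration>; <chord/> notes start together
                # with the previous note, so neither advances the position.
                if duration is None or el.find("chord") is not None:
                    continue
                position += float(duration.text)
            elif el.tag == "backup":
                position -= float(el.find("duration").text)
            elif el.tag == "forward":
                position += float(el.find("duration").text)
            longest = max(longest, position)
        is_implicit = measure.get("implicit") == "yes"
        result.append((measure.get("number"), longest / divisions, is_implicit))
    return result
```

Quarter lengths convert to seconds by multiplying by 60 / bpm, assuming the tempo is given in quarter notes per minute.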