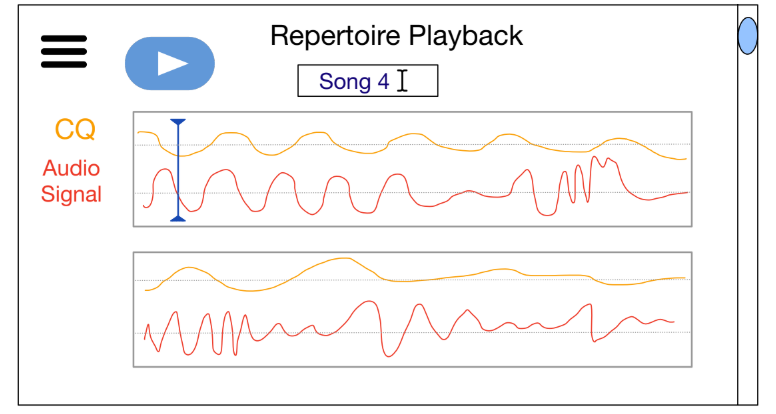

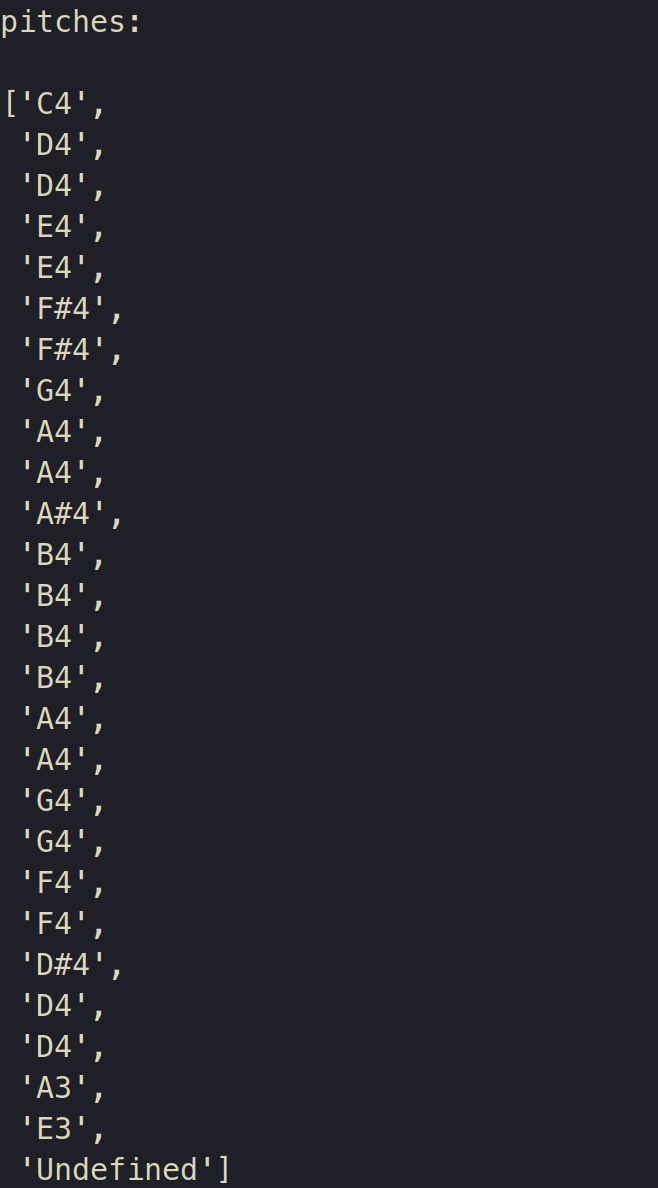

On Monday during class, I got a scraper working so that we can access the EGG data output; I used the pytesseract library to do so. On Tuesday, I had a meeting with the manufacturers of the EGG, and we were able to debug the setup and get both the EGG signal and the microphone signal working on the EGG. However, we ran into an issue: the EGG's electrode-placement LED indicator is broken, which means we cannot tell whether we are getting the best electrode signal from the larynx placement while calibrating to the user. Another issue is that we are unable to use the EGG's dual-channel feature, which we believe may be because we need a USB to USB-C adapter to plug into the EGG. Fixing this is not a priority. For now, we record the mic on one laptop and the EGG signal on a different laptop, then overlay the two recordings based on the audio timestamp of where we start. We start both recordings by pressing the record buttons at the same time, so the alignment won't be perfectly accurate, but it will be usable for the time being. I am now working on merging the data sheets into a cleanly formatted file, as well as recording more data from our singers. So far we have had one two-hour session with a singer, but we would like to record a variety of singers before our meeting on Wednesday.
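
Because the mic and EGG are recorded on separate laptops, merging them amounts to shifting one timeline by a start offset and pairing each sample with its nearest neighbor in the other recording. The sketch below illustrates that idea under stated assumptions: each recording has already been exported as a time-sorted list of `(time_s, value)` pairs, and the function name `align_recordings`, the `egg_offset_s` and `tolerance_s` parameters, and their defaults are all hypothetical, not part of our current tooling.

```python
from bisect import bisect_left


def align_recordings(mic_samples, egg_samples, egg_offset_s=0.0, tolerance_s=0.05):
    """Pair each mic sample with the nearest EGG sample in time (hypothetical helper).

    mic_samples: time-sorted (time_s, mic_value) pairs from the mic laptop.
    egg_samples: time-sorted (time_s, closed_quotient) pairs from the EGG laptop.
    egg_offset_s: seconds added to EGG timestamps to put them on the mic's clock
        (roughly 0 when both record buttons are pressed together).
    tolerance_s: largest time gap still accepted as a match.
    Returns (time_s, mic_value, closed_quotient) rows on the mic's clock.
    """
    shifted = [(t + egg_offset_s, cq) for t, cq in egg_samples]
    egg_times = [t for t, _ in shifted]
    merged = []
    for t, mic_value in mic_samples:
        # Index of the first EGG timestamp >= t; the nearest neighbor is here or just before.
        i = bisect_left(egg_times, t)
        candidates = [j for j in (i - 1, i) if 0 <= j < len(egg_times)]
        if not candidates:
            continue  # no EGG data at all
        best = min(candidates, key=lambda j: abs(egg_times[j] - t))
        if abs(egg_times[best] - t) <= tolerance_s:
            merged.append((t, mic_value, shifted[best][1]))
    return merged
```

For example, `align_recordings(mic, egg, egg_offset_s=0.1)` drops any mic samples that fall before the EGG recording starts. Nearest-neighbor matching is used here only because our start alignment is already approximate; it does not correct for clock drift between the two laptops.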

In terms of progress, I believe I am basically done with my parts, and I am now focusing on helping Susanna and Melina with theirs and making sure our frontend design stays intact.

To verify my work, I have been comparing the measured EGG data against the EGG data that was provided to us, as well as against research papers, to make sure our EGG data collection makes sense and doesn't output implausible closed quotients. I am reasonably confident that the collected EGG data is accurate, since I watch the closed quotient change in VoceVista and it matches the output of the data scraper I designed. We do not need it to be extremely accurate, since a future version of VoceVista will be able to provide this data automatically; however, because we do not have access to that version yet, I designed a crude solution so that we can meet our deadlines.
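
The verification described above (checking that the scraper never reports an impossible closed quotient, and that it agrees with what VoceVista shows) could be automated along the lines below. This is a hypothetical sketch: it assumes closed quotients are stored as fractions between 0 and 1 (the closed phase divided by the glottal cycle period, so values outside that range cannot be real), and that a handful of reference values have been read off VoceVista by hand at the same moments. The function name and the 0.05 tolerance are illustrative choices, not values from our project.

```python
def check_closed_quotients(scraped, reference, tolerance=0.05):
    """Sanity-check OCR-scraped closed quotients (hypothetical helper).

    scraped: closed quotients produced by the scraper, as fractions in [0, 1].
    reference: values read manually from VoceVista at the same moments.
    tolerance: largest absolute difference counted as agreement.
    """
    if len(scraped) != len(reference):
        raise ValueError("scraped and reference must have the same length")
    # A closed quotient is a fraction of the glottal cycle, so it must lie in [0, 1].
    out_of_range = [i for i, cq in enumerate(scraped) if not 0.0 <= cq <= 1.0]
    # Readings where the scraper disagrees with the value shown in VoceVista.
    mismatched = [
        i for i, (s, r) in enumerate(zip(scraped, reference)) if abs(s - r) > tolerance
    ]
    errors = [abs(s - r) for s, r in zip(scraped, reference)]
    mean_abs_error = sum(errors) / len(errors) if errors else 0.0
    return {
        "out_of_range": out_of_range,
        "mismatched": mismatched,
        "mean_abs_error": mean_abs_error,
    }
```

An OCR misread such as a dropped decimal point tends to show up in `out_of_range`, while smaller misreads show up in `mismatched`.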

of the EGG. The Electrode Placement laryngeal LED would only indicate "too low" regardless of where we put the sensors, even though the EGG Signal display looked correct. I have emailed Professor Brancaccio, Professor Helou, and the VoceVista author Bodo Maass with questions on how to address this. So far, Bodo is the only one who has responded; he said he is happy to help me troubleshoot during a VoceVista coaching session, which we would have to pay for. It might be worthwhile, since I could also learn more efficient ways to transfer data out of VoceVista into our software component, as well as how to troubleshoot an EGG for future issues. I believe I am a little behind, since this week I wanted to record a lot of data with the singers using the EGG and microphone, and so far we only have data from my computer's microphone, not even from the Shure mic that we purchased. However, it should be quick to catch up with one efficient one- to two-hour session with the singers. Beyond that, another deliverable I would like to achieve is a quicker EGG setup: right now it took me over 10 minutes to calibrate and attach everything for the EGG and set up VoceVista, so I need a little more familiarity with the equipment. I would also like to start storing the EGG data in a more permanent and elegant way than Excel spreadsheets in a shared Google Drive folder, and start working on a shared database.
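
As a starting point for moving off spreadsheets, the EGG data could live in a small relational database with one table for recording sessions and one for time-stamped samples. The sketch below uses Python's built-in `sqlite3` module. The schema, table names, and helper functions are hypothetical and only illustrate the idea. A single SQLite file works for local prototyping, but a database the whole team shares would more likely need a hosted server.

```python
import sqlite3

# Hypothetical schema: one row per recording session, many samples per session.
SCHEMA = """
CREATE TABLE IF NOT EXISTS sessions (
    id INTEGER PRIMARY KEY,
    singer TEXT NOT NULL,
    recorded_on TEXT NOT NULL
);
CREATE TABLE IF NOT EXISTS egg_samples (
    session_id INTEGER NOT NULL REFERENCES sessions(id),
    time_s REAL NOT NULL,
    closed_quotient REAL,
    PRIMARY KEY (session_id, time_s)
);
"""


def open_db(path=":memory:"):
    """Open (or create) the database and make sure the tables exist."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # enforce the sessions -> samples link
    conn.executescript(SCHEMA)
    return conn


def add_session(conn, singer, recorded_on, samples):
    """Store one session and its (time_s, closed_quotient) samples in one transaction."""
    with conn:  # commits on success, rolls back if any insert fails
        cur = conn.execute(
            "INSERT INTO sessions (singer, recorded_on) VALUES (?, ?)",
            (singer, recorded_on),
        )
        session_id = cur.lastrowid
        conn.executemany(
            "INSERT INTO egg_samples (session_id, time_s, closed_quotient) VALUES (?, ?, ?)",
            [(session_id, t, cq) for t, cq in samples],
        )
    return session_id


def mean_closed_quotient(conn, session_id):
    """Average closed quotient over one session (None if it has no samples)."""
    row = conn.execute(
        "SELECT AVG(closed_quotient) FROM egg_samples WHERE session_id = ?",
        (session_id,),
    ).fetchone()
    return row[0]
```

Keeping sessions and samples in separate tables means that adding a new singer only adds rows, rather than another spreadsheet to keep in sync.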