End-to-end Product

We’re now at a point where we can combine our components into a full end-to-end example of our workflow. It starts with recording an EGG signal and the corresponding audio signal. VoceVista processes both recordings to produce spreadsheets of CQ and pitch frequencies over the given interval. At the moment, we have to do much of the subsequent processing manually in order to sync up the start times of each file, as well as the audio itself. Once the files are processed and lined up, we can load them into our application. The backend automatically makes sure that the total duration is synced up, and establishes the range of pitches to show on the display graph based on the pitch identification algorithm. Finally, the frontend displays all this data to the user. Unfortunately, this isn’t a particularly seamless process yet, since it still requires some manual data processing, but it is at least effective.
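As a rough illustration of the alignment and pitch-range steps described above, here is a minimal Python sketch. It is not our actual backend code. The function names (`align`, `pitch_range`), the `(time, value)` row layout, the 20 Hz display margin, and the convention that a pitch of 0 means "unvoiced" are all assumptions made for the example.

```python
from typing import List, Optional, Tuple

# Hypothetical row layout: (time in seconds, value).
Sample = Tuple[float, float]


def align(cq: List[Sample], pitch: List[Sample]) -> Tuple[List[Sample], List[Sample]]:
    """Shift both series so they start at t=0, then trim to the shorter duration."""
    if not cq or not pitch:
        return [], []
    cq_start, pitch_start = cq[0][0], pitch[0][0]
    cq_shifted = [(t - cq_start, v) for t, v in cq]
    pitch_shifted = [(t - pitch_start, v) for t, v in pitch]
    # The common duration is set by whichever series ends first.
    end = min(cq_shifted[-1][0], pitch_shifted[-1][0])
    return (
        [s for s in cq_shifted if s[0] <= end],
        [s for s in pitch_shifted if s[0] <= end],
    )


def pitch_range(pitch: List[Sample], margin_hz: float = 20.0) -> Optional[Tuple[float, float]]:
    """Return (low, high) display bounds in Hz, or None if nothing is voiced.

    Assumption: a pitch value of 0 marks an unvoiced frame and is ignored.
    """
    voiced = [v for _, v in pitch if v > 0]
    if not voiced:
        return None
    return (max(0.0, min(voiced) - margin_hz), max(voiced) + margin_hz)


# Example with made-up values.
cq_rows = [(0.5, 0.4), (1.0, 0.5), (1.5, 0.45), (2.0, 0.5)]
pitch_rows = [(0.25, 220.0), (0.75, 0.0), (1.25, 440.0)]
cq_aligned, pitch_aligned = align(cq_rows, pitch_rows)
bounds = pitch_range(pitch_aligned)
```

Trimming to the shorter series keeps the two plots on the same time axis. A real pipeline would also need to handle different sampling rates, which this sketch ignores.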

New singer feedback

Our main collaborators in this project are the two opera singers in the School of Music who we’re working with. This week, we also completed brief sessions with three non-music-student vocalists, with the goal of further gauging how new users interact with our application and setup. This was an extremely small sample size, but the anonymous Google form we asked the singers to complete after their sessions yielded some interesting results. Notably, all three singers agreed that the sensors were comfortable to wear, and that the setup and the navigation of the app were straightforward. However, we also asked them to rate, on a scale of 1 (strongly disagree) to 5 (strongly agree), the statement “the information I gain from this application would be useful or informative to my practice as a singer.” All three singers responded with 3 (neutral). They didn’t see a direct practical application of the EGG data, and seemed to expect clear “this is good” or “this is bad” feedback on their CQ, when the reality is a bit more complicated.

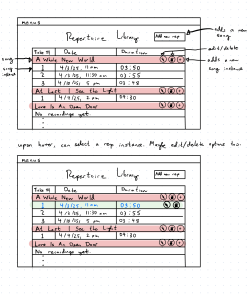

This was informative to us. On the one hand, these singers were not as experienced in formal technique as our opera singers, despite their love of music, which confirmed that our target audience is actually quite small: this application might not be directly applicable to a larger pedagogical setting. On the other hand, through various channels of feedback and the advice of our TA and faculty mentors, we’ve agreed that we need to do more with the CQ data that we’re gathering, since that’s really what makes our project unique. As a result, we’ve made a plan for displaying CQ data in relation to pitch, allowing the user to select multiple days to compare over time. See Susanna’s status report for more details. This will be the last main feature to implement in the final couple of weeks before the demo.
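To make the idea of "CQ in relation to pitch, compared across days" more concrete, here is one possible way to organize the data, sketched in Python. This is only an illustration, not the planned implementation. The function names (`hz_to_midi`, `mean_cq_by_pitch`), the choice to bucket pitches by nearest semitone, and the input layout (a dictionary from a day label to `(pitch in Hz, CQ)` pairs) are all assumptions.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def hz_to_midi(freq_hz: float) -> int:
    """Nearest MIDI note number for a frequency (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def mean_cq_by_pitch(
    sessions: Dict[str, List[Tuple[float, float]]]
) -> Dict[str, Dict[int, float]]:
    """For each day, average CQ within each semitone bucket.

    Assumption: frames with pitch <= 0 are unvoiced and are skipped.
    """
    result: Dict[str, Dict[int, float]] = {}
    for day, samples in sessions.items():
        buckets: Dict[int, List[float]] = defaultdict(list)
        for hz, cq in samples:
            if hz > 0:
                buckets[hz_to_midi(hz)].append(cq)
        result[day] = {
            note: sum(values) / len(values)
            for note, values in sorted(buckets.items())
        }
    return result


# Example with made-up values for two days.
sessions = {
    "day1": [(440.0, 0.4), (441.0, 0.6), (220.0, 0.5), (0.0, 0.9)],
    "day2": [(440.0, 0.5)],
}
summary = mean_cq_by_pitch(sessions)
```

With the data in this shape, each selected day becomes one series on a shared pitch axis, so the same note can be compared directly from day to day.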

General Schedule

Our overall integration of components has been going according to schedule, and our EGG has not had further complications this week. There aren’t any new risks to the project at this time.