With regard to progress, I believe I am very slightly behind schedule. Once again, I am not too worried about completing our deliverables. Next week, most of our time will likely go toward some light user testing and preparing the materials for our final submissions (poster, slides, writeup).

Team Status Report for 4/19/2025

Last week, we managed to get both the object detection model and the walk sign model onto the board. To make sure that the object detection model could use the GPU and reduce inference time, we had to create a Docker container for it to run in. However, due to Python dependency conflicts between TensorFlow and PyTorch, we are currently trying to rewrite the walk sign image classification model to use only PyTorch. We have tried several approaches, such as converting the .h5 TensorFlow model to a .pth PyTorch model and rewriting everything in PyTorch, but both have run into issues. We are still exploring solutions to this problem.

Regarding hardware, we have finally mounted all of our components to the chest mount. The power bank also arrived, and it fits nicely into a fanny pack. We have tested running the system fully attached to a person, and it works as expected: the device is comfortable, does not impede the user’s motion, and is not too heavy. We are still improving the mount, but it is in a very good state right now.

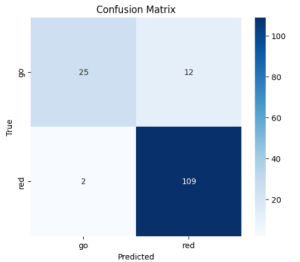

Update on 4/20/2025: Regarding the issue with converting the model from TensorFlow to PyTorch, we have successfully recreated the model in PyTorch. We now have three different models trained on the much larger dataset collected last week, using ResNet-34, ResNet-101, and ResNet-152 backbones. Their performance and confusion matrices are quite similar. This is the confusion matrix for the ResNet-152 model:

The test accuracy is 90.54%, but class imbalance may be inflating this figure. In any case, it is better for the model to be more cautious about predicting “walk” than “don’t walk”, and the error rate for the “don’t walk” class is very low.
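
One way to check whether class imbalance is inflating the headline number is to compute per-class recall and balanced accuracy (the mean of the per-class recalls) from the confusion matrix. A minimal sketch, assuming rows are true labels and columns are predictions; the counts below are made up for illustration and are not our measured results.

```python
def per_class_recall(cm):
    """Recall for each class; cm[i][j] counts true class i predicted as class j."""
    recalls = []
    for i, row in enumerate(cm):
        total = sum(row)
        recalls.append(row[i] / total if total else 0.0)
    return recalls


def balanced_accuracy(cm):
    """Mean of per-class recalls, which does not depend on class frequencies."""
    recalls = per_class_recall(cm)
    return sum(recalls) / len(recalls)


def overall_accuracy(cm):
    correct = sum(cm[i][i] for i in range(len(cm)))
    total = sum(sum(row) for row in cm)
    return correct / total


# Hypothetical counts, NOT our measured results.
# Class 0 = "don't walk", class 1 = "walk".
cm = [
    [900, 10],  # true "don't walk": 900 correct, 10 wrongly predicted as "walk"
    [90, 100],  # true "walk": 90 predicted as "don't walk", 100 correct
]

print(f"overall accuracy:  {overall_accuracy(cm):.3f}")
print(f"balanced accuracy: {balanced_accuracy(cm):.3f}")
# The dangerous mistake: telling the user to walk when the sign says don't walk.
print(f"don't walk -> walk error rate: {cm[0][1] / sum(cm[0]):.3f}")
```

With these illustrative counts, overall accuracy is about 0.91 while balanced accuracy is only about 0.76, the kind of gap that would signal the majority class is carrying the headline figure.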

Andrew Wang’s Status Report 4/19/2025

Next week, I also anticipate refining the navigation logic further and testing a speech-to-text feedback loop on the Jetson, as mentioned before.

Andrew Wang’s Status Report 4/12/2025

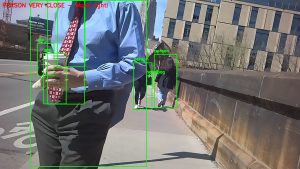

Using our models, I manually implemented visualizations of the bounding boxes identified by the model in the images sampled, and printed out any feedback prompts that would be relayed to the user on the top left of each image.

Visually inspecting these images, we see that not only do the bounding boxes fit their identified obstacles well, but we can also use their position in the image to relay feedback. For example, in the second image, a person is coming up behind the user on the right side, so the user is instructed to move to the left. Inference was also fast: the average inference time per image was roughly 8 ms for the non-quantized models, which is acceptable for a base model. I haven’t tried running inference on the quantized models yet, but previous experimentation suggests that they would likely be faster. Since the current models are already fast enough, I plan on testing the base models on the Jetson Nano first before attempting to use the quantized models to maximize performance.
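
The position-based feedback described above can be sketched as a rule that splits the frame into vertical thirds and maps the horizontal center of each bounding box to a direction. The thirds split, the `Detection` type, and the prompt wording are hypothetical choices for illustration, not necessarily how our pipeline implements it.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical detection record: class label plus pixel box corners."""
    label: str
    x1: float
    y1: float
    x2: float
    y2: float


def feedback_for(det: Detection, image_width: float) -> str:
    """Map a detection's horizontal position to a spoken prompt (hypothetical rule)."""
    center_x = (det.x1 + det.x2) / 2
    third = image_width / 3
    if center_x < third:
        return f"{det.label} on your left, move right"
    if center_x > 2 * third:
        return f"{det.label} on your right, move left"
    return f"{det.label} ahead"


if __name__ == "__main__":
    # A person on the right side of a 1280-pixel-wide frame.
    print(feedback_for(Detection("person", 900, 200, 1100, 700), 1280))
```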

Currently, I am about on schedule with regard to ML model integration and evaluation. This week, I plan on working with the staff and the rest of our group to refine the feedback logic for the object detection model, since the current logic is fairly rudimentary and often gives multiple prompts to adjust the user’s path when only one, or even none, is actually necessary. If time allows, I also plan on integrating the text-to-speech functionality of our navigation submodule with our hardware. Additionally, I will need to port the object detection models onto the Jetson Nano and evaluate their speed on our hardware more rigorously, so that we can determine whether more aggressive model compression is necessary to keep inference and feedback as fast as we need.
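
One possible way to cut down on redundant prompts is to keep only the prompt tied to the largest bounding box, treating box area as a rough proxy for proximity, and to stay silent when every box is small. This is a hypothetical refinement, sketched under the assumption that the current logic already produces one (prompt, box area) pair per detection; the 5% area threshold is an arbitrary placeholder.

```python
from typing import List, Optional, Tuple


def consolidate(prompts: List[Tuple[str, float]], frame_area: float,
                min_area_frac: float = 0.05) -> Optional[str]:
    """Reduce per-detection prompts to at most one.

    prompts: (prompt_text, box_area_in_pixels) pairs, one per detection.
    Boxes covering less than min_area_frac of the frame are assumed to be too
    far away to act on, so the result may be None (no prompt needed).
    """
    relevant = [(text, area) for text, area in prompts
                if area / frame_area >= min_area_frac]
    if not relevant:
        return None
    # Largest box is taken as the closest obstacle (a rough heuristic).
    text, _ = max(relevant, key=lambda p: p[1])
    return text


if __name__ == "__main__":
    frame_area = 1280 * 720
    prompts = [("person on your right, move left", 100_000.0),
               ("car on your left, move right", 20_000.0)]
    print(consolidate(prompts, frame_area))
```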

Andrew Wang’s Status Report: 3/29/2025

I am using the TensorRT and ONNX packages to quantize the YOLO models, which, based on what I found online, is the best method for quantizing ML models for edge AI computing, since it yields the largest speedups while shrinking the models appropriately. However, the process was quite difficult, as I ran into many hardware-specific issues. In particular, quantizing with these packages relies on a lot of under-the-hood assumptions about how the layers are implemented and whether or not they can be fused, which is a key part of the process.
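
Layer fusion is one of the graph optimizations that depends on layers being fuse-able. As an illustration of the general idea (not TensorRT's internal implementation), here is the standard arithmetic for folding an inference-mode batch normalization into the convolution before it, shown for a single output channel at a single spatial position with made-up parameter values. Because the folded scale and bias come from frozen running statistics, this only applies at inference time.

```python
import math


def fuse_conv_bn(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold BatchNorm (gamma, beta, running mean/var) into the preceding conv.

    w: kernel weights for ONE output channel (flattened); b: its bias.
    Returns (fused_w, fused_b) so that conv with the fused parameters equals
    batchnorm(conv(x)) in inference mode.
    """
    scale = gamma / math.sqrt(var + eps)
    fused_w = [wi * scale for wi in w]
    fused_b = (b - mean) * scale + beta
    return fused_w, fused_b


def conv_point(w, b, x):
    """One output channel at one spatial position: dot(w, x) + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def batchnorm(y, gamma, beta, mean, var, eps=1e-5):
    """Inference-mode batch normalization using running statistics."""
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


# Made-up parameters for illustration.
w, b = [0.5, -1.0, 2.0], 0.1
gamma, beta, mean, var = 1.5, -0.2, 0.3, 4.0
x = [1.0, 2.0, 3.0]  # one flattened receptive-field patch

reference = batchnorm(conv_point(w, b, x), gamma, beta, mean, var)
fw, fb = fuse_conv_bn(w, b, gamma, beta, mean, var)
fused = conv_point(fw, fb, x)
print(reference, fused)  # the two values agree up to floating-point rounding
```

A layer pair that does not fit this pattern (for example, a custom op between the convolution and the normalization) cannot be folded this way, which is one reason implementation details matter so much during export.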

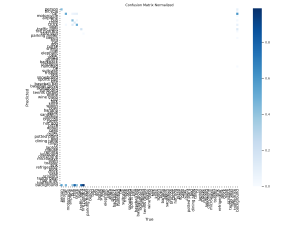

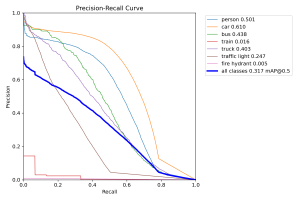

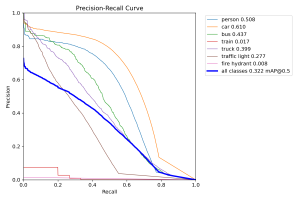

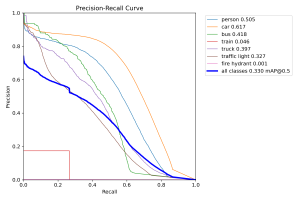

I did end up figuring it out, and ran some evaluations on the YOLOv12 model to see how performance changes with quantization:

Based on a visual inspection of the prediction-labelled images, the PR curve, and the normalized confusion matrix (just as before), there does not appear to be much difference in performance compared with the base, un-quantized model, which is great news. This implies that we can compress our models this way without sacrificing performance, which was the primary concern.
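
A rough intuition for why accuracy barely moves: with symmetric per-tensor INT8 quantization, each value is rounded to the nearest multiple of a scale factor, so the round-trip error per value is at most half a quantization step. The sketch below assumes INT8 with a simple max-abs scale; the report does not say which precision was used, and TensorRT typically chooses INT8 ranges through calibration rather than the max-abs rule shown here. The weight values are made up.

```python
def quantize_int8(values):
    """Symmetric per-tensor INT8 quantization: q = round(v / scale), clipped to [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map INT8 codes back to approximate real values."""
    return [qi * scale for qi in q]


weights = [0.82, -0.41, 0.003, -1.27, 0.5]  # made-up values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_err)  # max_err never exceeds scale / 2
```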

Currently, I am about on schedule with regards to ML model development, but slightly behind on integration/testing the navigation components.

This week, in tandem with our scheduled demos, I plan on getting into the details of integration testing. I would also be interested in benchmarking any inference speedups from quantization, both in my development environment and on the Jetson itself in a formal testing setup.
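
For the planned benchmarking, a small harness that discards warm-up runs and reports mean, median, and 95th-percentile latency would let the same measurement run in the development environment and on the Jetson. This is a generic sketch; `infer` is a placeholder for whatever call runs one image through the model.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(infer: Callable[[], object], warmup: int = 10,
              runs: int = 100) -> Dict[str, float]:
    """Time repeated calls to infer() and report latency stats in milliseconds.

    Warm-up iterations are discarded because the first calls on a GPU often
    include one-off costs such as kernel selection and memory allocation.
    """
    for _ in range(warmup):
        infer()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "p95_ms": samples[int(0.95 * (len(samples) - 1))],
    }


if __name__ == "__main__":
    # Stand-in workload; replace with e.g. lambda: model(image).
    stats = benchmark(lambda: sum(range(100_000)))
    print({k: round(v, 3) for k, v in stats.items()})
```

If the model runs through PyTorch on the GPU, the timed callable should end with `torch.cuda.synchronize()`, since CUDA kernels launch asynchronously and the clock would otherwise stop before the work finishes. Reporting the median and p95 alongside the mean also keeps occasional slow frames from being hidden.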

Andrew Wang’s Status Report: 3/22/2025

This week, I spent some time implementing the crosswalk navigation submodule. One of the main difficulties was using pyttsx3 for real-time feedback. While it offers offline text-to-speech capabilities, tuning parameters such as speech rate, volume, and clarity will require extensive experimentation to ensure that the audio cues are both immediate and comprehensible. Since we will be integrating all of the modules soon, I expect to address this during integration. I also had to spend some time familiarizing myself with text-to-speech libraries in Python, since I had never worked in this space before.

I also anticipate that some effort will be required to optimize speech generation, as the feedback for this particular submodule needs to be especially quick. Again, we will address this as needed if it becomes a problem during integration and testing.
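
One inexpensive way to keep audio feedback responsive is to avoid re-speaking a cue that was just announced, so the text-to-speech engine stays free for new instructions. The `CueThrottler` class below is a hypothetical helper, and the 2-second cooldown is a placeholder to tune during testing.

```python
import time
from typing import Callable, Dict


class CueThrottler:
    """Suppress repeats of the same spoken cue within a cooldown window (hypothetical helper)."""

    def __init__(self, cooldown_s: float = 2.0,
                 clock: Callable[[], float] = time.monotonic):
        self.cooldown_s = cooldown_s
        self.clock = clock  # injectable so tests can control time
        self._last_spoken: Dict[str, float] = {}

    def should_speak(self, cue: str) -> bool:
        """Return True (and record the time) if this cue has not been spoken recently."""
        now = self.clock()
        last = self._last_spoken.get(cue)
        if last is not None and now - last < self.cooldown_s:
            return False
        self._last_spoken[cue] = now
        return True


if __name__ == "__main__":
    throttler = CueThrottler(cooldown_s=2.0)
    for cue in ["veer left", "veer left", "veer right"]:
        print(cue, throttler.should_speak(cue))
```

In the submodule, `should_speak` would gate the pyttsx3 calls, e.g. `engine.say(cue)` followed by `engine.runAndWait()`; different cues are tracked separately, so a new instruction is never blocked by an older one.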

Currently, I am about on schedule as I have a preliminary version of the pipeline ready to go. Hopefully, we will be able to begin the integration process soon, so that we may have a functional product ready for our demo coming up in a few weeks.

This week, I will probably focus on further optimizing text-to-speech response time, refining the heading correction logic to accommodate natural walking patterns, and conducting real-world testing to validate performance under various conditions.
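
Two ideas that could help heading correction tolerate natural sway are a deadband (ignore small deviations) and a persistence requirement (only prompt after several consecutive out-of-band readings). A minimal sketch, assuming compass headings in degrees measured clockwise from north, so a positive error means the target lies to the user’s right; the 15° deadband and 3-reading persistence are hypothetical values.

```python
from typing import Optional


def heading_error(target_deg: float, current_deg: float) -> float:
    """Signed smallest rotation from current to target heading, in (-180, 180].

    Positive means the target is clockwise (to the user's right).
    """
    err = (target_deg - current_deg) % 360.0
    if err > 180.0:
        err -= 360.0
    return err


class HeadingCorrector:
    """Emit a correction only after the error stays outside the deadband for
    `persist` consecutive readings in the same direction."""

    def __init__(self, deadband_deg: float = 15.0, persist: int = 3):
        self.deadband_deg = deadband_deg
        self.persist = persist
        self._direction: Optional[str] = None
        self._streak = 0

    def update(self, target_deg: float, current_deg: float) -> Optional[str]:
        err = heading_error(target_deg, current_deg)
        if abs(err) <= self.deadband_deg:
            # Within normal walking sway: reset and stay silent.
            self._direction, self._streak = None, 0
            return None
        direction = "right" if err > 0 else "left"
        if direction == self._direction:
            self._streak += 1
        else:
            self._direction, self._streak = direction, 1
        if self._streak >= self.persist:
            self._streak = 0  # re-arm so the cue is not repeated every reading
            return f"veer {direction}"
        return None
```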

Andrew Wang’s Status Report: 3/15/2025

Unfortunately, I wasn’t able to make much progress on this front. So far, I have tried switching the label mapping of the BDD100K dataset, fine-tuning different versions of the YOLOv8 models, and slightly tuning the hyperparameters, but every attempt had exactly the same outcome: the model performed extremely poorly on held-out data. I am still unsure why this is happening and have had little luck figuring it out.
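
The cause is not identified in this report, but one common reason a fine-tuned detector predicts only “background” is malformed label files, for example pixel coordinates where normalized ones are expected, or class indices that do not match the dataset YAML. A sketch of converting BDD100K box labels to the YOLO text format (`class cx cy w h`, all box values normalized to [0, 1]), assuming the usual BDD100K JSON layout (`category`, plus `box2d` with `x1`/`y1`/`x2`/`y2`) and 1280x720 frames. The class mapping is hypothetical, and category names differ between BDD100K label releases, so they should be checked against the actual files.

```python
from typing import Dict, List

# Hypothetical mapping from BDD100K category names to class indices. The
# indices must match the order of `names` in the dataset YAML used for training.
CLASS_IDS: Dict[str, int] = {
    "pedestrian": 0,
    "bicycle": 1,
    "car": 2,
    "traffic light": 3,
}


def to_yolo_lines(labels: List[dict], img_w: int = 1280,
                  img_h: int = 720) -> List[str]:
    """Convert one BDD100K frame's labels into YOLO label-file lines."""
    lines = []
    for lab in labels:
        cls = CLASS_IDS.get(lab.get("category"))
        box = lab.get("box2d")
        if cls is None or box is None:
            continue  # unmapped category, or a non-box label (e.g. lane polygons)
        # Clamp to the frame so slightly out-of-bounds boxes stay valid.
        x1 = min(max(box["x1"], 0.0), img_w)
        x2 = min(max(box["x2"], 0.0), img_w)
        y1 = min(max(box["y1"], 0.0), img_h)
        y2 = min(max(box["y2"], 0.0), img_h)
        if x2 <= x1 or y2 <= y1:
            continue  # degenerate box after clamping
        cx = (x1 + x2) / 2 / img_w
        cy = (y1 + y2) / 2 / img_h
        w = (x2 - x1) / img_w
        h = (y2 - y1) / img_h
        lines.append(f"{cls} {cx:.6f} {cy:.6f} {w:.6f} {h:.6f}")
    return lines


if __name__ == "__main__":
    frame = [{"category": "car",
              "box2d": {"x1": 640, "y1": 360, "x2": 768, "y2": 432}}]
    print(to_yolo_lines(frame))
```

Spot-checking a few converted files by drawing the boxes back onto their images is a quick way to confirm the labels line up before launching another training run.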

However, since we remain interested in having a small suite of object detection models to test, I decided to find a few more models to evaluate while the fine-tuning issue is being solved. Specifically, I evaluated YOLOv12 and Baidu’s RT-DETR, both of which are compatible with my current pedestrian object detection pipeline. The YOLOv12 architecture introduces an attention mechanism to capture large receptive fields more effectively, and RT-DETR draws on a Vision Transformer-style architecture to efficiently process multiscale features in the input.

It appears that despite being larger and more advanced, these models don’t actually do much better than the original YOLOv8 models I was working with. Here are the prediction confusion matrices for the YOLOv12 and RT-DETR models, respectively:

This suggests that these object detection models may be hitting the limit of performance on this particular out-of-distribution dataset, and that different models might perform similarly in real-world testing as well.

Currently, I am a bit behind schedule as I was unable to fix the fine-tuning issues, and subsequently was not able to make much progress on the integration components with the navigation submodules.

For this week, I will temporarily shelve the fine-tuning implementation debugging in favor of implementing the transitions between the object detection and navigation submodules. Specifically, I plan on beginning to handle the miscellaneous code that will be required to pass control between our modules.
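
The hand-off between modules could be organized as a small state machine. The sketch below assumes three modes that mirror the phases described in these reports (obstacle detection while walking, waiting on the walk sign classifier, and crosswalk navigation); the mode names and the boolean inputs, including how `at_crosswalk` would be detected, are hypothetical.

```python
from enum import Enum, auto


class Mode(Enum):
    SIDEWALK = auto()       # object detection drives obstacle prompts
    WAIT_FOR_WALK = auto()  # walk sign classifier is polled
    CROSSING = auto()       # crosswalk navigation (heading correction) is active


def next_mode(mode: Mode, at_crosswalk: bool, walk_sign: bool,
              crossed: bool) -> Mode:
    """Hypothetical transition table for passing control between submodules."""
    if mode is Mode.SIDEWALK and at_crosswalk:
        return Mode.WAIT_FOR_WALK
    if mode is Mode.WAIT_FOR_WALK and walk_sign:
        return Mode.CROSSING
    if mode is Mode.CROSSING and crossed:
        return Mode.SIDEWALK
    return mode  # no triggering event: stay in the current mode


if __name__ == "__main__":
    mode = Mode.SIDEWALK
    # (at_crosswalk, walk_sign, crossed) observations over time.
    for obs in [(False, False, False), (True, False, False),
                (True, True, False), (False, False, True)]:
        mode = next_mode(mode, *obs)
        print(mode.name)
```

Keeping the transitions in one pure function makes it easy to test the hand-off logic without the camera, models, or audio attached.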

Team Status Report for 3/8/2025

A change was made to the existing design: the machine learning model used in the walk sign subsystem was changed from a YOLO object detection model to a ResNet image classification model. This is because the subsystem needs to classify each image as containing either a WALK sign or a DON’T WALK sign, so an object detection model would not suffice. No costs were incurred by this change other than the time spent adding bounding boxes to the collected dataset. One risk is the performance of the walk sign classification model in the real world. Images captured by the camera when mounted on the helmet may differ from the training images (blurrier, taken from a higher vantage point, etc.), which could hurt performance; now that the camera has arrived, we can begin testing this and adjust our dataset accordingly.

Part A (written by Max): The target demographic of our product is the visually impaired pedestrian population, but the accessibility of pedestrian crosswalks varies greatly across countries, cities, and even neighborhoods within a single city. It is common to see sidewalks with tactile bumps, pedestrian signals that announce the WALK sign and the name of the street, and other accessibility features in densely populated downtowns. However, sidewalks in rural neighborhoods or less developed countries often have none of these features. The benefit of the Self-Driving Human is that it would work at any crosswalk that has a signal indicator. As long as the camera can detect the walk sign, the helmet can run the walk sign classification and navigation phases without any issues. Another global factor is the variety of symbols used to indicate WALK and DON’T WALK. For example, Asian countries often use an image of a green man to indicate WALK, while U.S. crosswalks use a white man. This can only be solved by training the model on country-specific datasets, which might not be readily available in some parts of the world.

Part B (written by William): The Self-Driving Human has the potential to influence cultural factors by reshaping how society views assistive technology for the visually impaired. In particular, our project would increase mobility and reduce reliance on caregivers for its users. This can lead to cultural benefits like increased participation in certain social events as the user gains more autonomy. Ideally, this would lead to greater inclusivity in city design and social interactions. Additionally, our project could promote a standardized form of audio-based navigation, influencing positive expectations about accessible infrastructure and design. We hope this pushes for broader adoption of assistive technology-driven solutions, which could result in the development of even more inclusive and accessible technologies.

Part C (written by Andrew): The smart hat for visually impaired pedestrians addresses a critical need for independent and safe navigation while keeping key environmental factors in mind. By using computer vision and GPS-based obstacle detection, the device reduces reliance on physical infrastructure such as tactile paving and audible signals, which may be unavailable or poorly maintained in certain areas. This reduces the dependency on city-wide accessibility upgrades, making the solution more scalable and effective across diverse environments. Additionally, by incorporating on-device processing, the system reduces the need for constant cloud connectivity, thereby lowering the energy consumption and emissions associated with remote data processing. Finally, by enabling visually impaired individuals to navigate their surroundings independently, the device supports inclusive urban mobility while addressing environmental sustainability in its design and implementation.

Andrew Wang’s Status Report: 3/8/2025

This week, I worked on fine-tuning the pretrained YOLOv8 models for better performance. Previously, the models worked reasonably well out of the box on an out-of-distribution dataset, so I was interested in fine-tuning them on this dataset to improve the robustness of the detection model.

Unfortunately, so far fine-tuning does not appear to help much. My first few attempts at training the model on the new dataset produced a model that detects no objects and labels everything as “background”. See below for the latest confusion matrix:

I’m personally a little confused as to why this is happening. I did verify that the out-of-the-box model’s metrics from my last status report are reproducible, so I suspect there might be a small issue with how I am retraining the model, which I am currently looking into.

Due to this unexpected issue, I am currently a bit behind schedule, as I had anticipated finishing the fine-tuning by this point. However, I expect to be back on track this week after resolving this issue, since my remaining action items are simply to integrate the model outputs with the rest of the components, which can be done whether or not the new models are ready. Additionally, I have implemented most of the pipelines needed for model evaluation and training, and am slightly ahead of schedule in that regard relative to our Gantt chart.

For this week, I hope to begin coordinating efforts to integrate the object detection models’ output with the navigation modules on the hardware, and to resolve the current issues with model fine-tuning. Specifically, I plan on beginning to handle the miscellaneous code that will be required to pass control between our modules.

Design Review Report: 2/28/25

Here is our design review report (also viewable from this page):