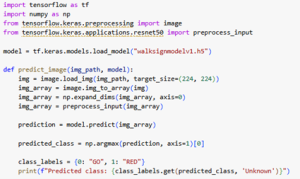

This week I worked on getting the walk sign classification model onto the Jetson Orin Nano. The Jetson runs Python natively and supports a Jetson-optimized build of TensorFlow, which we can download as a wheel (.whl) file and install on the board. The only Python libraries the ResNet model needs are NumPy and TensorFlow (specifically its Keras API), and both run on the Jetson. After training in Google Colab, the model can be saved in HDF5 (.h5) format and copied to the Jetson, where a Python program loads it with Keras and runs inference. The code is very simple: import the libraries, load the model, and it is ready to make predictions. The model file is 98 MB, which fits comfortably on the Jetson. The TensorFlow library is under 2 GB and NumPy is around 20 MB, so they should fit as well.
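A minimal sketch of the load-and-predict step, assuming the model ends in a softmax over two classes. The file name `walk_sign_resnet.h5`, the 224×224 input size, the class order in `CLASS_NAMES`, and the divide-by-255 scaling are all hypothetical placeholders and should be replaced with whatever the Colab training notebook actually used. TensorFlow is imported inside `load_walk_sign_model` so the NumPy preprocessing can be tried on a machine without TensorFlow installed.

```python
import numpy as np

MODEL_PATH = "walk_sign_resnet.h5"  # hypothetical file name
INPUT_SIZE = (224, 224)             # assumed ResNet input size (height, width)
CLASS_NAMES = ["no_walk", "walk"]   # hypothetical label order from training


def load_walk_sign_model(path=MODEL_PATH):
    """Load the Keras model that was saved in HDF5 (.h5) format in Colab."""
    # Lazy import: only needed on the Jetson, where TensorFlow is installed.
    from tensorflow import keras
    return keras.models.load_model(path)


def preprocess(frame, size=INPUT_SIZE):
    """Nearest-neighbor resize an HxWx3 uint8 frame and scale it to [0, 1].

    Assumes RGB channel order and /255 scaling; match the training pipeline.
    """
    h, w = frame.shape[:2]
    rows = np.arange(size[0]) * h // size[0]  # source row for each output row
    cols = np.arange(size[1]) * w // size[1]  # source column for each output column
    resized = frame[rows[:, None], cols]      # shape: (size[0], size[1], 3)
    batch = resized.astype(np.float32) / 255.0
    return batch[np.newaxis, ...]             # add batch dimension: (1, H, W, 3)


def classify(model, frame):
    """Return (label, probability) for the most likely class."""
    probs = model.predict(preprocess(frame), verbose=0)[0]
    idx = int(np.argmax(probs))
    return CLASS_NAMES[idx], float(probs[idx])
```

On the Jetson, usage would look like `model = load_walk_sign_model()` once at startup, then `label, confidence = classify(model, frame)` per frame. Loading the model once and reusing it matters because `load_model` is far slower than a single prediction.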

The remaining challenge is getting video frames as input. We can already get the camera working with the Jetson and stream video to a connected monitor, but this is done from the command line, and we still need to figure out how to capture individual frames and pass them to the model. There are two options: either the inference program reads frames from the camera directly through some interface, or a separate submodule saves frames to the local file system and the model reads them from there. The second option matches how the model currently takes its input.
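If the file-system approach is kept, the inference program has to notice new frames as the capture submodule writes them. Below is a minimal polling sketch, assuming the capture side writes encoded JPEG files into a shared directory; the function names and the `*.jpg` pattern are hypothetical. Each frame is written under a temporary name and then renamed with `os.replace`, which is atomic within one file system on POSIX systems, so the model never reads a half-written image.

```python
import os
import time
from pathlib import Path


def save_frame_atomically(frame_dir, name, data):
    """Capture side: write encoded frame bytes, then rename them into place."""
    final_path = Path(frame_dir) / name
    tmp_path = final_path.with_name(final_path.name + ".tmp")  # not matched by *.jpg
    tmp_path.write_bytes(data)
    os.replace(tmp_path, final_path)  # atomic rename on POSIX within one file system
    return final_path


def poll_new_frames(frame_dir, seen, pattern="*.jpg"):
    """Model side: return unprocessed frames, oldest first, and mark them as seen."""
    new = [p for p in Path(frame_dir).glob(pattern) if p.name not in seen]
    new.sort(key=lambda p: (p.stat().st_mtime, p.name))  # name breaks mtime ties
    seen.update(p.name for p in new)
    return new


def run_inference_loop(frame_dir, handle_frame, interval_s=0.1, max_iters=None):
    """Call handle_frame(path) on each new frame; max_iters=None polls forever."""
    seen = set()
    iters = 0
    while max_iters is None or iters < max_iters:
        for path in poll_new_frames(frame_dir, seen):
            handle_frame(path)
        time.sleep(interval_s)
        iters += 1
```

The `seen` set grows with every frame, so in a long-running deployment deleting or moving each frame after it is classified would keep both memory and disk usage bounded. Reading frames from the camera directly would avoid the disk round trip entirely, at the cost of tying the inference program to a specific camera interface.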

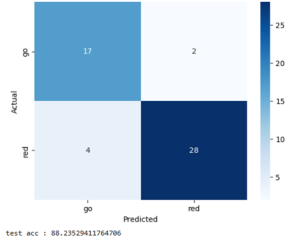

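The model is also now performing better on the same test dataset from last week’s status report. I’m not sure why, since I did not change the model’s architecture or the training dataset. One possible explanation is run-to-run variance: unless random seeds are fixed, weight initialization and the shuffling of training data differ each time the model is retrained. Either way, the false negative rate decreased dramatically, as seen in the confusion matrix above.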
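To track this metric across retraining runs, the false negative rate can be computed directly from the confusion matrix. A minimal sketch, assuming scikit-learn's layout (rows are true labels, columns are predicted labels) and that the "walk" class sits at index 1; both are assumptions about how the matrix was produced, and `WALK` is a hypothetical name.

```python
import numpy as np

WALK = 1  # hypothetical index of the "walk" (positive) class


def false_negative_rate(cm, positive=WALK):
    """Fraction of actual positives the model missed: FN / (FN + TP).

    Assumes rows are true labels and columns are predicted labels,
    as returned by sklearn.metrics.confusion_matrix.
    """
    cm = np.asarray(cm)
    actual_positives = cm[positive].sum()  # TP + FN: all samples truly in `positive`
    if actual_positives == 0:
        raise ValueError("no samples of the positive class in the matrix")
    tp = cm[positive, positive]
    fn = actual_positives - tp             # positives predicted as any other class
    return float(fn / actual_positives)
```

To test the run-to-run variance explanation, the model could be retrained several times with and without a fixed seed (recent TensorFlow versions provide `tf.keras.utils.set_random_seed`), comparing this rate across runs on the same test set.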