This week I focused on finishing the web app and including all the functionality it needs. We weren’t able to get reimbursed for the edit functionality of the Flat.io Embed API, so instead, if the user wants to edit the generated score, the web app takes them directly to the Flat.io website, to the score’s page on their account. This way the user is able to edit the score, and their changes are reflected in the web app on the Past Transcriptions page. I also added the edit feature to each score on the Past Transcriptions page, so any score the user has generated can be edited at any time and the changes are shown on the web app.

Deeya’s Status Report for 4/19/25

This week I focused on finishing up all the functionality and integration of the website. Together with Shivi, I added user inputs for the key and time signature and integrated them into the backend that generates the MIDI file.

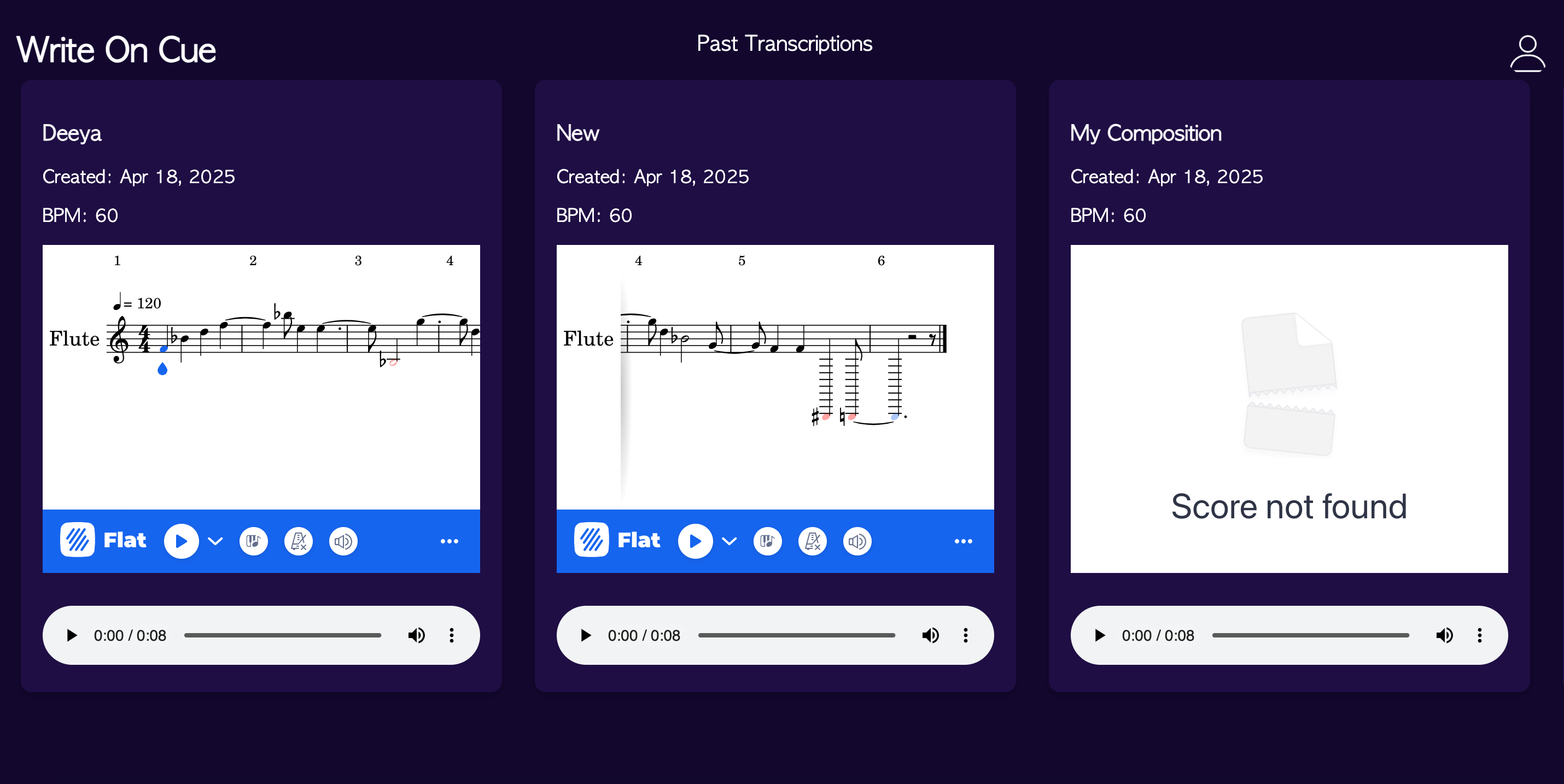

I also worked on the Past Transcriptions page, where the user can view each transcription they have made with the web app. Each entry shows the composition name (which the user enters when they upload or record audio), the BPM of the piece, the generated sheet music, the date it was created, and audio controls for playback.

The only thing left is integrating the edit functionality, which is a paid API call. We are waiting to hear back on whether it is possible to get reimbursed, and we will also explore another option, which is to redirect the user to the Flat.io page to edit the composition there instead of in the web app.

Tools/knowledge I have learned throughout the project:

- Developing a real-time collaborative web application leveraging WebSockets and API calls – YouTube videos and reading documentation

- How to get feedback from the flute students and incorporate it to make the UI more intuitive

- How to integrate the algorithms Shivi and Grace have worked on with the frontend and update it seamlessly whenever they make changes – developing strong communication within the team

Team Status Report for 4/19/25

This week, we tested staccato and slurred compositions and scales with SOM flute students to evaluate our transcription accuracy. During the two-octave scale tests, we discovered that some higher-octave notes were still being misregistered in the lower octave, so Shivi worked on fixing this in the pitch detection algorithm. Deeya and Shivi also made progress on the web app by enabling users to view past transcriptions and input key and time signature information. Grace is working on improving our rhythm algorithm to better handle slurred compositions by using the Short-Time Fourier Transform (STFT) to detect pitch changes and identify tied notes within audio segments. However, we’re still working on securing access to, or reimbursement for, the Flat.io Embed API, which is needed to allow users to edit their compositions. This week we are preparing for our final presentation, planning two more testing sessions with the flute students, and cleaning up our project.
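To illustrate why an octave misregistration stands out in a scale test, here is a minimal sketch of the standard frequency-to-MIDI mapping. It is not our pitch detection code: the function name is hypothetical and A4 = 440 Hz tuning is assumed. A note detected at half its true frequency lands exactly 12 semitones (one octave) too low.

```python
import math

A4_HZ = 440.0   # assumed concert pitch reference
A4_MIDI = 69    # MIDI note number of A4


def frequency_to_midi(freq_hz: float) -> int:
    """Map a detected frequency to the nearest MIDI note number."""
    if freq_hz <= 0:
        raise ValueError("frequency must be positive")
    return round(A4_MIDI + 12 * math.log2(freq_hz / A4_HZ))


# A5 is 880 Hz. If the detector locks onto half that frequency,
# the note is reported one octave (12 semitones) too low.
correct = frequency_to_midi(880.0)
misregistered = frequency_to_midi(440.0)
print(correct - misregistered)
```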

Deeya’s Status Report for 4/12/25

This week I focused on making the web app more intuitive. Shivi had worked on the metronome aspect of our web app, which uses WebSockets so that the tempo changes as you change the metronome value. I integrated her code into the web app and passed the metronome value to the backend so that the BPM changes dynamically based on the user’s input. I also tried to get the editing feature of Flat.io working, but it seems that the free version using an iframe doesn’t support it. We are thinking of looking into the Premium version so that we can use the JavaScript API. The next step is to work on this and add a Past Transcriptions page.

Deeya’s Status Report for 03/29/25

This week I was able to show sheet music on our web app based on the user’s recording or audio file input. Once the user clicks on Generate Music, the web app calls the main.py file that integrates both Shivi and Grace’s latest rhythm and pitch detection algorithms, generates a MIDI file and stores it, converts it into MusicXML, and makes an API POST request to Flat.io to display the generated sheet music. The integration process is pretty seamless now, so whenever more changes are made to the algorithms it is easy to integrate the newest code with the web app and have it function properly.

For the API part, once I convert the MIDI to MusicXML, I use the API method that creates a new music score in the current user’s account. I send a JSON containing the title of the composition, the privacy setting (public/private), and the data, which is the MusicXML file:
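As a rough sketch of that request (not the exact code in our web app), the body can be built like this. The `title`, `privacy`, and `data` fields come from the description above; the endpoint URL, the bearer-token header, and both function names are assumptions to verify against Flat.io’s API reference.

```python
import json
import urllib.request

# Assumed endpoint for creating a score; check Flat.io's API reference.
FLAT_SCORES_URL = "https://api.flat.io/v2/scores"


def build_score_payload(title: str, musicxml: str, privacy: str = "private") -> dict:
    """Build the JSON body: composition title, privacy, and MusicXML data."""
    return {"title": title, "privacy": privacy, "data": musicxml}


def build_create_score_request(payload: dict, access_token: str) -> urllib.request.Request:
    """Prepare (but do not send) the POST request that creates the score."""
    return urllib.request.Request(
        FLAT_SCORES_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {access_token}",  # assumed auth scheme
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Sending is left to the caller, for example:
#   with urllib.request.urlopen(request) as response:
#       created_score = json.load(response)
```

Keeping the payload builder separate from the network call makes it easy to check the request body without contacting Flat.io.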

This then creates a score and an associated score_id, which enables the Flat.io embedding to place the generated sheet music into our web app:

Flat.io has a feature that allows the user to make changes to the generated sheet music, including the key and time signatures, notes, and any articulations and notations. This is what I will be working on next, which should then leave a good amount of time for fine-tuning and testing our project with the SOM students.

Team Status Report for 03/22/2025

This week we came up with important tasks for each of us to complete to make sure we have a working prototype for the interim demo. For the demo we are hoping that the user will be able to upload a recording and enter the key and the BPM. The web app should then trigger the transcription pipeline, after which the user can view the score. We are aiming to have this all finished for a rhythmically very simple piece of music.

These are the assigned tasks we each worked on this week:

Grace: Amplify Audio for Note segmentation, Review note segmentation after amplification, Rhythm Detection

Deeya: Get the Metronome working, Trigger backend from the webapp

Shivi: Learn how to write to MIDI file, Look into using MuseScore API + allowing user to edit generated music score and key signature

After the demo we are hoping to have the user be able to edit the score, refine the code to better transcribe more rhythmically complex audio, do lots of testing on a variety of audio, and potentially add tempo detection.

Also, all of our parts (microphone, XLR cable, and audio interface) have arrived, and this week we will try to get some recordings with our mic from the SOM flutists.

This week we completed the ethics assignment, which made us think a little bit more about plagiarism and user responsibilities when using our web app. We came to the conclusion that we might need to include a disclaimer telling users to be careful and pay attention to where they are recording their piece, so that someone else can’t record it as well. Also, after reflecting on our conversation with Professor Chang, we have decided not to work our stretch goal into the project anymore. We will instead focus on making sure the web app interface is as easy to use as possible and that the user can customize and edit the sheet music our web app generates. Overall we are on track with our schedule.

Deeya’s Status Report for 3/22/2025

This week I focused on getting the metronome to play at whatever speed the user enters. We no longer need to worry about the pitch of the metronome or making sure that it gets recorded by the microphone, because the detection algorithms use the user-inputted BPM instead. I also focused on getting the backend integrated with the web app. I was able to get the Python file Shivi and Grace have been working on to start running when the user clicks the Generate Music button on the web app. I started off with a hardcoded recording as input to the file, and then was able to use the uploaded recording as the input for the detection algorithm. Overall my progress with the web app is on schedule.
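The reason the metronome click does not need to be recorded is that the BPM alone fixes the length of a beat. A minimal sketch of that arithmetic follows; the function names are hypothetical and are not taken from Shivi and Grace’s file.

```python
def seconds_per_beat(bpm: float) -> float:
    """Length of one beat in seconds for a given tempo."""
    if bpm <= 0:
        raise ValueError("bpm must be positive")
    return 60.0 / bpm


def duration_in_beats(duration_s: float, bpm: float) -> float:
    """Express a note length measured in seconds as a number of beats."""
    return duration_s / seconds_per_beat(bpm)


# At 120 BPM a beat lasts 0.5 s, so a 0.5 s note is one beat long.
print(seconds_per_beat(120))
print(duration_in_beats(0.5, 120))
```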

Deeya’s Status Report for 3/15/25

This week I finished the UI on our website for recording the flute and the background separately, and I added the ability to hear a playback of the recorded audio. If a user decides to upload a recording, the ‘Start Recording’ button is disabled, and if a user presses the ‘Start Recording’ button, the ‘Upload’ button is disabled. Once the user starts recording, they can stop the recording and then replay it or redo it. The user can also adjust and hear the tempo of the metronome, which plays at a default of 60 BPM. There are still two modifications I need to figure out: (1) in the recording, the metronome can’t be heard because the recording only captures sound coming from outside the computer, so I am experimenting with some APIs I found that can capture both internal and external audio; (2) I need to adjust the pitch of the metronome to a value outside the range a flute can play.

For the Gen AI part, there is a Music Transformer implementation available online that uses the MAESTRO dataset, which focuses on piano music. I am thinking of using this instead of building the process from scratch. I downloaded the code and worked through its different parts. I was able to take a flute MIDI file and convert it into a format that the transformer can use. I want to continue learning and experimenting with this and see if I can fine-tune the model on flute MIDI files.

Deeya’s Status Report for 3/8/25

I mainly focused on my parts of the design review document and on editing it with Grace and Shivi. Shivi and I also had the opportunity to speak to flutists in Professor Almarza’s class about our project, and we were able to recruit a few of them to help us with recording samples and providing feedback throughout our project. It was a cool experience to hear their thoughts and to understand how this could be helpful for them during their practice sessions. For my parts of the project, I continued working on the website and learned how to record audio and store it in our database to be used later. I will now start putting more of my effort into the Gen AI part. I am thinking of using a Transformer-based generative model trained on MIDI sequences, so I will need to learn how to convert MIDI files into a series of token encodings of musical notes, timing, and dynamics that the Transformer model can process. I will also start compiling a dataset of flute MIDI files.
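To give a feel for what such token encodings could look like, here is a toy sketch, not the encoding of any particular library or of the model I end up using. It assumes a monophonic flute line, and the `Note` type, token names, 10 ms time step, and 8 velocity bins are all made up for illustration.

```python
from typing import List, NamedTuple


class Note(NamedTuple):
    pitch: int       # MIDI note number, 0-127
    start: float     # onset in seconds
    duration: float  # length in seconds
    velocity: int    # MIDI velocity (dynamics), 1-127


TIME_STEP_MS = 10   # assumed timing resolution
VELOCITY_BINS = 8   # assumed number of dynamics levels


def time_shift_token(seconds: float) -> str:
    """Quantize a time span to the grid and name it in milliseconds."""
    steps = round(seconds * 1000 / TIME_STEP_MS)
    return f"TIME_SHIFT_{steps * TIME_STEP_MS}"


def tokenize(notes: List[Note]) -> List[str]:
    """Turn a monophonic note list into note, timing, and dynamics tokens."""
    tokens: List[str] = []
    clock = 0.0  # end time of the previous note
    for note in sorted(notes, key=lambda n: n.start):
        if note.start > clock:
            # Silence between notes becomes a time shift (a rest).
            tokens.append(time_shift_token(note.start - clock))
        velocity_bin = note.velocity * VELOCITY_BINS // 128
        tokens.append(f"VELOCITY_{velocity_bin}")
        tokens.append(f"NOTE_ON_{note.pitch}")
        tokens.append(time_shift_token(note.duration))
        tokens.append(f"NOTE_OFF_{note.pitch}")
        clock = note.start + note.duration
    return tokens


print(tokenize([Note(72, 0.0, 0.5, 64), Note(74, 1.0, 0.5, 100)]))
```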

Team Status Report for 2/22/25

This past week we presented our Design Review slides in class and individually spent time working on our respective parts of the project. Deeya is almost done with the basic functionality of the website and has so far completed the UI, user profiles and authentication, and navigation between pages. Grace and Shivi are planning to meet up to combine their preprocessing code and figure out the best way to handle audio segmentation. They want to make sure their approach is efficient and works well with the pipelines that they are each building on their own.

This week we will focus on completing our design report, with each of us working on assigned sections independently before integrating everything into a cohesive report. We are planning to finish the report a few days before the deadline so that we can send it to Ankit and get his feedback. This Wednesday we will also be meeting with Professor Almarza from the School of Music and his flutists to explain our project and to come up with a plan on how we would like to integrate their expertise and knowledge into our project.

Overall we each feel that we are on track with our respective parts of the project, and we are excited to meet with the flutists this week. We haven’t changed much in our overall design plan, and we aren’t considering any new risks besides the ones we laid out in our summary report last week.