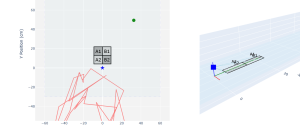

I was able to accurately determine where a user is located in space by calculating their angle from the camera and combining it with the depth measured at that point. I also quickly built a small web app to visually debug the gaze estimation. While testing it, I realized that the camera's position relative to the user plays a big role in the estimate. At some depths it becomes hard to calculate the gaze direction when the user looks to the right, or the error is very large compared to the true gaze direction. I spent the last couple of days reading research papers and found a better dataset called ETH-XGaze, which gives around 5 degrees of angular error compared to around 10 degrees with Gaze360. The positional error could decrease from around 5.51 inches to around 2.47 inches. I already have the ETH-XGaze-based gaze estimation running on my webcam, so I would just have to integrate it with the stereo camera software.
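As a rough illustration of the two calculations mentioned above, here is a minimal sketch, not the project's actual code. It assumes a pinhole camera model, that the depth value is measured along the camera's optical axis, and that the gaze target is a plane facing the user. The function names and the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical placeholders.

```python
import math


def pixel_to_camera_xyz(u, v, depth, fx, fy, cx, cy):
    """Back-project pixel (u, v) with a known depth into camera coordinates.

    Assumes a pinhole model: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. The result uses the same unit as depth.
    """
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return (x, y, depth)


def positional_error(distance, angular_error_deg):
    """Approximate offset on a plane facing the user for a given angular error.

    Assumes the gaze ray hits the plane head-on; oblique angles give larger offsets.
    """
    return distance * math.tan(math.radians(angular_error_deg))


# Hypothetical intrinsics for a 640x480 image and a user 30 inches away.
user_xyz = pixel_to_camera_xyz(u=400, v=240, depth=30.0,
                               fx=600.0, fy=600.0, cx=320.0, cy=240.0)
print(user_xyz)
print(positional_error(30.0, 10.0), positional_error(30.0, 5.0))
```

Under these assumptions, halving the angular error roughly halves the offset on the target at the same distance, which is the general trend behind the expected improvement.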

Progress

If I can’t get the gaze estimation to work more accurately in the next day or two, we will have to consider switching to something like a screen to simplify the problem.

Verification

Since putting LEDs on the board remains uncertain, I anticipate that verification for my subsystem will require significant manual testing. I’ll need to recruit users or volunteers to simulate gameplay scenarios. The process would involve instructing participants to look at specific squares, then using the previously mentioned web app to confirm their gaze is correctly detected. With LEDs implemented, this verification process could be more automated.
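The manual test described above could be scored with something like the following sketch. It assumes the gaze estimate has already been converted to an (x, y) point on the board plane, with the origin at one corner of the board, and that the board is a regular grid. The square size, grid dimensions, and function names are hypothetical.

```python
def point_to_square(x, y, square_size, rows, cols):
    """Return the (row, col) of the square containing (x, y), or None if off the board."""
    col = int(x // square_size)
    row = int(y // square_size)
    if 0 <= row < rows and 0 <= col < cols:
        return (row, col)
    return None


def hit_rate(trials, square_size, rows, cols):
    """Fraction of trials where the detected square matches the instructed one.

    trials is a list of (target_square, (x, y)) pairs, where target_square is
    the (row, col) the participant was told to look at.
    """
    if not trials:
        return 0.0
    hits = sum(
        1 for target, (x, y) in trials
        if point_to_square(x, y, square_size, rows, cols) == target
    )
    return hits / len(trials)


# Example with made-up measurements on a hypothetical 8x8 grid of 2-inch squares.
trials = [
    ((0, 0), (1.0, 1.2)),   # lands in the instructed square
    ((3, 4), (9.1, 5.0)),   # lands in row 2, col 4: a miss
]
print(hit_rate(trials, square_size=2.0, rows=8, cols=8))
```

Logging the instructed square next to the estimated point for each trial would also make it easier to see whether misses cluster at particular depths or gaze directions.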

Future Deliverables

Switch to ETH-XGaze dataset

Switch over to Jetson