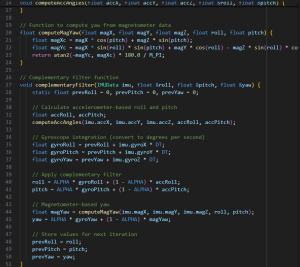

With the whole system assembled, I spent this week testing the robot with Sara and trying out different control algorithms. Previously, we ran the robot up the ramp at a fixed speed while adjusting the platform. Now we also control the robot's drive based on its velocity, so that the robot does not change angle too quickly while moving up the ramp and spill the water. We ran more tests with water and found that the water does not spill while the robot adjusts its angle.

However, when the robot first hits the ramp, the large jolt causes the water to spill. Since our only sensor is an IMU, we cannot detect when we are about to hit the ramp, so we do not know when to slow down. We tried a control system that moved as slowly as possible, but hitting the ramp still caused a spill. To fix this, we plan to either start the robot at the base of the ramp, so that it does not need to drive up to it, or drive a fixed distance, stop at the base of the ramp, and then accelerate again to climb.

We also have an issue where the IMU readings are severely off while adjusting on certain runs. It happens consistently enough that we tried to debug the source of the problem. We suspected microvibrations from the linear actuators, so we wrote another script that just runs the linear actuators while reading from the IMU, and determined that this was not the source. When examining the IMU data, however, I noticed some outliers. My guess is that when our control system reacts to those outliers, it makes the problem worse. So the plan is to also set an upper bound on the angles our control loop reacts to. This won't be a problem because we already stop the robot's wheels if the angle becomes too big, so the only time a reading would exceed the upper bound is if it is an IMU outlier.
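As a rough illustration of the upper-bound idea, here is a minimal C++ sketch of how the control loop could ignore implausible IMU angles. The names `AngleGate` and `kMaxPlausibleAngleDeg`, and the 30-degree limit, are assumptions made up for this example and are not values from our actual code. It simply holds the last accepted angle whenever a reading falls outside the bound.

```cpp
#include <cmath>

// Hypothetical upper bound in degrees. The real limit would be chosen
// just above the angle at which the wheels are already stopped.
constexpr float kMaxPlausibleAngleDeg = 30.0f;

// Passes plausible IMU angles through and holds the last accepted
// angle when a reading looks like an outlier.
struct AngleGate {
    float lastGoodDeg = 0.0f;

    float update(float readingDeg) {
        const bool plausible =
            std::isfinite(readingDeg) &&
            std::fabs(readingDeg) <= kMaxPlausibleAngleDeg;
        if (plausible) {
            lastGoodDeg = readingDeg;  // accept the new reading
        }
        return lastGoodDeg;  // otherwise keep the previous value
    }
};
```

In the main loop, the platform controller would use the value returned by `update()` instead of the raw IMU angle, so a single bad sample does not trigger a large correction.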

We are still behind schedule. We want to get the whole system working consistently with the Arduino before we try to move to the FPGA. The system works, but it is not consistent enough yet. With solutions to these problems already in mind, we should be able to make the system more consistent in the next day, so that we can try swapping the Arduino out for the FPGA. If the FPGA does not work by Tuesday, I plan to swap back to the Arduino on Wednesday so that we can show a consistent system on demo day. We would then also show the work we accomplished with the FPGA, even if it is not completely integrated into our system. Those are the deliverables we hope to accomplish, along with the poster and the final report.