This week, our group focused on starting to build out our boat model. Based on suggestions from Prof. Gloria, we decided to use a plastic base for our first prototype so that it will be stable in the water, rather than going with our original raft design. I helped my team attach foam board to the boat using a waterproof caulk. While we need to order new propellers (ours were not waterproof as advertised >:( ), I believe that we will be able to attach them to our boat easily when they come in.

On Monday, Emma and I looked into using threading with the Raspberry Pi so that we could control the camera and motor functions simultaneously. This did not work as intended because the Pi kept timing out, but Emma was able to switch to running the motors with an Arduino unit. She showed me how to control the motors via Arduino Cloud, so I will be able to help with controls in the future. Since we got both of our current motors set up properly, it shouldn’t be too hard to swap them out for the new ones when they come in. Waiting on the replacements mainly delays how soon we will be able to get our model in the water.

I also made good progress with the ML this week, although I have identified a list of things that I need to iron out in the upcoming week. This includes meeting with Emma to figure out how to extract pictures from the Raspberry Pi in a way that I can use them on my device.

Currently, the ML algorithm:

- Groups pixels from an image into blocks of a given size (for our project 10×10 but this value can be modified)

- Takes the average RGB value of each block

- Excludes blocks that are part of the “background” image

  - We plan on removing the background of the coral pictures, so I excluded white. I also excluded brown and black to try to keep the ground out of the calculations

- Utilizes the scikit-learn library for the KNN models

- Uses KNN layer #1 to split the remaining blocks into pink coral and blue coral types

- Uses KNN layer #2 to group each of the pink and blue corals into one of four categories (bleached, partially bleached, pale, and healthy) based on the intensity of the color

  - Note: pink and blue coral each have their own KNN model at this layer, so overall I am using three KNN models

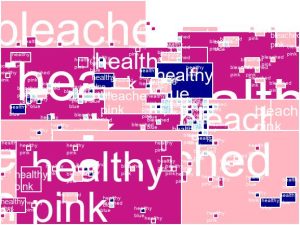

- Creates a new image where the classified blocks are recombined, colored, and labelled

Changes I need to make to the code:

- Add WAY more training points to the data given to the KNN algorithm to increase accuracy

- Figure out a better way to exclude the background as well as the ground/parts of the image that are not the reef

  - This will probably improve as I add more classified training points to the KNN

- Decide with the group how we want to display the end result data

- Optimize the code to run quicker/with more images

  - Right now I have been running it on test images one at a time

The process:

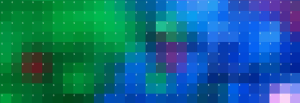

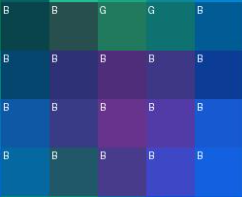

I started by trying to pixelate a random image. Below, I have included the original image and the image pixelated in groups of 10×10 pixels and 50×50 pixels. (The difference between the first and second image is hard to see here because the quality has been reduced, but the second one is visibly more pixelated at full resolution.) I used groupings of 10 and 50 in my testing because it was often easier to see the results with a larger grouping.

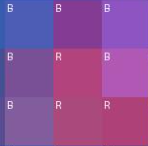

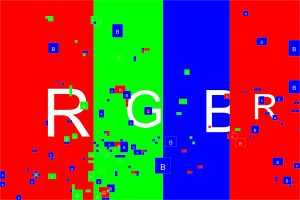

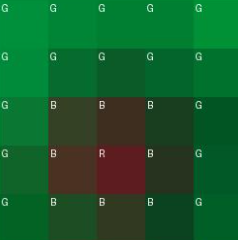

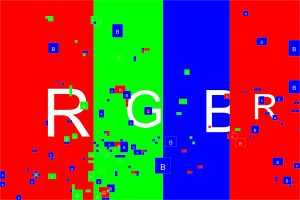
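As a rough sketch of how the pixelation step could look (this is not the project's actual code), the snippet below averages the RGB values inside each block with NumPy and then stretches those averages back to the original scale. The function names `block_means` and `pixelate` are made up for illustration, and the sketch assumes the image is already loaded as a height × width × 3 array. Edge pixels that do not fill a whole block are simply cropped.

```python
import numpy as np

def block_means(image, block=10):
    """Return the average color of every block x block tile.

    Assumes `image` has shape (height, width, channels). Rows and
    columns that do not fill a complete tile are cropped.
    """
    h, w, c = image.shape
    rows, cols = h // block, w // block
    cropped = image[:rows * block, :cols * block].astype(float)
    # Split each axis into (tile index, offset inside tile), then
    # average over the two "offset" axes.
    tiles = cropped.reshape(rows, block, cols, block, c)
    return tiles.mean(axis=(1, 3))  # shape: (rows, cols, channels)

def pixelate(image, block=10):
    """Rebuild an image in which every tile is filled with its mean color."""
    means = block_means(image, block)
    enlarged = np.repeat(np.repeat(means, block, axis=0), block, axis=1)
    return enlarged.round().astype(np.uint8)
```

Calling `pixelate(img, 10)` and `pixelate(img, 50)` on the same array would give the two block sizes compared above.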

Once I was able to pixelate the image, I worked on seeing if I would be able to classify the blocks as either red, green, or blue using one KNN model. Using a library with a built-in KNN model made this process a lot easier to test. Originally, I verified that the model was able to label the groupings by printing out the classifications. After this was done, I started thinking about how I should display these classifications using groupings of 10 and 50. I decided to make each grouping into a square with a small white letter (R, G, or B) to represent which color it is closest to. As this is hard to see on this scale, I included zoomed-in screenshots.

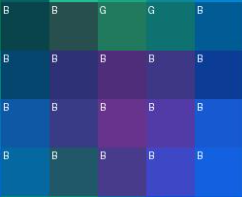

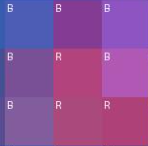
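The single-model red/green/blue test can be sketched with scikit-learn's `KNeighborsClassifier`. The training colors, the choice of `n_neighbors=1`, and the helper name `classify_blocks` are all assumptions made for this example, not the values used in the project.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Illustrative training points only: two hand-picked colors per class.
train_rgb = np.array([
    [255, 0, 0], [200, 30, 30],   # red
    [0, 255, 0], [30, 200, 30],   # green
    [0, 0, 255], [30, 30, 200],   # blue
])
train_labels = np.array(["R", "R", "G", "G", "B", "B"])

# n_neighbors=1 is an assumption; with more training data a larger k
# would usually be more robust.
rgb_knn = KNeighborsClassifier(n_neighbors=1)
rgb_knn.fit(train_rgb, train_labels)

def classify_blocks(means, model):
    """Label every block given an array of mean colors, shape (rows, cols, 3)."""
    rows, cols, _ = means.shape
    flat = means.reshape(-1, 3)
    return model.predict(flat).reshape(rows, cols)
```

Printing the array returned by `classify_blocks` is one way to do the kind of check described above before drawing anything.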

Because this is difficult to see, I decided that my next step should be coalescing the images so that I could combine the blocks that had the same classification and make it so that these blocks are all filled in with the same color. The result is shown below.

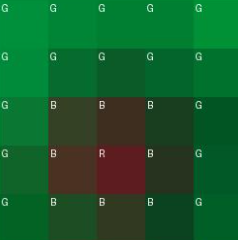

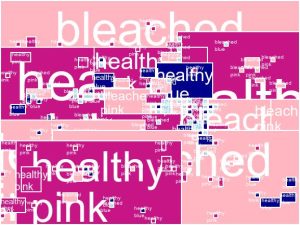

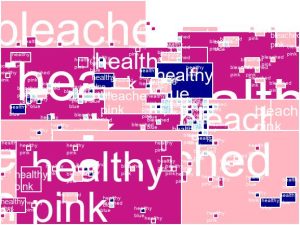
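One simple way to read the coalescing step is to fill every block with a single flat color per class, so neighboring blocks with the same label merge visually into one region. The sketch below takes that reading. The palette and the name `render_labels` are hypothetical, and it assumes the labels arrive as a 2-D array of "R", "G", and "B" strings.

```python
import numpy as np

# Assumed display colors, one per class label.
PALETTE = {"R": (255, 0, 0), "G": (0, 255, 0), "B": (0, 0, 255)}

def render_labels(labels, block=10, palette=PALETTE):
    """Turn a (rows, cols) grid of class labels into a flat-colored image."""
    rows, cols = labels.shape
    small = np.zeros((rows, cols, 3), dtype=np.uint8)
    for name, color in palette.items():
        # Boolean mask selects every block with this label.
        small[labels == name] = color
    # Scale each grid cell back up to block x block pixels.
    return np.repeat(np.repeat(small, block, axis=0), block, axis=1)
```

Blocks whose label is missing from the palette stay black in this version, which makes unexpected labels easy to spot.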

After this step was complete, I felt more confident moving into classifying the coral colors. While there are a lot of different coral varieties, we discussed as a group at the beginning of the project that for the scope of the capstone we should start with two types, pink and blue. If we are able, we can work on expanding to include more colors. This is when I implemented the two KNN layers. Using the same original rainbow picture, here is the new image that the classifier returned using chunks of 50×50.
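The two-layer arrangement (one model for coral type, then a separate health model per type) could be wired together roughly as follows. Every training color here is a placeholder invented for the example, with one sample per category, and `classify_block` is a hypothetical helper name. Real training data would need many more labelled points.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

HEALTH = ["bleached", "partially bleached", "pale", "healthy"]

# Placeholder colors (assumptions), ordered from washed out to saturated.
pink_rgb = np.array([[245, 235, 240], [240, 200, 215],
                     [240, 160, 190], [230, 60, 130]])
blue_rgb = np.array([[235, 240, 248], [200, 215, 240],
                     [150, 180, 235], [40, 90, 220]])

# Layer 1: pink coral versus blue coral.
type_knn = KNeighborsClassifier(n_neighbors=1)
type_knn.fit(np.vstack([pink_rgb, blue_rgb]), ["pink"] * 4 + ["blue"] * 4)

# Layer 2: one health model per coral type (three KNN models in total).
health_knn = {
    "pink": KNeighborsClassifier(n_neighbors=1).fit(pink_rgb, HEALTH),
    "blue": KNeighborsClassifier(n_neighbors=1).fit(blue_rgb, HEALTH),
}

def classify_block(rgb):
    """Return (coral type, health category) for one mean block color."""
    sample = np.asarray(rgb, dtype=float).reshape(1, 3)
    coral_type = str(type_knn.predict(sample)[0])
    health = str(health_knn[coral_type].predict(sample)[0])
    return coral_type, health
```

Keeping the health models in a dictionary keyed by coral type means a third color could be added later without changing `classify_block`.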

Next, I started using pictures of real coral. Originally, I tested images with the background and images with the background removed. For example, here are the two original images.

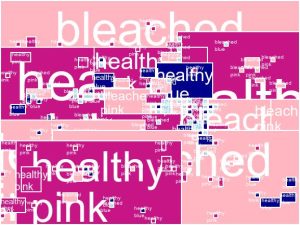

For the image with a background, below are the results I got using groupings of 10×10 and 50×50 pixels. As we can see, the blue background as well as the ground are categorized as healthy coral due to their high saturation.

For the images without the background, below are the results I got when using the classifier on 10×10 and 50×50 pixels. Here, the background is misclassified because the white is interpreted as bleached coral.

Next, I worked on excluding groups whose average color is white, as seen below. While this did help, it does still require further tweaking.

Finally for this week, I tried to exclude black and brown colors from being classified as well, as these ground colors are not coral. As I said earlier, this will also need to be adjusted next week, although I am optimistic that the inclusion of more training data will help clean up these images greatly. The images with groupings of 25×25 and 10×10 are included below.
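One possible way to skip white, black, and brown blocks before classification is to measure how far each block's mean color is from a few reference background colors and mask out anything that is close. The reference values, the threshold of 60, and the name `background_mask` are all assumptions for this sketch and would need tuning against real reef images.

```python
import numpy as np

# Assumed reference colors for the background: white, black, brown.
BACKGROUND_COLORS = np.array([
    [255, 255, 255],
    [0, 0, 0],
    [139, 69, 19],
], dtype=float)

def background_mask(means, threshold=60.0):
    """Return a boolean (rows, cols) array that is True for background blocks.

    `means` holds mean block colors with shape (rows, cols, 3). A block
    counts as background when its Euclidean distance in RGB space to any
    reference color is below `threshold`.
    """
    # Broadcast to shape (rows, cols, n_refs, 3) and measure each distance.
    diffs = means[..., None, :] - BACKGROUND_COLORS
    dists = np.linalg.norm(diffs, axis=-1)
    return dists.min(axis=-1) < threshold
```

Only blocks where the mask is False would then be passed on to the coral classifiers.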

Overall I think that I am on track and that our group is in a good place for the upcoming interim demo.