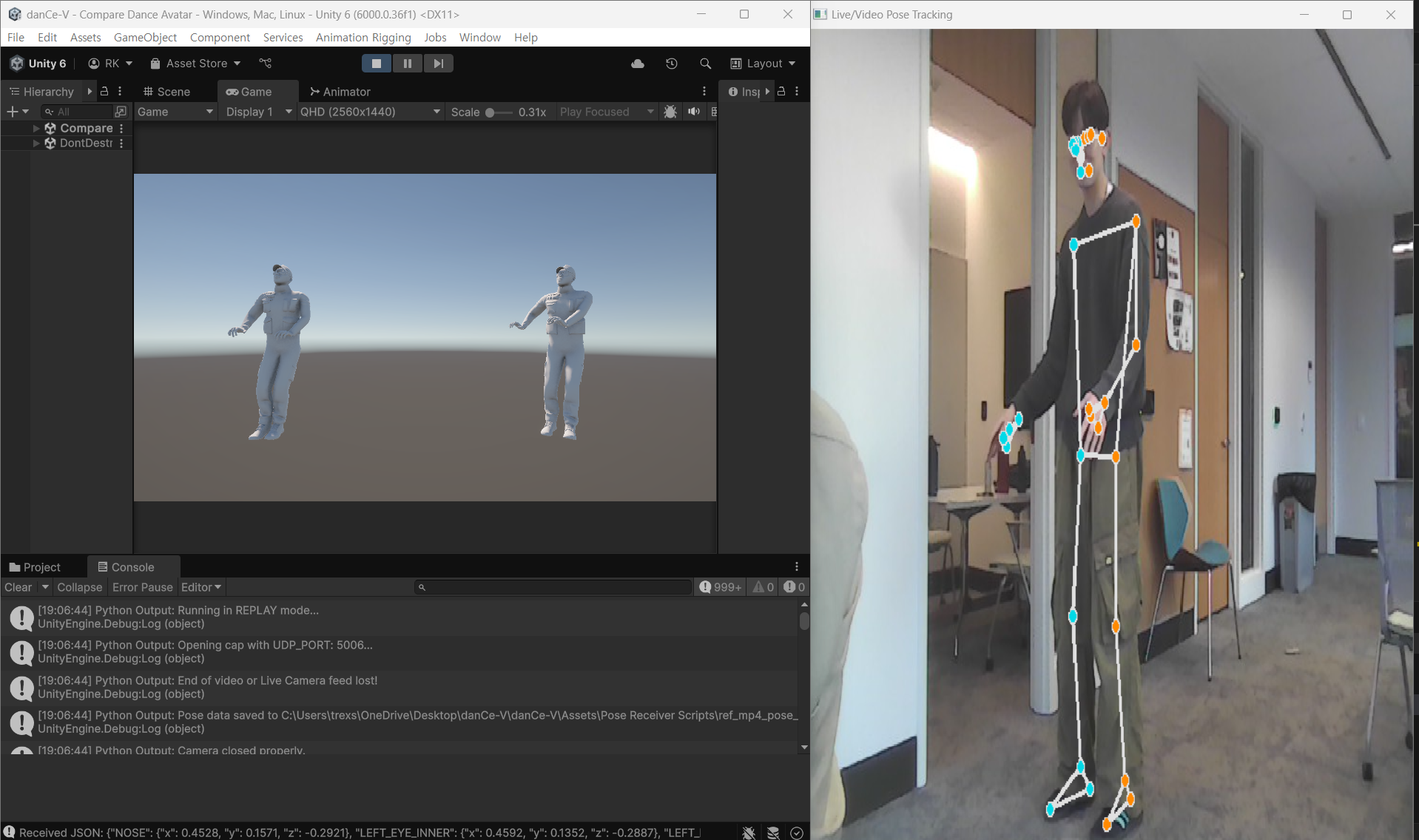

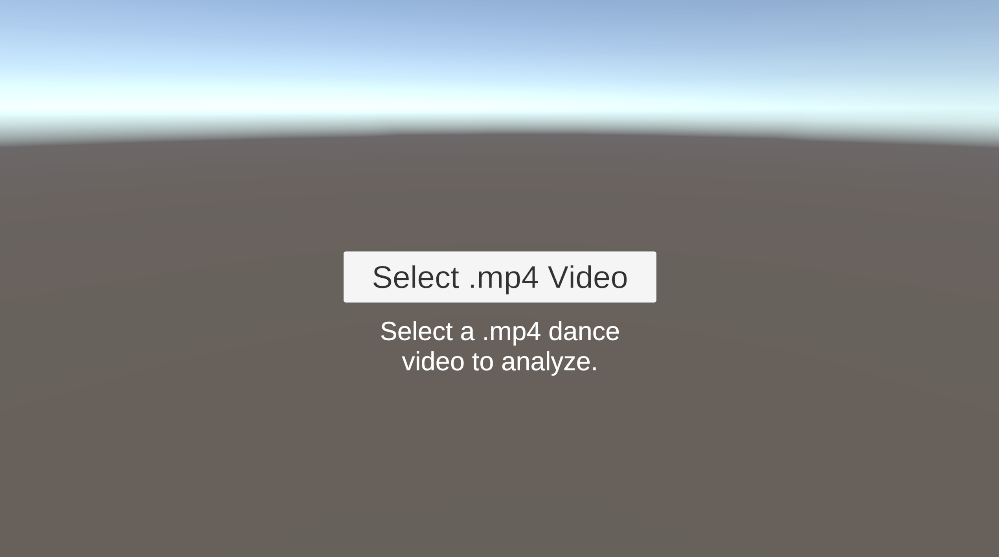

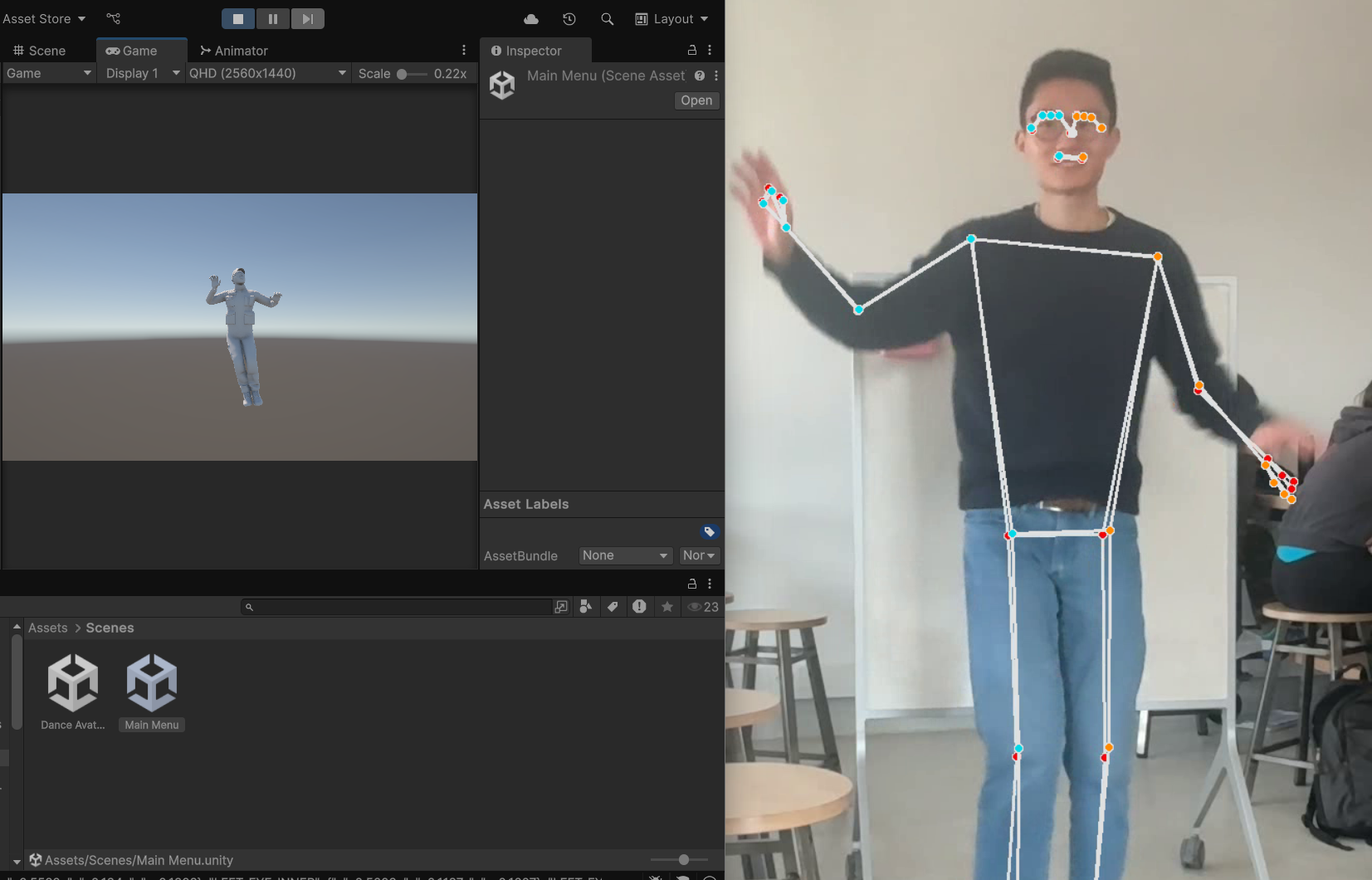

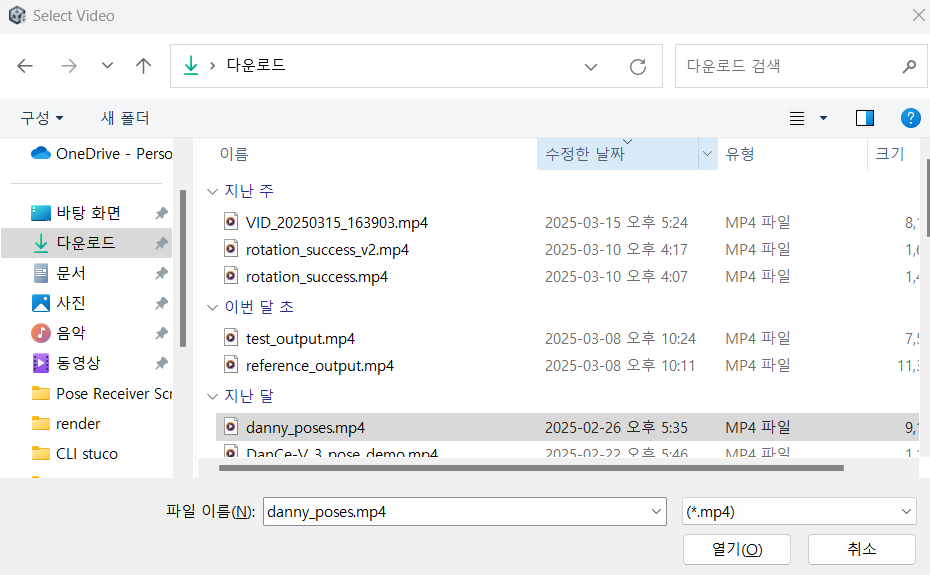

This week, I focused on several important optimizations and validation efforts for our Unity-based dance game project that uses MediaPipe and OpenCV. I worked on fixing a synchronization issue between the reference video and the reference avatar playback. Previously, the avatar was moving slightly out of sync with the video due to timing discrepancies between the video frame progression and the avatar motion updates. After adjusting the frame timestamp alignment logic and ensuring consistent pose data transmission timing, synchronization improved significantly. I also fine-tuned the mapping between the MediaPipe/OpenCV landmark detection and the Unity avatar animation, smoothing transitions and reducing jitter for a more natural movement appearance. Finally, I worked with the team on setting up a more comprehensive unit testing framework and began preliminary user testing to gather feedback on responsiveness and overall play experience.
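Here is a minimal Python sketch of the timestamp-alignment idea. It assumes the reference poses are stored as a time-sorted list of `(timestamp_seconds, landmarks)` pairs. It also assumes the avatar looks up its pose from the video's current playback time instead of advancing on its own frame counter. `PoseFrame` and `pose_for_video_time` are hypothetical names used only for illustration; this is not our actual Unity code.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple

# Hypothetical representation: (timestamp in seconds, list of (x, y, z) landmarks).
PoseFrame = Tuple[float, List[Tuple[float, float, float]]]


def pose_for_video_time(frames: Sequence[PoseFrame], video_time: float) -> PoseFrame:
    """Return the reference pose frame whose timestamp is closest to video_time.

    Assumes `frames` is sorted by timestamp. Driving the avatar from the video
    clock (instead of a separate frame counter) keeps the two from drifting apart.
    """
    if not frames:
        raise ValueError("no reference frames")
    timestamps = [t for t, _ in frames]
    i = bisect_left(timestamps, video_time)
    if i == 0:
        return frames[0]  # before the first pose: clamp to the start
    if i == len(frames):
        return frames[-1]  # past the last pose: clamp to the end
    before, after = frames[i - 1], frames[i]
    # Choose whichever neighbor is closer in time to the current video position.
    if video_time - before[0] <= after[0] - video_time:
        return before
    return after
```

If the video stutters or skips a frame, the avatar simply jumps to the matching pose and does not fall behind. In a real update loop, the timestamp list would be built once rather than on every call.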

Overall, we are on schedule relative to our project timeline. While the video-avatar synchronization issue initially caused a small delay, the fix was completed early enough in the week to avoid impacting later goals. Based on input from Peiyu and Tamal, we created a questionnaire and are running user tests to collect usability feedback. To ensure we stay on track, we plan to conduct additional rounds of user feedback and tuning for both the scoring system and gameplay responsiveness. No major risks have been identified at this stage, and our current progress provides a strong foundation for the next phase, which focuses on refining the scoring algorithms and preparing for a full playtest.

For testing, we implemented unit and system tests across the major components.

On the MediaPipe/OpenCV side, we performed the following unit tests:

- Landmark Detection Consistency Tests: Fed known video sequences and verified that the same pose landmarks (e.g., elbow, knee) were consistently detected across frames.

- Pose Smoothing Validation: Verified that the smoothing filter applied to landmarks reduced jitter without introducing significant additional lag.

- Packet Loss Handling Tests: Simulated missing landmark frames and confirmed that the system handled them gracefully without crashing or sending corrupted data.
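The smoothing and packet-loss tests check behavior like the following minimal Python sketch. The report does not say which filter we use, so this assumes a simple exponential moving average (EMA) per landmark. `LandmarkSmoother` and `jitter` are hypothetical helpers, and a missing frame is represented as `None`.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical type: one normalized 2D landmark (x, y).
Point = Tuple[float, float]


class LandmarkSmoother:
    """Exponential moving average (EMA) over one 2D landmark.

    alpha near 1.0 follows the raw signal closely (less lag, more jitter);
    alpha near 0.0 smooths harder (less jitter, more lag).
    A missing frame (None) returns the last smoothed value instead of
    forwarding corrupted data.
    """

    def __init__(self, alpha: float = 0.5) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state: Optional[Point] = None

    def update(self, raw: Optional[Point]) -> Optional[Point]:
        if raw is None:
            # Packet loss: hold the last good value (None if nothing seen yet).
            return self.state
        if self.state is None:
            self.state = raw
        else:
            a = self.alpha
            self.state = (
                a * raw[0] + (1 - a) * self.state[0],
                a * raw[1] + (1 - a) * self.state[1],
            )
        return self.state


def jitter(points: Sequence[Point]) -> float:
    """Mean absolute frame-to-frame change in x, used as a simple jitter metric."""
    diffs = [abs(b[0] - a[0]) for a, b in zip(points, points[1:])]
    return sum(diffs) / len(diffs) if diffs else 0.0
```

A validation test can feed a noisy but stationary landmark through the smoother and assert that `jitter` drops. It can then feed a step from 0 to 1 and assert that the output gets close to 1 within a few frames. Together these two checks capture the "less jitter without significant added lag" trade-off that `alpha` controls.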

On the Unity gameplay side, we carried out the following unit and system tests:

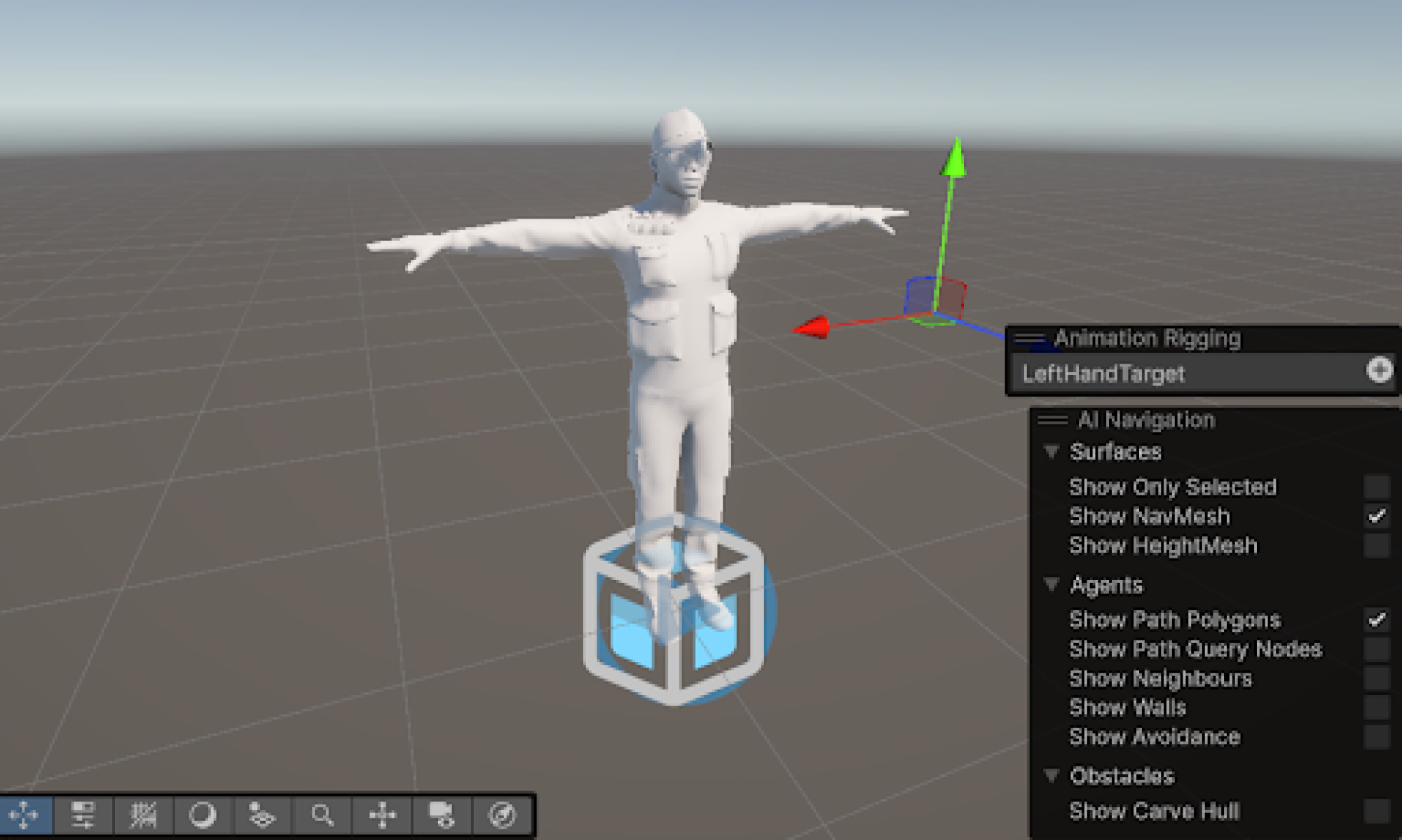

- Avatar Pose Mapping Tests: Verified that landmark coordinates were correctly mapped to avatar joints and stayed within the normalized bounds specified in the design requirements.

- Frame Rate Stability Tests: Ensured the gameplay maintained a smooth frame rate (30 FPS or higher) under both normal and stressed system conditions.

- Scoring System Unit Tests: Tested the DTW (Dynamic Time Warping) score calculations to ensure robust behavior across small variations in player movement.

- End-to-End Latency Measurements: Measured the total time from live player movement to the corresponding avatar motion on screen, confirming that latency stayed under the 100 ms required by the design requirements.
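To show what the DTW scoring tests exercise, here is a small Python sketch of classic dynamic time warping over pose sequences. It makes three assumptions: each pose is a flattened list of normalized joint coordinates, all poses have the same length, and per-frame cost is Euclidean distance. The exponential mapping to a 0-100 score and the `scale` constant are hypothetical choices, not our tuned scoring formula.

```python
import math
from typing import Sequence

# Hypothetical type: one pose as a flattened list of normalized joint coordinates.
Pose = Sequence[float]


def pose_distance(a: Pose, b: Pose) -> float:
    """Euclidean distance between two flattened poses of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def dtw_distance(ref: Sequence[Pose], player: Sequence[Pose]) -> float:
    """Classic O(n*m) dynamic time warping cost between two pose sequences."""
    n, m = len(ref), len(player)
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = pose_distance(ref[i - 1], player[j - 1])
            # Extend the cheapest of: diagonal match, or one sequence waiting.
            cost[i][j] = d + min(cost[i - 1][j - 1], cost[i - 1][j], cost[i][j - 1])
    return cost[n][m]


def dtw_score(ref: Sequence[Pose], player: Sequence[Pose], scale: float = 1.0) -> float:
    """Map DTW cost to a 0-100 score; `scale` is a hypothetical tuning constant."""
    if not ref or not player:
        return 0.0
    # Normalize by an upper bound on warping-path length so longer clips
    # are not penalized just for having more frames.
    avg = dtw_distance(ref, player) / (len(ref) + len(player))
    return 100.0 * math.exp(-avg / scale)
```

DTW lets one sequence "wait" on the other. For example, a player who holds the first pose one frame too long (`[[0.0], [0.0], [1.0], [2.0]]` against `[[0.0], [1.0], [2.0]]`) still gets a warping cost of 0. Small positional noise only lowers the score slightly. These are the two kinds of robustness a scoring unit test would assert.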

From the analysis of these tests, we found that implementing lightweight interpolation during landmark transmission greatly improved avatar smoothness without significantly impacting latency. We also shifted the reference avatar update logic from Unity’s Update() phase to LateUpdate(), which eliminated subtle drift issues during longer play sessions.
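One way to sketch the lightweight interpolation in Python is to linearly interpolate each landmark between the two most recently received frames. This assumes each packet carries a timestamp and a fixed number of `(x, y, z)` landmarks. `lerp_landmarks` and `interpolation_factor` are hypothetical names, not the functions in our pipeline.

```python
from typing import List, Sequence, Tuple

# Hypothetical type: one landmark as (x, y, z).
Landmark = Tuple[float, float, float]


def lerp_landmarks(prev: Sequence[Landmark], curr: Sequence[Landmark], t: float) -> List[Landmark]:
    """Linearly interpolate every landmark between two received frames.

    t = 0.0 returns `prev` and t = 1.0 returns `curr`. t is clamped to [0, 1]
    so the avatar never extrapolates past the latest real measurement.
    """
    if len(prev) != len(curr):
        raise ValueError("frames must have the same number of landmarks")
    t = max(0.0, min(1.0, t))
    return [
        tuple(p + (c - p) * t for p, c in zip(a, b))
        for a, b in zip(prev, curr)
    ]


def interpolation_factor(prev_time: float, curr_time: float, now: float) -> float:
    """Fraction of the way from prev_time to curr_time at render time `now`."""
    span = curr_time - prev_time
    if span <= 0:
        return 1.0  # duplicate or out-of-order timestamps: snap to the newest frame
    return (now - prev_time) / span
```

Rendering between the last two packets adds at most one packet interval of delay. That is why this kind of interpolation smooths motion while keeping the added latency small. Clamping `t` avoids overshoot when a packet arrives late.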

Progress on the project is generally on schedule. While optimizing the avatar processing in parallel took slightly longer than anticipated due to synchronization challenges, the integration of the DTW algorithm proceeded reasonably well once we established the data pipeline. If necessary, I will allocate additional hours next week to refine the comparison algorithm and improve the UI feedback for the player.

Additionally, I successfully optimized the avatar