This week I focused on conducting user experiments with Rex and Akul. We wrote up a form and are in the process of asking people to test out our application and give their feedback. While we don’t have much time to implement drastic changes to the system, it’s still good to have a direction in case we want to make any future changes to make the dance coach even better than it currently is. Other than that, I’ve helped Rex implement a few final features as well as solve remaining bugs, including the problem we encountered where the reference avatar doesn’t fully line up with the reference video playback.

Team Status Report for 4/26

Risk Management:

All prior risks have been mitigated. We have not identified any new risks as our project approaches its grand finale. We have conducted, and are continuing to conduct, comprehensive testing to ensure that our project specifications meet user requirements.

Design Changes:

There were no design changes this week.

Testing:

For testing, we implemented unit and system tests across the major components.

On the MediaPipe/OpenCV side, we performed the following unit tests:

- Landmark Detection Consistency Tests: Fed known video sequences and verified that the same pose landmarks (e.g., elbow, knee) were consistently detected across frames.

- Pose Smoothing Validation: Verified that the smoothing filter applied to landmarks reduced jitter without introducing significant additional lag.

- Packet Loss Handling Tests: Simulated missing landmark frames and confirmed that the system handled them gracefully without crashing or sending corrupted data.
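The pose smoothing check above can be sketched roughly as follows. This is a minimal illustration that assumes an exponential moving average; the report does not say which smoothing filter was used, so the filter, the `alpha` value, the jitter metric, and the synthetic data are all hypothetical.

```python
import random

def ema_smooth(samples, alpha=0.5):
    """Exponential moving average over a 1-D list of coordinates (assumed filter)."""
    smoothed = [samples[0]]
    for value in samples[1:]:
        smoothed.append(alpha * value + (1 - alpha) * smoothed[-1])
    return smoothed

def jitter(samples):
    """Mean absolute frame-to-frame change, used here as a simple jitter proxy."""
    steps = [abs(b - a) for a, b in zip(samples, samples[1:])]
    return sum(steps) / len(steps)

# Synthetic elbow x-coordinate: slow drift plus per-frame detection noise.
rng = random.Random(0)
raw = [0.002 * i + rng.uniform(-0.02, 0.02) for i in range(200)]
smoothed = ema_smooth(raw, alpha=0.3)

print(f"raw jitter: {jitter(raw):.4f}, smoothed jitter: {jitter(smoothed):.4f}")
```

A lower `alpha` removes more jitter but makes the filtered landmark trail the raw one by more frames, which is the lag trade-off the test is meant to catch.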

On the Unity gameplay side, we carried out the following unit and system tests:

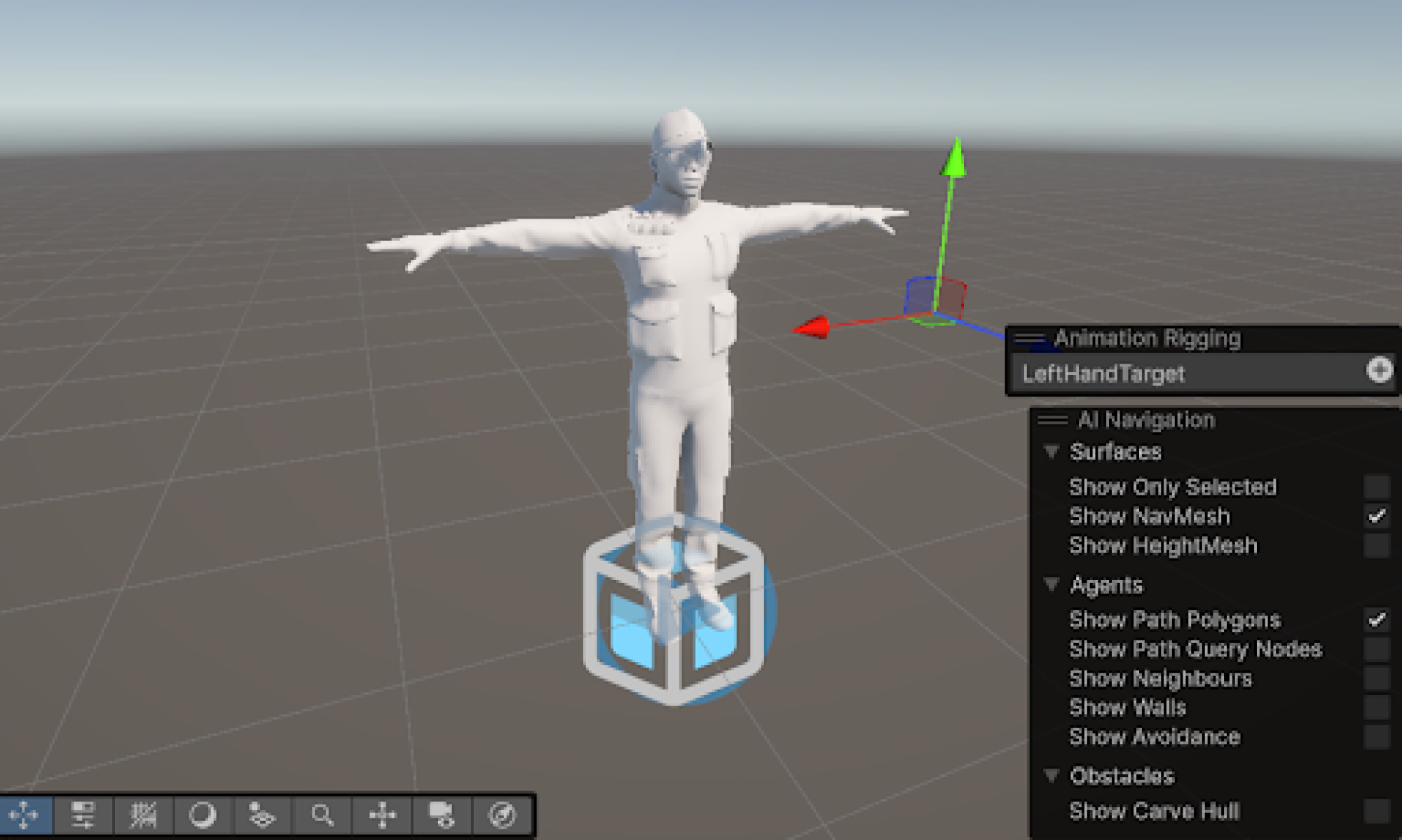

- Avatar Pose Mapping Tests: Verified that landmark coordinates were correctly mapped to avatar joints and stayed within normalized bounds, as specified in the design requirements.

- Frame Rate Stability Tests: Ensured the gameplay maintained a smooth frame rate (30 FPS or higher) under normal and stressed system conditions.

- Scoring System Unit Tests: Tested the DTW (Dynamic Time Warping) score calculations to ensure robust behavior across small variations in player movement.

- End-to-End Latency Measurements: Measured the total time from live player movement to avatar motion on screen and confirmed that latency stayed under 100 ms, as required by the design requirements.
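To make the scoring system test concrete, here is a small sketch of a textbook DTW distance and the kind of check such a unit test might perform. The function and the toy pose sequences are illustrative assumptions, not the project's actual scoring code.

```python
import math

def dtw_distance(a, b):
    """Classic O(len(a) * len(b)) DTW between two sequences of pose vectors."""
    inf = float("inf")
    n, m = len(a), len(b)
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of the three allowed predecessor alignments.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]

reference = [(0.0, 0.0), (0.1, 0.2), (0.3, 0.5), (0.6, 0.7)]
# Same movement, but the player holds the second pose for one extra frame.
delayed = [(0.0, 0.0), (0.1, 0.2), (0.1, 0.2), (0.3, 0.5), (0.6, 0.7)]
# A genuinely different movement.
different = [(0.0, 0.0), (0.5, 0.0), (0.9, 0.1), (1.0, 0.0)]

print(dtw_distance(reference, delayed), dtw_distance(reference, different))
```

The timing variation costs nothing under DTW, while the different movement is still penalized, which is the robustness property the test targets.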

From the analysis of these tests, we found that implementing lightweight interpolation during landmark transmission greatly improved avatar smoothness without significantly impacting latency. We also shifted the reference avatar update logic from Unity’s Update() phase to LateUpdate(), which eliminated subtle drift issues during longer play sessions.
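As a rough illustration of lightweight interpolation between landmark frames, the sketch below linearly blends two consecutive frames. The report does not specify the interpolation scheme or where in the pipeline it runs, so the function name and data layout are assumptions.

```python
def lerp_landmarks(prev_frame, next_frame, t):
    """Linearly interpolate two landmark frames; t=0 gives prev, t=1 gives next."""
    return [
        tuple(p + (n - p) * t for p, n in zip(prev_pt, next_pt))
        for prev_pt, next_pt in zip(prev_frame, next_frame)
    ]

# Two landmarks per frame, each an (x, y, z) tuple.
prev_frame = [(0.0, 0.0, 0.0), (0.2, 0.4, 0.0)]
next_frame = [(0.1, 0.0, 0.0), (0.4, 0.8, 0.2)]

# A frame halfway between the two received frames.
midpoint = lerp_landmarks(prev_frame, next_frame, 0.5)
print(midpoint)
```

Because each interpolated frame needs only one multiply-add per coordinate, the extra cost per rendered frame is small.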

Team Status Report for 4/19

Risk Management:

All prior risks have been mitigated. We have not identified any new risks as our project approaches its grand finale. We have conducted, and are continuing to conduct, comprehensive testing to ensure that our project specifications meet user requirements.

Design Changes:

Comparison Algorithm:

- We have changed our core algorithm to FastDTW, as our testing shows that it resolves the avatar speed issue without sacrificing much comparison accuracy.
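To give a feel for why a constrained DTW is faster, the sketch below uses a fixed band around the diagonal (a Sakoe-Chiba band). This is not FastDTW itself, which refines a coarse alignment across multiple resolutions; it is a simpler stand-in that shows the same trade of a restricted search space for speed. All names and signals are hypothetical.

```python
import math

def banded_dtw(a, b, radius):
    """DTW restricted to cells with |i - j| <= radius; returns (cost, cells visited)."""
    inf = float("inf")
    n, m = len(a), len(b)
    radius = max(radius, abs(n - m))  # the band must reach the final cell
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    cells = 0
    for i in range(1, n + 1):
        for j in range(max(1, i - radius), min(m, i + radius) + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
            cells += 1
    return cost[n][m], cells

# A 1-D joint signal and the same motion performed 3 frames late.
reference = [math.sin(i / 10) for i in range(100)]
user = [math.sin((i - 3) / 10) for i in range(100)]

full_cost, full_cells = banded_dtw(reference, user, radius=100)  # unconstrained
band_cost, band_cells = banded_dtw(reference, user, radius=5)
print(full_cells, band_cells)
```

The banded version visits far fewer cells; its cost can never be lower than the unconstrained cost, and the gap between the two is the accuracy given up.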

User Interface:

- Added scoreboard: Users can now see their real-time scores in the Unity interface.

- Added reference ghost: A reference ghost avatar is now overlaid on the user’s real-time avatar, so users know where they should be at all times during the dance.

- Added reference video playback: Instead of following a virtual avatar, the user can now follow the real reference video, played back in the Unity interface.

Testing:

We have conducted a thorough analysis and comparison of all five comparison algorithms implemented. Here are their descriptions and here are the videos comparing their differences.

Danny’s Status Report for 4/19

This week I focused on testing the finalized comparison algorithm and collecting data to make an informed decision as to which algorithm to use for the final demo. We ran comprehensive testing on five different algorithms (DTW, FastDTW, SparseDTW, Euclidean Distance, Velocity) and collected data on the performance of these algorithms on capturing different aspects of movement similarities and differences.
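To illustrate how two of the non-DTW metrics can capture different aspects of movement, here is a minimal sketch of a position-based Euclidean score and a velocity-based score. The exact definitions used in the project are not given in this report, so these formulas and names are assumptions.

```python
import math

def euclidean_score(user, reference):
    """Mean per-frame joint distance; assumes both sequences are frame-aligned."""
    frames = list(zip(user, reference))
    total = 0.0
    for user_frame, ref_frame in frames:
        total += sum(math.dist(u, r) for u, r in zip(user_frame, ref_frame)) / len(ref_frame)
    return total / len(frames)

def velocities(sequence):
    """Per-joint displacement vectors between consecutive frames."""
    return [
        [tuple(c1 - c0 for c0, c1 in zip(p0, p1)) for p0, p1 in zip(f0, f1)]
        for f0, f1 in zip(sequence, sequence[1:])
    ]

def velocity_score(user, reference):
    """Euclidean score on frame-to-frame motion instead of absolute position."""
    return euclidean_score(velocities(user), velocities(reference))

# One joint moving right; the user performs the same motion offset by 0.5 in x.
reference = [[(0.1 * i, 0.0)] for i in range(5)]
user = [[(0.1 * i + 0.5, 0.0)] for i in range(5)]

print(euclidean_score(user, reference), velocity_score(user, reference))
```

In this toy case the position metric reports a constant error from the offset, while the velocity metric sees the two motions as nearly identical.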

Throughout this project, the two major things I had to learn were NumPy and OpenCV. These tools were completely new to me, and I had to learn them from scratch. OpenCV was used to process our input videos and provide us with the 3D capture data, and NumPy was a necessary library that made implementing the complex calculations in our comparison algorithms much easier than it otherwise would have been. For OpenCV, I found the official website extremely useful, with detailed tutorials that walk users through the implementation process. I also benefited greatly from the code examples posted there, since they often provided a good starting point. For NumPy, I turned to a resource I have often used when learning a new programming language or library: W3Schools. I found this website to have a well-laid-out introduction to NumPy, as well as numerous specific examples. With all those resources available, I was able to pick up the library and put it to use relatively quickly.

Danny’s Status Report for 4/12

This week I focused on integrating our comparison algorithm with the Unity interface, collaborating closely with Rex and Akul. We established a robust UDP communication protocol between the Python-based analysis module and Unity. We encountered initial synchronization issues where the avatars would occasionally freeze or jump, which we traced to packet loss during high CPU utilization. We implemented a heartbeat mechanism and frame sequence numbering that improved stability significantly.
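A rough sketch of how frame sequence numbering and heartbeats can be layered on top of UDP payloads is shown below. The packet layout, type codes, and class names are hypothetical; the actual wire format used between the Python module and Unity is not described in this report.

```python
import struct

HEADER = struct.Struct("!BI")  # packet type (1 byte), sequence number (4 bytes)
TYPE_LANDMARKS, TYPE_HEARTBEAT = 0, 1

def pack_landmarks(seq, coords):
    """Header followed by a flat list of float32 coordinates."""
    return HEADER.pack(TYPE_LANDMARKS, seq) + struct.pack(f"!{len(coords)}f", *coords)

def pack_heartbeat(seq):
    """Header only; tells the receiver the sender is still alive."""
    return HEADER.pack(TYPE_HEARTBEAT, seq)

def unpack(packet):
    kind, seq = HEADER.unpack_from(packet)
    body = packet[HEADER.size:]
    coords = list(struct.unpack(f"!{len(body) // 4}f", body))
    return kind, seq, coords

class Receiver:
    """Tracks the last sequence number to count drops and skip stale packets."""

    def __init__(self):
        self.last_seq = -1
        self.dropped = 0

    def accept(self, packet):
        kind, seq, coords = unpack(packet)
        if seq <= self.last_seq:
            return None  # duplicate or out-of-order packet: ignore it
        self.dropped += seq - self.last_seq - 1
        self.last_seq = seq
        return coords if kind == TYPE_LANDMARKS else None
```

In a real pipeline these bytes would be sent with `socket.sendto` on a `SOCK_DGRAM` socket; the sketch leaves the socket out so the loss-detection logic can be exercised deterministically.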

We then collaborated on mapping the comparison results to the Unity visualization. We developed a color gradient system that highlights body segments based on deviation severity. During our testing, we identified that hip and shoulder rotations were producing too many false positives in the error detection. We then tuned the algorithm’s weighting factors to prioritize key movement characteristics based on dance style, which improved the relevance of the feedback.
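The color gradient and weighting ideas can be sketched as follows. The green-to-red scale, the normalization constant, the style name, and every weight value here are hypothetical placeholders rather than the tuned values from the project.

```python
def deviation_to_rgb(deviation, max_deviation=1.0):
    """Map a deviation to a green -> yellow -> red gradient (RGB components in 0..1)."""
    t = min(max(deviation / max_deviation, 0.0), 1.0)
    if t < 0.5:
        return (2 * t, 1.0, 0.0)    # green toward yellow
    return (1.0, 2 * (1 - t), 0.0)  # yellow toward red

# Hypothetical per-style weights; rotations are down-weighted to cut false positives.
STYLE_WEIGHTS = {
    "hip_hop": {"arm": 1.0, "leg": 1.0, "hip_rotation": 0.4, "shoulder_rotation": 0.4},
}

def weighted_deviation(deviations, style):
    """Weighted mean of per-segment deviations for the given dance style."""
    weights = STYLE_WEIGHTS[style]
    total = sum(weights[name] * value for name, value in deviations.items())
    return total / sum(weights[name] for name in deviations)

# A large hip rotation error with perfect arms contributes less than a plain mean would.
score = weighted_deviation({"arm": 0.0, "hip_rotation": 0.7}, "hip_hop")
print(score, deviation_to_rgb(score))
```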

As for the verification and validation portion, I am in charge of the CV subsystem of our project. For this subsystem specifically, my plans are as follows:

Pose Detection Accuracy Testing

- Completed Tests: We’ve conducted initial verification testing of our MediaPipe implementation by comparing detected landmarks against ground truth positions marked by professional dancers in controlled environments.

- Planned Tests: We’ll perform additional testing across varied lighting conditions and distances (1.5-3.5m) to verify consistent performance across typical home environments.

- Analysis Method: Statistical comparison of detected vs. ground truth landmark positions, with calculation of average deviation in centimeters.
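The average-deviation calculation described above might look like the sketch below. It assumes that both the detected and ground truth positions have already been converted to centimeters in a shared coordinate frame; that conversion step and the sample values are assumptions.

```python
import math
import statistics

def landmark_deviations_cm(detected, ground_truth):
    """Per-landmark Euclidean error; inputs are assumed to be in centimeters."""
    return [math.dist(d, g) for d, g in zip(detected, ground_truth)]

# Made-up positions for two landmarks, as (x, y, z) in centimeters.
detected = [(10.0, 20.0, 0.0), (33.0, 44.0, 0.0)]
ground_truth = [(10.0, 23.0, 4.0), (30.0, 40.0, 0.0)]

errors = landmark_deviations_cm(detected, ground_truth)
mean_error = statistics.mean(errors)
print(f"mean deviation: {mean_error:.1f} cm")
```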

Real-Time Processing Performance

- Completed Tests: We’ve measured frame processing rates on a typical hardware configuration (mid-range laptop).

- Planned Tests: Extended duration testing (20+ minute sessions) to verify performance stability and resource utilization over time.

- Analysis Method: Performance profiling of CPU/RAM usage during extended sessions to ensure long-term system stability.

Team Status Report for 4/12

Risk Management:

Risk: Comparison algorithm slowing down Unity feedback

Mitigation Strategy/Contingency plan: We plan to reduce the amount of computation required by having the DTW algorithm run on a larger buffer. If this does not work, we will fall back to a simpler algorithm selected from the few we are testing now.

Design Changes:

There were no design changes this week. We have continued to execute our schedule.

Verification and Validation:

Verification Testing

Pose Detection Accuracy Testing

- Completed Tests: We’ve conducted initial verification testing of our MediaPipe implementation by comparing detected landmarks against ground truth positions marked by professional dancers in controlled environments.

- Planned Tests: We’ll perform additional testing across varied lighting conditions and distances (1.5-3.5m) to verify consistent performance across typical home environments.

- Analysis Method: Statistical comparison of detected vs. ground truth landmark positions, with calculation of average deviation in centimeters.

Real-Time Processing Performance

- Completed Tests: We’ve measured frame processing rates on a typical hardware configuration (mid-range laptop).

- Planned Tests: Extended duration testing (20+ minute sessions) to verify performance stability and resource utilization over time.

- Analysis Method: Performance profiling of CPU/RAM usage during extended sessions to ensure long-term system stability.

DTW Algorithm Accuracy

- Completed Tests: Initial testing of our DTW implementation with annotated reference sequences.

- Planned Tests: Expanded testing with deliberately introduced temporal variations to verify robustness to timing differences.

- Analysis Method: Comparison of algorithm-identified errors against reference videos, with focus on false positive/negative rates.
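As an illustration of the false positive/negative analysis, the sketch below compares per-frame error flags from the algorithm against annotated flags. The per-frame boolean representation and the sample data are assumptions made for the example.

```python
def error_rates(predicted, actual):
    """False positive rate and false negative rate from per-frame boolean flags."""
    fp = sum(p and not a for p, a in zip(predicted, actual))
    fn = sum(a and not p for p, a in zip(predicted, actual))
    negatives = sum(not a for a in actual)
    positives = sum(bool(a) for a in actual)
    fpr = fp / negatives if negatives else 0.0
    fnr = fn / positives if positives else 0.0
    return fpr, fnr

# True means "this frame contains a mistake".
annotated = [False, False, True, True, False, True, False, False]
flagged = [False, True, True, False, False, True, False, False]

fpr, fnr = error_rates(flagged, annotated)
print(f"false positive rate: {fpr:.2f}, false negative rate: {fnr:.2f}")
```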

Unity Visualization Latency

- Completed Tests: End-to-end latency measurements from webcam capture to avatar movement display.

- Planned Tests: Additional testing to verify UDP packet delivery rates.

- Analysis Method: High-speed video capture of user movements compared with screen recordings of avatar responses, analyzed frame-by-frame.
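The frame-by-frame analysis reduces to simple arithmetic once the movement and the avatar response are located on a common timeline. The sketch below assumes both events are indexed in the same high-speed recording; the frame numbers and capture rate are made-up examples.

```python
def latency_ms(motion_frame, response_frame, capture_fps):
    """Latency implied by a frame offset within one recording at capture_fps."""
    return (response_frame - motion_frame) * 1000.0 / capture_fps

# Example: the user moves at frame 120 and the avatar follows at frame 141,
# in a recording captured at 240 frames per second.
measured = latency_ms(120, 141, 240)
print(f"{measured} ms")  # 21 frames at 240 fps is 87.5 ms
```

Note that the measurement resolution is one capture frame, so a faster camera gives a tighter bound on the result.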

Validation Testing

Setup and Usability Testing

- Planned Tests: Expanded testing with 30 additional participants representing our target demographic.

- Analysis Method: Observation and timing of first-time setup process, followed by survey assessment of perceived difficulty.

Feedback Comprehension Validation

- Planned Tests: Structured interviews with users after receiving system feedback, assessing their understanding of recommended improvements.

- Analysis Method: Scoring of users’ ability to correctly identify and implement suggested corrections, with target of 90% comprehension rate.

Team Status Report for 3/29

Risk Management:

Risk: Comparison algorithm not being able to handle depth data

Mitigation Strategy/Contingency plan: We plan to normalize the test and reference videos so that they both represent absolute coordinates, allowing us to use Euclidean distance for our comparison algorithms. If this does not work, we can fall back to ignoring the relative and unreliable depth data from the CV pipeline and relying purely on the xy coordinates, which should still provide good-quality feedback for casual dancers.
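One common way to put two poses into a shared coordinate frame is to center each on the hip midpoint and scale by torso length, with a flag to drop depth as the fallback. The sketch below illustrates that approach; it is not necessarily the normalization the team chose, and the landmark names and sample poses are assumptions.

```python
import math

def normalize_pose(pose, use_depth=True):
    """Center a pose on the hip midpoint and scale it by torso length."""
    dims = 3 if use_depth else 2
    pts = {name: p[:dims] for name, p in pose.items()}
    hip = tuple((a + b) / 2 for a, b in zip(pts["left_hip"], pts["right_hip"]))
    shoulder = tuple((a + b) / 2 for a, b in zip(pts["left_shoulder"], pts["right_shoulder"]))
    torso = math.dist(hip, shoulder)
    return {name: tuple((c - h) / torso for c, h in zip(p, hip)) for name, p in pts.items()}

def pose_distance(pose_a, pose_b, use_depth=True):
    """Mean Euclidean distance between matching landmarks after normalization."""
    a = normalize_pose(pose_a, use_depth)
    b = normalize_pose(pose_b, use_depth)
    return sum(math.dist(a[name], b[name]) for name in a) / len(a)

reference = {
    "left_hip": (0.4, 0.6, 0.0), "right_hip": (0.6, 0.6, 0.0),
    "left_shoulder": (0.4, 0.2, 0.0), "right_shoulder": (0.6, 0.2, 0.0),
    "left_wrist": (0.2, 0.4, 0.1),
}
# Same pose from a dancer who appears twice as large and shifted in the frame.
closer = {name: (2 * x + 1, 2 * y + 1, 2 * z) for name, (x, y, z) in reference.items()}
# Same pose, but with an unreliable depth estimate on the wrist.
bad_depth = dict(reference, left_wrist=(0.2, 0.4, 0.5))

print(pose_distance(reference, closer), pose_distance(reference, bad_depth, use_depth=False))
```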

Risk: Comparison algorithm not matching up frame by frame – continued risk

Mitigation Strategy/Contingency plan: We will attempt to change our algorithm so that it accounts for a constant delay between the user and the reference video. If correctly implemented, this will let users receive accurate feedback even if they do not start at precisely the same time as the reference video. If we are unable to implement this solution, we will incorporate warnings and counters so that users know when to start dancing and their footage lines up with the reference video.
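A simple way to account for a constant delay is to try each candidate offset and keep the one with the lowest average frame difference. The sketch below shows that idea on a 1-D joint signal; the brute-force search, the function name, and the synthetic data are assumptions, not the team's implementation.

```python
import math

def estimate_delay(user, reference, max_delay):
    """Return the frame offset in 0..max_delay that best aligns user to reference."""
    best_delay, best_cost = 0, float("inf")
    for delay in range(max_delay + 1):
        pairs = list(zip(user[delay:], reference))
        if not pairs:
            break  # the user sequence is shorter than this offset
        cost = sum(abs(u - r) for u, r in pairs) / len(pairs)
        if cost < best_cost:
            best_delay, best_cost = delay, cost
    return best_delay

# The user stands still for 7 frames, then performs the reference motion.
reference = [math.sin(i / 5) for i in range(60)]
user = [0.0] * 7 + reference

delay = estimate_delay(user, reference, max_delay=20)
print(delay)
```

Once the offset is known, the user's frames can be shifted by that amount before any frame-by-frame comparison runs.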

Design Changes:

There were no design changes this week. We have continued to execute our schedule.

Danny’s Status Report for 3/29

This week I focused on addressing the issue brought up at our most recent update meeting: the sophistication of our comparison algorithm. We ultimately decided that we would explore multiple ways to do time series comparisons in real time, and that I would explore a FastDTW implementation in particular.

Implementing the actual algorithm and adapting it to real time proved difficult at first, since DTW was originally designed for analyzing complete sequences. However, after some research and experimentation, I realized that we could adopt a sliding-window approach to DTW: I store a certain number of real-time frames in a buffer and map that buffer, as a sequence, onto the reference video.
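The buffer-based idea can be sketched with a subsequence variant of DTW, in which the buffered frames may match any contiguous stretch of the reference. This is an illustrative sketch under assumptions: the buffer length, the 1-D frame representation, and the function names are hypothetical, and the project's actual implementation may differ.

```python
from collections import deque

def subsequence_dtw(window, reference):
    """Best DTW cost of `window` against any contiguous part of `reference`.

    Returns (cost, end_index), where end_index is the reference frame at
    which the best match ends.
    """
    inf = float("inf")
    n, m = len(window), len(reference)
    prev = [0.0] * (m + 1)  # row 0 is free: the match may start anywhere
    for i in range(1, n + 1):
        curr = [inf] * (m + 1)
        for j in range(1, m + 1):
            d = abs(window[i - 1] - reference[j - 1])
            curr[j] = d + min(prev[j], curr[j - 1], prev[j - 1])
        prev = curr
    end = min(range(1, m + 1), key=prev.__getitem__)
    return prev[end], end - 1

BUFFER_FRAMES = 8  # hypothetical buffer length
buffer = deque(maxlen=BUFFER_FRAMES)

reference = [i * i / 100 for i in range(40)]
for frame in reference[5:18]:  # simulated live frames arriving one at a time
    buffer.append(frame)       # the deque discards the oldest frame when full

cost, end_index = subsequence_dtw(list(buffer), reference)
print(cost, end_index)
```

The returned end index tells the system where in the reference the dancer currently is, and the cost says how well the most recent frames match it.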

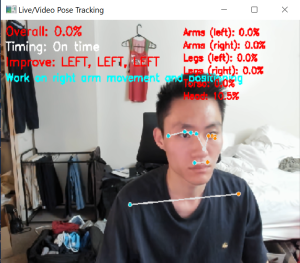

Then, since our feedback system in Unity has not been fully implemented yet, I chose to overlay some feedback metrics on the computer vision frames, which lets us easily digest the results from the algorithm and optimize it further.

Example of feedback overlaid on a CV frame:

Danny’s Status Report for 3/22

This week I was deeply involved in collaborative efforts with Rex and Akul to enhance and streamline our real-time rendering and feedback system. Our primary goal was to integrate various components smoothly, but we encountered several significant challenges along the way.

As we attempted to incorporate Akul’s comparison algorithm with the Procrustes analysis into Rex’s real-time pipeline, we discovered multiple compatibility issues. The most pressing problem involved inconsistent JSON formatting across our different modules, which prevented seamless data exchange and processing. These inconsistencies were causing failures at critical integration points and slowing down our development progress.

To address these issues, I developed a comprehensive Python reader class that standardizes how we access and interpret 3D landmark data. This new utility provides a consistent interface for extracting, parsing, and manipulating the spatial data that flows through our various subsystems. The reader class abstracts away the underlying format complexities, offering simple, intuitive methods that all team members can use regardless of which module they’re working on.
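A reader class along these lines might look like the sketch below. The JSON schema (a `frames` list whose entries hold named `landmarks` with `x`, `y`, `z` fields) and all method names are hypothetical, since the actual file format is not shown in this report.

```python
import json

class LandmarkReader:
    """Uniform access to 3D landmarks stored as JSON (hypothetical schema)."""

    def __init__(self, text):
        data = json.loads(text)
        # Convert each frame to a {landmark name: (x, y, z)} mapping once, up front.
        self._frames = [
            {lm["name"]: (lm["x"], lm["y"], lm["z"]) for lm in frame["landmarks"]}
            for frame in data["frames"]
        ]

    @classmethod
    def from_file(cls, path):
        with open(path, encoding="utf-8") as handle:
            return cls(handle.read())

    def __len__(self):
        return len(self._frames)

    def landmark(self, frame_index, name):
        """Position of one landmark in one frame."""
        return self._frames[frame_index][name]

    def trajectory(self, name):
        """All positions of one landmark across frames."""
        return [frame[name] for frame in self._frames]

sample = json.dumps({"frames": [
    {"landmarks": [{"name": "left_elbow", "x": 0.1, "y": 0.2, "z": 0.0}]},
    {"landmarks": [{"name": "left_elbow", "x": 0.2, "y": 0.2, "z": 0.1}]},
]})
reader = LandmarkReader(sample)
print(len(reader), reader.trajectory("left_elbow"))
```

Keeping the parsing in one place means a format change only has to be handled inside the reader, not in every module that consumes landmarks.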

This standardization effort has significantly improved our cross-module compatibility, making it much easier for our individual components to communicate effectively. The shared data access pattern has eliminated many of the integration errors we were experiencing and reduced the time spent debugging format-related issues.

Additionally, I worked closely with Akul to troubleshoot various problems he encountered while trying to adapt his comparison algorithm for real-time operation. This involved identifying bottlenecks in the video processing pipeline, diagnosing frame synchronization issues, and helping optimize certain computational steps to maintain acceptable performance under real-time constraints.

By the end of the week, we made substantial progress toward a more unified system architecture with better interoperability between our specialized components. The standardized data access approach has set us up for more efficient collaboration and faster integration of future features.

Team Status Report for 3/15

Risk Management:

Risk: Comparison algorithm not matching up frame by frame

Mitigation Strategy/Contingency plan: We will attempt to change our algorithm so that it accounts for a constant delay between the user and the reference video. If correctly implemented, this will let users receive accurate feedback even if they do not start at precisely the same time as the reference video. If we are unable to implement this solution, we will incorporate warnings and counters so that users know when to start dancing and their footage lines up with the reference video.

Risk: Color based feedback not meeting user expectations – continued risk

Mitigation Strategy/Contingency plan: We plan to break down our Unity mesh into multiple parts to improve the visual appeal of the feedback coloring, so that users can more immediately understand what they need to do to correct a mistake. We also plan to incorporate a comprehensive user guide to help with the same purpose.

Design Changes:

There were no design changes this week. We have continued to execute our schedule.